Created on 03-03-2017 07:17 PM - edited 08-17-2019 01:58 PM

Working With S3 Compatible Data Stores (and handling single source failure)

With the major outage of S3 in my region, I decided I needed an alternative file store. I found a great open source server called Minio, which I run on a mini PC running CentOS 7. The same approach works for connecting to other S3-compatible stores such as Riak CS and Google Cloud Storage. I like to remain cloud and location neutral.

In Apache NiFi, this is really easy. Instead of a single AWS S3 source and destination, you can have two of each: one for AWS S3 and one for another S3-compatible store. Or you can use the second store as a disaster recovery backup. Since my Minio box is local, I can store data locally. It's pretty affordable to connect a few terabytes to a small Linux box to hold some backups. With Apache NiFi, queues buffer the data when ingest or egress is slower.
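
The idea of sending the same data to two stores, so that one outage does not block the other, can be sketched outside NiFi as well. The following is a minimal Python illustration, not how NiFi implements it: the `Writer` signature, the `tee_to_stores` helper, and the two stand-in writer functions are all hypothetical, and a real version would call an actual S3 client instead.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical writer signature: takes an object key and its bytes, raises on failure.
Writer = Callable[[str, bytes], None]


def tee_to_stores(key: str, data: bytes, stores: Dict[str, Writer]) -> Tuple[List[str], List[str]]:
    """Send one payload to every store; return (succeeded, failed) store names."""
    succeeded: List[str] = []
    failed: List[str] = []
    for name, write in stores.items():
        try:
            write(key, data)
            succeeded.append(name)
        except Exception:
            # One store being down should not stop delivery to the others.
            failed.append(name)
    return succeeded, failed


# Stand-ins for real clients: an in-memory "Minio" and an "AWS S3" that is down.
local_store: Dict[str, bytes] = {}


def write_local(key: str, data: bytes) -> None:
    local_store[key] = data


def write_remote(key: str, data: bytes) -> None:
    raise ConnectionError("simulated regional outage")


ok, down = tee_to_stores(
    "tweet-1.json",
    b'{"msg": "hello"}',
    {"minio": write_local, "aws_s3": write_remote},
)
print("delivered to:", ok, "failed:", down)
```

In NiFi itself, the equivalent is simply routing the same relationship to two PutS3Object processors, with the queues absorbing retries for the slower or unavailable store.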

Minio Setup

    wget https://dl.minio.io/server/minio/release/linux-amd64/minio
    chmod 755 minio
    nohup ./minio server files &

Download the build that matches your hardware and OS. On startup, the server reports its endpoint (use this as the endpoint URL in NiFi), access key, secret key, and region. Enter this information in Apache NiFi and in any S3-compatible tool such as the AWS CLI or S3cmd.

S3 Tool Install

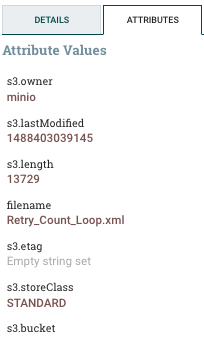

    pip install awscli
    aws configure
    AWS Access Key ID [****************3P2F]: 45454545zfgfgfgfgfgzgggzggggFFF
    AWS Secret Access Key [****************Y3TG]: FFFDFDFDFDF7d8f7d87f8&D*F7d*&F78
    Default region name [us-east-1]:
    Default output format [None]:
    aws configure set default.s3.signature_version s3v4
    aws --endpoint-url http://192.168.1.155:9000 s3 ls s3://nifi
    2017-03-01 16:17:19      13729 Retry_Count_Loop.xml
    2017-03-01 16:19:58      19929 tspann7.jpg
    aws --endpoint-url http://192.168.1.155:9000 s3 ls
    2017-03-01 11:19:58 nifi

These are just for testing connectivity.
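
If you want to script the connectivity check, the object lines printed by `aws s3 ls s3://bucket` can be parsed into structured records. This is a small sketch that assumes the four-column layout shown in the listing (date, time, size, key); the `S3Entry` type and `parse_ls_line` helper are names made up for illustration, and prefix (`PRE`) lines are not handled.

```python
from datetime import datetime
from typing import NamedTuple


class S3Entry(NamedTuple):
    modified: datetime
    size: int
    key: str


def parse_ls_line(line: str) -> S3Entry:
    """Parse one object line of the form '<date> <time> <size> <key>'."""
    # maxsplit=3 keeps any spaces inside the key intact.
    date, time, size, key = line.split(None, 3)
    modified = datetime.strptime(f"{date} {time}", "%Y-%m-%d %H:%M:%S")
    return S3Entry(modified, int(size), key)


# Sample text copied from the bucket listing in this article.
listing = """\
2017-03-01 16:17:19      13729 Retry_Count_Loop.xml
2017-03-01 16:19:58      19929 tspann7.jpg
"""

entries = [parse_ls_line(line) for line in listing.splitlines() if line.strip()]
for entry in entries:
    print(entry.key, entry.size, entry.modified.isoformat())
```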

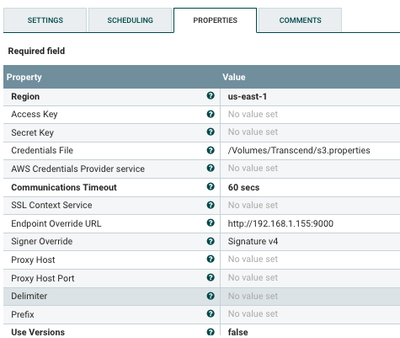

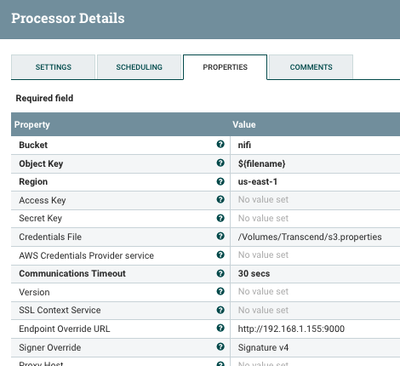

NiFi Setup

Flow 1:

- GetTwitter: ingest Twitter data with keywords: AWS Outage, ...

- EvaluateJsonPath: parse the main Twitter fields out of the JSON.

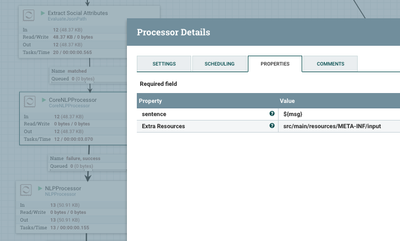

- CoreNLPProcessor: my custom processor that runs Stanford CoreNLP sentiment analysis on the message.

- NLPProcessor: my custom processor that runs Apache OpenNLP name and location entity resolution on the message.

- AttributesToJSON: convert all the attributes, including the output from the two custom processors, into one unified JSON file.

- PutS3Object: store to my S3-compatible data store. Here you can tee the data from AttributesToJSON to a number of different S3 stores, including Amazon S3.
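
To make the "one unified JSON file" step concrete, here is a rough Python sketch of what flattening a set of attributes into a single JSON object looks like. The attribute names and values below are invented for illustration; the real ones depend on how EvaluateJsonPath and the two custom processors are configured.

```python
import json
from typing import Dict

# Hypothetical flow file attributes: Twitter fields plus output from the NLP processors.
attributes: Dict[str, str] = {
    "twitter.msg": "S3 is down again",
    "twitter.user": "example_user",
    "sentiment": "negative",
    "names": "Amazon",
    "locations": "Virginia",
}


def attributes_to_json(attrs: Dict[str, str]) -> str:
    """Serialize all attributes as one flat JSON object with string values."""
    return json.dumps({name: str(value) for name, value in attrs.items()}, sort_keys=True)


payload = attributes_to_json(attributes)
print(payload)
```

The resulting string is what would be written to each S3-compatible store as one object per tweet.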

Flow 2:

- ListS3: list all the files in the S3-compatible data store. This is where you can add additional sources to ingest. You can have Amazon S3, Google Cloud Storage, Riak CS, Minio, and others.

- FetchS3Object: get the actual file from S3.

- PutFile: store the file locally.
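
The list, fetch, and store pattern of Flow 2 can be sketched in a few lines of Python. This is only an illustration of the pattern under simplifying assumptions: the bucket is an in-memory dictionary standing in for an S3-compatible store, and `list_keys`, `fetch`, and `put_file` are hypothetical helpers, not NiFi or S3 client APIs.

```python
import tempfile
from pathlib import Path
from typing import Dict, List

# In-memory stand-in for a bucket; a real flow would call an S3 client here.
bucket: Dict[str, bytes] = {
    "Retry_Count_Loop.xml": b"<template/>",
    "tspann7.jpg": b"\xff\xd8\xff",
}


def list_keys(store: Dict[str, bytes]) -> List[str]:
    """ListS3 step: enumerate the object keys."""
    return sorted(store)


def fetch(store: Dict[str, bytes], key: str) -> bytes:
    """FetchS3Object step: read the content of one object."""
    return store[key]


def put_file(directory: Path, key: str, data: bytes) -> Path:
    """PutFile step: write the content under a local directory."""
    target = directory / key
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(data)
    return target


out_dir = Path(tempfile.mkdtemp())
written = [put_file(out_dir, key, fetch(bucket, key)) for key in list_keys(bucket)]
print([path.name for path in written])
```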

S3.properties file

    # Setup endpoint
    host_base = 192.168.1.155:9000
    host_bucket = 192.168.1.155:9000
    bucket_location = us-east-1
    use_https = True

    # Setup access keys
    access_key = DF&D*F&*D&F*&DF&DFDF
    secret_key = &d7df7f77DDFdjfiqeworsdfFDr34fd
    accessKey = DF&D*F&*D&F*&DF&DFDF
    secretKey = &d7df7f77DDFdjfiqeworsdfFDr34fd

    # Enable S3 v4 signature APIs
    signature_v2 = False
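
If you need the same settings in a script, a `key = value` file like this is easy to read with plain Python. The sketch below assumes one setting per line with `#` comments; `parse_properties` is a hypothetical helper, the credentials are placeholders, and deriving the endpoint URL from `use_https` and `host_base` is my assumption about how a client might combine them.

```python
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


sample = """\
# Setup endpoint
host_base = 192.168.1.155:9000
bucket_location = us-east-1
use_https = True
# Placeholder credentials, not real keys
access_key = EXAMPLEACCESSKEY
secret_key = EXAMPLESECRETKEY
"""

cfg = parse_properties(sample)
scheme = "https" if cfg["use_https"].lower() == "true" else "http"
endpoint = f"{scheme}://{cfg['host_base']}"
print(endpoint)
```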

After sending Twitter JSON files to S3.

References:

- https://github.com/minio/minio

- https://www.minio.io/

- https://dzone.com/articles/aftermath-of-the-aws-s3-outagean-interview-with-ni

- https://aws.amazon.com/message/41926/

- https://cloud.google.com/storage/docs/interoperability

- https://docs.minio.io/docs/aws-cli-with-minio

- https://aws.amazon.com/cli/

- http://s3tools.org/s3cmd

- https://github.com/minio/minio-java

Created on 05-10-2017 10:36 PM

Hi @Timothy Spann,

I am trying to store files to Minio using the PutS3Object processor, but I get this error:

to Amazon S3 due to com.amazonaws.AmazonClientException: Unable to reset stream after calculating AWS4 signature: com.amazonaws.AmazonClientException: Unable to reset stream after calculating AWS4 signature

Is it because of the region setting? My Minio instance is hosted in the east coast lab, but I am trying to access it via NiFi from the west coast. I tried setting the region to us-west-1, us-west-2, and us-east-1, but I get the same error. Can you provide any insight?

Created on 05-12-2017 06:30 PM

Those region names are specific to Amazon S3, so the region setting should probably just match the one your Minio server reports. Minio runs on a single machine and is not part of the AWS infrastructure.

Created on 05-16-2017 02:45 AM

Alright. What do you think would cause that error?

Created on 05-30-2017 01:42 PM

What are your settings for Minio? You must be running a Minio server and have permissions to it.

You need to set your access and secret keys, host base, and host bucket.