Minimum number of nodes to add in a multi-node cluster

Created on 10-11-2016 02:35 AM - edited 09-16-2022 03:43 AM


I have

1. Hive

2. Pig

3. Zookeeper

4. HDFS

5. Hue

6. Oozie

7. Sqoop

8. YARN

9. Sentry

Currently, all of these are deployed on the same host. Now, I would like to add more hosts to it.

But I have a few doubts:

In production,

1. A node means a physical server, right? No VMs?

2. How many servers would I need to add to have a healthy cluster?

3. Which of the above mentioned services should be co-located?

4. What should be the distribution like?

Pig is used relatively rarely, but Sqoop, Hive, Oozie, and Hue are used most of the time, and of course Sentry for the authorization part.

What should the distribution look like? Which of these services should be moved to new hosts? Which of these should be co-located?

Which of these should have an entirely dedicated server? I am new to this and would appreciate it if you could give the specifications for establishing a multi-node cluster.

Created on 10-12-2016 11:17 AM - edited 10-12-2016 11:17 AM


Howdy,

I'm just going to jump in and give you as much info as possible, so strap in. There's going to be a lot of (hopefully helpful) info.

Before I get started, and I state this toward the end too, it’s important to know that all of this info is general “big picture” stuff, and there are a ton of factors that go into speccing your cluster (use cases, future scaling considerations, etc). I cannot stress this enough. That being said, let’s dig in.

I'm going to answer your questions in order.

1. In short, yes. We generally recommend bare metal (“node” = physical box) for production clusters. You can get away with using VMs on a hypervisor for development clusters or POCs, but that’s not recommended for production clusters. If you don’t have the resources for a bare metal cluster, it’s generally a better idea to deploy it in the cloud.

For cloud-based clusters, I recommend Cloudera Director, which allows you to deploy cloud-based clusters that are configured with Hadoop performance in mind.

2. It's not simply a question of how many nodes, but what the specs of each node are. We have some good documentation here that explains best practices for speccing your cluster hardware.

The number of nodes depends on what your workload will be like. This includes how much data you'll be ingesting, how often you'll be ingesting it, and how much you plan on processing said data. That being said, Cloudera Manager makes it super easy to scale out as your workload grows. I would say the bare minimum is 5 nodes (2 masters, 3 workers). You can always scale out from there by adding additional worker and master nodes.
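To make the sizing reasoning a bit more concrete, here's a rough back-of-the-envelope sketch in Python. It only combines the two figures mentioned in this thread (the 2 masters + 3 workers minimum and the default HDFS replication factor of three). The function names, the `usable_tb_per_worker` input, and the 70% fill ceiling are assumptions made up for illustration, not Cloudera guidance.

```python
import math

HDFS_DEFAULT_REPLICATION = 3  # default HDFS replication factor
MIN_MASTERS = 2               # bare-minimum figures from this answer
MIN_WORKERS = 3


def estimate_workers(raw_data_tb, usable_tb_per_worker,
                     max_fill=0.7, replication=HDFS_DEFAULT_REPLICATION):
    """Rough worker count needed to hold raw_data_tb of data in HDFS.

    usable_tb_per_worker and max_fill are hypothetical planning inputs:
    disk available to HDFS per worker, and how full you let it get.
    """
    if raw_data_tb < 0 or usable_tb_per_worker <= 0 or not 0 < max_fill <= 1:
        raise ValueError("sizing inputs out of range")
    stored_tb = raw_data_tb * replication  # every block is stored `replication` times
    needed = math.ceil(stored_tb / (usable_tb_per_worker * max_fill))
    return max(MIN_WORKERS, needed)        # never drop below the 3-worker floor


def minimum_cluster_size(raw_data_tb, usable_tb_per_worker):
    """Total node count: the 2-master minimum plus the estimated workers."""
    return MIN_MASTERS + estimate_workers(raw_data_tb, usable_tb_per_worker)


if __name__ == "__main__":
    print(minimum_cluster_size(1, 20))    # small data set: the 5-node floor applies
    print(minimum_cluster_size(100, 20))  # larger data set: storage drives the count
```

This only looks at storage. Ingest rate, processing load, and memory per node matter just as much, so treat the result as a starting point rather than a spec.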

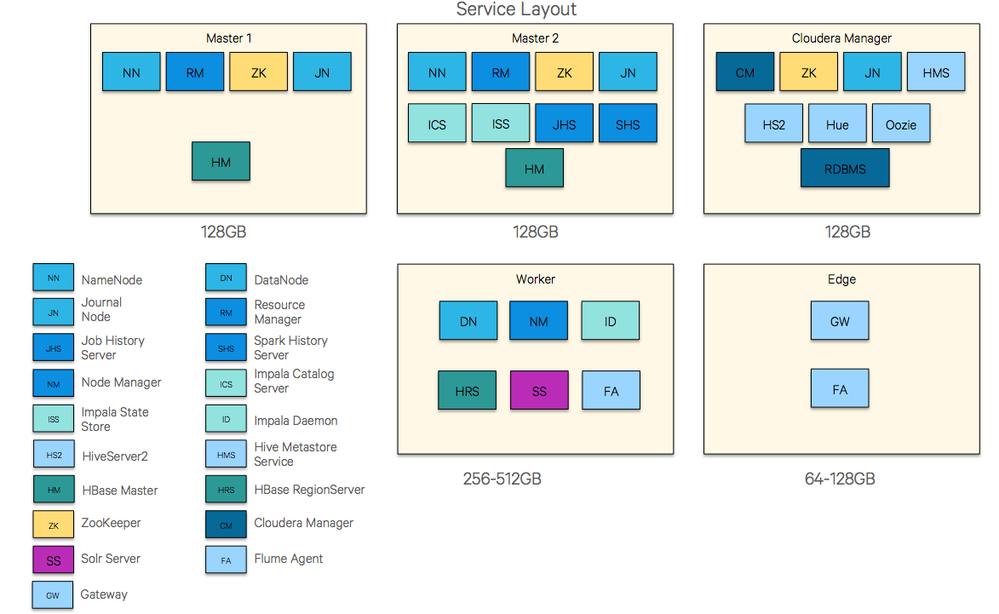

3 and 4. These can be answered with this nifty diagram (memory recommendations are RAM):

This comes from our article on how to deploy clusters like a boss, which covers quite a bit. Additional info on the graphic can be found toward the bottom of the article.

If you look at the diagram, you'll notice a few things:

- The concept of master nodes, worker nodes, and edge nodes. Master nodes have master services like the NameNode, ResourceManager, ZooKeeper, JournalNodes, etc. If the service keeps track of tasks, marks changes, or has the term "manager" in it, you usually want it on a master node. You can put a good number of these services on a single node because they don't do too much heavy lifting.

- The placement of DB-dependent services. Note that Cloudera Manager, Hue, and all servers that reference a metastore are installed on the master node that has an RDBMS installed. You don't have to set it up this way, but it does make logical sense, and is a little more tidy. You will have to consider adding a dedicated RDBMS server eventually, because having it installed on a master node with other servers can easily cause a bottleneck when you’ve scaled enough.

- The worker node(s). This diagram only has one worker node, but it’s important to know that you should have at least three worker nodes for your cluster to function properly, as the default replication factor for HDFS is three. From there, you can add as many worker nodes as your workload dictates. At its base, you don't need many services on a worker node, but what you do need is a lot more memory, because these nodes are where data is stored in HDFS and where the heavy processing will be done.

- The edge node. It's specced similarly to, or even lower than, the master nodes, and is only really home to gateways and other services that communicate with the outside world. You could add these services to another master node, but it's nice to have one dedicated, especially if you plan on having folks access the cluster externally.
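If it helps to see the points above as data, here's a small Python sketch of one possible layout, plus a sanity check. Everything in it is illustrative: the host names, the exact role lists, and the `check_layout` helper are assumptions based on the description in this post, not a copy of the diagram or anything Cloudera Manager produces. Putting DataNode and NodeManager on the workers is my assumption of the usual worker roles.

```python
# Hypothetical layout: host names and role lists are illustrative only.
LAYOUT = {
    "master1": {"type": "master",
                "roles": ["NameNode", "ResourceManager", "ZooKeeper", "JournalNode"]},
    "master2": {"type": "master",
                "roles": ["Cloudera Manager", "Hue", "Hive Metastore", "Oozie", "RDBMS"]},
    "worker1": {"type": "worker", "roles": ["DataNode", "NodeManager"]},
    "worker2": {"type": "worker", "roles": ["DataNode", "NodeManager"]},
    "worker3": {"type": "worker", "roles": ["DataNode", "NodeManager"]},
    "edge1":   {"type": "edge", "roles": ["Gateway"]},
}

# Services that track state or coordinate work, which belong on master nodes.
MASTER_ROLES = {"NameNode", "ResourceManager", "ZooKeeper", "JournalNode"}


def check_layout(layout, replication=3):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    workers = [host for host, node in layout.items() if node["type"] == "worker"]
    if len(workers) < replication:
        problems.append(
            f"only {len(workers)} worker(s); need at least {replication} "
            "to satisfy the HDFS replication factor"
        )
    for host, node in layout.items():
        for role in node["roles"]:
            if role in MASTER_ROLES and node["type"] != "master":
                problems.append(f"{role} on {host} should live on a master node")
    return problems


if __name__ == "__main__":
    for problem in check_layout(LAYOUT) or ["layout looks sane"]:
        print(problem)
```

Keeping the layout in a plain dictionary makes it easy to tweak a host or a role and rerun the check before you touch the real cluster.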

The article also has some good info on where to go with these services as you scale your cluster out further.

One more note. If this is a Proof of Concept cluster, I recommend saving Sentry for when you put the cluster into production. When you do add it, note that it’s a service that uses an RDBMS.

Some parting thoughts:

When you're planning a cluster, it's important to stop and evaluate exactly what your goal is for said cluster. My recommendation is to start only with the services you need to get the job done. You can always add and activate services later through Cloudera Manager. If you need any info on a particular service and whether or not you really need it, check out the links below to our official documentation:

And for that matter, you can search through our documentation here.

While this info helps with general ideas and “big picture” topics, you need to consider a lot more info about your planned usage and vision to come up with an optimal setup. Use cases are vitally important to consider when speccing a cluster, especially for production. That being said, you’re more than welcome to get in touch with one of our solutions architects to figure out the best configuration for your cluster. Here’s a link to some more info on that.

This is a lot of info, so feel free to take your time digesting it all. Let me know if you have any questions. 🙂

Cheers
