Support Questions


ACLs are enabled and applied but not working

Created 01-08-2018 05:11 AM


Dear all,

I have enabled ACLs in the Ambari console and restarted the required services, and I am able to set permissions for a specific group as well. However, when the group's users try to access the data, it does not work. I need your suggestions. My HDP version is 2.4 and my Hadoop version is 2.7.

The getfacl output for the folder and the file is:

```
$ hdfs dfs -getfacl -R /abc/month=12/
# file: /abc/month=12
# owner: abiuser
# group: dfsusers
user::rwx
group::r-x
group:data_team:r--
mask::r-x
other::---
default:user::rwx
default:group::r-x
default:group:data_team:r-x
default:mask::r-x
default:other::---

# file: /abc/month=12/file1.bcsf
# owner: abiuser
# group: dfsusers
user::rwx
group::r--
group:data_team:r--
mask::r--
other::---
```
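The listing for `/abc/month=12` mixes two kinds of entries: access entries, which HDFS evaluates on every request, and `default:` entries, which only seed the ACL of files and subdirectories created later and do not affect the directory itself. A minimal Python sketch that splits `getfacl` text along that line makes the distinction visible; the helpers `parse_getfacl` and `access_entries` are hypothetical illustrations, not part of any Hadoop API, and assume the plain text format shown above.

```python
from typing import Dict, List, Optional, Tuple

# One ACL entry: (scope, type, name, perms),
# e.g. ("access", "group", "data_team", "r--").
Entry = Tuple[str, str, str, str]


def parse_getfacl(text: str) -> Dict[str, List[Entry]]:
    """Parse `hdfs dfs -getfacl -R` style text into ACL entries per path."""
    result: Dict[str, List[Entry]] = {}
    current: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# file:"):
            # A '# file:' header starts a new group of entries.
            current = line[len("# file:"):].strip()
            result[current] = []
            continue
        # Skip blanks, '# owner:'/'# group:' headers and text before a path.
        if not line or line.startswith("#") or current is None:
            continue
        # Drop a trailing comment such as '#effective:r--'.
        line = line.split("#", 1)[0].strip()
        scope = "access"
        if line.startswith("default:"):
            scope = "default"
            line = line[len("default:"):]
        kind, name, perms = line.split(":")
        result[current].append((scope, kind, name, perms))
    return result


def access_entries(entries: List[Entry]) -> List[Entry]:
    """Entries HDFS checks on a request; default ones only seed new children."""
    return [e for e in entries if e[0] == "access"]
```

Fed the `/abc/month=12` block above, the only `data_team` access entry is `r--`; the `r-x` grant for `data_team` appears only with scope `default`.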

Users A and B are members of data_team. When they try to read the file, they get the error below:

```
$ hadoop fs -ls /abc/month=12
ls: Permission denied: user=A, access=EXECUTE, inode="/abc/month=12":abiuser:dfsusers:drwxrwx---
```
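The error is a request for EXECUTE on the directory `/abc/month=12` itself. The Python sketch below follows the access-check order described in the HDFS Permissions Guide (owner entry, then named user, then owning group and named groups filtered by the mask, then `other`); the `check_access` helper is hypothetical, and the superuser bypass and `dfs.permissions.enabled=false` are not modeled. Assuming user A belongs to `data_team` but not to `dfsusers`, the entry `group:data_team:r--` filtered by `mask::r-x` still yields `r--`, which grants READ but not EXECUTE. By the same rules, a directory with only `user::rwx`, `group::r-x`, `other::r-x` (as in the reproduction later in this thread) would grant EXECUTE to such a user through `other`, which may be one difference between the two setups.

```python
from typing import Dict, Set

_BITS = {"r": 4, "w": 2, "x": 1}


def to_bits(perms: str) -> int:
    """Convert an 'r-x' style string to a bitmask (r=4, w=2, x=1)."""
    return sum(_BITS[c] for c in perms if c in _BITS)


def check_access(user: str, groups: Set[str], owner: str, owning_group: str,
                 acl: Dict[str, str], wanted: str) -> bool:
    """Evaluate access entries in the documented HDFS order (sketch).

    `acl` maps keys like 'user:', 'user:bob', 'group:', 'group:data_team',
    'mask:' and 'other:' to 'rwx'-style strings (access entries only).
    `wanted` is the requested action, e.g. 'x' for EXECUTE.
    """
    need = to_bits(wanted)
    # Without a mask entry (minimal ACL) nothing is filtered.
    mask = to_bits(acl.get("mask:", "rwx"))

    def grants(perms: str, masked: bool) -> bool:
        bits = to_bits(perms) & mask if masked else to_bits(perms)
        return (bits & need) == need

    # 1. Owner: the owner entry decides, unmasked.
    if user == owner:
        return grants(acl["user:"], masked=False)
    # 2. A matching named user entry decides, filtered by the mask.
    if "user:" + user in acl:
        return grants(acl["user:" + user], masked=True)
    # 3. Owning group and named groups: any matching entry that grants
    #    access after the mask is enough; a match that grants nothing denies.
    keys = ["group:"] if owning_group in groups else []
    keys += ["group:" + g for g in sorted(groups) if "group:" + g in acl]
    if keys:
        return any(grants(acl[k], masked=True) for k in keys)
    # 4. No owner, user or group match: fall back to 'other', unmasked.
    return grants(acl["other:"], masked=False)
```

For example, with `dir_acl = {"user:": "rwx", "group:": "r-x", "group:data_team": "r--", "mask:": "r-x", "other:": "---"}`, the call `check_access("A", {"data_team"}, "abiuser", "dfsusers", dir_acl, "x")` returns `False`, while the same call with `"r"` returns `True`.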

Any suggestions or help would be appreciated.

Thank you

Created 01-11-2018 04:00 PM


I have successfully reproduced what you requested: "Could you create a different file with abiuser as owner and dfsusers as group and add an ACL for the group data_team with just read permission?"

Created the file acltest2.txt as user abiuser; see its contents:

```
[root@nakuru ~]# su - abiuser
[abiuser@nakuru ~]$ vi acltest2.txt
Could you create different file with abiuser as owner and dfsusers as group and add ACL for the group data_team with just read permission? Thank you.
```

Checked the file:

```
[abiuser@nakuru ~]$ ls -al
-rw-r--r-- 1 abiuser dfsusers 151 Jan 11 13:00 acltest2.txt
```

Copied the file to HDFS:

```
[abiuser@nakuru ~]$ hdfs dfs -put acltest2.txt /abc/month=12
```

Confirmed the file is in HDFS (note the user and group):

```
[abiuser@nakuru ~]$ hdfs dfs -ls /abc/month=12
Found 2 items
-rw-r--r-- 3 abiuser dfsusers 151 2018-01-11 13:00 /abc/month=12/acltest2.txt
-rw-r--r-- 3 abiuser dfsusers 249 2018-01-11 12:38 /abc/month=12/file1.txt
```

Set a read-only ACL for the group data_team, to which usera and userb belong:

```
[abiuser@nakuru ~]$ hdfs dfs -setfacl -m group:data_team:r-- /abc/month=12/acltest2.txt
```
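When `setfacl -m` adds a named entry and the spec contains no explicit `mask::` entry, HDFS recalculates the mask as the union of the group-class entries (owning group, named users and named groups); this is consistent with the `mask::r--` that `getfacl` reports for acltest2.txt in this thread. A minimal Python sketch of that merge, using hypothetical helpers `union`, `is_group_class` and `modify_acl`; it models access entries only and ignores default entries and the `--set`/`-x` modes.

```python
from typing import Dict


def union(a: str, b: str) -> str:
    """Position-wise OR of two 'rwx'-style strings, e.g. 'r--' | '--x' -> 'r-x'."""
    return "".join(p if p != "-" else q for p, q in zip(a, b))


def is_group_class(key: str) -> bool:
    """Owning group, named groups and named users are filtered by the mask."""
    kind, _, name = key.partition(":")
    return kind == "group" or (kind == "user" and name != "")


def modify_acl(acl: Dict[str, str], key: str, perms: str) -> Dict[str, str]:
    """Sketch of `setfacl -m <key>:<perms>` without an explicit mask entry.

    Keys look like 'user:', 'user:bob', 'group:', 'group:data_team', 'other:'.
    Returns a new dict; the input ACL is left unchanged.
    """
    new = dict(acl)
    new[key] = perms
    if is_group_class(key):
        # A mask is only needed once named user/group entries exist.
        has_named = any(is_group_class(k) and k != "group:" for k in new)
        if has_named:
            mask = "---"
            for k, v in new.items():
                if is_group_class(k):
                    mask = union(mask, v)
            new["mask:"] = mask
    return new
```

Starting from the plain `-rw-r--r--` file, `modify_acl({"user:": "rw-", "group:": "r--", "other:": "r--"}, "group:data_team", "r--")` yields a mask of `r--`; widening the named entry to `r-x` would widen the mask to `r-x` as well.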

Switched to usera:

```
[root@nakuru ~]# su - usera
```

Read the file successfully as usera:

```
[usera@nakuru ~]$ hdfs dfs -cat /abc/month=12/acltest2.txt
Could you create different file with abiuser as owner and dfsusers as group and add ACL for the group data_team with just read permission? Thank you.
```

Now let's check the ACLs:

```
[usera@nakuru ~]$ hdfs dfs -getfacl -R /abc/month=12/
# file: /abc/month=12
# owner: abiuser
# group: dfsusers
user::rwx
group::r-x
other::r-x

# file: /abc/month=12/acltest2.txt
# owner: abiuser
# group: dfsusers
user::rw-
group::r--
group:data_team:r--
group:dfsusers:r--
mask::r--
other::r--

# file: /abc/month=12/file1.txt
# owner: abiuser
# group: dfsusers
user::rw-
group::r--
other::r--
```

I hope that answers your question. Where did you encounter the problem? Is there a step you missed?

Please accept the answer and close this thread.

Created 01-15-2018 08:21 PM


I am sure that if it were a bug, Hortonworks would have notified its customers. Having said that, it might sound trivial, but try going over your code again. Personally, I don't see the issue with HDP 2.4. If I may ask, why haven't you upgraded?

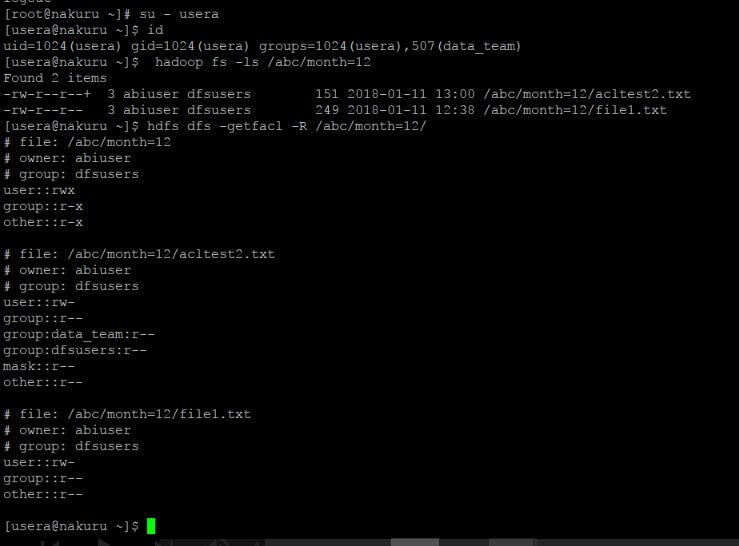

```
[root@nakuru ~]# su - usera
[usera@nakuru ~]$ id
uid=1024(usera) gid=1024(usera) groups=1024(usera),507(data_team)
[usera@nakuru ~]$ hadoop fs -ls /abc/month=12
Found 2 items
-rw-r--r--+ 3 abiuser dfsusers 151 2018-01-11 13:00 /abc/month=12/acltest2.txt
-rw-r--r-- 3 abiuser dfsusers 249 2018-01-11 12:38 /abc/month=12/file1.txt
[usera@nakuru ~]$ hdfs dfs -getfacl -R /abc/month=12/
# file: /abc/month=12
# owner: abiuser
# group: dfsusers
user::rwx
group::r-x
other::r-x

# file: /abc/month=12/acltest2.txt
# owner: abiuser
# group: dfsusers
user::rw-
group::r--
group:data_team:r--
group:dfsusers:r--
mask::r--
other::r--

# file: /abc/month=12/file1.txt
# owner: abiuser
# group: dfsusers
user::rw-
group::r--
other::r--
[usera@nakuru ~]$
```
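The `id` output confirms that usera is in data_team on the client host, but HDFS does not use the client's group list: with the default Unix-based group mapping, the NameNode resolves a user's groups on its own host, and `hdfs groups usera` prints the groups the NameNode sees. A small Python sketch for pulling group names out of `id` output so they can be compared with the NameNode's view; the `groups_from_id` helper is hypothetical and assumes the standard `groups=GID(name),...` format shown above.

```python
import re
from typing import Set

# Matches one 'GID(name)' item inside the groups= field.
_GROUP_ITEM = re.compile(r"\d+\(([^)]+)\)")


def groups_from_id(output: str) -> Set[str]:
    """Extract group names from the 'groups=' field of `id` output."""
    match = re.search(r"groups=(\S+)", output)
    if not match:
        return set()
    return set(_GROUP_ITEM.findall(match.group(1)))
```

For the session above, `groups_from_id("uid=1024(usera) gid=1024(usera) groups=1024(usera),507(data_team)")` returns `{"usera", "data_team"}`; a set difference against the names listed by `hdfs groups usera` would show any group the NameNode does not know about.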

Since I reproduced your use case and provided the solution, I think it's better that you accept the answer and close the thread. The Hortonworks demo "HDFS ACLs: Fine-Grained Permissions for HDFS Files in Hadoop" was delivered using HDP 2.4. When I find myself in such a situation, I ask a friend to cross-check my code; you might have forgotten something.

Cheers !

Created 01-16-2018 02:24 AM


Thanks, I will close the thread. Yes, we have verified the steps multiple times and still end up with that error. We have not subscribed to even Hortonworks basic support, and because of this risk we have not upgraded: if we got stuck with some issue, there would be no one to help. The client is aware of this.

Created 01-16-2018 07:59 AM


Okay, cheers. I will try to build HDP 2.4 on a VM. What is your Ambari version? I hate to leave unfinished work.

I will update you.

Created 01-16-2018 09:29 AM


I think HDP 2.4 is no longer downloadable from the Hortonworks site? We will be setting up a new environment in which we will install the latest version, and only the latest one seems to be downloadable. Maybe there is some other link for 2.4. I also think it might be a bug in this version; I have found no hint about this error apart from the regular steps you provided. Below are the details you asked for.

Ambari Server

```
$ rpm -qa | grep -i ambari
ambari-server-2.2.1.0-161.x86_64

$ rpm -qa | grep -i hadoop
hadoop_2_4_0_0_169-mapreduce-2.7.1.2.4.0.0-169.el6.x86_64
hadoop_2_4_0_0_169-yarn-2.7.1.2.4.0.0-169.el6.x86_64
hadoop_2_4_0_0_169-libhdfs-2.7.1.2.4.0.0-169.el6.x86_64
hadoop_2_4_0_0_169-2.7.1.2.4.0.0-169.el6.x86_64
hadoop_2_4_0_0_169-hdfs-2.7.1.2.4.0.0-169.el6.x86_64

$ rpm -qa | grep -i ambari
ambari-metrics-monitor-2.2.1.0-161.x86_64
ambari-metrics-collector-2.2.1.0-161.x86_64
ambari-agent-2.2.1.0-161.x86_64
ambari-metrics-hadoop-sink-2.2.1.0-161.x86_64

$ rpm -qa | grep -i hdp
hdp-select-2.4.0.0-169.el6.noarch
```
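The HDP Hadoop packages encode two versions in one string: in `2.7.1.2.4.0.0-169`, the first three components are the Apache Hadoop release (2.7.1) and the remainder is the HDP stack build (2.4.0.0-169), which matches the "HDP 2.4 and Hadoop 2.7" stated in the question. A minimal Python sketch that splits `rpm -qa` lines accordingly; the `parse_hdp_rpm` helper and `HdpPackage` type are hypothetical and assume exactly this naming pattern.

```python
import re
from typing import NamedTuple, Optional


class HdpPackage(NamedTuple):
    name: str            # e.g. 'hadoop_2_4_0_0_169-hdfs'
    apache_version: str  # e.g. '2.7.1'
    hdp_version: str     # e.g. '2.4.0.0-169'


# <name>-<apache x.y.z>.<hdp a.b.c.d-build>.<dist>...
_PKG = re.compile(
    r"^(?P<name>.+?)-(?P<apache>\d+\.\d+\.\d+)\.(?P<hdp>\d+\.\d+\.\d+\.\d+-\d+)\."
)


def parse_hdp_rpm(rpm: str) -> Optional[HdpPackage]:
    """Split an HDP-style package name; return None if it does not match."""
    m = _PKG.match(rpm)
    if not m:
        return None
    return HdpPackage(m.group("name"), m.group("apache"), m.group("hdp"))
```

Packages that do not follow the pattern, such as `ambari-server-2.2.1.0-161.x86_64` or `hdp-select-2.4.0.0-169.el6.noarch`, return `None`.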

Created 01-16-2018 12:09 PM


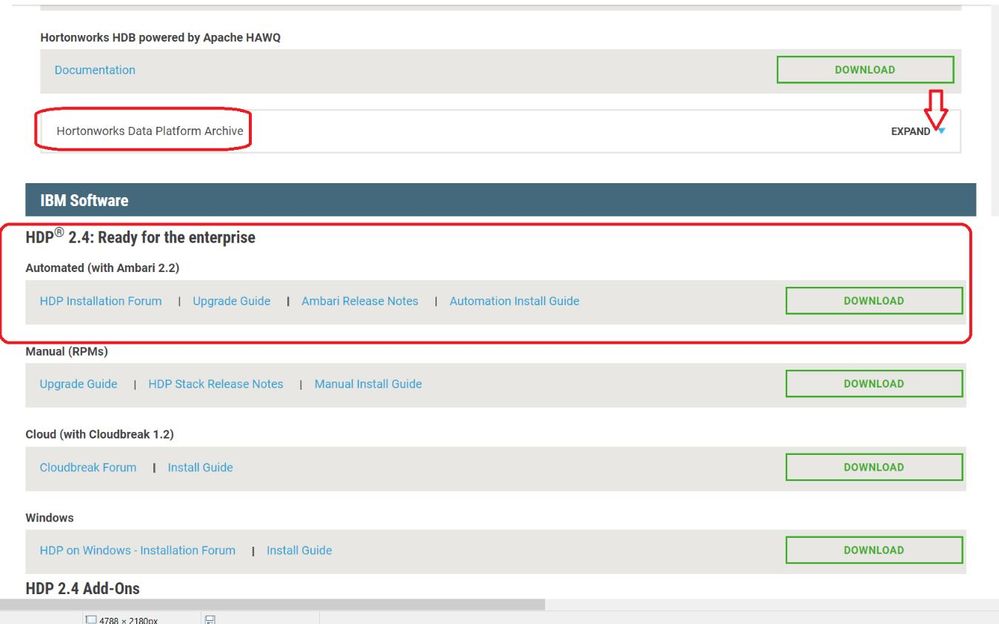

HDP 2.4 is still downloadable at https://hortonworks.com/downloads/#data-platform. Under HDP Downloads, click "View All", locate "Hortonworks Data Platform Archive", and expand it on the right of your screen.

See the attached screenshot.

I will download the sandbox and reproduce your use case.

Created 01-17-2018 10:19 AM


Thank you very much for the information.
