Adding Hosts to a Cluster

Labels: Apache Ambari

Created on 05-09-2016 04:32 PM - edited 08-19-2019 01:31 AM

Hi:

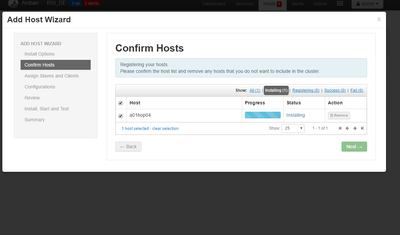

I am trying to add a new host to the cluster, but the wizard has been stuck on this screen for a long time. Any suggestions?

Created on 05-10-2016 03:13 PM - edited 08-19-2019 01:31 AM

Hi:

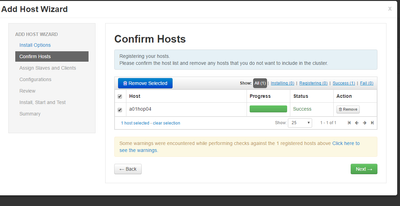

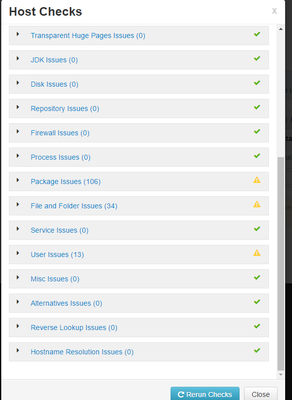

After installing ambari-agent version 2.2.1.0, the same version as the Ambari server, I ran

ambari-agent start

and now it is working well.

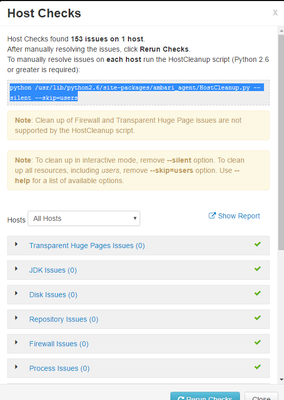

Then I registered the host manually. The warnings appear because there are some directories that I need to remove, but that is OK:

[root@xxxxhdp]# python /usr/lib/python2.6/site-packages/ambari_agent/HostCleanup.py --silent --skip=users

After rerunning the checks (the blue button), everything is fine.

Now all nodes are working:

    {
      "href" : "http://xxxxx:8080/api/v1/hosts",
      "items" : [
        {
          "href" : "http://xxxxxx:8080/api/v1/hosts/a01hop04",
          "Hosts" : {
            "host_name" : "a01hop04"
          }
        },
        {
          "href" : "http://xxxxx:8080/api/v1/hosts/a01hop01",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop01"
          }
        },
        {
          "href" : "http://xxxx:8080/api/v1/hosts/a01hop02",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop02"
          }
        },
        {
          "href" : "http://xxxx:8080/api/v1/hosts/a01hop03",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop03"
          }
        }
      ]
    }

Thanks, everyone.

Created 05-09-2016 04:33 PM

Can you click on the "Installing" link next to the progress bar? It will display the logs.

You can then check which step is taking a long time. If possible, please share the logs.

Created 05-09-2016 04:42 PM

Hi:

There aren't any logs there.

One question: do I need to install something beforehand?

thanks

Created 05-09-2016 04:44 PM

No. You just need to make sure you have passwordless SSH configured from the Ambari host to node a01hop04.

Also make sure a01hop04 has the firewall and SELinux disabled.
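As a rough illustration of the passwordless SSH check, the sketch below builds an `ssh` command that fails instead of prompting for a password. It assumes the OpenSSH client is installed on the Ambari host and that the connection is made as `root`; the function names are hypothetical and not part of Ambari.

```python
import subprocess


def build_ssh_check(host, user="root", timeout=5):
    """Build an ssh command that fails rather than prompting for a password."""
    return [
        "ssh",
        "-o", "BatchMode=yes",              # never prompt; fail if key auth is missing
        "-o", f"ConnectTimeout={timeout}",  # give up quickly on unreachable hosts
        f"{user}@{host}",
        "true",                             # trivial remote command
    ]


def passwordless_ssh_works(host, user="root", timeout=5):
    """Return True if the remote command ran without any password prompt."""
    result = subprocess.run(
        build_ssh_check(host, user, timeout),
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


# Show the command that would be run for the new node from this thread.
print(" ".join(build_ssh_check("a01hop04")))
```

Calling `passwordless_ssh_works("a01hop04")` from the Ambari server host should return `True` only when key-based login is already in place.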

Created 05-09-2016 05:36 PM

@Roberto Sancho You can install ambari-agent manually, edit /etc/ambari-agent/conf/ambari-agent.ini so that it points to the Ambari server, and start the agent. That would make things faster. Please make sure you disable iptables and SELinux.
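A minimal sketch of that edit, assuming the agent configuration keeps the server address in a `hostname` option under a `[server]` section (check your own ambari-agent.ini, since the layout may differ between versions). The function name is hypothetical, and `configparser` drops comments when it rewrites a file, so keep a backup of the original.

```python
import configparser
import io


def point_agent_at_server(ini_text, server_host):
    """Return ini_text with the [server] hostname option set to server_host."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve the case of option names
    parser.read_string(ini_text)
    if not parser.has_section("server"):
        parser.add_section("server")
    parser.set("server", "hostname", server_host)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


# Assumed sample content; the real file has more sections and options.
sample = """[server]
hostname=localhost
url_port=8440
secured_url_port=8441
"""
print(point_agent_at_server(sample, "a01hop01"))
```

After writing the result back to /etc/ambari-agent/conf/ambari-agent.ini, restart the agent so that it picks up the new server address.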

Created 05-10-2016 07:06 AM

Hi:

Everything is fine. My question is: do I need to install the tarball, or start ambari-agent on the host?

    [root@a01hop04 .ssh]# cat /etc/sysconfig/selinux
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of three two values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted

    [root@a01hop04 .ssh]# systemctl disable firewalld
    [root@a01hop04 .ssh]# systemctl stop firewalld
    Failed to stop firewalld.service: Unit firewalld.service not loaded.
    [root@a01hop04 .ssh]# systemctl status firewalld
    ● firewalld.service
       Loaded: not-found (Reason: No such file or directory)
       Active: inactive (dead)
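For checking several nodes at once, the SELinux setting shown above can be read programmatically. This is only a sketch: the function name is hypothetical, and it reads the configured value from the file contents, not the mode that is currently in effect on the running system.

```python
def selinux_mode(config_text):
    """Return the value of SELINUX= from an /etc/sysconfig/selinux style file."""
    for line in config_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if sep and key.strip() == "SELINUX":
            return value.strip()
    return None


# Sample modelled on the file contents posted above.
sample = """# SELINUX= can take one of these three values:
SELINUX=disabled
SELINUXTYPE=targeted
"""
print(selinux_mode(sample))
```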

Created on 05-10-2016 07:52 AM - edited 08-19-2019 01:31 AM

How did you install Ambari Server? Did you use the RPM?

There are two ways to add hosts:

1. If you have installed Ambari Server and are now installing HDP from Ambari, then Ambari takes care of installing the required packages on the nodes that you add to the HDP cluster through the Ambari UI.

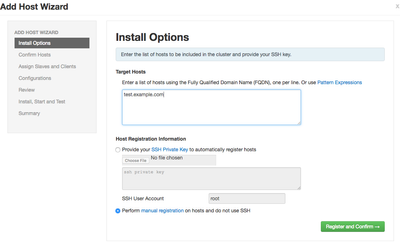

2. You can also register hosts manually, which does not require a password to be provided in the Ambari UI. Please check the steps below for manual host registration:

https://ambari.apache.org/1.2.1/installing-hadoop-using-ambari/content/ambari-chap6-1.html

Once you are done with the above steps, click on manual registration in the "Add Host" wizard in the Ambari UI.

Created 05-10-2016 01:12 PM

A possible reason is that the Ambari server is not able to talk to the new node.

If you have installed ambari-agent manually, can you open http://<ambari-server-ip>:8080/api/v1/hosts in another tab and check whether there is an entry for the new node?

If you are using passwordless SSH, try to SSH to the new node from the Ambari server and check whether that works.

Also, can you share the ambari-server log and the ambari-agent log (from the new node, if ambari-agent is installed)?
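The same check can be scripted. The sketch below assumes the endpoint returns a JSON document with an `items` list whose entries carry `Hosts.host_name` and, once the host belongs to a cluster, `Hosts.cluster_name`, and that the server accepts HTTP basic authentication. The function names and credentials are placeholders, not part of Ambari.

```python
import base64
import json
import urllib.request


def fetch_hosts_json(server, user, password, port=8080):
    """Fetch the raw /api/v1/hosts response (assumes HTTP basic auth)."""
    url = f"http://{server}:{port}/api/v1/hosts"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8")


def registered_hosts(hosts_json):
    """Map host_name to cluster_name (None if not assigned to a cluster yet)."""
    payload = json.loads(hosts_json)
    return {
        item["Hosts"]["host_name"]: item["Hosts"].get("cluster_name")
        for item in payload.get("items", [])
    }


# Offline sample with made-up content in the assumed response shape.
sample = """
{"items": [
  {"Hosts": {"cluster_name": "RSI_DE", "host_name": "a01hop01"}},
  {"Hosts": {"host_name": "a01hop04"}}
]}
"""
hosts = registered_hosts(sample)
print("a01hop04" in hosts, hosts.get("a01hop04"))
```

A host that is missing from the result has not registered with the server at all; a host mapped to `None` has registered but is not part of a cluster yet.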

Created 05-10-2016 02:01 PM

Hi:

I can't see the new node:

    {
      "href" : "http://xxx:8080/api/v1/hosts",
      "items" : [
        {
          "href" : "http://xxx:8080/api/v1/hosts/a01hop01",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop01"
          }
        },
        {
          "href" : "http://xxx:8080/api/v1/hosts/a01hop02",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop02"
          }
        },
        {
          "href" : "http://xxx:8080/api/v1/hosts/a01hop03",
          "Hosts" : {
            "cluster_name" : "RSI_DE",
            "host_name" : "a01hop03"
          }
        }
      ]
    }

Also, the SSH connection works correctly.

The log from the new Ambari agent is:

INFO 2016-05-10 15:44:36,284 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,284 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_collector_zookeeper_server_process with UUID 853d41c8-220e-4e49-95c1-3048d0573e7a

INFO 2016-05-10 15:44:36,284 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,284 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_collector_process with UUID d43e1a99-cc8f-4ea0-a06d-06d54c3d94de

INFO 2016-05-10 15:44:36,284 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,284 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_collector_hbase_master_process with UUID 7fbe2a5d-f7e5-4a00-a4bb-8e8bc89bd07e

INFO 2016-05-10 15:44:36,284 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,284 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_collector_hbase_master_cpu with UUID d86eb46e-5073-431a-80da-fb39e0790a20

INFO 2016-05-10 15:44:36,285 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,285 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling zookeeper_server_process with UUID aee44599-813e-4cb0-8479-eb3a75eaabe7

INFO 2016-05-10 15:44:36,285 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,285 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ambari_agent_disk_usage with UUID eb07da21-8c4c-414e-a0c5-ea3297179b1a

INFO 2016-05-10 15:44:36,285 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,285 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_collector_autostart with UUID cf511140-d46d-4be5-83a2-b5dae7a7b051

INFO 2016-05-10 15:44:36,285 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,285 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling journalnode_process with UUID 185ec91b-4be4-4c1a-a053-75f9e20e5b34

INFO 2016-05-10 15:44:36,285 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,286 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling datanode_unmounted_data_dir with UUID f94a35f8-f048-4fa9-bb17-54485a3620a4

INFO 2016-05-10 15:44:36,286 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,286 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling yarn_nodemanager_health with UUID 3752fb53-f1a4-4589-aff2-90ef704b13da

INFO 2016-05-10 15:44:36,286 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,286 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling datanode_process with UUID 412ebaeb-f960-4ff1-ace7-bd6b01aad068

INFO 2016-05-10 15:44:36,286 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,286 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling yarn_nodemanager_webui with UUID 56a16f35-ce74-4ec8-a90b-5555113a33c1

INFO 2016-05-10 15:44:36,286 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,286 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling datanode_webui with UUID 2f15cec8-5d37-435e-bb02-5e4953b40957

INFO 2016-05-10 15:44:36,287 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,287 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling ams_metrics_monitor_process with UUID 8e6b65e3-62bd-4872-9c34-1b16f4662c1a

INFO 2016-05-10 15:44:36,287 scheduler.py:287 - Adding job tentatively -- it will be properly scheduled when the scheduler starts

INFO 2016-05-10 15:44:36,287 AlertSchedulerHandler.py:330 - [AlertScheduler] Scheduling datanode_storage with UUID eaf7eee7-f0b9-4c39-bf03-1afcff9e4a60

INFO 2016-05-10 15:44:36,287 AlertSchedulerHandler.py:139 - [AlertScheduler] Starting <ambari_agent.apscheduler.scheduler.Scheduler object at 0x2df8050>; currently running: False

INFO 2016-05-10 15:44:38,293 hostname.py:89 - Read public hostname 'a01hop04' using socket.getfqdn()

INFO 2016-05-10 15:44:38,305 logger.py:67 - call['test -w /'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,311 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,311 logger.py:67 - call['test -w /dev'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,317 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,317 logger.py:67 - call['test -w /dev/shm'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,323 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,323 logger.py:67 - call['test -w /run'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,329 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,329 logger.py:67 - call['test -w /sys/fs/cgroup'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,335 logger.py:67 - call returned (1, '')

INFO 2016-05-10 15:44:38,335 logger.py:67 - call['test -w /tmp'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,340 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,340 logger.py:67 - call['test -w /var/log'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,346 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,346 logger.py:67 - call['test -w /usr/hdp'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,351 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,352 logger.py:67 - call['test -w /home'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,357 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,357 logger.py:67 - call['test -w /boot'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,363 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,363 logger.py:67 - call['test -w /rsiiri/syspri2'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,368 logger.py:67 - call returned (1, '')

INFO 2016-05-10 15:44:38,368 logger.py:67 - call['test -w /rsiiri/syspri3'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,374 logger.py:67 - call returned (1, '')

INFO 2016-05-10 15:44:38,374 logger.py:67 - call['test -w /run/user/0'] {'sudo': True, 'timeout': 5}

INFO 2016-05-10 15:44:38,379 logger.py:67 - call returned (0, '')

INFO 2016-05-10 15:44:38,494 Controller.py:145 - Registering with a01hop04 (172.22.3.246) (agent='{"hardwareProfile": {"kernel": "Linux", "domain": "", "physicalprocessorcount": 2, "kernelrelease": "3.10.0-327.el7.x86_64", "uptime_days": "0", "memorytotal": 1884384, "swapfree": "2.00 GB", "memorysize": 1884384, "osfamily": "redhat", "swapsize": "2.00 GB", "processorcount": 2, "netmask": "255.255.240.0", "timezone": "CET", "hardwareisa": "x86_64", "memoryfree": 592136, "operatingsystem": "centos", "kernelmajversion": "3.10", "kernelversion": "3.10.0", "macaddress": "00:50:56:BB:5F:63", "operatingsystemrelease": "7.2.1511", "ipaddress": "172.22.3.246", "hostname": "a01hop04", "uptime_hours": "21", "fqdn": "a01hop04", "id": "root", "architecture": "x86_64", "selinux": false, "mounts": [{"available": "16257780", "used": "36145420", "percent": "69%", "device": "/dev/mapper/centos-root", "mountpoint": "/", "type": "xfs", "size": "52403200"}, {"available": "932032", "used": "0", "percent": "0%", "device": "devtmpfs", "mountpoint": "/dev", "type": "devtmpfs", "size": "932032"}, {"available": "942192", "used": "0", "percent": "0%", "device": "tmpfs", "mountpoint": "/dev/shm", "type": "tmpfs", "size": "942192"}, {"available": "933492", "used": "8700", "percent": "1%", "device": "tmpfs", "mountpoint": "/run", "type": "tmpfs", "size": "942192"}, {"available": "26168620", "used": "32980", "percent": "1%", "device": "/dev/mapper/centos-tmp", "mountpoint": "/tmp", "type": "xfs", "size": "26201600"}, {"available": "25656252", "used": "545348", "percent": "3%", "device": "/dev/mapper/centos-var_log", "mountpoint": "/var/log", "type": "xfs", "size": "26201600"}, {"available": "26168672", "used": "32928", "percent": "1%", "device": "/dev/mapper/centos-usr_hdp", "mountpoint": "/usr/hdp", "type": "xfs", "size": "26201600"}, {"available": "73078988", "used": "1333556", "percent": "2%", "device": "/dev/mapper/centos-home", "mountpoint": "/home", "type": "xfs", "size": "74412544"}, 
{"available": "383204", "used": "125384", "percent": "25%", "device": "/dev/sda1", "mountpoint": "/boot", "type": "xfs", "size": "508588"}, {"available": "188440", "used": "0", "percent": "0%", "device": "tmpfs", "mountpoint": "/run/user/0", "type": "tmpfs", "size": "188440"}], "hardwaremodel": "x86_64", "uptime_seconds": "78040", "interfaces": "eno16780032,lo"}, "currentPingPort": 8670, "prefix": "/var/lib/ambari-agent/data", "agentVersion": "2.2.0.0", "agentEnv": {"transparentHugePage": "", "hostHealth": {"agentTimeStampAtReporting": 1462887878490, "activeJavaProcs": [], "liveServices": [{"status": "Healthy", "name": "ntpd", "desc": ""}]}, "reverseLookup": true, "alternatives": [], "umask": "18", "firewallName": "iptables", "stackFoldersAndFiles": [{"type": "directory", "name": "/etc/hadoop"}, {"type": "directory", "name": "/etc/hive"}, {"type": "directory", "name": "/etc/oozie"}, {"type": "directory", "name": "/etc/zookeeper"}, {"type": "directory", "name": "/etc/hive-hcatalog"}, {"type": "directory", "name": "/etc/tez"}, {"type": "directory", "name": "/etc/falcon"}, {"type": "directory", "name": "/etc/hive-webhcat"}, {"type": "directory", "name": "/etc/spark"}, {"type": "directory", "name": "/etc/pig"}, {"type": "directory", "name": "/var/log/hadoop"}, {"type": "directory", "name": "/var/log/hive"}, {"type": "directory", "name": "/var/log/oozie"}, {"type": "directory", "name": "/var/log/zookeeper"}, {"type": "directory", "name": "/var/log/hive-hcatalog"}, {"type": "directory", "name": "/var/log/falcon"}, {"type": "directory", "name": "/var/log/hadoop-yarn"}, {"type": "directory", "name": "/var/log/hadoop-mapreduce"}, {"type": "directory", "name": "/var/log/spark"}, {"type": "directory", "name": "/usr/lib/flume"}, {"type": "directory", "name": "/usr/lib/storm"}, {"type": "directory", "name": "/var/lib/hive"}, {"type": "directory", "name": "/var/lib/oozie"}, {"type": "directory", "name": "/var/lib/hadoop-hdfs"}, {"type": "directory", "name": 
"/var/lib/hadoop-yarn"}, {"type": "directory", "name": "/var/lib/hadoop-mapreduce"}, {"type": "directory", "name": "/var/lib/spark"}, {"type": "directory", "name": "/hadoop/zookeeper"}, {"type": "directory", "name": "/hadoop/hdfs"}, {"type": "directory", "name": "/hadoop/yarn"}], "existingUsers": [{"status": "Available", "name": "hive", "homeDir": "/home/hive"}, {"status": "Available", "name": "zookeeper", "homeDir": "/home/zookeeper"}, {"status": "Available", "name": "ams", "homeDir": "/home/ams"}, {"status": "Available", "name": "oozie", "homeDir": "/home/oozie"}, {"status": "Available", "name": "ambari-qa", "homeDir": "/home/ambari-qa"}, {"status": "Available", "name": "tez", "homeDir": "/home/tez"}, {"status": "Available", "name": "hdfs", "homeDir": "/home/hdfs"}, {"status": "Available", "name": "yarn", "homeDir": "/home/yarn"}, {"status": "Available", "name": "hcat", "homeDir": "/home/hcat"}, {"status": "Available", "name": "mapred", "homeDir": "/home/mapred"}, {"status": "Available", "name": "falcon", "homeDir": "/var/lib/falcon"}, {"status": "Available", "name": "flume", "homeDir": "/home/flume"}, {"status": "Available", "name": "spark", "homeDir": "/home/spark"}], "firewallRunning": false}, "timestamp": 1462887878406, "hostname": "a01hop04", "responseId": -1, "publicHostname": "a01hop04"}')

INFO 2016-05-10 15:44:38,494 NetUtil.py:60 - Connecting to https://a01hop01:8440/connection_info

INFO 2016-05-10 15:44:38,582 security.py:99 - SSL Connect being called.. connecting to the server

INFO 2016-05-10 15:44:38,667 security.py:60 - SSL connection established. Two-way SSL authentication is turned off on the server.

ERROR 2016-05-10 15:44:38,672 Controller.py:165 - Cannot register host with non compatible agent version, hostname=a01hop04, agentVersion=2.2.0.0, serverVersion=2.2.1.0

INFO 2016-05-10 15:44:38,672 Controller.py:392 - Registration response from a01hop01 was FAILED

ERROR 2016-05-10 15:44:38,673 main.py:315 - Fatal exception occurred:

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/ambari_agent/main.py", line 312, in <module>

main(heartbeat_stop_callback)

File "/usr/lib/python2.6/site-packages/ambari_agent/main.py", line 303, in main

ExitHelper.execute_cleanup()

TypeError: unbound method execute_cleanup() must be called with ExitHelper instance as first argument (got nothing instead)

INFO 2016-05-10 15:45:36,347 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:45:36,349 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

INFO 2016-05-10 15:46:36,355 logger.py:67 - Host contains mounts: ['/sys', '/proc', '/dev', '/sys/kernel/security', '/dev/shm', '/dev/pts', '/run', '/sys/fs/cgroup', '/sys/fs/cgroup/systemd', '/sys/fs/pstore', '/sys/fs/cgroup/cpu,cpuacct', '/sys/fs/cgroup/freezer', '/sys/fs/cgroup/cpuset', '/sys/fs/cgroup/blkio', '/sys/fs/cgroup/perf_event', '/sys/fs/cgroup/memory', '/sys/fs/cgroup/hugetlb', '/sys/fs/cgroup/net_cls', '/sys/fs/cgroup/devices', '/sys/kernel/config', '/', '/proc/sys/fs/binfmt_misc', '/dev/hugepages', '/sys/kernel/debug', '/dev/mqueue', '/var/lib/nfs/rpc_pipefs', '/proc/fs/nfsd', '/tmp', '/var/log', '/usr/hdp', '/home', '/boot', '/rsiiri/syspri2', '/rsiiri/syspri3', '/run/user/0', '/proc/sys/fs/binfmt_misc'].

INFO 2016-05-10 15:46:36,357 logger.py:67 - Mount point for directory /hadoop/hdfs/data is /

INFO 2016-05-10 15:46:36,364 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:46:36,367 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

INFO 2016-05-10 15:47:36,354 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:47:36,354 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

INFO 2016-05-10 15:48:36,321 logger.py:67 - Mount point for directory /hadoop/hdfs/data is /

INFO 2016-05-10 15:48:36,341 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:48:36,341 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

WARNING 2016-05-10 15:49:36,322 base_alert.py:140 - [Alert][ams_metrics_collector_hbase_master_cpu] Unable to execute alert. [Alert][ams_metrics_collector_hbase_master_cpu] Unable to extract JSON from JMX response

INFO 2016-05-10 15:49:36,349 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:49:36,351 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

INFO 2016-05-10 15:50:36,330 logger.py:67 - Mount point for directory /hadoop/hdfs/data is /

INFO 2016-05-10 15:50:36,353 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist

ERROR 2016-05-10 15:50:36,353 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on a01hop03']

This machine is a cloned image of a01hop03, so maybe I need to remove some files.

    ERROR 2016-05-10 15:44:38,672 Controller.py:165 - Cannot register host with non compatible agent version, hostname=a01hop04, agentVersion=2.2.0.0, serverVersion=2.2.1.0
    INFO 2016-05-10 15:44:38,672 Controller.py:392 - Registration response from a01hop01 was FAILED
    ERROR 2016-05-10 15:44:38,673 main.py:315 - Fatal exception occurred:
    Traceback (most recent call last):

I will install the new version and see.

Thanks

Created 05-10-2016 02:35 PM

This error line suggests that the Ambari server and Ambari agent versions do not match:

- ERROR 2016-05-10 15:44:38,672 Controller.py:165 - Cannot register host with non compatible agent version, hostname=a01hop04, agentVersion=2.2.0.0, serverVersion=2.2.1.0

1. Start with a new, clean machine and follow the Hortonworks guide to install the prerequisites and ambari-agent.

2. Edit /etc/ambari-agent/conf/ambari-agent.ini and update the Ambari server IP/FQDN.

3. Restart ambari-agent.

4. Open http://<ambari-server-ip>:8080/api/v1/hosts. If you can see the new node, it has registered successfully and you can proceed with the installation. If you cannot see it, check the ambari-agent log and look for errors.
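When looking through the ambari-agent log in step 4, a version mismatch like the one quoted above can be picked out with a small script. This is a sketch that relies only on the wording of the error line in this thread; other Ambari versions may phrase it differently, and the function name is hypothetical.

```python
import re

# Matches the "agentVersion=..., serverVersion=..." part of the error line.
MISMATCH = re.compile(
    r"agentVersion=(?P<agent>[\d.]+),\s*serverVersion=(?P<server>[\d.]+)"
)


def find_version_mismatch(log_text):
    """Return (agent_version, server_version) from the first mismatch line, else None."""
    for line in log_text.splitlines():
        if "non compatible agent version" not in line:
            continue
        match = MISMATCH.search(line)
        if match:
            return match.group("agent"), match.group("server")
    return None


# The error line from this thread.
sample = (
    "ERROR 2016-05-10 15:44:38,672 Controller.py:165 - Cannot register host "
    "with non compatible agent version, hostname=a01hop04, "
    "agentVersion=2.2.0.0, serverVersion=2.2.1.0"
)
print(find_version_mismatch(sample))
```

If the function returns a pair of different versions, install the ambari-agent release that matches the server before retrying the registration.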