Support Questions

After a Hive INSERT OVERWRITE, the old data in HDFS is not deleted but retained as historical data. As a result, the table's data occupies more and more space. What should we do?

- Labels: Apache Hadoop, Apache Hive

Created 12-26-2018 02:45 AM

Created 12-26-2018 06:20 AM

It seems your table is not partitioned. When you run INSERT OVERWRITE into a partition of an external table whose HDFS directory already exists, Hive behaves differently depending on whether the partition definition already exists in the Hive metastore:

- If the partition definition does not exist, Hive does not try to guess where the target partition directories are (for either static or dynamic partitions), so it cannot delete the existing files under those partitions. That data is then retained in HDFS as historical data, as in your case.

- If the partition definition does exist, Hive attempts to remove all files under the target partition directory before writing the new data into it.
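
One way to make sure the partition definition exists before overwriting is to register it explicitly. The following is a minimal sketch, not a definitive procedure: the external table `sales_ext`, its partition column `dt`, the staging table `sales_staging`, and the HDFS path are all hypothetical names chosen for illustration.

```sql
-- Hypothetical names: sales_ext, sales_staging, dt, and the LOCATION path are illustrative.

-- Register the partition in the metastore if it is not already defined.
ALTER TABLE sales_ext ADD IF NOT EXISTS PARTITION (dt='2018-12-26')
LOCATION '/data/sales_ext/dt=2018-12-26';

-- Alternatively, let Hive discover partition directories that already exist
-- under the table location.
MSCK REPAIR TABLE sales_ext;

-- Confirm the partition is now known to the metastore.
SHOW PARTITIONS sales_ext;

-- With the partition defined, the overwrite targets a directory Hive knows about.
INSERT OVERWRITE TABLE sales_ext PARTITION (dt='2018-12-26')
SELECT id, amount FROM sales_staging WHERE dt='2018-12-26';
```

Running `SHOW PARTITIONS` before the overwrite is a cheap way to verify which of the two cases above applies.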

Reference here

Please accept the answer you found most useful.

Created 12-26-2018 07:04 AM

My table is a normal table. When I run INSERT OVERWRITE, I find that the old data stays under the table's HDFS directory in a folder such as base_0000003. Why isn't the old data moved to the HDFS trash? I cannot understand this.

Created 12-26-2018 07:36 AM

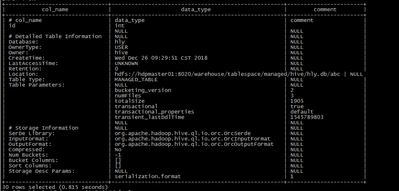

Could you please share the output of the command below? Is it Hive 3.x or Hive 2.x?

DESCRIBE FORMATTED <table_name>
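
Besides `DESCRIBE FORMATTED`, a few other statements may help answer these questions. This is only a sketch: `my_table` is a placeholder name, and `version()` is assumed to be available (it was added in Hive 2.1).

```sql
-- Report the Hive version the session is connected to.
SELECT version();

-- List all table properties for the placeholder table my_table.
SHOW TBLPROPERTIES my_table;

-- Show a single property, here whether the table is transactional.
SHOW TBLPROPERTIES my_table("transactional");
```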

Created on 12-26-2018 07:42 AM - edited 08-17-2019 03:20 PM

I use Hive 3.0; this is the information I provided.

Created 12-26-2018 07:48 AM

@Jack

From your output above, I can see it is a MANAGED_TABLE. If the table has TBLPROPERTIES ("auto.purge"="true"), the table's previous data is not moved to Trash when an INSERT OVERWRITE query is run against it. This functionality applies only to managed tables and is turned off when the "auto.purge" property is unset or set to false. For more detail, see HIVE-15880.
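
As a rough illustration of the property described above, the statements below set and check "auto.purge" on a managed table before overwriting it. The names `my_table` and `staging_table` are hypothetical. Note that with the property enabled, the overwritten data bypasses Trash and cannot be restored from it.

```sql
-- Hypothetical names: my_table (managed table) and staging_table are placeholders.

-- Enable auto.purge so that overwritten data skips the HDFS Trash.
ALTER TABLE my_table SET TBLPROPERTIES ("auto.purge"="true");

-- Verify the property took effect.
SHOW TBLPROPERTIES my_table("auto.purge");

-- Subsequent overwrites no longer move the previous data to Trash.
INSERT OVERWRITE TABLE my_table
SELECT * FROM staging_table;
```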