Ambari-Metrics collector not starting

Labels: Apache Ambari

Created 02-10-2016 12:46 AM

When I start the Ambari Metrics collector, the start operation reports no error, but the collector never comes up. This is what I see in the log file:

Value of zookeeper.znode.parent is: /hbase-unsecure

retries=35, started=229269 ms ago, cancelled=false, msg=
2016-02-09 19:39:15,043 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:39:15,043 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=20, retries=35, started=249286 ms ago, cancelled=false, msg=
2016-02-09 19:39:35,080 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:39:35,081 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=21, retries=35, started=269324 ms ago, cancelled=false, msg=
2016-02-09 19:39:55,151 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:39:55,152 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=22, retries=35, started=289395 ms ago, cancelled=false, msg=
2016-02-09 19:40:15,192 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:40:15,193 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=23, retries=35, started=309436 ms ago, cancelled=false, msg=
2016-02-09 19:40:35,275 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:40:35,276 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=24, retries=35, started=329519 ms ago, cancelled=false, msg=
2016-02-09 19:40:55,283 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:40:55,283 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=25, retries=35, started=349526 ms ago, cancelled=false, msg=
2016-02-09 19:41:15,437 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:41:15,437 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=26, retries=35, started=369680 ms ago, cancelled=false, msg=
2016-02-09 19:41:35,604 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:41:35,604 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=27, retries=35, started=389847 ms ago, cancelled=false, msg=
2016-02-09 19:41:55,633 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:41:55,635 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=28, retries=35, started=409878 ms ago, cancelled=false, msg=
2016-02-09 19:42:15,646 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:42:15,646 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=29, retries=35, started=429889 ms ago, cancelled=false, msg=
2016-02-09 19:42:35,841 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:42:35,841 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=30, retries=35, started=450084 ms ago, cancelled=false, msg=
2016-02-09 19:42:56,032 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:42:56,032 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=31, retries=35, started=470275 ms ago, cancelled=false, msg=
2016-02-09 19:43:16,088 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:43:16,088 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=32, retries=35, started=490331 ms ago, cancelled=false, msg=
2016-02-09 19:43:36,265 ERROR org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation: The node /hbase is not in ZooKeeper. It should have been written by the master. Check the value configured in 'zookeeper.znode.parent'. There could be a mismatch with the one configured in the master.
2016-02-09 19:43:36,265 INFO org.apache.hadoop.hbase.client.RpcRetryingCaller: Call exception, tries=33, retries=35, started=510508 ms ago, cancelled=false, msg=
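
The log shows the client asking for `/hbase` while the configured parent is `/hbase-unsecure`. To make that mismatch quick to spot in a long log, here is a minimal sketch that counts the log lines reporting a missing znode and lists which znode path the client requested. The function name `summarize_znode_errors` is hypothetical; it relies only on the message text quoted above.

```shell
# Summarize "node ... is not in ZooKeeper" errors in a collector log.
# Usage: summarize_znode_errors /path/to/ambari-metrics-collector.log
summarize_znode_errors() {
    log_file=$1
    # Count the log lines that report a missing znode (0 if there are none).
    count=$(grep -c 'is not in ZooKeeper' "$log_file" || true)
    # Collect the distinct znode paths named in those messages.
    znodes=$(sed -n 's|.*The node \(/[^ ]*\) is not in ZooKeeper.*|\1|p' "$log_file" \
        | sort -u | tr '\n' ' ')
    printf 'missing-znode errors: %s\n' "$count"
    printf 'znode(s) requested by the client: %s\n' "${znodes% }"
}
```

If the path printed here differs from the value of `zookeeper.znode.parent` you expect the collector to use, the running process is probably not picking up your configuration.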

Created 02-29-2016 03:42 AM

I finally solved it by combining some of the steps suggested in this thread.

I first checked the value of `zookeeper.znode.parent` in HBase. Setting the same value in Ambari did not work at first, because some metrics processes were still running on that machine and had cached the old `/hbase` value. I had to find them with `ps -ef | grep metrics` and kill all of them.

Watch the Ambari Metrics collector log (/var/log/ambari-metrics-collector/ambari-metrics-collector.log) while you carry out the steps below.

Steps:

0. tail -f /var/log/ambari-metrics-collector/ambari-metrics-collector.log

1. Stop Ambari

2. Kill all the metrics processes

3. curl --user admin:admin -i -H "X-Requested-By: ambari" -X DELETE http://`hostname -f`:8080/api/v1/clusters/CLUSTERNAME/services/AMBARI_METRICS

=> Make sure you replace CLUSTERNAME with your cluster name

4. Refresh Ambari UI

5. Add Service

6. Select Ambari Metrics

7. In the configuration screen, make sure to set `zookeeper.znode.parent` to the value configured in the HBase service. By default in Ambari Metrics it is set to an empty value.

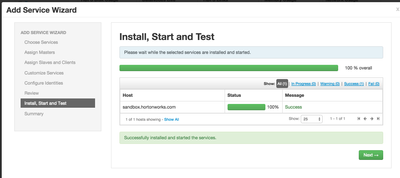

8. Deploy
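
Step 3 can be wrapped in a small script so the cluster name and host are not retyped by hand. This is only a sketch: the function names are hypothetical, the `AMBARI_USER` and `AMBARI_PASSWORD` variables are my own addition (defaulting to the `admin:admin` used above), and port 8080 is taken from the command in the post.

```shell
# Build the Ambari REST URL of the AMBARI_METRICS service for a cluster.
# Arguments: Ambari server host, cluster name.
ams_service_url() {
    ambari_host=$1
    cluster_name=$2
    printf 'http://%s:8080/api/v1/clusters/%s/services/AMBARI_METRICS\n' \
        "$ambari_host" "$cluster_name"
}

# Delete the Ambari Metrics service (step 3), using the same curl
# options as the command in the post.
delete_ams_service() {
    url=$(ams_service_url "$1" "$2")
    curl --user "${AMBARI_USER:-admin}:${AMBARI_PASSWORD:-admin}" -i \
        -H "X-Requested-By: ambari" -X DELETE "$url"
}
```

For example, `delete_ams_service "$(hostname -f)" Sandbox` would issue the same request as the Sandbox command in this thread. Print the URL with `ams_service_url` first if you want to check it before deleting anything.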

Created 02-12-2016 10:29 PM

Try

curl --user admin:admin -i -H "X-Requested-By: ambari" -X DELETE http://`hostname -f`:8080/api/v1/clusters/CLUSTERNAME/services/AMBARI_METRICS

For example, I used this on the Sandbox:

curl --user admin:admin -i -H "X-Requested-By: ambari" -X DELETE http://`hostname -f`:8080/api/v1/clusters/Sandbox/services/AMBARI_METRICS

Created on 02-12-2016 10:31 PM - edited 08-19-2019 01:58 AM

Created 02-14-2016 12:56 AM

@Prakash Punj There are a few misconfigurations in your settings:

hbase.rootdir - hdfs://hdp-m.samitsolutions.com:8020/apps/hbase/data

hbase.cluster.distributed - TRUE

Metrics service operation mode - embedded

hbase.zookeeper.property.clientPort - 2181

hbase.zookeeper.quorum - hdp-m.samitsolutions.com

If you run AMS in embedded mode, then hbase.cluster.distributed should be false and hbase.rootdir should be set to a local directory using the "file://" scheme. If you prefer distributed mode, then timeline.metrics.service.operation.mode should be "distributed". In either case, AMS runs its own ZooKeeper instance for the AMS HBase, which by default listens on port 61181. It looks like you are trying to use the cluster's ZooKeeper instead. My recommendation: switch to "embedded" mode (unless your cluster has hundreds of nodes) and use the default AMS ZooKeeper on port 61181.
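
The embedded-mode rules above can be checked mechanically before restarting the collector. The sketch below encodes only the two rules stated in this reply; the function name `check_ams_mode` and its argument order are hypothetical, and you have to read the three values from your own AMS configuration.

```shell
# Check AMS settings against the embedded-mode rules described above.
# Arguments: operation mode, hbase.cluster.distributed, hbase.rootdir.
# Prints one line per problem and returns non-zero if any were found.
check_ams_mode() {
    mode=$1
    # Normalize TRUE/True/true so the comparison is case-insensitive.
    distributed=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    rootdir=$3
    status=0
    if [ "$mode" = "embedded" ]; then
        if [ "$distributed" != "false" ]; then
            echo "embedded mode: hbase.cluster.distributed should be false, got $2"
            status=1
        fi
        case $rootdir in
            file://*) ;;
            *)
                echo "embedded mode: hbase.rootdir should use the file:// scheme, got $rootdir"
                status=1
                ;;
        esac
    fi
    # No checks for other modes: the reply gives no further rules for them.
    return $status
}
```

Called with the settings quoted above, `check_ams_mode embedded TRUE hdfs://hdp-m.samitsolutions.com:8020/apps/hbase/data` reports both problems.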

Created 08-09-2016 07:11 PM

Thank you.

I had followed all the steps before without any success, except for the 'zookeeper.znode.parent' part.

Metrics are now up and running!

Version 2.1.1

Created 02-21-2017 10:23 AM

This solved a similar issue of mine. I didn't have to change any of the ZooKeeper settings; the reinstall as described here did the trick. Thank you.

Created 03-30-2016 05:39 PM

I had a similar problem. Moving the Metrics collector to another server through the Ambari GUI fixed it.

Created 05-16-2016 04:33 PM

I ran into a similar issue: the collector would start and later die. This was after it had been moved to distributed mode and had been running fine for some time. The log said:

org.apache.hadoop.hbase.MasterNotRunningException: java.io.IOException: Can't get master address from ZooKeeper; znode data == null
Caused by: java.io.IOException: Can't get master address from ZooKeeper; znode data == null

However, the actual issue was that hbase.rootdir was not HA-aware. Once it was set to the nameservice, it was fine. The issue evidently appeared when the active NameNode switched. Anyway, I thought I would note this in case someone else runs into it.
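
A quick way to tell whether hbase.rootdir is HA-aware is to compare the authority part of the URI with the HDFS nameservice. This is a rough sketch with hypothetical function names; the nameservice `mycluster` and the host in the usage note are placeholders, not values from this thread.

```shell
# Print the authority (host[:port] or nameservice) of an hdfs:// URI.
hdfs_authority() {
    printf '%s\n' "$1" | sed -n 's|^hdfs://\([^/]*\).*|\1|p'
}

# Succeed only if hbase.rootdir points at the given HA nameservice
# rather than at a single NameNode host.
# Arguments: hbase.rootdir value, nameservice name.
rootdir_uses_nameservice() {
    [ "$(hdfs_authority "$1")" = "$2" ]
}
```

For example, `rootdir_uses_nameservice hdfs://nn1.example.com:8020/user/ams/hbase mycluster` fails, while `rootdir_uses_nameservice hdfs://mycluster/user/ams/hbase mycluster` succeeds. On an HA cluster the nameservice name should be available from `hdfs getconf -confKey dfs.nameservices`.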

Created 06-02-2016 01:25 PM

A. Run Ambari Metrics in distributed mode rather than embedded mode

If you are running with more than 3 nodes, I strongly suggest running in distributed mode and writing the hbase.rootdir contents to HDFS directly, rather than to the local disk of a single node. This applies to already installed and running IOP clusters.

- In the Ambari Web UI, select the Ambari Metrics service and navigate to Configs. Update the following properties:

- Restart Metrics Collector and affected Metrics monitors

Created 07-29-2016 05:10 PM

@Jonas I'm getting a Kerberos error after restarting the Metrics collector. It was working fine for some time after the Kerberos implementation. I do see a valid key for the ams id (it's an application id, so it should renew on its own).

ERROR: 2016-07-29 12:34:15,212 WARN [TGT Renewer for amshbase/ip@example.COM] security.UserGroupInformation: Exception encountered while running the renewal command. Aborting renew thread. ExitCodeException exitCode=1: kinit: Ticket expired while renewing credentials

hbase.regionserver.kerberos.principal - hbase/_HOST@example.COM

hbase.master.kerberos.principal - hbase/_HOST@example.COM

hbase.rootdir - hdfs://<hostname>/user/ams/hbase

hbase.cluster.distributed - TRUE

Metrics service operation mode - distributed

hbase.zookeeper.property.clientPort - 2181

hbase.zookeeper.quorum - zknode1,zknode2,zknode3