Support Questions (Cloudera Community)


Ambari Pig View not working in HDP 2.5 multi-node install

Labels: Apache Ambari, Apache Pig

Created 12-06-2016 03:02 AM


In HDP 2.5 (Ambari 2.4.2) the Pig View is not working in a multi-node install.

It works in the Sandbox install (single node) but fails in a multi-node installation. I have followed all the steps (proxy settings) in the Ambari Views Guide for this and previous versions, and the Pig View configuration uses the same settings that work on the Sandbox. The Pig View loads without errors, but it returns an error whenever I try to run any script.

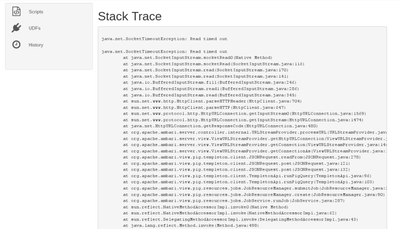

It always returns the error "Job failed to start", and the "Stack Trace" shows something like:

java.net.SocketTimeoutException: Read timed out

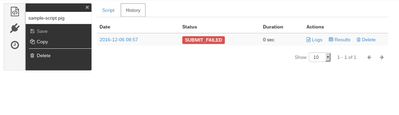

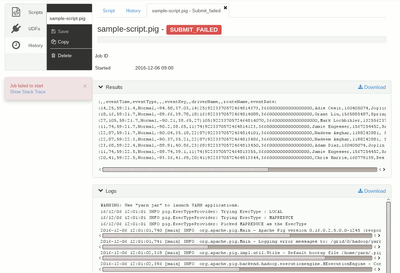

Stranger still, if I go to the History tab the job appears with the error status SUBMIT_FAILED, but there is no error in the Logs, and if I click the Date link I get the results of the job as if it had completed successfully!
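
One way to picture this paradox (SUBMIT_FAILED in the UI, yet results exist) is that a read timeout only stops the caller from waiting; it does not undo work the other side has already started. The Python sketch below is an analogy, not Ambari code: a hypothetical local HTTP server (`SlowSubmitHandler`) takes 1 s to "submit a job", and the client waits with a configurable read timeout, roughly the role the `views.request.read.timeout.millis` setting discussed in the replies appears to play for the view. The delay, timeouts, and all names are assumptions chosen for illustration.

```python
import http.client
import socket
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer

# Set by the hypothetical backend once its slow work has finished.
work_done = threading.Event()


class SlowSubmitHandler(BaseHTTPRequestHandler):
    """Stand-in for a slow backend: it finishes the work even if the
    client has already given up waiting for the reply."""

    def do_GET(self):
        time.sleep(1.0)       # simulated slow job submission
        work_done.set()       # the "job" really was submitted
        try:
            self.send_response(200)
            self.end_headers()
            self.wfile.write(b"submitted")
        except OSError:
            pass              # the client may already have closed the socket

    def log_message(self, *args):
        pass                  # silence per-request logging


def submit(port, read_timeout):
    """Send one request; return 'ok' if the reply arrives in time, else 'timeout'."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=read_timeout)
    try:
        conn.request("GET", "/submit")
        conn.getresponse().read()
        return "ok"
    except socket.timeout:    # Python's counterpart of java.net.SocketTimeoutException
        return "timeout"
    finally:
        conn.close()


def demo(read_timeout):
    """Return (what the client saw, whether the server finished the work)."""
    work_done.clear()
    server = HTTPServer(("127.0.0.1", 0), SlowSubmitHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        client_result = submit(server.server_address[1], read_timeout)
        server_finished = work_done.wait(timeout=5)
    finally:
        server.shutdown()
        server.server_close()
    return client_result, server_finished


if __name__ == "__main__":
    print(demo(0.3))   # ('timeout', True): client gave up, work still completed
    print(demo(3.0))   # ('ok', True): timeout longer than the work
```

With a 0.3 s timeout the client reports a timeout even though the server-side work completes, which mirrors a job marked SUBMIT_FAILED whose results still appear; with a timeout longer than the work, both sides agree.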

Also, running Pig from the command line on the same Ambari server works perfectly.

Does anybody know how to fix this so the Pig View can be used without these errors?

Created 12-12-2016 01:44 AM


It seems I have finally fixed this: it really was a timeout problem, and the higher values I had set earlier were still not enough.

I increased views.request.read.timeout.millis to 40 seconds:

views.request.read.timeout.millis = 40000

and now it works every time (so far).

Created 12-06-2016 03:06 AM


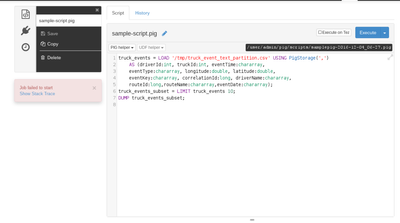

To give more information, the Pig script I'm using for testing is a basic load/dump example from the Beginners Guide:

    truck_events = LOAD '/tmp/truck_event_text_partition.csv' USING PigStorage(',')
        AS (driverId:int, truckId:int, eventTime:chararray,
            eventType:chararray, longitude:double, latitude:double,
            eventKey:chararray, correlationId:long, driverName:chararray,
            routeId:long, routeName:chararray, eventDate:chararray);
    truck_events_subset = LIMIT truck_events 10;
    DUMP truck_events_subset;

Created 12-06-2016 03:07 AM


I will test this and report back tomorrow.

Created 12-10-2016 06:50 PM


Any news on this?

Created 12-06-2016 04:16 AM


Since you are getting "SocketTimeoutException: Read timed out", could you try increasing the following property to a larger value in your "/etc/ambari-server/conf/ambari.properties" file, to see whether the read timeout is simply too short? Some queries can take a while to execute.

views.ambari.request.read.timeout.millis=60000

- Ambari Server must be restarted after making this change.
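
If you prefer to script the change rather than edit the file by hand, here is a minimal Python sketch. `set_property` is a hypothetical helper, not part of Ambari; it assumes simple `key=value` (or `key = value`, `key: value`) lines, rewrites every uncommented line for the key so a leftover duplicate cannot override the new value, leaves comments and other properties alone, and appends the key if it is missing.

```python
import re


def set_property(text, key, value):
    """Return `text` with every uncommented `key=...` / `key: ...` line set to
    `key=value`; append `key=value` if the key is not present (hypothetical helper)."""
    # Anchored at line start (only spaces/tabs allowed before the key), so
    # '#'-commented lines and longer keys that merely contain `key` are not touched.
    pattern = re.compile(r"^([ \t]*)" + re.escape(key) + r"[ \t]*[=:].*$", re.MULTILINE)
    new_line = f"{key}={value}"
    if pattern.search(text):
        # A function replacement avoids backslash escapes in `value` being interpreted.
        return pattern.sub(lambda m: m.group(1) + new_line, text)
    if text and not text.endswith("\n"):
        text += "\n"
    return text + new_line + "\n"
```

A possible workflow: read `/etc/ambari-server/conf/ambari.properties`, pass its text through `set_property(text, "views.ambari.request.read.timeout.millis", 60000)`, keep a backup of the original file, write the result back, and then run `ambari-server restart` so the new value is picked up.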


Created 12-06-2016 12:11 PM


@jss Thank you very much for your answer and suggestions. I suspected that was the issue, but I've tried this and the same problem persists. These are the changes I've made to the view timeout parameters:

    -views.ambari.request.connect.timeout.millis=30000
    -views.ambari.request.read.timeout.millis=45000
    +views.ambari.request.connect.timeout.millis=60000
    +views.ambari.request.read.timeout.millis=90000
    ...
    -views.request.connect.timeout.millis=5000
    -views.request.read.timeout.millis=10000
    +views.request.connect.timeout.millis=10000
    +views.request.read.timeout.millis=20000
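
To make the before/after values in the diff above easier to compare, this Python sketch converts each changed `*.timeout.millis` entry to seconds. `parse_properties`, `to_seconds` and `timeout_changes` are hypothetical helpers, and the parser is deliberately minimal (only `=` separators, no line continuations), which is enough for the lines quoted in this thread.

```python
def parse_properties(text):
    """Minimal key=value parser: skips blank lines and '#'/'!' comments; a later
    duplicate key overrides an earlier one. ':' separators and line
    continuations are NOT handled (simplifying assumption)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def to_seconds(millis):
    """Convert a millisecond string to seconds (None stays None)."""
    return None if millis is None else int(millis) / 1000


def timeout_changes(before, after):
    """Map every *.timeout.millis key whose value differs between the two
    snapshots to an (old_seconds, new_seconds) pair, sorted by key."""
    old, new = parse_properties(before), parse_properties(after)
    changes = {}
    for key in sorted(set(old) | set(new)):
        if key.endswith(".timeout.millis") and old.get(key) != new.get(key):
            changes[key] = (to_seconds(old.get(key)), to_seconds(new.get(key)))
    return changes


# Values copied from the diff in this post.
BEFORE = """
views.ambari.request.connect.timeout.millis=30000
views.ambari.request.read.timeout.millis=45000
views.request.connect.timeout.millis=5000
views.request.read.timeout.millis=10000
"""

AFTER = """
views.ambari.request.connect.timeout.millis=60000
views.ambari.request.read.timeout.millis=90000
views.request.connect.timeout.millis=10000
views.request.read.timeout.millis=20000
"""

for key, (old_s, new_s) in timeout_changes(BEFORE, AFTER).items():
    print(f"{key}: {old_s} s -> {new_s} s")
# prints:
# views.ambari.request.connect.timeout.millis: 30.0 s -> 60.0 s
# views.ambari.request.read.timeout.millis: 45.0 s -> 90.0 s
# views.request.connect.timeout.millis: 5.0 s -> 10.0 s
# views.request.read.timeout.millis: 10.0 s -> 20.0 s
```

For reference, the fix that finally worked in this thread raised views.request.read.timeout.millis from the 20 s shown here to 40 s.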

But nothing changed, and the same SUBMIT_FAILED error happens when I run any script 😞

Created on 12-06-2016 12:32 PM - edited 08-19-2019 01:36 AM


Something new I've noticed after several tries: although I always get the error, sometimes the results are shown correctly when I open the execution details, and sometimes they are empty. I'm including some screenshots to illustrate the situation.

Script exec error:

Stack trace:

History job error:

Job details: script results are OK (this happens only sometimes)
