Support Questions

Ambari files view upload error: java.net.NoRouteToHostException: myhost:50075: No route to host (Host unreachable)

Labels: Apache Ambari, Apache Hadoop

Created on 07-25-2019 01:06 AM - edited 08-17-2019 04:35 PM


Trying to upload a simple CSV file into HDFS via the Ambari Files View, I get the error below:

    java.net.NoRouteToHostException: hw04.ucera.local:50075: No route to host (Host unreachable)
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
        at java.net.Socket.connect(Socket....

For reference, here are the hosts on the cluster

and the services on the HW04 node:

- Accumulo Client

- DataNode

- HBase Client

- HDFS Client

- Hive Client

- HST Agent

- Infra Solr Client

- Log Feeder

- MapReduce2 Client

- Metrics Monitor

- Oozie Client

- Pig Client

- Spark2 Client

- Sqoop Client

- Tez Client

- YARN Client

- ZooKeeper Client

Not sure what the error means. Any debugging suggestions or fixes?


Created 07-25-2019 01:10 AM


The most important requirement for the cluster is that not only Ambari must be able to resolve every cluster node by its FQDN; all the cluster nodes must also be able to resolve each other by FQDN.

So please make sure that all your cluster nodes can resolve each other using their hostname / FQDN.

Please check the "/etc/hosts" file on the Ambari server node as well as on the other cluster nodes (the file can be the same on every node, as long as it resolves all the cluster nodes). If you are using DNS entries, please make sure that each host can resolve every other host's DNS name.

https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.2.2/bk_ambari-installation-ppc/content/edit_the...

https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.2.2/bk_ambari-installation-ppc/content/check_dn...
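The "/etc/hosts" check described above can be sketched as a small shell function. This is only an illustration: `missing_hosts` is a hypothetical helper name (not part of Ambari), and it assumes name resolution goes through a hosts file rather than DNS.

```shell
# Sketch: print every FQDN that has no entry in the given hosts file.
# missing_hosts is a hypothetical helper, not an Ambari or Hadoop tool.
# usage: missing_hosts HOSTS_FILE FQDN...
missing_hosts() {
    hosts_file=$1
    shift
    for fqdn in "$@"; do
        # Drop comments, then compare each name column (field 2 onward)
        # against the wanted name, ignoring case.
        if ! awk -v want="$fqdn" '
            BEGIN { want = tolower(want); found = 0 }
            {
                sub(/#.*/, "")
                for (i = 2; i <= NF; i++)
                    if (tolower($i) == want) found = 1
            }
            END { exit(found ? 0 : 1) }
        ' "$hosts_file"; then
            echo "$fqdn"
        fi
    done
}
```

Running `missing_hosts /etc/hosts hw01.ucera.local hw02.ucera.local hw03.ucera.local hw04.ucera.local` on each node would list the names that node cannot resolve from its hosts file; empty output means all entries are present.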

Created 07-25-2019 01:30 AM


Please check whether you can access the hostname "hw04.ucera.local" from all your cluster nodes without any hostname or firewall issue.

Please run the same commands from all cluster nodes:

    # ping hw04.ucera.local
    # telnet hw04.ucera.local 50075
    # hostname -f
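If telnet is not installed on a node, the port check can also be approximated in bash. The sketch below is an assumption-laden stand-in for the telnet test: `port_open` is a hypothetical helper, and it relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command being available.

```shell
# Sketch: succeed only if a TCP connection to HOST:PORT can be opened.
# port_open is a hypothetical helper; it needs bash (for /dev/tcp) and
# the coreutils timeout command.
# usage: port_open HOST PORT
port_open() {
    local host=$1 port=$2
    # The connection is attempted in a child bash so the file descriptor
    # is closed again as soon as the child exits.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example, `port_open hw04.ucera.local 50075 && echo reachable || echo blocked` run from each node should give the same answer as the telnet test, though without telnet's more detailed error text.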

Created 07-25-2019 01:53 AM


Checking the /etc/hosts files on the hw[1-3] cluster nodes, there was no hw04 FQDN entry (these were the original nodes; hw04 was added later via Ambari). I have now added it on those nodes as well as on hw04. I was able to ping hw04 from all the other nodes regardless. However, I was not able to run

    telnet hw04.ucera.local 50075

from any of the nodes. Not sure what this means or how to fix it, though.

** Also interesting that Ambari does not automatically add the new FQDN to the /etc/hosts file of the existing nodes when another node is added (since it already has root access). This seems to mean that any time an admin wants to add more nodes, they need to go through all the cluster nodes and add the entry manually. Any specific reason for this design?

Created 07-25-2019 02:50 AM


A few things:

1. Ambari never adds any host entry to the "/etc/hosts" file. It is the responsibility of the cluster admin to make sure all the hostname entries are added either to a DNS server or to the "/etc/hosts" file of each node of the cluster (irrespective of whether it is a new node or an old node).

2. Every cluster node should return a fully qualified domain name (FQDN) when you run the following command on it:

    # hostname -f

3. Every node in your cluster should be able to resolve every other node using its FQDN (not a short alias hostname).

So "ping hw04" and "ping hw04.ucera.local" are not the same test.

So to fix the issue, please perform the above checks, and make sure that the "/etc/hosts" entries on the Ambari server host and on all cluster nodes are identical.

Then verify that ping and telnet work fine from all cluster nodes and that they can reach "hw04.ucera.local" correctly:

    # cat /etc/hosts
    # ping hw04.ucera.local
    # telnet hw04.ucera.local 50075
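The difference between a short name and an FQDN in points 2 and 3 can be checked mechanically. This is a rough sketch only: `is_fqdn` is a hypothetical helper, and it simply assumes that a name counts as fully qualified when it has at least two non-empty dot-separated labels, which is how `hostname -f` prints it.

```shell
# Sketch: return 0 when NAME looks fully qualified (host.domain), else 1.
# is_fqdn is a hypothetical helper; the "contains a dot" rule is a heuristic.
# usage: is_fqdn NAME
is_fqdn() {
    case $1 in
        .*|*.|*..*) return 1 ;;   # leading, trailing, or doubled dot
        *.*)        return 0 ;;   # e.g. hw04.ucera.local
        *)          return 1 ;;   # short name such as hw04, or empty
    esac
}
```

A node could then be checked with `is_fqdn "$(hostname -f)" || echo "hostname -f did not return an FQDN"`.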

Created on 07-25-2019 08:22 PM - edited 08-17-2019 04:35 PM


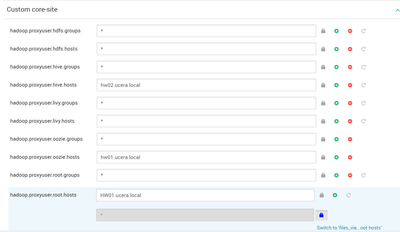

I think I have determined the source of the error. I was previously getting an error of

    Unauthorized connection for super-user: root from IP

from the Files View when attempting to upload files. To fix this, I set

    hadoop.proxyuser.root.groups=*
    hadoop.proxyuser.root.hosts=*

following http://community.hortonworks.com/answers/70794/view.html

This initially appeared to work, but then suddenly stopped working. I am finding that the Ambari HDFS configs I had set keep reverting to the default config group (away from the changes I had made to address the original problem). The dashboard config history shows this...

yet the HDFS service says it is using "version 1" (the initial configs), and Configs > Advanced > core-site shows...

implying that the custom changes are in fact not being used. This is a bit confusing to me.

I need to continue debugging the situation. Any suggestions?
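One way to see which proxy-user values a node actually has in its deployed configuration, independent of what the Ambari UI displays, is `hdfs getconf -confKey`. The sketch below wraps that command; `show_proxyuser_conf` is a hypothetical helper name, and note that `getconf` reads the local client configuration, so it shows what was written to that node rather than what a running NameNode loaded at startup.

```shell
# Sketch: print the two proxy-user settings as seen by the local Hadoop
# client configuration. show_proxyuser_conf is a hypothetical helper.
show_proxyuser_conf() {
    if ! command -v hdfs >/dev/null 2>&1; then
        echo "hdfs command not found on this node" >&2
        return 1
    fi
    for key in hadoop.proxyuser.root.groups hadoop.proxyuser.root.hosts; do
        # Any getconf failure (for example, a missing key) is reported
        # as "(not set)".
        value=$(hdfs getconf -confKey "$key" 2>/dev/null) || value="(not set)"
        echo "$key=$value"
    done
}
```

If the values printed on the NameNode host are not `*`, the custom settings were probably not pushed out, which would be consistent with the service still reporting the initial config version.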

Created 07-25-2019 09:28 PM


Running your prescribed tests across all the cluster nodes, I get:

    [root@HW01 groups.d]# clush -ab hostname -f
    ---------------
    HW01
    ---------------
    HW01.ucera.local
    ---------------
    HW02
    ---------------
    HW02.ucera.local
    ---------------
    HW03
    ---------------
    HW03.ucera.local
    ---------------
    HW04
    ---------------
    HW04.ucera.local

    [root@HW01 groups.d]# clush -ab cat /etc/hosts
    ---------------
    HW[01-04] (4)
    ---------------
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.18.4.46 HW01.ucera.local
    172.18.4.47 HW02.ucera.local
    172.18.4.48 HW03.ucera.local
    172.18.4.49 HW04.ucera.local

    [root@HW01 groups.d]# clush -ab ping hw04.ucera.local
    ^CHW03: Killed by signal 2.
    HW04: Killed by signal 2.
    HW02: Killed by signal 2.
    Warning: Caught keyboard interrupt!
    ---------------
    HW01
    ---------------
    PING HW04.ucera.local (172.18.4.49) 56(84) bytes of data.
    64 bytes from HW04.ucera.local (172.18.4.49): icmp_seq=1 ttl=64 time=0.231 ms
    64 bytes from HW04.ucera.local (172.18.4.49): icmp_seq=2 ttl=64 time=0.300 ms
    ---------------
    HW02
    ---------------
    PING HW04.ucera.local (172.18.4.49) 56(84) bytes of data.
    64 bytes from HW04.ucera.local (172.18.4.49): icmp_seq=1 ttl=64 time=0.177 ms
    ---------------
    HW03
    ---------------
    PING HW04.ucera.local (172.18.4.49) 56(84) bytes of data.
    64 bytes from HW04.ucera.local (172.18.4.49): icmp_seq=1 ttl=64 time=0.260 ms
    ---------------
    HW04
    ---------------
    PING HW04.ucera.local (172.18.4.49) 56(84) bytes of data.
    64 bytes from HW04.ucera.local (172.18.4.49): icmp_seq=1 ttl=64 time=0.095 ms
    Keyboard interrupt (HW[01-04] did not complete).

    [root@HW01 groups.d]# clush -ab "telnet hw04.ucera.local 50075"
    HW01: telnet: connect to address 172.18.4.49: No route to host
    HW02: telnet: connect to address 172.18.4.49: No route to host
    HW03: telnet: connect to address 172.18.4.49: No route to host
    HW04: Killed by signal 2.
    Warning: Caught keyboard interrupt!
    ---------------
    HW[01-03] (3)
    ---------------
    Trying 172.18.4.49...
    ---------------
    HW04
    ---------------
    Trying 172.18.4.49...
    Connected to hw04.ucera.local.
    Escape character is '^]'.
    clush: HW[01-03] (3): exited with exit code 1
    Keyboard interrupt.

So, other than telnet not working from HW01-HW03, things seem to be OK. I will continue looking into why telnet can't connect.
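The telnet messages themselves carry a hint. When ping succeeds and the port answers locally on the target, but remote TCP connects fail with "No route to host", a host firewall on the target rejecting the packet is a common cause, whereas "Connection refused" would more likely mean nothing is listening. The sketch below encodes that rule of thumb; `classify_probe` and its labels are hypothetical, and the mapping is a heuristic rather than a diagnosis.

```shell
# Sketch: read telnet output on stdin and print a rough classification.
# classify_probe and its output labels are hypothetical.
classify_probe() {
    output=$(cat)
    case $output in
        *"Connected to"*)       echo "open" ;;
        *"No route to host"*)   echo "rejected-or-unroutable" ;;
        *"Connection refused"*) echo "no-listener" ;;
        *"timed out"*)          echo "dropped-or-down" ;;
        *)                      echo "unknown" ;;
    esac
}
```

For instance, `telnet hw04.ucera.local 50075 2>&1 </dev/null | classify_probe` would print `rejected-or-unroutable` for the HW01-HW03 results shown above.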

Created 07-26-2019 09:10 PM


I think I found the problem. TL;DR: firewalld was still running (the nodes run CentOS 7), when it should be disabled on HDP clusters.

From another community post:

For Ambari to communicate during setup with the hosts it deploys to and manages, certain ports must be open and available. The easiest way to do this is to temporarily disable iptables, as follows:

    systemctl disable firewalld
    service firewalld stop

So apparently iptables and firewalld need to be disabled across the whole cluster (supporting docs can be found here); I had only disabled them on the Ambari installation node. After stopping these services on every node (I recommend using clush), I was able to run the upload job without incident.
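For anyone repeating this, the two commands can be wrapped so that they are previewed before they are run. This is only a sketch: `firewalld_off` and the `RUN` variable are hypothetical names, and it uses `systemctl stop firewalld`, which on CentOS 7 does the same job as the `service firewalld stop` form quoted above.

```shell
# Sketch: stop and disable firewalld on the local node.
# firewalld_off and RUN are hypothetical names. By default the commands
# are only printed; set RUN=1 (as root) to actually execute them.
firewalld_off() {
    for cmd in "systemctl stop firewalld" "systemctl disable firewalld"; do
        if [ "${RUN:-0}" = "1" ]; then
            $cmd || return 1
        else
            echo "$cmd"
        fi
    done
}
```

Across the cluster, the same two commands can be sent with clush as in the earlier tests, for example `clush -ab "systemctl stop firewalld; systemctl disable firewalld"`. Where a firewall has to stay on, opening the required ports individually (for example with `firewall-cmd --permanent --add-port=50075/tcp` followed by `firewall-cmd --reload`) may be an alternative, though Hadoop uses many more ports than this one.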