Ambari-server failed to start namenode after installation.

Labels: Apache Ambari, Apache Hadoop

Created on 06-24-2016 12:41 AM - edited 08-18-2019 05:31 AM

Hi @Jitendra Yadav,

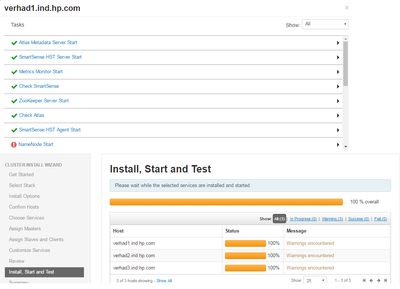

After installing all the components, Ambari is unable to start the NameNode; the start step fails (log below).

There is also no retry option available now, so how can I retry? (Screenshot attached.)

Could you please help?

stderr: /var/lib/ambari-agent/data/errors-2760.txt

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 401, in <module>

NameNode().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 102, in start

namenode(action="start", hdfs_binary=hdfs_binary, upgrade_type=upgrade_type, env=env)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_namenode.py", line 146, in namenode

create_log_dir=True

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 267, in service

Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 158, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 121, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 238, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out

stdout: /var/lib/ambari-agent/data/output-2760.txt

2016-06-23 21:21:41,300 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,300 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,300 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,325 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,325 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,350 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,350 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,350 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,495 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,495 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,495 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,528 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,528 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,559 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,560 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,560 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,562 - Group['spark'] {}

2016-06-23 21:21:41,564 - Group['hadoop'] {}

2016-06-23 21:21:41,564 - Group['users'] {}

2016-06-23 21:21:41,565 - Group['knox'] {}

2016-06-23 21:21:41,565 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,566 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,567 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,568 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,569 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,570 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,571 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,572 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,573 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,574 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,575 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,578 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,579 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,580 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,581 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,582 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,583 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,584 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,585 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,587 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,588 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,589 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,591 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2016-06-23 21:21:41,597 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2016-06-23 21:21:41,598 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}

2016-06-23 21:21:41,599 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,601 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2016-06-23 21:21:41,606 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2016-06-23 21:21:41,607 - Group['hdfs'] {}

2016-06-23 21:21:41,607 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'hdfs']}

2016-06-23 21:21:41,608 - Directory['/etc/hadoop'] {'mode': 0755}

2016-06-23 21:21:41,638 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2016-06-23 21:21:41,639 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}

2016-06-23 21:21:41,661 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2016-06-23 21:21:41,669 - Skipping Execute[('setenforce', '0')] due to not_if

2016-06-23 21:21:41,670 - Directory['/var/log/hadoop'] {'owner': 'root', 'mode': 0775, 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/var/run/hadoop'] {'owner': 'root', 'group': 'root', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,682 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,686 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,687 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2016-06-23 21:21:41,710 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,711 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2016-06-23 21:21:41,722 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2016-06-23 21:21:41,728 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2016-06-23 21:21:42,061 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,061 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,062 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,086 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,087 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,110 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,111 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,111 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,112 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,112 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,113 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,137 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,138 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,161 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,161 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,161 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,170 - Directory['/etc/security/limits.d'] {'owner': 'root', 'group': 'root', 'recursive': True}

2016-06-23 21:21:42,177 - File['/etc/security/limits.d/hdfs.conf'] {'content': Template('hdfs.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2016-06-23 21:21:42,178 - XmlConfig['hadoop-policy.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,190 - Generating config: /usr/hdp/current/hadoop-client/conf/hadoop-policy.xml

2016-06-23 21:21:42,191 - File['/usr/hdp/current/hadoop-client/conf/hadoop-policy.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,201 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,212 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-client.xml

2016-06-23 21:21:42,213 - File['/usr/hdp/current/hadoop-client/conf/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,219 - Directory['/usr/hdp/current/hadoop-client/conf/secure'] {'owner': 'root', 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:42,220 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf/secure', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,231 - Generating config: /usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml

2016-06-23 21:21:42,231 - File['/usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,241 - XmlConfig['ssl-server.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,252 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-server.xml

2016-06-23 21:21:42,253 - File['/usr/hdp/current/hadoop-client/conf/ssl-server.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,260 - XmlConfig['hdfs-site.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,274 - Generating config: /usr/hdp/current/hadoop-client/conf/hdfs-site.xml

2016-06-23 21:21:42,274 - File['/usr/hdp/current/hadoop-client/conf/hdfs-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,324 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2016-06-23 21:21:42,334 - Generating config: /usr/hdp/current/hadoop-client/conf/core-site.xml

2016-06-23 21:21:42,335 - File['/usr/hdp/current/hadoop-client/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,360 - File['/usr/hdp/current/hadoop-client/conf/slaves'] {'content': Template('slaves.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:42,361 - Directory['/hadoop/hdfs/namenode'] {'owner': 'hdfs', 'recursive': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2016-06-23 21:21:42,362 - Called service start with upgrade_type: None

2016-06-23 21:21:42,362 - Ranger admin not installed

2016-06-23 21:21:42,373 - Execute['ls /hadoop/hdfs/namenode | wc -l | grep -q ^0$'] {}

2016-06-23 21:21:42,378 - Execute['yes Y | hdfs --config /usr/hdp/current/hadoop-client/conf namenode -format'] {'path': ['/usr/hdp/current/hadoop-client/bin'], 'user': 'hdfs'}

2016-06-23 21:21:45,451 - Directory['/hadoop/hdfs/namenode/namenode-formatted/'] {'recursive': True}

2016-06-23 21:21:45,451 - Creating directory Directory['/hadoop/hdfs/namenode/namenode-formatted/'] since it doesn't exist.

2016-06-23 21:21:45,454 - File['/etc/hadoop/conf/dfs.exclude'] {'owner': 'hdfs', 'content': Template('exclude_hosts_list.j2'), 'group': 'hadoop'}

2016-06-23 21:21:45,454 - Writing File['/etc/hadoop/conf/dfs.exclude'] because it doesn't exist

2016-06-23 21:21:45,455 - Changing owner for /etc/hadoop/conf/dfs.exclude from 0 to hdfs

2016-06-23 21:21:45,455 - Changing group for /etc/hadoop/conf/dfs.exclude from 0 to hadoop

2016-06-23 21:21:45,455 - Option for start command:

2016-06-23 21:21:45,455 - Directory['/var/run/hadoop'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0755}

2016-06-23 21:21:45,456 - Changing owner for /var/run/hadoop from 0 to hdfs

2016-06-23 21:21:45,456 - Changing group for /var/run/hadoop from 0 to hadoop

2016-06-23 21:21:45,456 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,456 - Directory['/var/log/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,457 - File['/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'] {'action': ['delete'], 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

2016-06-23 21:21:45,461 - Execute['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode''] {'environment': {'HADOOP_LIBEXEC_DIR': '/usr/hdp/current/hadoop-client/libexec'}, 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

Created 06-24-2016 04:11 AM

Do you see any error in the file "/var/lib/ambari-agent/data/errors-2760.txt"? If so, can you please post its contents here?

Also, is the NameNode failing to start every time?

In such cases it is better to complete the installation first and start the component afterwards. Can you finish the installation (even if the NameNode is not starting, do not retry) and then try to start the NameNode again through Ambari?

Created 06-24-2016 07:00 AM

Try restarting the NameNode service from Ambari after the installation completes. If it fails to come up, review the NameNode log file to find out what the issue is.
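To make the log review quicker, something like the following could pull out the most recent ERROR and FATAL lines. This is only a rough sketch: `find_error_lines` is a hypothetical helper name, and it assumes the log uses the usual log4j-style severity words.

```python
import re

# Severity words that typically mark a failed startup in log4j-style logs
# (assumption: the NameNode log follows that convention).
SEVERITY = re.compile(r"\b(ERROR|FATAL)\b")


def find_error_lines(log_path, limit=20):
    """Return up to `limit` of the most recent lines containing ERROR or FATAL."""
    matches = []
    # errors="replace" keeps the scan going if the log has stray bytes.
    with open(log_path, errors="replace") as handle:
        for line in handle:
            if SEVERITY.search(line):
                matches.append(line.rstrip("\n"))
    return matches[-limit:]
```

It could be pointed at the file named in the error output, /var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out, or at the NameNode `.log` file that I would expect to sit in the same directory (the exact `.log` file name is an assumption, so check what is actually there).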

Created 06-29-2016 07:34 PM

There is not much detail on why the NameNode is failing to start. Can you share the NameNode log from an attempt to start the NameNode service? It will help identify what is causing the failure.

Meanwhile, you can test whether the NameNode can be started manually from the command line.

Run the following:

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode

or

/var/lib/ambari-agent/ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ;/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'
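After a manual start, one way to confirm the process actually stayed up is to check the pid file that the Ambari output refers to (/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid). The sketch below is a rough Python equivalent of the `test -f ... && pgrep -F ...` check in that output; `pid_file_is_live` is a hypothetical helper name, and it assumes a POSIX host.

```python
import os


def pid_file_is_live(pid_file):
    """Return True if pid_file exists and names a process that is running."""
    try:
        with open(pid_file) as handle:
            pid = int(handle.read().strip())
    except (OSError, ValueError):
        # Missing, unreadable, or non-numeric pid file.
        return False
    if pid <= 0:
        return False
    try:
        os.kill(pid, 0)  # signal 0 only checks existence, nothing is sent
    except ProcessLookupError:
        return False  # stale pid file: no such process
    except PermissionError:
        return True  # process exists but belongs to another user
    return True
```

If this returns False shortly after the start command, the NameNode most likely exited during startup, and the reason should be in its log.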