Support Questions

Apache Ambari Server installation giving errors while using public repositories (from Cloudera with username authentication)

- Labels: Apache Ambari

Created 07-22-2021 10:09 PM

Hi.

I am trying to install Ambari Server on a three-node cluster with one NameNode and two DataNodes.

Ambari Version: 2.7.3.0

HDP Version: 3.1.0

Linux Version: RedHat 7

Each server can reach the internet, so I am using the public repositories to install Ambari Server. I also have the necessary credentials from Cloudera for the Ambari and HDP repos.

I have followed instructions as mentioned in the following url

My repository base urls are as follows

- Ambari: https://LOGIN:PASSWORD@archive.cloudera.com/p/ambari/2.x/2.7.3.0/centos7/

- HDP: http://LOGIN:PASSWORD@archive.cloudera.com/p/HDP/3.x/3.1.0.0/centos7

- HDP Utils: http://LOGIN:PASSWORD@archive.cloudera.com/p/HDP-UTILS/1.1.0.22/repos/centos7
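
Credentialed base URLs of this shape are easy to mistype, for example with an extra slash after the scheme or a second scheme after the `@`. The following is a minimal Python sketch, not part of the original post, for sanity-checking such a URL before pasting it into the wizard. The function name `check_repo_url` is hypothetical, and the checks only reflect an assumption about what a well-formed URL looks like here (scheme, `LOGIN:PASSWORD@`, then the host).

```python
from urllib.parse import urlsplit


def check_repo_url(url):
    """Return a list of problems found in a credentialed repository base URL.

    Hypothetical helper: it only inspects the URL's shape and never
    contacts the server.
    """
    problems = []
    parts = urlsplit(url)

    if parts.scheme not in ("http", "https"):
        problems.append("scheme must be http or https")
    if not parts.hostname:
        # Happens when there are three slashes after the scheme.
        problems.append("no host found (check the slashes after the scheme)")
    if not parts.username or not parts.password:
        problems.append("missing LOGIN:PASSWORD@ before the host")
    if "://" in parts.path:
        problems.append("a second scheme appears inside the path")
    return problems


if __name__ == "__main__":
    for candidate in (
        "http://LOGIN:PASSWORD@archive.cloudera.com/p/HDP/3.x/3.1.0.0/centos7",
        "https:///LOGIN:PASSWORD@https://archive.cloudera.com/p/ambari/",
    ):
        print(candidate, "->", check_repo_url(candidate) or "looks well-formed")
```

An empty list means only that the URL parses as expected; it does not prove the credentials are accepted by the archive.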

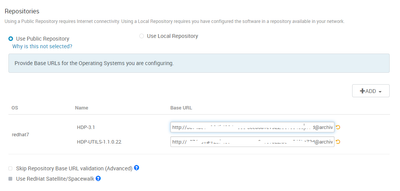

I am using the same URLs in the Select Version step of the installation wizard, with Public Repository selected, as below.

I followed the same steps: all the servers registered, I chose the required services, and then deployed.

I am facing the following errors:

Activity Analyser Install Failure on One server

stderr:

Failed to execute command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SMARTSENSE/package/files/rpm/*.rpm; Exit code: 1; stdout: Loaded plugins: langpacks, product-id, search-disabled-repos

; stderr:

One of the configured repositories failed (Unknown),

and yum doesn't have enough cached data to continue. At this point the only

safe thing yum can do is fail. There are a few ways to work "fix" this:

1. Contact the upstream for the repository and get them to fix the problem.

2. Reconfigure the baseurl/etc. for the repository, to point to a working

upstream. This is most often useful if you are using a newer

distribution release than is supported by the repository (and the

packages for the previous distribution release still work).

3. Run the command with the repository temporarily disabled

yum --disablerepo=<repoid> ...

4. Disable the repository permanently, so yum won't use it by default. Yum

will then just ignore the repository until you permanently enable it

again or use --enablerepo for temporary usage:

yum-config-manager --disable <repoid>

or

subscription-manager repos --disable=<repoid>

5. Configure the failing repository to be skipped, if it is unavailable.

Note that yum will try to contact the repo. when it runs most commands,

so will have to try and fail each time (and thus. yum will be be much

slower). If it is a very temporary problem though, this is often a nice

compromise:

yum-config-manager --save --setopt=<repoid>.skip_if_unavailable=true

Cannot find a valid baseurl for repo: HDP-3.1-repo-3

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SMARTSENSE/package/files/rpm/*.rpm

stdout:

2021-07-23 10:09:25,002 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

2021-07-23 10:09:25,006 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-07-23 10:09:25,007 - Group['livy'] {}

2021-07-23 10:09:25,008 - Group['spark'] {}

2021-07-23 10:09:25,008 - Group['hdfs'] {}

2021-07-23 10:09:25,009 - Group['hadoop'] {}

2021-07-23 10:09:25,009 - Group['users'] {}

2021-07-23 10:09:25,009 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,010 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,011 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,011 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,012 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,013 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,014 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:25,014 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,015 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:25,016 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,017 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,017 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,018 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2021-07-23 10:09:25,019 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2021-07-23 10:09:25,025 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2021-07-23 10:09:25,026 - Group['hdfs'] {}

2021-07-23 10:09:25,026 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

2021-07-23 10:09:25,027 - FS Type: HDFS

2021-07-23 10:09:25,027 - Directory['/etc/hadoop'] {'mode': 0755}

2021-07-23 10:09:25,027 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2021-07-23 10:09:25,043 - Repository['HDP-3.1-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,049 - Repository['HDP-UTILS-1.1.0.22-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,051 - Repository[None] {'action': ['create']}

2021-07-23 10:09:25,052 - File['/tmp/tmpmDXhdK'] {'content': '[HDP-3.1-repo-3]\nname=HDP-3.1-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-3]\nname=HDP-UTILS-1.1.0.22-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0'}

2021-07-23 10:09:25,052 - Writing File['/tmp/tmpmDXhdK'] because contents don't match

2021-07-23 10:09:25,052 - File['/tmp/tmpCCtufc'] {'content': StaticFile('/etc/yum.repos.d/ambari-hdp-3.repo')}

2021-07-23 10:09:25,053 - Writing File['/tmp/tmpCCtufc'] because contents don't match

2021-07-23 10:09:25,054 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,317 - Skipping installation of existing package unzip

2021-07-23 10:09:25,317 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,439 - Skipping installation of existing package curl

2021-07-23 10:09:25,439 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,566 - Skipping installation of existing package hdp-select

2021-07-23 10:09:25,574 - Skipping stack-select on SMARTSENSE because it does not exist in the stack-select package structure.

installing using command: {sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SMARTSENSE/package/files/rpm/*.rpm

Command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SMARTSENSE/package/files/rpm/*.rpm

Exit code: 1

Std Out: Loaded plugins: langpacks, product-id, search-disabled-repos

Std Err:

One of the configured repositories failed (Unknown),

and yum doesn't have enough cached data to continue. At this point the only

safe thing yum can do is fail. There are a few ways to work "fix" this:

1. Contact the upstream for the repository and get them to fix the problem.

2. Reconfigure the baseurl/etc. for the repository, to point to a working

upstream. This is most often useful if you are using a newer

distribution release than is supported by the repository (and the

packages for the previous distribution release still work).

3. Run the command with the repository temporarily disabled

yum --disablerepo=<repoid> ...

4. Disable the repository permanently, so yum won't use it by default. Yum

will then just ignore the repository until you permanently enable it

again or use --enablerepo for temporary usage:

yum-config-manager --disable <repoid>

or

subscription-manager repos --disable=<repoid>

5. Configure the failing repository to be skipped, if it is unavailable.

Note that yum will try to contact the repo. when it runs most commands,

so will have to try and fail each time (and thus. yum will be be much

slower). If it is a very temporary problem though, this is often a nice

compromise:

yum-config-manager --save --setopt=<repoid>.skip_if_unavailable=true

Cannot find a valid baseurl for repo: HDP-3.1-repo-3

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/3.0/services/SMARTSENSE/package/files/rpm/*.rpm

2021-07-23 10:09:26,860 - Skipping stack-select on SMARTSENSE because it does not exist in the stack-select package structure.

Command failed after 1 tries

Timeline Service 1.5 warning in 2nd server

2021-07-23 10:09:25,037 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

2021-07-23 10:09:25,041 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-07-23 10:09:25,042 - Group['livy'] {}

2021-07-23 10:09:25,043 - Group['spark'] {}

2021-07-23 10:09:25,044 - Group['hdfs'] {}

2021-07-23 10:09:25,044 - Group['hadoop'] {}

2021-07-23 10:09:25,044 - Group['users'] {}

2021-07-23 10:09:25,044 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,045 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,046 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,047 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,048 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,048 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,049 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:25,050 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,050 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:25,051 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

2021-07-23 10:09:25,052 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,053 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:25,053 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2021-07-23 10:09:25,055 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2021-07-23 10:09:25,061 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2021-07-23 10:09:25,061 - Group['hdfs'] {}

2021-07-23 10:09:25,062 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

2021-07-23 10:09:25,062 - FS Type: HDFS

2021-07-23 10:09:25,062 - Directory['/etc/hadoop'] {'mode': 0755}

2021-07-23 10:09:25,062 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2021-07-23 10:09:25,078 - Repository['HDP-3.1-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,084 - Repository['HDP-UTILS-1.1.0.22-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,086 - Repository[None] {'action': ['create']}

2021-07-23 10:09:25,086 - File['/tmp/tmpQNi9Ro'] {'content': '[HDP-3.1-repo-3]\nname=HDP-3.1-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-3]\nname=HDP-UTILS-1.1.0.22-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0'}

2021-07-23 10:09:25,087 - Writing File['/tmp/tmpQNi9Ro'] because contents don't match

2021-07-23 10:09:25,087 - File['/tmp/tmp7l4TFJ'] {'content': StaticFile('/etc/yum.repos.d/ambari-hdp-3.repo')}

2021-07-23 10:09:25,088 - Writing File['/tmp/tmp7l4TFJ'] because contents don't match

2021-07-23 10:09:25,088 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,390 - Skipping installation of existing package unzip

2021-07-23 10:09:25,391 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,525 - Skipping installation of existing package curl

2021-07-23 10:09:25,525 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,662 - Skipping installation of existing package hdp-select

2021-07-23 10:09:25,728 - call[('ambari-python-wrap', u'/usr/bin/hdp-select', 'versions')] {}

2021-07-23 10:09:25,831 - call returned (1, 'Traceback (most recent call last):\n File "/usr/bin/hdp-select", line 461, in <module>\n printVersions()\n File "/usr/bin/hdp-select", line 300, in printVersions\n for f in os.listdir(root):\nOSError: [Errno 2] No such file or directory: \'/usr/hdp\'')

2021-07-23 10:09:26,027 - Command repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

2021-07-23 10:09:26,027 - Applicable repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

2021-07-23 10:09:26,028 - Looking for matching packages in the following repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

Command aborted. Reason: 'Server considered task failed and automatically aborted it'

Command failed after 1 tries

Data Node Install Warning in 3rd server

2021-07-23 10:09:24,955 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

2021-07-23 10:09:24,959 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-07-23 10:09:24,960 - Group['livy'] {}

2021-07-23 10:09:24,962 - Group['spark'] {}

2021-07-23 10:09:24,962 - Group['hdfs'] {}

2021-07-23 10:09:24,962 - Group['hadoop'] {}

2021-07-23 10:09:24,962 - Group['users'] {}

2021-07-23 10:09:24,962 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,963 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,964 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

2021-07-23 10:09:24,965 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,966 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

2021-07-23 10:09:24,966 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,967 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:24,968 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,968 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2021-07-23 10:09:24,969 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

2021-07-23 10:09:24,970 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,971 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2021-07-23 10:09:24,971 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2021-07-23 10:09:24,972 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2021-07-23 10:09:24,979 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2021-07-23 10:09:24,979 - Group['hdfs'] {}

2021-07-23 10:09:24,980 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

2021-07-23 10:09:24,980 - FS Type: HDFS

2021-07-23 10:09:24,980 - Directory['/etc/hadoop'] {'mode': 0755}

2021-07-23 10:09:24,980 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2021-07-23 10:09:24,995 - Repository['HDP-3.1-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,001 - Repository['HDP-UTILS-1.1.0.22-repo-3'] {'base_url': '', 'action': ['prepare'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-3', 'mirror_list': None}

2021-07-23 10:09:25,003 - Repository[None] {'action': ['create']}

2021-07-23 10:09:25,003 - File['/tmp/tmp37xwLS'] {'content': '[HDP-3.1-repo-3]\nname=HDP-3.1-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-3]\nname=HDP-UTILS-1.1.0.22-repo-3\nbaseurl=\n\npath=/\nenabled=1\ngpgcheck=0'}

2021-07-23 10:09:25,004 - Writing File['/tmp/tmp37xwLS'] because contents don't match

2021-07-23 10:09:25,004 - File['/tmp/tmpIosjBs'] {'content': StaticFile('/etc/yum.repos.d/ambari-hdp-3.repo')}

2021-07-23 10:09:25,005 - Writing File['/tmp/tmpIosjBs'] because contents don't match

2021-07-23 10:09:25,005 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,274 - Skipping installation of existing package unzip

2021-07-23 10:09:25,274 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,387 - Skipping installation of existing package curl

2021-07-23 10:09:25,388 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2021-07-23 10:09:25,504 - Skipping installation of existing package hdp-select

2021-07-23 10:09:25,563 - call[('ambari-python-wrap', u'/usr/bin/hdp-select', 'versions')] {}

2021-07-23 10:09:25,661 - call returned (1, 'Traceback (most recent call last):\n File "/usr/bin/hdp-select", line 461, in <module>\n printVersions()\n File "/usr/bin/hdp-select", line 300, in printVersions\n for f in os.listdir(root):\nOSError: [Errno 2] No such file or directory: \'/usr/hdp\'')

2021-07-23 10:09:25,849 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-07-23 10:09:25,850 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

2021-07-23 10:09:25,868 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2021-07-23 10:09:25,882 - Command repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

2021-07-23 10:09:25,882 - Applicable repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

2021-07-23 10:09:25,883 - Looking for matching packages in the following repositories: HDP-3.1-repo-3, HDP-UTILS-1.1.0.22-repo-3

Command aborted. Reason: 'Server considered task failed and automatically aborted it'

Command failed after 1 tries

When I looked at the ambari-hdp repo file on all servers, I noticed that the base URL for HDP and HDP-UTILS is blank.

[HDP-3.1-repo-3]

name=HDP-3.1-repo-3

baseurl=

path=/

enabled=1

gpgcheck=0

[HDP-UTILS-1.1.0.22-repo-3]

name=HDP-UTILS-1.1.0.22-repo-3

baseurl=

path=/

enabled=1

gpgcheck=0
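
A blank `baseurl=` like the one above can be detected mechanically on each host. The following is a minimal Python sketch, not from the original thread, that parses repo-file text with the standard `configparser` module and lists the sections that have neither a `baseurl` nor a `mirrorlist`. The function name `repos_with_blank_baseurl` is hypothetical, and the sketch assumes the file is plain INI as shown.

```python
import configparser


def repos_with_blank_baseurl(repo_text):
    """Return the repo ids whose baseurl and mirrorlist are both empty or missing."""
    # interpolation=None so that '%' characters in URLs are taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)

    blank = []
    for section in parser.sections():
        baseurl = parser.get(section, "baseurl", fallback="").strip()
        mirrorlist = parser.get(section, "mirrorlist", fallback="").strip()
        if not baseurl and not mirrorlist:
            blank.append(section)
    return blank


if __name__ == "__main__":
    # Path taken from the agent log in the post; adjust if yours differs.
    with open("/etc/yum.repos.d/ambari-hdp-3.repo") as handle:
        print(repos_with_blank_baseurl(handle.read()))
```

Run against the file shown above, this would report both `HDP-3.1-repo-3` and `HDP-UTILS-1.1.0.22-repo-3`, which matches the yum error about `HDP-3.1-repo-3`.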

As far as internet connectivity to the base URLs goes, I am able to open the URLs in a browser and also from the CLI using wget with the username authentication.

I have failed many times to understand the origin of these errors and request your help in resolving this issue. I have been stuck on this step for a month. Kindly help to resolve this matter; I would greatly appreciate it.

Awaiting your response keenly

Thanks

Created 07-26-2021 12:01 AM

@SSRIL Can you keep only one OS repository link and remove the other OS entries from the Repository page?

Also, can you check the repo files under /etc/yum.repos.d/?

Created 07-27-2021 10:36 PM

The issue has been resolved.

Summarising the resolution below so that it helps others:

As suspected, the HDP repository was not completely configured.

So we deleted all the HDP repos from the servers.

We created a new HDP repo on the ambari-server host with the respective URLs, along with the username and password, for HDP and HDP-UTILS.

We ran yum clean all and yum repolist.
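
The repo-creation step can be sketched in Python as follows. This is an illustration only, not the exact file used in the thread: the helper names `with_credentials` and `render_repo`, the repo ids, and the target file name are all hypothetical, and the section layout simply mirrors the one Ambari generated in the agent log. Percent-encoding of the login and password follows the generic URL rules; whether yum accepts every encoded character was not verified here.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def with_credentials(url, login, password):
    """Return url with LOGIN:PASSWORD@ placed in front of the host."""
    parts = urlsplit(url)
    # Percent-encode so characters such as '@' or '/' cannot break the URL.
    userinfo = f"{quote(login, safe='')}:{quote(password, safe='')}"
    netloc = f"{userinfo}@{parts.hostname}"
    if parts.port:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


def render_repo(repo_id, baseurl):
    """Render one yum repo section in the layout seen in the agent log."""
    return (
        f"[{repo_id}]\n"
        f"name={repo_id}\n"
        f"baseurl={baseurl}\n"
        "path=/\n"
        "enabled=1\n"
        "gpgcheck=0\n"
    )


if __name__ == "__main__":
    # Repo ids below are placeholders chosen for this sketch.
    repos = {
        "HDP-3.1": "http://archive.cloudera.com/p/HDP/3.x/3.1.0.0/centos7",
        "HDP-UTILS-1.1.0.22": "http://archive.cloudera.com/p/HDP-UTILS/1.1.0.22/repos/centos7",
    }
    text = "\n".join(
        render_repo(repo_id, with_credentials(url, "LOGIN", "PASSWORD"))
        for repo_id, url in repos.items()
    )
    # Print instead of writing; redirect to a file under /etc/yum.repos.d/
    # (for example hdp.repo), then run `yum clean all` and `yum repolist`.
    print(text)
```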

We then started the Ambari Server installation as described in the official documentation.

We faced issues while installing Hive, especially with the mysql-connector-jar, whose file size was around 24 MB. Thanks for your help in providing the correct jar for the installation.

The official documentation is very helpful for beginners like us.

Now all the services are up and running successfully.

Again, thank you and your team for your help in resolving this issue.

Created 07-23-2021 12:41 AM

@SSRIL From the stack trace, I can see that it is failing to install the SmartSense HST package. The SmartSense HST package is shipped with the Ambari repository.

Make sure that the Ambari repository exists on the host.

For the HDP repo, can you share a complete screenshot of the repositories page?

Created 07-23-2021 04:24 AM

Thank you for your response.

The Ambari repo does exist on the host, since Ambari Server was installed from it. SmartSense is included, but somehow it cannot be downloaded, which is my concern.

The hdp repo from the screenshot is as follows

I have masked the username and password for security purposes. It is similar to the following:

HDP: http://LOGIN:PASSWORD@archive.cloudera.com/p/HDP/3.x/3.1.0.0/centos7

HDPUTILS: http://LOGIN:PASSWORD@archive.cloudera.com/p/HDP-UTILS/1.1.0.22/repos/centos7/

I am able to access the above URLs with the username and password from both the browser and the CLI; however, in the hdp.repo file all the baseurls are blank.
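
Masking credentials by hand before posting repo files or logs is error-prone. A small Python sketch, offered as one possible approach rather than anything used in this thread, replaces the `user:password@` part of any http or https URL with the `LOGIN:PASSWORD` placeholder. The function name `mask_credentials` is hypothetical.

```python
import re

# Matches "http://user:secret@" or "https://user:secret@" up to and including '@'.
CREDENTIALS = re.compile(r"(?P<scheme>https?://)[^/\s:@]+:[^/\s@]+@")


def mask_credentials(text):
    """Replace embedded URL credentials with the LOGIN:PASSWORD placeholder."""
    return CREDENTIALS.sub(r"\g<scheme>LOGIN:PASSWORD@", text)


if __name__ == "__main__":
    line = "baseurl=http://alice:s3cret@archive.cloudera.com/p/HDP/3.x/3.1.0.0/centos7"
    print(mask_credentials(line))
```

URLs without credentials, including ones with a port such as `https://host:8080/path`, are left unchanged because the pattern requires an `@` before the first slash.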

Created 07-23-2021 07:13 AM

@SSRIL Can you check the Ambari Server logs that are generated when you click the Save button on the repository page?
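
When reading the Ambari Server log for this, repeated version-definition warnings can be grouped so that repository failures stand out. The following is a rough Python sketch under two assumptions: that the log is at `/var/log/ambari-server/ambari-server.log`, and that the warnings have the form `Could not load version definition for <stack> identified by <url>. Server returned HTTP response code: <code>`. The function name `summarize_vdf_failures` is hypothetical.

```python
import re
from collections import Counter

# Assumed warning format; adjust the pattern if your log lines differ.
VDF_FAILURE = re.compile(
    r"Could not load version definition for (?P<stack>\S+) identified by "
    r"(?P<url>\S+)\. Server returned HTTP response code: (?P<code>\d{3})"
)


def summarize_vdf_failures(log_text):
    """Count version-definition load failures per (stack, HTTP status code)."""
    counts = Counter()
    for match in VDF_FAILURE.finditer(log_text):
        counts[(match["stack"], int(match["code"]))] += 1
    return dict(counts)


if __name__ == "__main__":
    # Assumed log location; change it if Ambari logs elsewhere on your host.
    with open("/var/log/ambari-server/ambari-server.log") as handle:
        for (stack, code), count in sorted(summarize_vdf_failures(handle.read()).items()):
            print(f"{stack}: HTTP {code} x {count}")
```

A count of HTTP 401 responses only shows that those requests were sent without accepted credentials; it does not by itself explain a blank `baseurl` on the agents.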

Created 07-25-2021 10:35 PM

Hi @Scharan

I have tried restarting ambari-server and have attached the logs (even though the Ambari server is not successfully deployed yet).

The following errors can be seen in the logs:

2021-07-26 10:42:47,527 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=HDFS, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/HDFS/repos

2021-07-26 10:42:47,528 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=HIVE, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/HIVE/repos

2021-07-26 10:42:47,529 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=OOZIE, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/OOZIE/repos

2021-07-26 10:42:47,530 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=PIG, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/PIG/repos

2021-07-26 10:42:47,531 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=SQOOP, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/SQOOP/repos

2021-07-26 10:42:47,531 INFO [main] StackServiceDirectory:130 - No repository information defined for , serviceName=YARN, repoFolder=/var/lib/ambari-server/resources/stacks/HDP/2.0/services/YARN/repos

........

https://archive.cloudera.com/p/HDP/2.x/2.6.5.1150/sles12/HDP-2.6.5.1150-31.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1150/sles12/HDP-2.6.5.1150-31.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1150/sles12/HDP-2.6.5.1150-31.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,174 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.5.1050/sles12/HDP-2.6.5.1050-37.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1050/sles12/HDP-2.6.5.1050-37.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1050/sles12/HDP-2.6.5.1050-37.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,174 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.5.1100/sles12/HDP-2.6.5.1100-53.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1100/sles12/HDP-2.6.5.1100-53.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1100/sles12/HDP-2.6.5.1100-53.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,176 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/sles12/HDP-2.6.2.0-205.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/sles12/HDP-2.6.2.0-205.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/sles12/HDP-2.6.2.0-205.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,179 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.3.0/sles12/HDP-2.6.3.0-235.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.3.0/sles12/HDP-2.6.3.0-235.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.3.0/sles12/HDP-2.6.3.0-235.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,180 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/sles12/HDP-2.6.5.0-292.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/sles12/HDP-2.6.5.0-292.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/sles12/HDP-2.6.5.0-292.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,181 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.5.1175/sles12/HDP-2.6.5.1175-1.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1175/sles12/HDP-2.6.5.1175-1.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.1175/sles12/HDP-2.6.5.1175-1.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,183 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.2.14/sles12/HDP-2.6.2.14-5.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.14/sles12/HDP-2.6.2.14-5.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.14/sles12/HDP-2.6.2.14-5.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,184 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.1.0/sles12/HDP-2.6.1.0-129.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.1.0/sles12/HDP-2.6.1.0-129.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.1.0/sles12/HDP-2.6.1.0-129.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,187 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/sles12/HDP-2.6.4.0-91.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/sles12/HDP-2.6.4.0-91.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/sles12/HDP-2.6.4.0-91.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,231 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/suse11sp3/HDP-2.6.5.0-292.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/suse11sp3/HDP-2.6.5.0-292.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/suse11sp3/HDP-2.6.5.0-292.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,231 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/suse11sp3/HDP-2.6.4.0-91.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/suse11sp3/HDP-2.6.4.0-91.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.4.0/suse11sp3/HDP-2.6.4.0-91.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

2021-07-26 10:42:49,232 INFO [Stack Version Loading Thread] RepoVdfCallable:130 - Stack HDP-2.6 cannot resolve OS ubuntu18 to the supported ones: redhat-ppc7,suse12,suse11,ubuntu16,redhat7,debian7,redhat6,ubuntu14,ubuntu12. Family: ubuntu18

2021-07-26 10:42:49,233 WARN [Stack Version Loading Thread] RepoVdfCallable:142 - Could not load version definition for HDP-2.6 identified by https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/suse11sp3/HDP-2.6.2.0-205.xml. Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/suse11sp3/HDP-2.6.2.0-205.xml

java.io.IOException: Server returned HTTP response code: 401 for URL: https://archive.cloudera.com/p/HDP/2.x/2.6.2.0/suse11sp3/HDP-2.6.2.0-205.xml

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1876)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1474)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.getInputStream(HttpsURLConnectionImpl.java:254)

at java.net.URL.openStream(URL.java:1045)

at org.apache.ambari.server.state.repository.VersionDefinitionXml.load(VersionDefinitionXml.java:543)

at org.apache.ambari.server.state.stack.RepoVdfCallable.timedVDFLoad(RepoVdfCallable.java:154)

at org.apache.ambari.server.state.stack.RepoVdfCallable.mergeDefinitions(RepoVdfCallable.java:136)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:79)

at org.apache.ambari.server.state.stack.RepoVdfCallable.call(RepoVdfCallable.java:41)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

...... Ambari is going through every repository, regardless of the OS (RHEL or Ubuntu), and each request returns

Server returned HTTP response code: 401.

This confuses me even more, because I have only just started the server and have not yet reached step one of the installation wizard (where we specify the repos).
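
The URLs in the log above carry no credentials, so a 401 from the authenticated archive is plausibly expected there. One way to check this is to request one of the logged version definition files by hand, once without and once with the Cloudera credentials. The following is only a sketch: it assumes `curl` is installed, `LOGIN:PASSWORD` is a placeholder, and the helper names `http_status` and `explain_status` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status code returned for a URL.
# The optional second argument is "user:password" for HTTP basic auth.
http_status() {
    url=$1
    creds=${2:-}
    if [ -n "$creds" ]; then
        curl -s -o /dev/null -w '%{http_code}' -u "$creds" "$url"
    else
        curl -s -o /dev/null -w '%{http_code}' "$url"
    fi
}

# Hypothetical helper: turn a status code into a short diagnosis.
explain_status() {
    case "$1" in
        200) echo "ok" ;;
        401) echo "credentials missing or rejected" ;;
        404) echo "URL not found" ;;
        *)   echo "unexpected status $1" ;;
    esac
}

# Example (placeholders, not real credentials):
#   vdf=https://archive.cloudera.com/p/HDP/2.x/2.6.5.0/sles12/HDP-2.6.5.0-292.xml
#   explain_status "$(http_status "$vdf")"
#   explain_status "$(http_status "$vdf" "LOGIN:PASSWORD")"
```

If the second call reports "ok" while the first reports a 401, the credentials themselves are fine and the failing requests are simply being made without them.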

Created 07-26-2021 12:01 AM

@SSRIL Can you keep only one OS repository link and remove the other OS entries from the Repository page?

Also, can you check the repos under /etc/yum.repos.d/?
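
A quick way to do that check is to print the baseurl line of every repo file, which makes stale or credential-less HDP entries easy to spot. This is a minimal sketch; the helper name `list_baseurls` is hypothetical, and it assumes the directory contains at least one `.repo` file.

```shell
#!/bin/sh
# Hypothetical helper: print "file:baseurl=..." for each repo file in a directory.
# Defaults to /etc/yum.repos.d when no directory is given.
list_baseurls() {
    dir=${1:-/etc/yum.repos.d}
    grep -H '^baseurl' "$dir"/*.repo
}

# Example:
#   list_baseurls
```

Note that the output will include any credentials embedded in the URLs, so mask them before pasting it into a public thread.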

Created 07-26-2021 12:35 AM

yum repolist gave me the following repos

IDCRHEL7 REHL7 31,656

IDCRHEL7OPT RHEL7OPT 22,379

IDCRHEL7XTRA RHEL7XTRA 1,378

ambari-2.7.3.0 ambari Version - ambari-2.7.3.0 13

nodesource/x86_64 Node.js Packages for Enterprise Linux 7 - x86_64 52

yarn Yarn Repository 51

These are the repo files in /etc/yum.repos.d. I am not sure which one to delete.

ambari.repo

elasticsearch.repo

mas1.repo

nodesource-el7.repo

redhat.repo

yarn.repo

Created 07-27-2021 10:36 PM

The issue has been resolved.

Summarising the resolution below in case it helps others:

As suspected, the HDP repository was not completely configured.

So we deleted all the HDP repos from the servers.

We created a new HDP repo on the ambari-server host with the respective URLs, including the username and password, for HDP and HDP-UTILS.

We then ran yum clean all and yum repolist.

Then we started the Ambari server installation as described in the official documentation.
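
For anyone repeating these steps, the repo file could look roughly like the one generated below. This is a sketch under assumptions, not the exact file we used: the helper name `write_hdp_repo`, the section ids, the use of https, and `gpgcheck=0` are all illustrative, and `LOGIN`/`PASSWORD` are placeholders. The paths are the HDP and HDP-UTILS base URLs from the original question.

```shell
#!/bin/sh
# Hypothetical helper: write an hdp.repo file into the given directory.
# Usage: write_hdp_repo DIR LOGIN PASSWORD
# Assumption: a password with special characters may need URL-encoding first.
write_hdp_repo() {
    dir=$1
    login=$2
    password=$3
    base="https://${login}:${password}@archive.cloudera.com/p"
    cat > "${dir}/hdp.repo" <<EOF
[HDP-3.1.0.0]
name=HDP Version - HDP-3.1.0.0
baseurl=${base}/HDP/3.x/3.1.0.0/centos7
gpgcheck=0
enabled=1

[HDP-UTILS-1.1.0.22]
name=HDP-UTILS Version - HDP-UTILS-1.1.0.22
baseurl=${base}/HDP-UTILS/1.1.0.22/repos/centos7
gpgcheck=0
enabled=1
EOF
}

# On the ambari-server host (as root), with real credentials:
#   write_hdp_repo /etc/yum.repos.d LOGIN PASSWORD
#   yum clean all
#   yum repolist
```

After `yum repolist`, both the HDP and HDP-UTILS repos should appear in the list with a non-zero package count before the wizard is started.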

We faced issues while installing Hive, especially with the mysql-connector-jar, whose file size was around 24 MB. Thanks for your help in providing the correct jar for the installation.

The official documentation is very helpful for beginners like us.

All the services are now up and running.

Again, thank you and your team for your help in resolving this issue.