Cloudera Community: Support Questions

Apache NiFi: Insert JSON data into a single-column table after concatenating all of the JSON keys and values.

Labels: Apache NiFi

Created 02-16-2023 08:32 AM

Please help me design a data flow in NiFi. I need to insert incoming JSON data into a table that has a single column. The requirement is that I concatenate all of the keys and values and insert the result.
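The sketch below shows one way to read "concatenate all the keys and values". Each top-level key is joined to its value with `:`, and the pairs are joined with `,`. The question does not show its data or the exact output format, so the separators, the function name `concat_keys_values` and the sample record are all assumptions for illustration.

```python
import json


def concat_keys_values(json_text: str, kv_sep: str = ":", pair_sep: str = ",") -> str:
    """Join every top-level key of a JSON object with its value into one string.

    kv_sep and pair_sep are assumed separators; the original question
    does not specify the output format.
    """
    obj = json.loads(json_text)
    if not isinstance(obj, dict):
        raise ValueError("expected a JSON object")
    parts = []
    for key, value in obj.items():
        # Strings are used as-is; numbers, booleans, null and nested
        # objects/arrays are re-serialized as compact JSON text.
        text = value if isinstance(value, str) else json.dumps(value, separators=(",", ":"))
        parts.append(f"{key}{kv_sep}{text}")
    return pair_sep.join(parts)


# Hypothetical record; the thread does not include the real input data.
record = '{"id": 1, "name": "alice", "active": true}'
print(concat_keys_values(record))  # id:1,name:alice,active:true
```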

Created 02-17-2023 07:08 AM

Hi,

Thanks for the information. I have come across this situation before. I'm not sure whether there is a better way, but after you split the data into individual records you can use the following processors:

ExtractText -> PutSQL

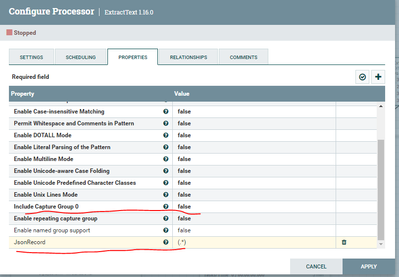

For ExtractText:

1- Add a dynamic property named JsonRecord (the attribute referenced in the PutSQL statement below) whose regular expression captures the entire JSON content of the incoming flowfile.

Note: Be careful if an individual record can be large (more than 1024 characters). In that case, increase the "Maximum Buffer Size" and "Maximum Capture Group Length" properties accordingly; otherwise the data will be truncated.
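The ExtractText step can be approximated in Python to show what the dynamic property does. This is a sketch, not NiFi code, and Python's `re` stands in for Java's regex engine. It assumes the property is named `JsonRecord` with the regular expression `(?s)(^.*$)`, because the original screenshot of the property is not available. It also models the capture-group truncation from the note, using a 1024-character limit.

```python
import re

# Assumed ExtractText configuration (the original screenshot is not available):
#   dynamic property name : JsonRecord
#   dynamic property value: (?s)(^.*$)   -> capture group 1 holds the whole content
PATTERN = re.compile(r"(?s)(^.*$)")
MAX_CAPTURE_GROUP_LENGTH = 1024  # limit mentioned in the note above


def extract_json_record(content: str, max_len: int = MAX_CAPTURE_GROUP_LENGTH) -> dict:
    """Mimic ExtractText copying capture group 1 into the JsonRecord attribute."""
    match = PATTERN.search(content)
    if match is None:
        # Cannot happen for this pattern, but ExtractText routes
        # non-matching flowfiles to 'unmatched'.
        return {}
    # Anything beyond max_len characters is cut off: the truncation the note warns about.
    return {"JsonRecord": match.group(1)[:max_len]}


small = '{\n  "id": 1,\n  "name": "alice"\n}'
large = '{"payload": "' + "x" * 2000 + '"}'
print(extract_json_record(small)["JsonRecord"] == small)  # True
print(len(extract_json_record(large)["JsonRecord"]))      # 1024
```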

For PutSQL: once you have configured the JDBC Connection Pool, you can set the SQL Statement property to something like this:

`insert into myTable (jsonCol) values ('${JsonRecord}')`
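`${JsonRecord}` is substituted directly into a single-quoted SQL literal, so a record that contains an apostrophe produces invalid SQL. The Python sketch below shows the failure and the usual fix, which is to double each single quote. It uses an in-memory SQLite database as a stand-in for the real target database.

In NiFi itself, the same doubling could probably be done with Expression Language, e.g. `${JsonRecord:replace("'", "''")}`; treat that expression as an untested suggestion. PutSQL can also take `?` placeholders bound from `sql.args.N.type`/`sql.args.N.value` attributes, which avoids quoting altogether.

```python
import sqlite3

# PutSQL statement from the answer; {value} marks where ${JsonRecord} is substituted.
TEMPLATE = "insert into myTable (jsonCol) values ('{value}')"


def render(attribute_value: str, escape: bool) -> str:
    """Build the SQL text PutSQL would receive after attribute substitution."""
    if escape:
        # In a SQL string literal a single quote is written as two single quotes.
        attribute_value = attribute_value.replace("'", "''")
    return TEMPLATE.format(value=attribute_value)


conn = sqlite3.connect(":memory:")  # stand-in for the real target database
conn.execute("create table myTable (jsonCol text)")

record = '{"name": "O\'Brien"}'  # JSON containing an apostrophe

try:
    conn.execute(render(record, escape=False))
    unescaped_ok = True
except sqlite3.OperationalError as exc:
    unescaped_ok = False
    print("unescaped insert failed:", exc)

conn.execute(render(record, escape=True))
stored = conn.execute("select jsonCol from myTable").fetchone()[0]
print(stored == record)  # True
```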

If that helps, please accept the solution.

Created 02-16-2023 09:36 AM

Hi,

Can you provide sample JSON data and show how you expect it to be saved?

Created 02-16-2023 08:26 PM

Hi SAM, thank you for your response. My flow is ListenHTTP (to receive the JSON data) --> ConvertRecord (to validate the JSON) --> SplitJson (to split it), so I now have individual JSON objects. Next, I need to concatenate all the keys and values of each JSON object and insert the result into a table with a single column called JSON_DATA.

This is my input data:
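The ListenHTTP -> ConvertRecord -> SplitJson flow described above turns one JSON array into one flowfile per object. The sketch below is a rough Python equivalent of the split step. It assumes the request body is a top-level JSON array, since the actual input data is not included in the thread. It also assumes SplitJson is configured with a JsonPath such as `$[*]`. The function name `split_json` is hypothetical.

```python
import json


def split_json(array_text: str) -> list:
    """Approximate SplitJson over a top-level array: one output per element.

    Assumes a JsonPath such as $[*]; each element is re-serialized compactly,
    which may differ from SplitJson's exact formatting.
    """
    data = json.loads(array_text)
    if not isinstance(data, list):
        raise ValueError("expected a top-level JSON array")
    return [json.dumps(item, separators=(",", ":")) for item in data]


# Hypothetical request body received by ListenHTTP.
body = '[{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]'
for flowfile_content in split_json(body):
    print(flowfile_content)
# {"id":1,"name":"alice"}
# {"id":2,"name":"bob"}
```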

Created 02-19-2023 09:45 PM

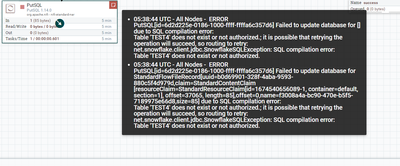

Hi SAM, thank you for your response. I am facing one more error while inserting data into the table. Even though I have a table named test4 in Snowflake, the PutSQL processor keeps reporting "The Table 'test4' doesn't exist or not Authorized" (I did grant the permissions too). This is my SQL statement: `INSERT INTO test4 (JSON_DATA) values ('${JsonRecord}')`.
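The thread does not record what fixed this error. One possible cause of this Snowflake message is identifier case. Unquoted identifiers are resolved in uppercase, while double-quoted identifiers keep their exact case, so a table created as `"test4"` is not found by an unquoted `test4`. Another possible cause is that the JDBC connection's default role, database or schema differs from where the table lives. In that case a fully qualified name such as `DATABASE.SCHEMA.TEST4` may help. The Python sketch below models only the case rule; `resolve_identifier` is a hypothetical helper, not a Snowflake API.

```python
def resolve_identifier(name: str) -> str:
    """Hypothetical helper modelling Snowflake's identifier case rule.

    Unquoted identifiers resolve to uppercase; double-quoted identifiers keep
    their exact case (a doubled "" inside quotes stands for one ").
    """
    if len(name) >= 2 and name.startswith('"') and name.endswith('"'):
        return name[1:-1].replace('""', '"')
    return name.upper()


# Hypothetical: the table was created with a quoted, lowercase name.
existing_tables = {resolve_identifier('"test4"')}        # {'test4'}
print(resolve_identifier("test4") in existing_tables)    # False: looks up TEST4
print(resolve_identifier('"test4"') in existing_tables)  # True
```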

Any help will be appreciated.

Created 02-19-2023 10:34 PM

Hi SAM, it works for me now and there are no more errors. Thank you for your help.