Apache Nifi - Using PutParquet, the HDFS file format transferred remains native (.txt)

Labels: Apache NiFi

Created 01-10-2021 10:30 AM

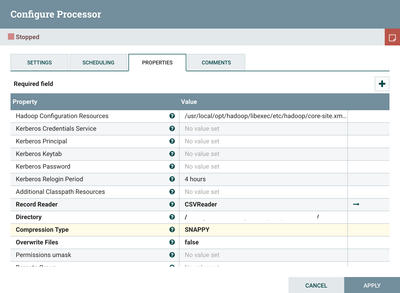

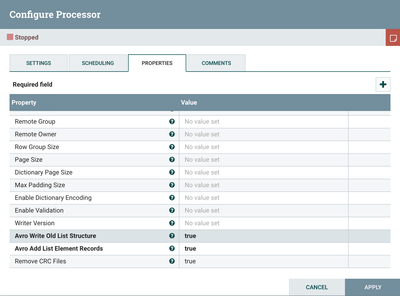

I used the PutParquet processor with a CSVReader to convert .txt files to Parquet format and move them to HDFS.

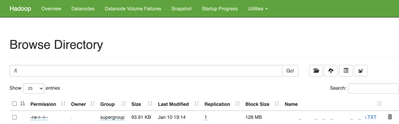

However, when I check in Hadoop's Browse Directory, the files still have the .txt extension.

Is this normal? Are they actually saved in Parquet format?

Thank you! @ApacheNifi

Configuration of PutParquet processor:

Hadoop:

Created 01-11-2021 05:52 AM

@Lallagreta You should be able to define the filename, or change it to whatever you want. That said, the filename doesn't dictate the file type, so a Parquet file can be saved with a .txt extension.
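To illustrate that the extension and the content are independent, here is a minimal sketch that checks a local copy of the file for the Parquet magic bytes. An unencrypted Parquet file starts and ends with the 4-byte marker `PAR1`. The function name `looks_like_parquet` and the example path are made up for illustration, and this is only a quick sanity check, not a full validation of the file.

```python
import os

# Unencrypted Parquet files begin and end with this 4-byte marker.
PARQUET_MAGIC = b"PAR1"


def looks_like_parquet(path):
    """Return True if the file at `path` has the Parquet magic bytes
    at both the start and the end, whatever its extension is."""
    # Smallest possible layout: magic (4) + footer length (4) + magic (4).
    if os.path.getsize(path) < 12:
        return False
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(-4, os.SEEK_END)
        tail = f.read(4)
    return head == PARQUET_MAGIC and tail == PARQUET_MAGIC


if __name__ == "__main__":
    # Hypothetical local copy of a file written by PutParquet.
    example = "myfile.txt"
    if os.path.exists(example):
        print(example, "looks like Parquet:", looks_like_parquet(example))
```

If this returns True for a file named `something.txt`, the content is Parquet and only the name is misleading.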

One recommendation I have is to use the Parquet command-line tools while testing your use case. This is the best way to validate that the files look right, have the right schema, and contain the right results.

https://pypi.org/project/parquet-tools/

I apologize, I do not have any exact samples, but from what I recall from a year ago, you should be able to find a simple command to check the schema of a file, and another to show the data. You may have to copy your HDFS file to the local file system to inspect it from the command line.

If this answer resolves your issue or allows you to move forward, please choose to ACCEPT this solution and close this topic. If you have further questions on this topic, please comment here or feel free to private message me. If you have new questions related to your use case, please create a separate topic and feel free to tag me in your post.

Thanks,

Steven
