Best Practices for Storm Deployment on a Hadoop Cluster using Ambari. How would you allocate components in production?

- Labels: Apache Ambari, Apache Storm

Created 01-20-2016 04:56 PM


What are the best practices for deploying Storm components on a cluster for scalability and growth? We are thinking of having dedicated nodes for Storm on YARN. Also, would anything go on an edge node?

For example, the thought is to dedicate three nodes (S1, S2, S3) to Storm, with the following allocations:

Storm Nimbus:

- Choose S1 as the Storm master and deploy Storm Nimbus there. Or is it a best practice not to co-locate Nimbus with any worker node?

- The S1 node will also host the Storm UI

Storm Supervisors/Workers:

- Choose S1, S2, S3 to deploy Storm Supervisors

ZooKeeper Cluster:

- Since Kafka is usually used with Storm, have a separate ZooKeeper cluster for Kafka and Storm.

- DON'T put the ZooKeeper cluster on the Kafka nodes (K1, K2, K3).

- Put ZooKeeper on the Storm nodes (S1, S2, S3)

Storm UI:

- Will be on the same node as Nimbus: S1 or Edge

DRPC Server:

- What is the best practice for placing this?

In summary, if we have three dedicated nodes for Storm, the thinking is to allocate them as follows:

S1 Node:

- Storm Nimbus/Storm UI (or is it a best practice to keep Storm Nimbus off the worker nodes and put it on an edge node instead?)

- Storm Supervisor

- Zookeeper

S2 Node:

- Storm Supervisor

- Zookeeper

S3 Node:

- Storm Supervisor

- Zookeeper

Edge Node:

- Storm Nimbus/Storm UI (only if it is a best practice to keep Storm Nimbus off the worker nodes)

Finally, would the DRPC server go on the Nimbus node? Any thoughts on this? Am I on the right track? Would anything go on an edge node?
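To make the proposal easier to review, the S1/S2/S3 layout above can be written down as data and checked mechanically. This is only a minimal sketch: the component labels and the `review_layout` helper are hypothetical names for illustration, and the odd-ensemble-size check is a general ZooKeeper guideline rather than something stated in this thread.

```python
# Proposed layout from the question (node names S1..S3 come from the post;
# the component labels are illustrative, not Ambari identifiers).
layout = {
    "S1": {"NIMBUS", "STORM_UI", "SUPERVISOR", "ZOOKEEPER"},
    "S2": {"SUPERVISOR", "ZOOKEEPER"},
    "S3": {"SUPERVISOR", "ZOOKEEPER"},
}


def review_layout(layout):
    """Return a list of warnings for a node -> set-of-components mapping."""
    warnings = []
    # Flag any node where Nimbus shares a host with a Supervisor,
    # which is the co-location concern raised in the question.
    for node, components in sorted(layout.items()):
        if "NIMBUS" in components and "SUPERVISOR" in components:
            warnings.append(f"{node}: Nimbus shares a host with a Supervisor")
    # Assumption: an odd-sized ZooKeeper ensemble is preferred for quorum.
    zk_nodes = [n for n, c in layout.items() if "ZOOKEEPER" in c]
    if len(zk_nodes) % 2 == 0:
        warnings.append(f"ZooKeeper ensemble has an even size ({len(zk_nodes)})")
    return warnings


warnings = review_layout(layout)
for line in warnings:
    print(line)
```

Moving `NIMBUS` and `STORM_UI` to a separate entry in `layout` clears the co-location warning, which makes it easy to compare the alternatives in the question.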

Created 01-21-2016 06:14 AM


Hi @Ancil McBarnett, my 2 cents:

- Nothing on edge nodes; you have no idea what users will do there.

- Nimbus, Storm UI and DRPC on one of the cluster master nodes. If this is a stand-alone Storm and Kafka cluster, designate a master node and put them there together with Ambari.

- Supervisors on dedicated nodes. In an HDFS cluster you can co-locate them with DataNodes.

- Dedicated Kafka broker nodes, but see below.

- Dedicated ZK for Kafka. However, with Kafka 0.8.2 or higher, if consumers don't keep offsets in ZK, traffic is low to medium, and you have at least 3 brokers, you can start by co-locating the Kafka ZK with the brokers. In that case ZK should use a dedicated disk.
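The conditions in the last bullet can be read as a simple rule of thumb. Here is a minimal sketch of that rule; the function name, parameter names, and the `"low"`/`"medium"`/`"high"` traffic labels are hypothetical and only restate the four conditions listed above.

```python
def can_colocate_kafka_zk(kafka_version, offsets_in_zk, traffic, broker_count):
    """Return True if the Kafka ZooKeeper may start out on the broker nodes.

    Encodes the four conditions from the reply: Kafka 0.8.2 or higher,
    consumers not storing offsets in ZooKeeper, low to medium traffic,
    and at least 3 brokers.
    """
    # Compare the first three numeric version parts, e.g. "0.8.2.1" -> (0, 8, 2).
    version = tuple(int(part) for part in kafka_version.split(".")[:3])
    return (
        version >= (0, 8, 2)
        and not offsets_in_zk
        and traffic in ("low", "medium")
        and broker_count >= 3
    )


print(can_colocate_kafka_zk("0.8.2", offsets_in_zk=False, traffic="low", broker_count=3))
```

Even when the function returns `True`, the reply's caveat still applies: ZooKeeper should get its own disk on the broker hosts.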

Created 02-02-2016 04:24 PM


@Ancil McBarnett accept best answer 🙂

Created on 02-05-2016 01:53 AM - edited 08-19-2019 04:54 AM


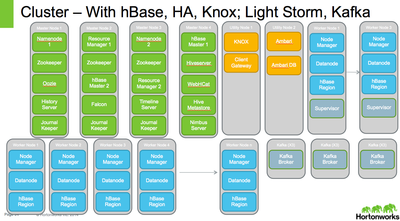

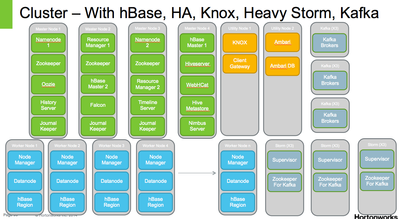

This is the picture I have come up with

Created 02-05-2016 03:26 AM


Cute pic! And everything looks good, however, even in the "Light Storm, Kafka" case I'd create a dedicated Kafka ZooKeeper and put it on Kafka brokers, so that ZKs write to their own disks (for example, 5 disks for Kafka, 1 for ZK).

Created 03-31-2017 12:21 PM


Hi, I am planning to create an Ambari-managed Hadoop/Storm cluster, and since this is all new to me I have some doubts about the best way to set it up. Here is what I have for resources:

- Platform: AWS (8 EC2 instances: 1 master, 4 slaves, 3 workers (ZooKeepers))

- Tool: since I want to automate the setup, I will use Terraform, Ansible and Blueprint to set up the whole environment

- I did some research and came to the component layout in the table below. I need some advice/opinions: is this a good path?

Thanks

| MASTER | SLAVE | ZOO |
| --- | --- | --- |
| NAMENODE | SECONDARY_NAMENODE | DATANODE |
| NIMBUS | RESOURCE_MANAGER | NODEMANAGER |
| DRPC_SERVER | SUPERVISOR | ZOOKEEPER_SERVER |
| STORM_UI_SERVER | ZOOKEEPER_CLIENT | METRICS_MONITOR |
| ZOOKEEPER_CLIENT | METRICS_MONITOR | MAPREDUCE2_CLIENT |
| HDFS_CLIENT | HDFS_CLIENT | HDFS_CLIENT |
| PIG | PIG | PIG |
| TEZ_CLIENT | TEZ_CLIENT | TEZ_CLIENT |
| YARN_CLIENT | YARN_CLIENT | YARN_CLIENT |
| METRICS_COLLECTOR | HISTORY_SERVER | |
| METRICS_GRAFANA | MAPREDUCE2_CLIENT | |
| APP_TIMELINE_SERVER | | |
| HIVE_SERVER | HCAT | |
| HIVE_METASTORE | WEBHCAT_SERVER | |
| MYSQL_SERVER | HIVE_CLIENT | |
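Since the plan is to drive the setup from a Blueprint, the three columns above map naturally onto Blueprint host groups. The following is a rough sketch that builds such a structure in Python, assuming the usual Ambari Blueprint JSON shape (`Blueprints` plus `host_groups` with `name`, `cardinality` and `components`). The component lists are abbreviated from the table, the cardinalities come from the 1/4/3 instance split, and the `HDP` / `2.5` stack values and the `build_blueprint` helper are placeholders, not values from this thread.

```python
import json

# Abbreviated component lists copied from the table; extend each list with
# the remaining rows as needed. Cardinalities follow the 1 master / 4 slaves /
# 3 ZooKeeper nodes described in the post.
HOST_GROUPS = {
    "master": ("1", ["NAMENODE", "NIMBUS", "DRPC_SERVER", "STORM_UI_SERVER"]),
    "slave": ("4", ["SECONDARY_NAMENODE", "RESOURCE_MANAGER", "SUPERVISOR"]),
    "zoo": ("3", ["DATANODE", "NODEMANAGER", "ZOOKEEPER_SERVER"]),
}


def build_blueprint(host_groups, stack_name, stack_version):
    """Build a Blueprint-shaped dict from a name -> (cardinality, components) map."""
    return {
        # Placeholder stack values; use the stack actually registered in Ambari.
        "Blueprints": {"stack_name": stack_name, "stack_version": stack_version},
        "host_groups": [
            {
                "name": name,
                "cardinality": cardinality,
                "components": [{"name": component} for component in components],
            }
            for name, (cardinality, components) in host_groups.items()
        ],
    }


blueprint = build_blueprint(HOST_GROUPS, "HDP", "2.5")
print(json.dumps(blueprint, indent=2))
```

Keeping the layout in one dictionary makes it straightforward to generate the JSON from Ansible or a small script and to diff layout changes in version control.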
