Best Way to Transform & Process Data

Labels: Apache Flume, Apache Hadoop, Apache Hive

Created on 12-11-2016 02:37 AM - edited 08-18-2019 06:18 AM

Hi everyone reading this.

As the title says, I'm looking for tips from you all to help me make the best decision.

I've been working with Hortonworks Sandbox 2.5 for about a month.

I've been playing with Flume, collecting data from Twitter and trying to transform it into a readable format inside a Hive table.

Streaming-twitter-data-using-flume/

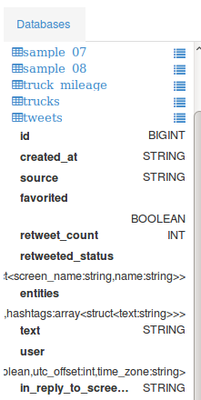

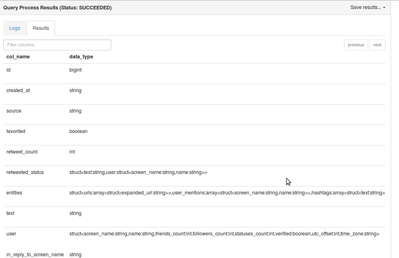

So far, I have successfully loaded data into a Hive table (called tweets) using this guide: Tweets using Hive

My problem is the following.

At this step it's not easy for me to select and process the data. I would like to make it more readable (is that possible? If so, can you tell me how?). I would also like to filter the data.

I want to know, for example:

1. The most used words

2. The most common times for tweeting

3. The most active users

and so on.

Any technique or technology is welcome. I am trying to learn as fast as I can, and your help will always be appreciated.

Let's work together if you want, you're welcome.

Regards,

Cristian

Created 12-11-2016 02:44 AM

I highly recommend you use Apache NiFi instead of Flume for most, if not all, data movement into and out of Hadoop.

For your use case, there is a prebuilt NiFi template to push tweets to Hive and Solr (for searching and trending).

If you want further analysis (e.g. most used words), this can be done several ways.

1. Real time: use Spark Streaming with NiFi and microbatch your counts.

2. Batch: run Hive SQL from NiFi.

3. Batch: call a Hive script from NiFi to calculate the analysis every x interval.

4. Batch: set up an Oozie job to calculate the analysis every interval (may be kicked off from NiFi as well).

So you have options. Hope that helps.
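To make the kind of analysis discussed above concrete, here is a minimal sketch in plain Python. It is not a NiFi, Spark, or Hive implementation, only an illustration of the counting logic that any of the options above would run at scale. The sample records and the field names (`text`, `created_at`, `user.screen_name`) are assumptions based on the usual Twitter JSON layout, and `STOP_WORDS` is a hypothetical, deliberately tiny list.

```python
from collections import Counter
from datetime import datetime
import re

# Hypothetical sample records. The field names are an assumption about
# how the tweets are stored; adjust them to match the real table.
tweets = [
    {"text": "Learning Hadoop and Hive today",
     "created_at": "Sun Dec 11 02:37:00 +0000 2016",
     "user": {"screen_name": "alice"}},
    {"text": "Hive makes Hadoop queries easier",
     "created_at": "Sun Dec 11 02:44:00 +0000 2016",
     "user": {"screen_name": "bob"}},
    {"text": "NiFi moves data into Hadoop",
     "created_at": "Mon Dec 12 14:05:00 +0000 2016",
     "user": {"screen_name": "alice"}},
]

# Hypothetical stop-word list; a real one would be much longer.
STOP_WORDS = {"and", "the", "a", "into", "to", "of"}


def top_words(records, n=3):
    """Return the n most frequent non-stop-words across all tweet texts."""
    words = Counter()
    for tweet in records:
        for word in re.findall(r"[a-z0-9_#@']+", tweet["text"].lower()):
            if word not in STOP_WORDS:
                words[word] += 1
    return words.most_common(n)


def tweets_per_hour(records):
    """Count tweets by the hour of day found in created_at."""
    fmt = "%a %b %d %H:%M:%S %z %Y"
    return Counter(
        datetime.strptime(tweet["created_at"], fmt).hour for tweet in records
    )


def active_users(records, n=3):
    """Return the n users with the most tweets."""
    return Counter(
        tweet["user"]["screen_name"] for tweet in records
    ).most_common(n)


print(top_words(tweets, 2))
print(tweets_per_hour(tweets).most_common(1))
print(active_users(tweets, 1))
```

The same three aggregations map onto a `GROUP BY` with a count in Hive SQL, or onto per-batch counters merged into a running total in a streaming job.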

Created 12-12-2016 02:26 AM

Dear Sunile,

First of all, thanks for answering my question. I really appreciate your recommendation, and I'll definitely use NiFi next time.

For my use case, what do you think about trying to process the data with Hive? I'm thinking about creating new tables and then inserting data filtered by the parameters I'm interested in (as I explained before).

I'd like to know if there is a best practice (or an alternative, like programming something in Java for Hadoop or Scala for Spark) for continuing to transform the data along the path I have chosen and getting results.

Regards
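The idea in this reply, creating a new table that holds only the filtered rows and then querying it, can be sketched as follows. This is a stand-in that uses Python's built-in `sqlite3` module rather than Hive, so it only illustrates the "create table as select" pattern; the column names (`screen_name`, `lang`, `text`), the table name `tweets_en`, and the sample rows are all hypothetical.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for Hive.
conn = sqlite3.connect(":memory:")

# Hypothetical, simplified version of the raw tweets table.
conn.execute("CREATE TABLE tweets (screen_name TEXT, lang TEXT, text TEXT)")
conn.executemany(
    "INSERT INTO tweets VALUES (?, ?, ?)",
    [
        ("alice", "en", "Learning Hive"),
        ("bob", "es", "Aprendiendo Hive"),
        ("alice", "en", "Hadoop again"),
    ],
)

# Build a new table containing only the rows of interest
# (here: English-language tweets), keeping only the needed columns.
conn.execute(
    "CREATE TABLE tweets_en AS "
    "SELECT screen_name, text FROM tweets WHERE lang = 'en'"
)

# Query the filtered table: tweets per user, most active first.
rows = conn.execute(
    "SELECT screen_name, COUNT(*) AS n "
    "FROM tweets_en GROUP BY screen_name ORDER BY n DESC"
).fetchall()

print(rows)
```

Hive also supports creating a table from a `SELECT`, so the overall shape of the statements should carry over, although storage formats and syntax details would differ.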

Created 12-13-2016 04:05 PM

You can definitely use Hive, but you are not using the easy button. "Best practice" is an abused term in our industry. A best practice for customer A may not be a best practice for customer B. It's all about cluster size, hardware config, and use case, which together determine what the "best practice" is for your specific situation. If you want to transform data, the entire industry is moving to Spark. Spark is nice since it has multiple APIs for the same dataset. I recommend you open another HCC question if you are looking for a "best practice" on a specific use case. I recommend NiFi for what you have identified.