Support Questions

Both of our HDFS NameNodes are down and they won't start

Created 12-16-2021 02:34 PM

Hi, we are currently trying to restart the HDFS NameNodes in our cluster (HA), but they will not start. The restart takes a long time and finally fails with the following output in Ambari:

Operation failed: Call From XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX to XX-XXX-XX-XXXX.XXXXX.XX:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

2021-12-16 17:02:46,616 - call returned (255, '21/12/16 17:02:46 INFO ipc.Client: Retrying connect to server: XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX:8020. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)\nOperation failed: Call From XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX to XX-XXX-XX-XXXX.XXXXX.XX:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused')

2021-12-16 17:02:46,616 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl --negotiate -u : -s -k '"'"'https://XX-XXX-XX-XXXX.XXXXX.XX:50470/jmx?qry=Hadoop:service=NameNode,name=FSNamesystem'"'"' 1>/tmp/tmpUzuIj9 2>/tmp/tmp8V3Uai''] {'quiet': False}

2021-12-16 17:02:46,684 - call returned (7, '')

2021-12-16 17:02:46,684 - call['hdfs haadmin -ns metrodev -getServiceState nn2'] {'logoutput': True, 'user': 'hdfs'}

21/12/16 17:02:48 INFO ipc.Client: Retrying connect to server: XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX:8020. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)

Operation failed: Call From XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX to XX-XXX-XX-XXXX.XXXXX.XX:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

2021-12-16 17:02:48,615 - call returned (255, '21/12/16 17:02:48 INFO ipc.Client: Retrying connect to server: XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX:8020. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)\nOperation failed: Call From XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX to XX-XXX-XX-XXXX.XXXXX.XX:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused')

2021-12-16 17:02:48,615 - NameNode HA states: active_namenodes = [], standby_namenodes = [], unknown_namenodes = [(u'nn1', 'XX-XXX-XX-XXXX.XXXXX.XX:50470'), (u'nn2', 'XX-XXX-XX-XXXX.XXXXX.XX:50470')]

2021-12-16 17:02:48,615 - Will retry 3 time(s), caught exception: No active NameNode was found.. Sleeping for 5 sec(s)
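Ambari's check boils down to asking each NameNode for its HA state with `hdfs haadmin`. A minimal sketch of running the same query by hand, assuming the nameservice `metrodev` and the service IDs `nn1` and `nn2` shown in the output above; the `ha_states` function name is illustrative only. Since both NameNodes refuse connections on port 8020, this mainly confirms that neither process is running.

```shell
#!/bin/sh
# Ask each NameNode for its HA state, like the Ambari check above does.
# NAMESERVICE defaults to "metrodev" (the nameservice in the Ambari output).
NAMESERVICE="${NAMESERVICE:-metrodev}"

ha_states() {
  for nn in "$@"; do
    # -getServiceState prints "active" or "standby"; it exits non-zero
    # (255 in the output above) when the NameNode refuses the connection.
    if state="$(hdfs haadmin -ns "$NAMESERVICE" -getServiceState "$nn" 2>/dev/null)"; then
      echo "$nn: $state"
    else
      echo "$nn: unreachable"
    fi
  done
}
```

Run it as the hdfs user, e.g. `ha_states nn1 nn2`; two `unreachable` lines match what Ambari reports as `unknown_namenodes`.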

In the NameNode log (XXXX-XXXX-XXXX-XX-XXX-XX-XXXXX.XXXX.XX.log) I get the following messages:

2021-12-16 17:03:57,212 ERROR namenode.EditLogInputStream (EditLogFileInputStream.java:nextOpImpl(192)) - caught exception initializing https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true

javax.net.ssl.SSLHandshakeException: Error while authenticating with endpoint: https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.wrapExceptionWithMessage(KerberosAuthenticator.java:232)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:216)

at org.apache.hadoop.security.authentication.client.AuthenticatedURL.openConnection(AuthenticatedURL.java:348)

at org.apache.hadoop.hdfs.web.URLConnectionFactory.openConnection(URLConnectionFactory.java:219)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog$1.run(EditLogFileInputStream.java:426)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog$1.run(EditLogFileInputStream.java:420)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.hadoop.security.SecurityUtil.doAsUser(SecurityUtil.java:515)

at org.apache.hadoop.security.SecurityUtil.doAsCurrentUser(SecurityUtil.java:509)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog.getInputStream(EditLogFileInputStream.java:419)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.init(EditLogFileInputStream.java:139)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.nextOpImpl(EditLogFileInputStream.java:190)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.nextOp(EditLogFileInputStream.java:248)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

Caused by: javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateExpiredException: NotAfter: Thu Dec 16 15:58:08 EST 2021

at sun.security.ssl.Alerts.getSSLException(Alerts.java:192)

at sun.security.ssl.SSLSocketImpl.fatal(SSLSocketImpl.java:1946)

at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:316)

at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:310)

at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1639)

at sun.security.ssl.ClientHandshaker.processMessage(ClientHandshaker.java:223)

at sun.security.ssl.Handshaker.processLoop(Handshaker.java:1037)

at sun.security.ssl.Handshaker.process_record(Handshaker.java:965)

at sun.security.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:1064)

at sun.security.ssl.SSLSocketImpl.performInitialHandshake(SSLSocketImpl.java:1367)

at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1395)

at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1379)

at sun.net.www.protocol.https.HttpsClient.afterConnect(HttpsClient.java:559)

at sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(AbstractDelegateHttpsURLConnection.java:185)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.connect(HttpsURLConnectionImpl.java:167)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:189)

... 33 more

Caused by: java.security.cert.CertificateExpiredException: NotAfter: Thu Dec 16 15:58:08 EST 2021

at sun.security.x509.CertificateValidity.valid(CertificateValidity.java:274)

at sun.security.x509.X509CertImpl.checkValidity(X509CertImpl.java:629)

at sun.security.validator.SimpleValidator.engineValidate(SimpleValidator.java:201)

at sun.security.validator.Validator.validate(Validator.java:262)

at sun.security.ssl.X509TrustManagerImpl.validate(X509TrustManagerImpl.java:330)

at sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:237)

at sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:113)

at org.apache.hadoop.security.ssl.ReloadingX509TrustManager.checkServerTrusted(ReloadingX509TrustManager.java:135)

at sun.security.ssl.AbstractTrustManagerWrapper.checkServerTrusted(SSLContextImpl.java:1099)

at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1621)

... 44 more

2021-12-16 17:03:57,215 ERROR namenode.RedundantEditLogInputStream (RedundantEditLogInputStream.java:nextOp(222)) - Got error reading edit log input stream https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true; failing over to edit log https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true

org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274488048; expected file to go up to 274488109

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:197)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

2021-12-16 17:03:57,216 INFO namenode.RedundantEditLogInputStream (RedundantEditLogInputStream.java:nextOp(177)) - Fast-forwarding stream 'https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true' to transaction ID 274488049

2021-12-16 17:03:57,223 ERROR namenode.EditLogInputStream (EditLogFileInputStream.java:nextOpImpl(192)) - caught exception initializing https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true

javax.net.ssl.SSLHandshakeException: Error while authenticating with endpoint: https://XX-XXX-XX-XXXX.XXXXX.XX:8481/getJournal?jid=metrodev&segmentTxId=274488049&storageInfo=-64%3A1482798275%3A1538749182266%3ACID-4128f9aa-86b4-4add-9c9a-38c3b06c7384&inProgressOk=true

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.wrapExceptionWithMessage(KerberosAuthenticator.java:232)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:216)

at org.apache.hadoop.security.authentication.client.AuthenticatedURL.openConnection(AuthenticatedURL.java:348)

at org.apache.hadoop.hdfs.web.URLConnectionFactory.openConnection(URLConnectionFactory.java:219)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog$1.run(EditLogFileInputStream.java:426)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog$1.run(EditLogFileInputStream.java:420)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)

at org.apache.hadoop.security.SecurityUtil.doAsUser(SecurityUtil.java:515)

at org.apache.hadoop.security.SecurityUtil.doAsCurrentUser(SecurityUtil.java:509)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream$URLLog.getInputStream(EditLogFileInputStream.java:419)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.init(EditLogFileInputStream.java:139)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.nextOpImpl(EditLogFileInputStream.java:190)

at org.apache.hadoop.hdfs.server.namenode.EditLogFileInputStream.nextOp(EditLogFileInputStream.java:248)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

Caused by: javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateExpiredException: NotAfter: Thu Dec 16 15:58:09 EST 2021

at sun.security.ssl.Alerts.getSSLException(Alerts.java:192)

at sun.security.ssl.SSLSocketImpl.fatal(SSLSocketImpl.java:1946)

at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:316)

at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:310)

at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1639)

at sun.security.ssl.ClientHandshaker.processMessage(ClientHandshaker.java:223)

at sun.security.ssl.Handshaker.processLoop(Handshaker.java:1037)

at sun.security.ssl.Handshaker.process_record(Handshaker.java:965)

at sun.security.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:1064)

at sun.security.ssl.SSLSocketImpl.performInitialHandshake(SSLSocketImpl.java:1367)

at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1395)

at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1379)

at sun.net.www.protocol.https.HttpsClient.afterConnect(HttpsClient.java:559)

at sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(AbstractDelegateHttpsURLConnection.java:185)

at sun.net.www.protocol.https.HttpsURLConnectionImpl.connect(HttpsURLConnectionImpl.java:167)

at org.apache.hadoop.security.authentication.client.KerberosAuthenticator.authenticate(KerberosAuthenticator.java:189)

... 33 more

Caused by: java.security.cert.CertificateExpiredException: NotAfter: Thu Dec 16 15:58:09 EST 2021

at sun.security.x509.CertificateValidity.valid(CertificateValidity.java:274)

at sun.security.x509.X509CertImpl.checkValidity(X509CertImpl.java:629)

at sun.security.validator.SimpleValidator.engineValidate(SimpleValidator.java:201)

at sun.security.validator.Validator.validate(Validator.java:262)

at sun.security.ssl.X509TrustManagerImpl.validate(X509TrustManagerImpl.java:330)

at sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:237)

at sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:113)

at org.apache.hadoop.security.ssl.ReloadingX509TrustManager.checkServerTrusted(ReloadingX509TrustManager.java:135)

at sun.security.ssl.AbstractTrustManagerWrapper.checkServerTrusted(SSLContextImpl.java:1099)

at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1621)

... 44 more

2021-12-16 17:03:57,224 ERROR namenode.FSImage (FSEditLogLoader.java:loadEditRecords(222)) - Error replaying edit log at offset 0. Expected transaction ID was 274488049

org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274488048; expected file to go up to 274488109

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:197)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

2021-12-16 17:03:57,335 WARN namenode.FSNamesystem (FSNamesystem.java:loadFromDisk(716)) - Encountered exception loading fsimage

org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 274488049

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:226)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

Caused by: org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274488048; expected file to go up to 274488109

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:197)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

... 12 more

2021-12-16 17:03:57,338 INFO handler.ContextHandler (ContextHandler.java:doStop(910)) - Stopped o.e.j.w.WebAppContext@44e3a2b2{/,null,UNAVAILABLE}{/hdfs}

2021-12-16 17:03:57,340 INFO server.AbstractConnector (AbstractConnector.java:doStop(318)) - Stopped ServerConnector@2101b44a{SSL,[ssl, http/1.1]}{XX-XXX-XX-XXXX.XXXXX.XX:50470}

2021-12-16 17:03:57,340 INFO handler.ContextHandler (ContextHandler.java:doStop(910)) - Stopped o.e.j.s.ServletContextHandler@134d26af{/static,file:///usr/hdp/3.0.1.0-187/hadoop-hdfs/webapps/static/,UNAVAILABLE}

2021-12-16 17:03:57,341 INFO handler.ContextHandler (ContextHandler.java:doStop(910)) - Stopped o.e.j.s.ServletContextHandler@421bba99{/logs,file:///var/log/hadoop/hdfs/,UNAVAILABLE}

2021-12-16 17:03:57,342 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(210)) - Stopping NameNode metrics system...

2021-12-16 17:03:57,343 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:publishMetricsFromQueue(141)) - timeline thread interrupted.

2021-12-16 17:03:57,344 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(216)) - NameNode metrics system stopped.

2021-12-16 17:03:57,344 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:shutdown(607)) - NameNode metrics system shutdown complete.

2021-12-16 17:03:57,344 ERROR namenode.NameNode (NameNode.java:main(1715)) - Failed to start namenode.

org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 274488049

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:226)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

Caused by: org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274488048; expected file to go up to 274488109

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:197)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

... 12 more

2021-12-16 17:03:57,345 INFO util.ExitUtil (ExitUtil.java:terminate(210)) - Exiting with status 1: org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 274488049

2021-12-16 17:03:57,347 INFO namenode.NameNode (LogAdapter.java:info(51)) - SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX

************************************************************/

Could you please help?

Thank you
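The traces above point at why the edit-log reads fail: the TLS handshake with the JournalNode HTTPS endpoint (port 8481) is rejected with `CertificateExpiredException`, with expiry times of Thu Dec 16 15:58:08 and 15:58:09 EST 2021. Two different times suggest more than one JournalNode certificate expired, although that is an inference from the log alone. A minimal sketch for confirming this, assuming the `grep`, `sed` and `openssl` command-line tools are available; the function names are hypothetical, and the log path and host are placeholders.

```shell
#!/bin/sh
# Print each distinct certificate expiry date reported in a NameNode log.
expired_cert_dates() {
  grep -o 'CertificateExpiredException: NotAfter: .*' "$1" |
    sed 's/.*NotAfter: //' |
    sort -u
}

# Print the notAfter date of the certificate a host actually serves,
# e.g. a JournalNode on port 8481. Requires the openssl CLI and network access.
served_cert_enddate() {
  echo | openssl s_client -connect "$1:$2" -servername "$1" 2>/dev/null |
    openssl x509 -noout -enddate
}
```

For example, `expired_cert_dates /var/log/hadoop/hdfs/<namenode-log>` lists the expiry dates found in the log, and `served_cert_enddate <journalnode-host> 8481` prints a `notAfter=` line for the certificate that JournalNode serves now. If that date is in the past, the JournalNode certificates presumably have to be renewed, and trusted by the NameNodes, before the shared edits can be replayed.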

Created 12-16-2021 08:05 PM

Could you please give me the steps to follow when the fsimage and edit logs are corrupt in an HA setup (2 NameNodes)? I am still not able to start either NameNode.

Thank you

Created 12-16-2021 08:09 PM

According to the log, namenode2 is not able to start because of this:

org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274488048; expected file to go up to 274488109

Any idea on how to fix this?
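The exception identifies the segment being replayed: it starts at `segmentTxId=274488049` (from the `getJournal` URL) and should end at txid 274488109. The end-of-file is reported at txid 274488048, one before the segment start, so it appears that no transactions from that segment were read at all, which fits the SSL handshake failures in the same log better than a truncated file; treat this as an inference, not a diagnosis. A minimal sketch to check each JournalNode for that segment, assuming the standard `edits_<start>-<end>` and `edits_inprogress_<start>` file names with 19-digit zero-padded transaction IDs; the function names are hypothetical.

```shell
#!/bin/sh
# Build the file name of a finalized edit-log segment, e.g.
# edits_0000000000274488049-0000000000274488109
segment_file_name() {
  printf 'edits_%019d-%019d\n' "$1" "$2"
}

# Report whether that segment, or an in-progress segment starting at the
# same txid, exists in a journal "current" directory.
check_segment() {
  dir="$1"; start="$2"; end="$3"
  name="$(segment_file_name "$start" "$end")"
  inprog="$(printf 'edits_inprogress_%019d' "$start")"
  if [ -f "$dir/$name" ]; then
    echo "present: $name"
  elif [ -f "$dir/$inprog" ]; then
    echo "in progress: $inprog"
  else
    echo "missing: $name"
  fi
}
```

For example, run `check_segment /hadoop/hdfs/journal/metrodev/current 274488049 274488109` on each JournalNode host, assuming the journal ID `metrodev` from the `jid=` parameter is also the directory name.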

Created 12-17-2021 08:56 PM

This issue seems linked to your previous post. Your last healthy NameNode was nn01, right? The assumption here is that you are logged in as root.

Instructions to fix that one JournalNode:

1) Put both nn01 and nn02 in safe mode (NN HA)

$ sudo su - hdfs

[hdfs@host ~]$ hdfs dfsadmin -safemode enter

Safe mode is ON in nn01/<nn01_IP>:8020

Safe mode is ON in nn02/<nn02_IP>:8020

2) Save Namespace

[hdfs@host ~]$ hdfs dfsadmin -saveNamespace

Save namespace successful for nn01/<nn01_IP>:8020

Save namespace successful for nn02/<nn02_IP>:8020

3) Back up (zip/tar) the journal directory from a working JournalNode (nn01) and copy it to the non-working JournalNode (nn02), to a location such as

/hadoop/hdfs/journal/<Cluster_name>/current

4) Leave safe mode

[hdfs@host ~]$ hdfs dfsadmin -safemode leave

Safe mode is OFF in nn01/<nn01_IP>:8020

Safe mode is OFF in nn02/<nn02_IP>:8020

5) Restart HDFS

From Ambari, start nn01 first; once it is up, start nn02.

Please let me know.
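The steps above can be sketched as shell functions, assuming they run as the `hdfs` user, that at least one NameNode is up (safe mode and `saveNamespace` both need a running NameNode), and that copying the archive to the other JournalNode host is done by hand. The function names and archive path are hypothetical.

```shell
#!/bin/sh
# Steps 1-2: enter safe mode and save the namespace on both NameNodes.
safemode_and_save() {
  hdfs dfsadmin -safemode enter || return 1
  hdfs dfsadmin -saveNamespace || return 1
}

# Step 3: archive a healthy JournalNode's "current" directory so it can be
# copied to the broken JournalNode. Both paths are placeholders.
backup_journal_dir() {
  src="$1"; archive="$2"
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")"
}

# Step 4: leave safe mode once the journal directories are in place.
leave_safemode() {
  hdfs dfsadmin -safemode leave
}
```

For example: `safemode_and_save`, then `backup_journal_dir /hadoop/hdfs/journal/<Cluster_name>/current /tmp/jn-current.tgz` on the healthy JournalNode, copy and unpack the archive on the broken one, and finally `leave_safemode`.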

Created on 12-18-2021 01:58 PM - edited 12-18-2021 02:12 PM

HI @Shelton

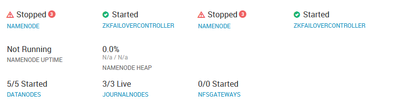

The thing is from the ambari UI console we have the following view:

As you can see both namenode are down is there a way to start them back?

Created 12-19-2021 04:24 AM

Yes, you obviously cannot enter safe mode while the NameNodes are down.

I can see that the JournalNodes and ZKFCs are all up. Can you run the command below on the last known good NameNode, nn01? I am assuming you are running it as root.

su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start namenode"

If nn01 starts without any issue, run the same command on nn02; otherwise, share the logs from nn01.
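On Hadoop 3 (this cluster runs HDP 3.0.1, per the `/usr/hdp/3.0.1.0-187` paths in the log), `hadoop-daemon.sh` is deprecated and delegates to `hdfs --daemon start`. A minimal sketch of the same start run as root with the non-deprecated form, plus a helper that pulls the final exit message and the last `Caused by:` line out of a NameNode log; the function names are hypothetical and the log path must be filled in, since the real file name is masked in the thread.

```shell
#!/bin/sh
# Start the NameNode as the hdfs user with the non-deprecated command.
start_namenode() {
  su -l hdfs -c "hdfs --daemon start namenode"
}

# Print the last "Exiting with status" line and the last root cause
# ("Caused by:") recorded in a NameNode log file.
last_startup_failure() {
  grep 'Exiting with status' "$1" | tail -n 1
  grep 'Caused by:' "$1" | tail -n 1
}
```

For example, run `start_namenode` on nn01, then `last_startup_failure /var/log/hadoop/hdfs/<namenode-log>` if it does not stay up.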

Created on 12-19-2021 05:27 AM - edited 12-19-2021 05:38 AM

I will try the command

Created 12-19-2021 05:44 AM

May I contact you directly regarding this NameNode issue, if you are available?

Thank you

Created 12-19-2021 08:51 AM

Any updates on the commands?

Created 12-19-2021 11:35 AM

I have executed the command

su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start namenode"

I get the following warning on the command line:

WARNING: Use of this script to start HDFS daemons is deprecated.

WARNING: Attempting to execute replacement "hdfs --daemon start" instead.

and the following error in the log:

2021-12-19 14:06:55,554 ERROR namenode.NameNode (NameNode.java:main(1715)) - Failed to start namenode.

org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 274473528

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:226)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadFSEdits(FSEditLogLoader.java:160)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadEdits(FSImage.java:890)

at org.apache.hadoop.hdfs.server.namenode.FSImage.loadFSImage(FSImage.java:745)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:323)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1090)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:714)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:632)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:694)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:937)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:910)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1643)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1710)

Caused by: org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream$PrematureEOFException: got premature end-of-file at txid 274473527; expected file to go up to 274474058

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:197)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.skipUntil(EditLogInputStream.java:151)

at org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream.nextOp(RedundantEditLogInputStream.java:179)

at org.apache.hadoop.hdfs.server.namenode.EditLogInputStream.readOp(EditLogInputStream.java:85)

at org.apache.hadoop.hdfs.server.namenode.FSEditLogLoader.loadEditRecords(FSEditLogLoader.java:213)

... 12 more

2021-12-19 14:06:55,557 INFO util.ExitUtil (ExitUtil.java:terminate(210)) - Exiting with status 1: org.apache.hadoop.hdfs.server.namenode.EditLogInputException: Error replaying edit log at offset 0. Expected transaction ID was 274473528

2021-12-19 14:06:55,558 INFO namenode.NameNode (LogAdapter.java:info(51)) - SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at XX-XXX-XX-XXXX.XXXXX.XX/XX.X.XX.XX

One more thing: I checked the 3 hosts that run the JournalNodes (nn1, nn2, host3) and ran the following commands:

cd /hadoop/hdfs/journal/<Cluster_name>/current

ll | wc -l

9653

They all have the same number of files.
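Equal `ll | wc -l` counts do not prove the JournalNodes hold the same edits: `ll` is usually an alias for `ls -l`, which also prints a `total` line, and two directories can contain the same number of files with different names or contents. A minimal sketch that compares finalized segment names and MD5 checksums between two copies of a journal `current` directory, assuming GNU `md5sum` and that one host's directory has been copied next to the other; in-progress segments are skipped because a lagging JournalNode may legitimately hold a shorter one. The function names are hypothetical.

```shell
#!/bin/sh
# Print "<md5>  <file>" for every finalized edits segment in a directory,
# sorted by file name so two listings can be diffed line by line.
# The glob edits_[0-9]* skips edits_inprogress_* files.
segment_checksums() {
  ( cd "$1" && md5sum edits_[0-9]* 2>/dev/null | sort -k2 )
}

# Show segments whose name or checksum differs between two journal dirs;
# returns 0 when they match and non-zero otherwise (diff's exit status).
compare_journal_dirs() {
  a="$(mktemp)"; b="$(mktemp)"
  segment_checksums "$1" > "$a"
  segment_checksums "$2" > "$b"
  diff "$a" "$b"
  status=$?
  rm -f "$a" "$b"
  return "$status"
}
```

On a live cluster you could instead run `segment_checksums /hadoop/hdfs/journal/<Cluster_name>/current` on each JournalNode host and diff the three outputs; any line that differs names a segment worth inspecting.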