CDSW 1.6 does not recognize NVIDIA GPUs

Created 10-15-2019 08:44 AM

I'm struggling to enable GPU support on our CDSW 1.6 cluster. The cluster has 1 Master-Node and 2 Worker-Nodes (one of the Worker-Nodes is equipped with NVIDIA GPUs).

What I did so far:

- Successfully upgraded our CDSW Cluster to CDSW 1.6

- Prepared the GPU-Host according to this CDSW NVIDIA-GPU Guide

- Disabled Nouveau on the GPU-Host

- Successfully installed the NVIDIA driver matching the installed kernel header version. (This NVIDIA-Guide was very useful)

- Checked that the NVIDIA driver is correctly installed with the command

nvidia-smi

The output shows the correct number of NVIDIA GPUs installed on the GPU-Host.

- Added the GPU-Host to the CDSW-Cluster with Cloudera-Manager and added the worker and docker daemon role instances to the GPU-Host.

- Enabled GPU Support for CDSW in Cloudera-Manager.

- Restarted the CDSW Service with Cloudera-Manager successfully.
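For the nvidia-smi check in the list above, here is a small sketch that counts the GPUs the driver reports. It assumes that `nvidia-smi -L` prints one line per GPU beginning with "GPU <index>:"; the function name `count_gpus` is made up for illustration.

```shell
#!/usr/bin/env bash
# Count GPUs from the output of `nvidia-smi -L`, read on stdin.
# Assumption: each GPU is listed on its own line starting with "GPU <index>:".
count_gpus() {
  # grep -c prints the number of matching lines; "|| true" keeps a zero
  # count from being reported as a failure.
  grep -c '^GPU [0-9]' || true
}

# Usage on the GPU-Host (not run here):
#   nvidia-smi -L | count_gpus
```

If the number printed matches the number of cards in the host, the driver side is probably fine and the problem is more likely on the CDSW or Kubernetes side.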

When I log into the CDSW Web-GUI I don't see any available GPUs as shown in the CDSW NVIDIA-GPU Guide.

But what I can see is that my CDSW-Cluster now has more CPU and memory available since I added the GPU-Host to the CDSW-Cluster. That means that CDSW does recognize the new Host but unfortunately not my GPUs.

Also, when I type the command on the GPU-Host

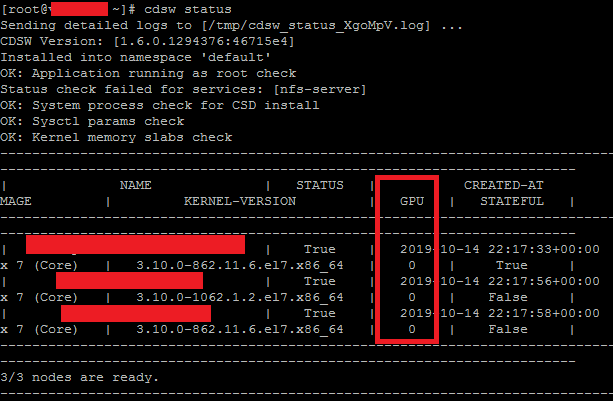

cdsw statusI can see that CDSW does not recognize the GPUs -> 0 GPUs available.

Can someone please help me out?

Thanks!

Created 10-15-2019 03:28 PM

@Baris Can you paste a screenshot of the CDSW home page here? Also, check the latest cdsw.conf file on the master role host, at the path below, and upload it here.

/run/cloudera-scm-agent/process/xx-cdsw-CDSW_MASTER/cdsw.conf (Where xx is the greatest number)
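A minimal sketch for finding that file without eyeballing the directory listing. It assumes the process directories are named `<number>-cdsw-CDSW_MASTER`, as in the path above; the function name `latest_cdsw_conf` is hypothetical.

```shell
#!/usr/bin/env bash
# Print the path of cdsw.conf inside the highest-numbered CDSW_MASTER
# process directory. The base directory can be passed as the first argument.
latest_cdsw_conf() {
  local base="${1:-/run/cloudera-scm-agent/process}"
  local best=-1 best_dir="" dir name num
  for dir in "$base"/*-cdsw-CDSW_MASTER; do
    [ -d "$dir" ] || continue            # also skips an unmatched glob
    name="${dir##*/}"                    # e.g. 5807-cdsw-CDSW_MASTER
    num="${name%%-*}"                    # e.g. 5807
    case "$num" in ''|*[!0-9]*) continue ;; esac
    # Compare numerically: a plain sort would put 99 after 5807.
    if [ "$num" -gt "$best" ]; then
      best="$num"
      best_dir="$dir"
    fi
  done
  [ -n "$best_dir" ] || return 1
  printf '%s\n' "$best_dir/cdsw.conf"
}

# Usage on the master role host (not run here):
#   cat "$(latest_cdsw_conf)"
```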

Cheers!

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 10-15-2019 04:34 PM

Hello @GangWar,

thank you for the fast reply.

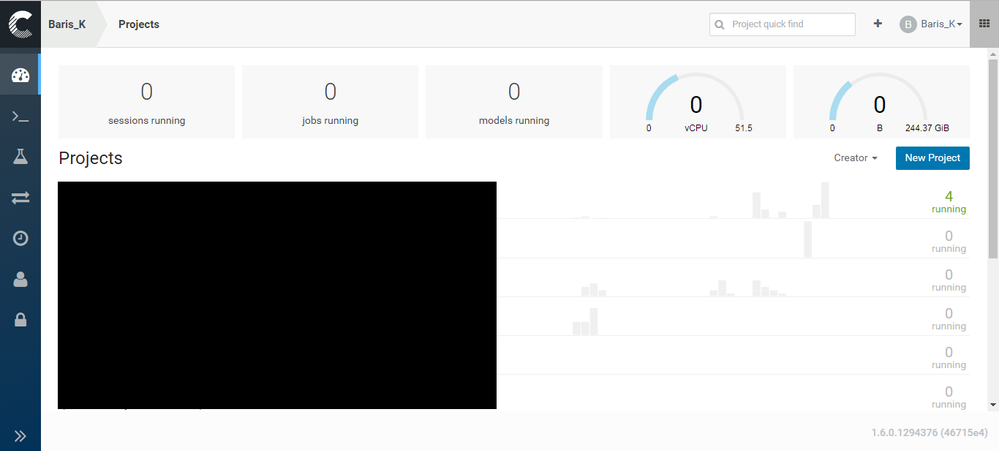

Here is a screenshot from our CDSW home page

And this my cdsw.conf file:

JAVA_HOME="/usr/java/jdk1.8.0_181"

KUBE_TOKEN="34434e.107e63749c742556"

DISTRO="CDH"

DISTRO_DIR="/opt/cloudera/parcels"

AUXILIARY_NODES="ADDRESS_OF_GPU-HOST"

CDSW_CLUSTER_SECRET="CLUSTER_SECRET_KEY"

DOMAIN="workbench.company.com"

LOGS_STAGING_DIR="/var/lib/cdsw/tmp"

MASTER_IP="ADDRESS_OF_CDSW-MASTER"

NO_PROXY=""

NVIDIA_GPU_ENABLE="true"

RESERVE_MASTER="false"
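As a quick way to confirm the GPU flag from a file like the one above, here is a sketch that reads a single setting without sourcing the file. It assumes every setting is written as `KEY="value"` on its own line, as shown; `conf_value` and `gpu_enabled` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print the value of one KEY="value" setting from a cdsw.conf-style file.
# If the key appears more than once, the last occurrence wins.
conf_value() {
  local key="$1" file="$2"
  sed -n 's/^'"$key"'="\(.*\)"$/\1/p' "$file" | tail -n 1
}

# Succeed only when NVIDIA_GPU_ENABLE is set to "true" in the given file.
gpu_enabled() {
  [ "$(conf_value NVIDIA_GPU_ENABLE "$1")" = "true" ]
}

# Usage (not run here):
#   gpu_enabled /path/to/cdsw.conf && echo "GPU support is enabled in the config"
```

In this thread the flag was already "true", so a passing check here only rules out the configuration as the cause.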

Regards!

Created 10-16-2019 03:05 PM

@Baris Things look good, and the steps you followed also look fine. Can you stop the CDSW service, reboot the host, and start CDSW again?

Check whether CDSW detects the GPUs. If not, please run the command below on the CDSW master role host and send us the logs.

cdsw logs

Cheers!

Created on 10-16-2019 05:09 PM - edited 10-17-2019 01:26 AM

After restarting the CDSW role I ran into another issue, but I could solve it myself. The Docker Daemon on the GPU Host couldn't start and gave these error messages:

(I put it here for the sake of completeness)

+ result=0

+ shift

+ err_msg='Unable to copy [/run/cloudera-scm-agent/process/5807-cdsw-CDSW_DOCKER/cdsw.conf] => [/etc/cdsw/scratch/dockerd.conf].'

+ '[' 0 -eq 0 ']'

+ return

+ SERVICE_PID_FILE=/etc/cdsw/scratch/dockerd.pid

+ curr_pid=15571

+ echo 15571

+ dockerd_opts=()

+ dockerd_opts+=(--log-driver=journald)

+ dockerd_opts+=(--log-opt labels=io.kubernetes.pod.namespace,io.kubernetes.container.name,io.kubernetes.pod.name)

+ dockerd_opts+=(--iptables=false)

+ '[' devicemapper == devicemapper ']'

+ dockerd_opts+=(-s devicemapper)

+ dockerd_opts+=(--storage-opt dm.basesize=100G)

+ dockerd_opts+=(--storage-opt dm.thinpooldev=/dev/mapper/docker-thinpool)

+ dockerd_opts+=(--storage-opt dm.use_deferred_removal=true)

+ /usr/bin/nvidia-smi

+ '[' 0 -eq 0 ']'

+ '[' true == true ']'

+ dockerd_opts+=(--add-runtime=nvidia=${CDSW_ROOT}/nvidia/bin/nvidia-container-runtime)

+ dockerd_opts+=(--default-runtime=nvidia)

+ mkdir -p /var/lib/cdsw/docker-tmp

+ die_on_error 0 'Unable to create directory [/var/lib/cdsw/docker-tmp].'

+ result=0

+ shift

+ err_msg='Unable to create directory [/var/lib/cdsw/docker-tmp].'

+ '[' 0 -eq 0 ']'

+ return

+ HTTP_PROXY=

+ HTTPS_PROXY=

+ NO_PROXY=

+ ALL_PROXY=

+ DOCKER_TMPDIR=/var/lib/cdsw/docker-tmp

+ exec /opt/cloudera/parcels/CDSW-1.6.0.p1.1294376/docker/bin/dockerd --log-driver=journald --log-opt labels=io.kubernetes.pod.namespace,io.kubernetes.container.name,io.kubernetes.pod.name --iptables=false -s devicemapper --storage-opt dm.basesize=100G --storage-opt dm.thinpooldev=/dev/mapper/docker-thinpool --storage-opt dm.use_deferred_removal=true --add-runtime=nvidia=/opt/cloudera/parcels/CDSW-1.6.0.p1.1294376/nvidia/bin/nvidia-container-runtime --default-runtime=nvidia

time="2019-10-17T00:54:34.267458369+02:00" level=info msg="libcontainerd: new containerd process, pid: 15799"

Error starting daemon: error initializing graphdriver: devmapper: Base Device UUID and Filesystem verification failed: devicemapper: Error running deviceCreate (ActivateDevice) dm_task_run failed

I could solve it as written here by removing /var/lib/docker. After this, restarting the CDSW role was possible.

However, the reboot of the GPU-Host and restart of the role changed nothing unfortunately.

How can I send you the logs, because I can't attach them here?

Thank you!

Created 10-17-2019 08:01 AM

@Baris We have a known issue where, in an air-gapped environment, the nvidia-device-plugin-daemonset pod throws ImagePullBackOff because it cannot find the k8s-device-plugin:1.11 image in the cache. It then tries to look the image up in the public repository over the internet and fails because the environment doesn't have public access. The workaround is to tag the image on the CDSW hosts.
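To check whether a cluster is hitting this, one option is to filter the pod listing for image pull failures. This is only a sketch: it assumes `kubectl` is available and configured on the master host, and that `kubectl get pods --all-namespaces` prints its default columns (NAMESPACE, NAME, READY, STATUS, ...). The function name `failed_image_pulls` is made up.

```shell
#!/usr/bin/env bash
# Read a `kubectl get pods --all-namespaces` listing on stdin and print
# namespace/name for every pod whose STATUS column shows an image pull failure.
failed_image_pulls() {
  awk 'NR > 1 && ($4 == "ImagePullBackOff" || $4 == "ErrImagePull") { print $1 "/" $2 }'
}

# Usage on the master host (not run here):
#   kubectl get pods --all-namespaces | failed_image_pulls
```

If a pod whose name starts with nvidia-device-plugin-daemonset shows up in the output, the tagging workaround is worth trying.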

Please run the command below on the CDSW hosts.

# docker tag docker-registry.infra.cloudera.com/cdsw/third-party/nvidia/k8s-device-plugin:1.11 nvidia/k8s-device-plugin:1.11

Let me know if your environment is cut off from the internet. Try the above command and keep us posted.
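If the command has to be run on several hosts, a small wrapper that skips hosts where the tag already exists may be handy. This is a sketch, not an official Cloudera script: the function name `tag_device_plugin` and the `DOCKER` override variable are assumptions added so the logic can be exercised with a stub. It relies only on `docker image inspect` and `docker tag`.

```shell
#!/usr/bin/env bash
# Image names taken from the command above.
SRC_IMAGE="docker-registry.infra.cloudera.com/cdsw/third-party/nvidia/k8s-device-plugin:1.11"
DST_IMAGE="nvidia/k8s-device-plugin:1.11"

# Tag the bundled device-plugin image under the public name unless an image
# with that name is already present locally.
# DOCKER may point at a different binary or a stub (hypothetical override).
tag_device_plugin() {
  local docker="${DOCKER:-docker}"
  if "$docker" image inspect "$DST_IMAGE" >/dev/null 2>&1; then
    echo "already tagged: $DST_IMAGE"
  else
    "$docker" tag "$SRC_IMAGE" "$DST_IMAGE" && echo "tagged: $DST_IMAGE"
  fi
}

# Usage on each CDSW host, as root (not run here):
#   tag_device_plugin
```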

Cheers!

Created on 10-17-2019 08:19 AM - edited 10-17-2019 08:22 AM

Hello @GangWar,

thank you, this was the solution!

Yes, we have an air-gapped environment.

After I ran the command you suggested on my GPU-Host, CDSW detected the GPUs.

docker tag docker-registry.infra.cloudera.com/cdsw/third-party/nvidia/k8s-device-plugin:1.11 nvidia/k8s-device-plugin:1.11

A reboot or a restart of the CDSW roles was not necessary in my case.

Maybe the docs of Cloudera could be updated with this information.

Thank you again Sir!

Regards.

Created 10-17-2019 08:43 AM

Cheers,

Keep visiting and contributing to the Cloudera Community.

Cheers!
