Cloudera Community: Support Questions


CDSW bad status

Created on 10-22-2019 09:38 AM, last edited on 10-22-2019 01:37 PM by cjervis


Failed to connect to Kubernetes Master api.

Web is not yet up.

I need help.

Created 10-23-2019 10:28 PM


@lafi_oussama What version of CDSW are you using, and is this an RPM-based or a CSD-based install?

The error could be caused by various issues. If this is a CSD-based install managed by Cloudera Manager, could you please try the steps below:

- Stop the CDSW service from CM.

- Clear all iptables rules on the Master role host by running the commands below:

sudo iptables -P INPUT ACCEPT

sudo iptables -P FORWARD ACCEPT

sudo iptables -P OUTPUT ACCEPT

sudo iptables -t nat -F

sudo iptables -t mangle -F

sudo iptables -F

- On the master node, run weave reset:

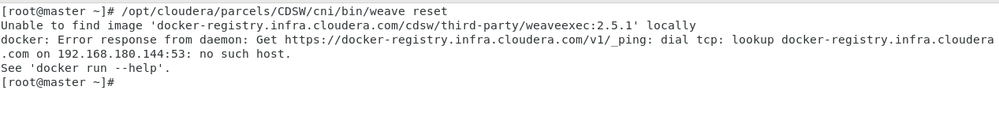

# /opt/cloudera/parcels/CDSW/cni/bin/weave reset

- Go to CM > CDSW > Action > Prepare Node and wait until it finishes.

- Go to CM > CDSW > Instances and start the roles one at a time, waiting for each to finish before starting the next:

1) Docker Daemon role on the master host

2) Master role on the master host

3) Application role on the master host

4) Docker Daemon and Worker roles on the worker hosts
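The iptables and weave reset steps can be gathered into one script so that none is skipped. This is a minimal sketch, assuming the CDSW service is already stopped in Cloudera Manager and the parcel is installed under the default parcel directory /opt/cloudera/parcels. `DRY_RUN` and the function names are hypothetical (not CDSW settings); by default the script only prints the commands. Prepare Node and the role starts still happen in Cloudera Manager.

```shell
#!/usr/bin/env bash
# Sketch: reset iptables and the weave network on the CDSW master host.
# Assumes the CDSW service is already stopped in Cloudera Manager.
# DRY_RUN is a hypothetical switch: 1 (default) prints commands, 0 runs them.

WEAVE_BIN="/opt/cloudera/parcels/CDSW/cni/bin/weave"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

reset_master_network() {
  # Default policies to ACCEPT first, so flushing cannot cut off access.
  run sudo iptables -P INPUT ACCEPT
  run sudo iptables -P FORWARD ACCEPT
  run sudo iptables -P OUTPUT ACCEPT
  # Flush the nat, mangle and filter tables.
  run sudo iptables -t nat -F
  run sudo iptables -t mangle -F
  run sudo iptables -F
  # Reset weave's network state on this host.
  run sudo "$WEAVE_BIN" reset
}
```

After sourcing the file, review the commands with `DRY_RUN=1 reset_master_network`, then apply them with `DRY_RUN=0 reset_master_network`.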

Let us know if this resolves the issue. If not, please send us the output of the following commands from the CDSW master node:

# cdsw validate

# cdsw status

# ps -eaf | grep -i kube
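The requested output can be gathered into one file to attach to a reply. A minimal sketch; the report path /tmp/cdsw_diagnostics.txt and the function names are hypothetical. Standard input is redirected from /dev/null so any interactive prompt from `cdsw validate` cannot wait for a keypress; whether validate then runs every check is an assumption worth confirming against an interactive run.

```shell
#!/usr/bin/env bash
# Sketch: gather the requested diagnostics into one text file for a forum reply.
# The default report path is a hypothetical choice; pass another as $1.

capture() {
  # Append a header line and the command's combined stdout/stderr to the report.
  # stdin comes from /dev/null so an interactive prompt cannot wait for a keypress.
  local report="$1"; shift
  {
    printf '===== %s =====\n' "$*"
    "$@" < /dev/null 2>&1
    printf '\n'
  } >> "$report"
}

collect_cdsw_diagnostics() {
  local report="${1:-/tmp/cdsw_diagnostics.txt}"
  : > "$report"                      # truncate any report from an earlier run
  capture "$report" cdsw validate
  capture "$report" cdsw status
  capture "$report" bash -c 'ps -eaf | grep -i kube'
  echo "Report written to $report"
}
```

Source the file and run `collect_cdsw_diagnostics` as root on the master node.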

Cheers!

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 10-24-2019 04:27 AM


Thanks for your messages. I am using CDSW 1.6, CSD-based.

But the error still appears.

[root@master ~]# cdsw validate

[Validating host configuration]

> Prechecking OS Version........[OK]

> Prechecking kernel Version........[OK]

> Prechecking that SELinux is disabled........[OK]

> Prechecking scaling limits for processes........

WARNING: Cloudera Data Science Workbench recommends that all users have a max-user-processes limit of at least 65536.

It is currently set to [54991] as per 'ulimit -u'

Press enter to continue

> Prechecking scaling limits for open files........

WARNING: Cloudera Data Science Workbench recommends that all users have a max-open-files limit set to 1048576.

It is currently set to [1024] as per 'ulimit -n'

Press enter to continue

> Loading kernel module [ip_tables]...

> Loading kernel module [iptable_nat]...

> Loading kernel module [iptable_filter]...

> Prechecking that iptables are not configured........

WARNING: Cloudera Data Science Workbench requires iptables, but does not support preexisting iptables rules. Press enter to continue

> Prechecking kernel parameters........[OK]

> Prechecking to ensure kernel memory accounting disabled:........[OK]

> Prechecking Java distribution and version........[OK]

> Checking unlimited Java encryption policy for AES........[OK]

> Prechecking size of root volume........[OK]

[Validating networking setup]

> Checking if kubelet iptables rules exist

The following chains are missing from iptables: [KUBE-EXTERNAL-SERVICES, WEAVE-NPC-EGRESS, WEAVE-NPC, WEAVE-NPC-EGRESS-ACCEPT, KUBE-SERVICES, WEAVE-NPC-INGRESS, WEAVE-NPC-EGRESS-DEFAULT, WEAVE-NPC-DEFAULT, WEAVE-NPC-EGRESS-CUSTOM, KUBE-FIREWALL]

WARNING:: Verification of iptables rules failed: 1

> Checking if DNS server is running on localhost

> Checking the number of DNS servers in resolv.conf

> Checking DNS entries for CDSW main domain

> Checking reverse DNS entries for CDSW main domain

WARNING:: DNS doesn't resolve 192.168.180.144 to master.example.com; DNS is not configured properly: 1

> Checking DNS entries for CDSW wildcard domain

WARNING:: DNS doesn't resolve *.master.example.com to 192.168.180.144; DNS is not configured properly: 1

> Checking that firewalld is disabled

[Validating Kubernetes versions]

> Checking kubernetes client version

> Checking kubernetes server version

WARNING:: Kubernetes server is not running, version couldn't be checked.: 1

[Validating NFS and Application Block Device setup]

> Checking if nfs or nfs-server is active and enabled

> Checking if rpcbind.socket is active and enabled

> Checking if rpcbind.service is active and enabled

> Checking if the project folder is exported over nfs

WARNING:: The projects folder /var/lib/cdsw/current/projects must be exported over nfs: 1

> Checking if application mountpoint exists

> Checking if the application directory is on a separate block device

WARNING:: The application directory is mounted on the root device.: 1

> Checking the root directory (/) free space

> Checking the application directory (/var/lib/cdsw) free space

[Validating Kubernetes cluster state]

> Checking if we have exactly one master node

WARNING:: There must be exactly one Kubernetes node labelled 'stateful=true': 1

> Checking if the Kubernetes nodes are ready

> Checking kube-apiserver pod

WARNING: Unable to reach k8s pod kube-apiserver.

WARNING: [kube-apiserver] pod(s) are not ready under kube-system namespace.

WARNING: Unable to bring up kube-apiserver in the kube-system cluster. Skipping other checks..

[Validating CDSW application]

> Checking connectivity over ingress

WARNING:: Could not curl the application over the ingress controller: 7

--------------------------------------------------------------------------

Errors detected.

Please review the issues listed above. Further details can be collected by

capturing logs from all nodes using "cdsw logs".

[root@master ~]# cdsw status

Sending detailed logs to [/tmp/cdsw_status_lKFEXD.log] ...

CDSW Version: [1.6.0.1294376:46715e4]

Installed into namespace 'default'

OK: Application running as root check

OK: NFS service check

Processes are not running: [kubelet]. Check the CDSW role logs in Cloudera Manager for more details.

OK: Sysctl params check

OK: Kernel memory slabs check

Failed to connect to Kubernetes Master api.

Checking web at url: http://master.example.com

Web is not yet up.

Cloudera Data Science Workbench is not ready yet
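Output as long as the `cdsw validate` run above is easier to act on when reduced to the failed checks. A minimal sketch, assuming the output was saved to a file (for example with `cdsw validate | tee validate.txt`, a hypothetical file name) and that failed checks are the lines starting with `WARNING`, as in this transcript. `summarize_validate` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list only the failed checks from a saved `cdsw validate` transcript,
# each prefixed with its section heading, e.g. "[Validating networking setup]".

summarize_validate() {
  # $1: file containing the captured `cdsw validate` output
  awk '
    /^\[Validating / { section = $0; next }   # remember the current section
    /^WARNING/       { print section " " $0 } # print each warning with its section
  ' "$1"
}
```

Run it as `summarize_validate validate.txt` after sourcing the file.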

Created 10-24-2019 06:01 AM


From the error message on the weave reset, it seems the Docker daemon is not running or is unable to handshake with the underlying network.

Since CDSW 1.6, stale configurations are removed during the restart itself. Could you please try to bring the application back up with these steps:

> Stop all roles in CDSW.

> Verify that no Kubernetes processes are still running on the node (ps -ef | grep -i kube). If any are, kill them.

> If the service has more than one node, start the roles on the master node alone first, in this order:

1) Docker Daemon [DD]

2) Master

3) Application

> Wait for the pods to be created (this may take 10-15 minutes).

> Once the application is up, add the worker nodes one at a time by starting their roles in this order:

1) DD

2) Worker

If the service runs on a single node and starting the roles one by one does not work, share the output of the command below from the master node.

`kubectl get pods --all-namespaces`
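The check for leftover Kubernetes processes can be made a little stricter than `ps -ef | grep -i kube`, which also matches the grep process itself. A minimal sketch; `kube_leftovers` is a hypothetical name, and it assumes the standard `ps -ef` column layout (UID, PID, PPID, C, STIME, TTY, TIME, CMD). It only prints the matches, so the PIDs can be reviewed before running `kill` on them.

```shell
#!/usr/bin/env bash
# Sketch: find Kubernetes processes still running after all CDSW roles are stopped.
# Reads `ps -ef` output on stdin and prints "PID COMMAND" for each kube process,
# skipping the header line and any grep/awk whose own command line mentions kube.

kube_leftovers() {
  awk 'NR > 1 && tolower($0) ~ /kube/ && $8 !~ /(grep|awk)$/ {
         cmd = $8
         for (i = 9; i <= NF; i++) cmd = cmd " " $i   # rebuild the full command
         print $2, cmd                                 # $2 is the PID column
       }'
}
```

Run it as `ps -ef | kube_leftovers` after all CDSW roles are stopped; empty output means nothing is left to kill.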

Created 10-24-2019 07:21 AM


I did what you said, but it didn't work.

[root@master ~]# kubectl get pods --all-namespaces

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Created 10-24-2019 07:43 AM


Was the kubectl command executed as root?

If not, could you please try as root and share the output?

If yes, the response indicates that the Kube API server is not up. Please share the output of ps -ef | grep -i kube from the master node.

By the way, how many nodes are in the CDSW cluster?

Created 10-24-2019 08:30 AM


@lafi_oussama The connection refused error looks like a DNS issue. On top of that, you are using CDSW 1.6, which needs IPv6 enabled on all CDSW hosts; this is a known issue with that version.

Please enable IPv6 on the CDSW hosts as follows:

- Edit /etc/default/grub and delete the ipv6.disable=1 entry from GRUB_CMDLINE_LINUX. For example:

GRUB_CMDLINE_LINUX="rd.lvm.lv=rhel/swap crashkernel=auto rd.lvm.lv=rhel/root"

- Run the grub2-mkconfig command to regenerate the grub.cfg file:

grub2-mkconfig -o /boot/grub2/grub.cfg

Alternatively, on UEFI systems, run the following command: grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

- Delete the /etc/sysctl.d/ipv6.conf file which contains the following entry:

# To disable for all interfaces
net.ipv6.conf.all.disable_ipv6 = 1
# the protocol can be disabled for specific interfaces as well.
net.ipv6.conf.<interface>.disable_ipv6 = 1

- Check the contents of the /etc/ssh/sshd_config file and make sure the AddressFamily line is commented out.

#AddressFamily inet

- Make sure the following line exists in /etc/hosts, and is not commented out:

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

- Enable IPv6 support on the Ethernet interface. Double-check /etc/sysconfig/network and /etc/sysconfig/network-scripts/ifcfg-* to ensure you've set IPV6INIT=yes. This setting is required for both static and DHCP assignment of IPv6 addresses.

- Stop the Cloudera Data Science Workbench service.

- Reboot all the Cloudera Data Science Workbench hosts to enable IPv6 support.

- Test your changes:

- Run dmesg. The output should NOT contain ipv6.disable=1, as it does in this example:

$ dmesg
[ 0.000000] Kernel command line: BOOT_IMAGE=/boot/vmlinuz-3.10.0-514.el7.x86_64 root=UUID=3e109aa3-f171-4614-ad07-c856f20f9d25 ro console=tty0 crashkernel=auto console=ttyS0,115200 ipv6.disable=1
[ 5.956480] IPv6: Loaded, but administratively disabled, reboot required to enable

This shows that IPv6 was not correctly enabled on reboot.

- Run sysctl -a to make sure IPv6 is not disabled. The output should NOT contain the following line:

net.ipv6.conf.all.disable_ipv6 = 1

- Run the ip a command to check the IP addresses on all interfaces, such as weave. They should now display an inet6 address.


- Start the Cloudera Data Science Workbench service, following the steps I suggested earlier in this thread: https://community.cloudera.com/t5/Support-Questions/CDSW-bad-status/m-p/281186/highlight/true#M20923...

- Run dmesg on the CDSW hosts to make sure no segfault errors appear.
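The file edits and post-reboot checks above can be scripted so every CDSW host is verified the same way. A minimal sketch, assuming the RHEL/CentOS 7 file locations named in the steps; the function names are hypothetical, and the ifcfg file must be passed explicitly because its name depends on the interface. Each check prints a FAIL line and returns non-zero when a step was missed.

```shell
#!/usr/bin/env bash
# Sketch: verify the IPv6 prerequisites for CDSW 1.6 on one host.
# Every check prints a FAIL line and returns 1 when the step was missed.
# File paths are parameters so the checks can also be run against copies.

grub_ipv6_ok() {          # ipv6.disable=1 must be gone from /etc/default/grub
  local f="${1:-/etc/default/grub}"
  if grep -q 'ipv6\.disable=1' "$f"; then
    echo "FAIL: $f still contains ipv6.disable=1"; return 1
  fi
}

sysctl_file_removed() {   # /etc/sysctl.d/ipv6.conf must be deleted
  local f="${1:-/etc/sysctl.d/ipv6.conf}"
  if [ -e "$f" ]; then
    echo "FAIL: $f still exists"; return 1
  fi
}

sshd_ipv6_ok() {          # AddressFamily must be commented out
  local f="${1:-/etc/ssh/sshd_config}"
  if grep -Eq '^[[:space:]]*AddressFamily' "$f"; then
    echo "FAIL: AddressFamily is not commented out in $f"; return 1
  fi
}

hosts_ipv6_ok() {         # an uncommented "::1 localhost ..." line must exist
  local f="${1:-/etc/hosts}"
  if ! grep -Eq '^[[:space:]]*::1[[:space:]].*localhost' "$f"; then
    echo "FAIL: no active ::1 localhost entry in $f"; return 1
  fi
}

ifcfg_ipv6_ok() {         # $1: an ifcfg-* file; it must set IPV6INIT=yes
  if ! grep -Eq '^IPV6INIT="?yes"?[[:space:]]*$' "$1"; then
    echo "FAIL: IPV6INIT=yes is not set in $1"; return 1
  fi
}

cmdline_ipv6_ok() {       # after reboot: kernel command line text on stdin
  if grep -q 'ipv6\.disable=1'; then
    echo "FAIL: the kernel was booted with ipv6.disable=1"; return 1
  fi
}

sysctl_ipv6_ok() {        # after reboot: `sysctl -a` output on stdin
  if grep -Eq '^net\.ipv6\.conf\.all\.disable_ipv6[[:space:]]*=[[:space:]]*1$'; then
    echo "FAIL: net.ipv6.conf.all.disable_ipv6 is still 1"; return 1
  fi
}
```

After sourcing the file, run for example `grub_ipv6_ok && sysctl_file_removed && sshd_ipv6_ok && hosts_ipv6_ok` before the reboot, and `cmdline_ipv6_ok < /proc/cmdline` and `sysctl -a 2>/dev/null | sysctl_ipv6_ok` after it. /proc/cmdline holds the same kernel command line that dmesg prints at boot, and it stays readable after those boot messages have rotated out of the kernel log buffer.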

Also, it's worth checking that you have met all the CDSW prerequisites, such as the wildcard DNS setup and SELinux. Make sure your DNS resolves the names correctly.

Cheers!


Created 10-24-2019 09:50 AM


How can I check whether DNS is working correctly?

Created 10-24-2019 11:35 AM


@lafi_oussama You can simply run nslookup <hostname>.
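A single nslookup covers only the forward lookup, while the `cdsw validate` run earlier in the thread also warned about the reverse entry and the wildcard domain. A minimal sketch that checks all three with nslookup, defaulting to the host name master.example.com and IP 192.168.180.144 from that output (pass others as arguments). The `test.` label used to probe the wildcard and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check forward, wildcard and reverse DNS for the CDSW domain,
# mirroring the three DNS checks reported by `cdsw validate`.

resolved_addresses() {
  # Reads nslookup output on stdin; prints the addresses that follow "Name:",
  # ignoring the "Server:/Address:" header that identifies the DNS server.
  awk '/^Name:/ { answer = 1; next }
       answer && /^Address:/ { print $2 }'
}

check_cdsw_dns() {
  local host="${1:-master.example.com}" ip="${2:-192.168.180.144}"
  local rc=0
  # Forward lookup of the main domain.
  if nslookup "$host" | resolved_addresses | grep -qxF "$ip"; then
    echo "OK: $host -> $ip"
  else
    echo "FAIL: $host does not resolve to $ip"; rc=1
  fi
  # Wildcard: an arbitrary subdomain (hypothetical "test.") should give the same IP.
  if nslookup "test.$host" | resolved_addresses | grep -qxF "$ip"; then
    echo "OK: *.$host -> $ip"
  else
    echo "FAIL: *.$host does not resolve to $ip"; rc=1
  fi
  # Reverse lookup of the IP should return the host name.
  if nslookup "$ip" | grep -qiF "name = $host"; then
    echo "OK: $ip -> $host"
  else
    echo "FAIL: $ip does not reverse-resolve to $host"; rc=1
  fi
  return "$rc"
}
```

Source the file and run `check_cdsw_dns master.example.com 192.168.180.144` on each CDSW host; it returns non-zero if any lookup fails.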

Cheers!


Created 10-25-2019 01:10 AM


When I turn off Wi-Fi:

[root@master ~]# nslookup master.example.com

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: master.example.com

Address: 192.168.180.144

Then, when Wi-Fi is on:

[root@master ~]# nslookup master.example.com

Server: 192.168.180.2

Address: 192.168.180.2#53

** server can't find master.example.com: NXDOMAIN
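The `Server:` line in each output shows which resolver answered: 127.0.0.1 with Wi-Fi off, which returns 192.168.180.144, and 192.168.180.2 with Wi-Fi on, which returns NXDOMAIN. A minimal sketch for seeing which nameservers the host is configured with in each state, in the order the resolver tries them; it assumes the Wi-Fi change rewrites /etc/resolv.conf, which is worth confirming by running it once with Wi-Fi off and once with it on. `list_nameservers` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print the nameservers configured in resolv.conf, in the order the
# resolver queries them. The path is a parameter so a copy can be inspected.

list_nameservers() {
  local f="${1:-/etc/resolv.conf}"
  awk '$1 == "nameserver" { print $2 }' "$f"
}
```

`list_nameservers | wc -l` gives the number of configured DNS servers, which `cdsw validate` also inspects.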