Support Questions

CM-HDFS

- Labels: HDFS

Created 08-23-2023 08:22 PM

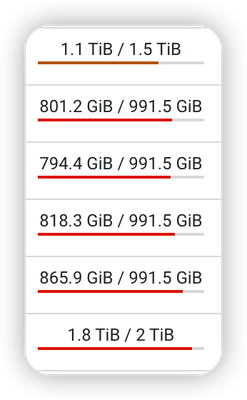

Does HDFS perform load balancing on the storage of data blocks when the disk space of each node is different?

Created 08-23-2023 11:44 PM

@Crash, Welcome to our community! To help you get the best possible answer, I have tagged in our HDFS experts @rki_ @willx who may be able to assist you further.

Please feel free to provide any additional information or details about your query, and we hope that you will find a satisfactory solution to your question.

Regards,

Vidya Sargur, Community Manager

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 08-24-2023 12:08 AM

@Crash The HDFS balancer works on DFS Used%, not on raw disk size. The default threshold is 10%. If the DFS Used% of a particular DataNode is more than 10 percentage points above or below the average DFS Used% across all DataNodes, running the balancer will move blocks to even it out. If the DataNode's DFS Used% is within 10 percentage points of the average, HDFS considers it balanced.
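
To make the threshold rule concrete, here is a minimal Python sketch. It is not the balancer's actual code: the function name `classify_datanodes`, the node names, and the sizes are made up for illustration, and it assumes the cluster average is total DFS used divided by total capacity.

```python
# Illustrative only: mimics the threshold check described in the reply,
# not the real HDFS balancer implementation. All names are hypothetical.

def classify_datanodes(nodes, threshold=10.0):
    """nodes maps a DataNode name to (dfs_used_bytes, capacity_bytes)."""
    total_used = sum(used for used, _ in nodes.values())
    total_capacity = sum(cap for _, cap in nodes.values())
    # Assumption: cluster average = total used / total capacity, in percent.
    average = 100.0 * total_used / total_capacity
    result = {}
    for name, (used, cap) in nodes.items():
        utilization = 100.0 * used / cap
        if utilization > average + threshold:
            result[name] = "over-utilized"
        elif utilization < average - threshold:
            result[name] = "under-utilized"
        else:
            result[name] = "balanced"
    return average, result


TB = 1024 ** 4
# Three DataNodes with different disk capacities (made-up numbers).
cluster = {
    "dn1": (8 * TB, 10 * TB),   # 80% used
    "dn2": (9 * TB, 20 * TB),   # 45% used
    "dn3": (6 * TB, 30 * TB),   # 20% used
}
average, status = classify_datanodes(cluster)
for name, state in status.items():
    print(name, state)
```

Because the comparison is made on percentages, a small disk and a large disk are treated alike: each is judged by how full it is, not by how many bytes it holds. The threshold itself can be changed with the `-threshold` option of `hdfs balancer`.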

Created 08-24-2023 03:33 AM

@Crash You can also set up balancing at the disk level for the DataNodes. Refer to: https://docs.cloudera.com/documentation/enterprise/5-16-x/topics/admin_dn_storage_balancing.html

Created 08-24-2023 08:13 PM

The size of HDFS data blocks should be the same on each node, even if the disk space of each node is different, right?

Created 08-25-2023 06:04 AM

Generally, the data block size is 128 MB on all DataNodes. If you have small files, however, you may see smaller blocks on some DataNodes as well. DataNodes with different disk capacities will therefore hold different numbers of blocks, and balancing is driven by the difference in DFS usage, not by the difference in block count.
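
As a rough illustration of why block count and DFS usage can diverge, the Python sketch below splits a file into blocks the way the reply describes. The helper `block_sizes` and the file sizes are hypothetical, and the 128 MB figure is the one quoted above.

```python
# Illustrative arithmetic only; block_sizes is a hypothetical helper,
# not part of any HDFS API.

MB = 1024 ** 2
BLOCK_SIZE = 128 * MB  # block size mentioned in the reply


def block_sizes(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of file_size bytes is split into."""
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The final block holds only the leftover bytes.
        sizes.append(remainder)
    return sizes


large = block_sizes(300 * MB)  # two full blocks plus one 44 MB block
small = block_sizes(5 * MB)    # a single 5 MB block

print([s // MB for s in large])
print([s // MB for s in small])
```

A DataNode holding many small files can therefore report a high block count while using little space, which is why the balancer looks at DFS usage instead.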

Created 08-31-2023 10:32 PM

@Crash, have the replies helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager