Support Questions

Can you create a Hive table in ORC format directly from Spark SQL?

Labels: Apache Hive, Apache Spark

Created 05-31-2016 07:50 PM

I've done this with sqlContext.sql("create table...") followed by sqlContext.sql("insert into"), but dataframe.write.orc produces ORC files that Hive does not see as a table.

What are all the ways to work with ORC from Spark?
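When dataframe.write.orc has already produced ORC files, one common way to make them visible to Hive is to declare an EXTERNAL table stored as ORC whose LOCATION is the directory that was written. The sketch below only builds that HiveQL statement; the helper `OrcDdl.externalOrcTable`, the table name `db1.sample_orc` and the path `/tmp/orc/sample` are hypothetical, and it assumes you list the columns in the same order, with the same names, as the DataFrame's schema.

```scala
// Hypothetical helper (not part of Spark or Hive): builds HiveQL DDL for an
// EXTERNAL table over ORC files that df.write.orc(location) already produced.
object OrcDdl {
  /** columns are (name, Hive type) pairs, e.g. "id" -> "INT", in DataFrame schema order. */
  def externalOrcTable(table: String,
                       columns: Seq[(String, String)],
                       location: String): String = {
    require(columns.nonEmpty, "at least one column is required")
    // Backtick-quote column names so reserved words (e.g. `date`) still parse
    val cols = columns.map { case (name, hiveType) => s"`$name` $hiveType" }.mkString(", ")
    s"CREATE EXTERNAL TABLE IF NOT EXISTS $table ($cols) STORED AS ORC LOCATION '$location'"
  }
}

object OrcDdlExample {
  def main(args: Array[String]): Unit = {
    // Assumed Spark usage (not executed here):
    //   df.write.orc("/tmp/orc/sample")
    //   hiveContext.sql(OrcDdl.externalOrcTable("db1.sample_orc", cols, "/tmp/orc/sample"))
    val ddl = OrcDdl.externalOrcTable(
      "db1.sample_orc", Seq("id" -> "INT", "name" -> "STRING"), "/tmp/orc/sample")
    println(ddl)
  }
}
```

Because the table is EXTERNAL, dropping it in Hive leaves the ORC files in place. In Spark 2.x the column list could be derived from the DataFrame itself, e.g. `df.schema.fields.map(f => f.name -> f.dataType.catalogString)`, rather than typed by hand.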

Created 05-31-2016 07:56 PM

Have you tried this syntax?

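import org.apache.spark.sql.SaveMode

// objHiveContext is an existing HiveContext; sql() already returns a DataFrame, so toDF() is not needed
val dfTable = objHiveContext.sql("select * from sample")

dfTable.write.format("orc").mode(SaveMode.Overwrite).saveAsTable("db1.test1")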

Created 05-31-2016 08:21 PM

@Jitendra Yadav, that worked for me in Zeppelin, and the data looks good.

Created 06-08-2016 08:49 AM

To answer your question, "What are all the ways to work with ORC from Spark?": I use spark-sql and have created ORC tables, as well as tables in other formats, without any issues.

Created on 06-15-2016 07:46 AM - edited 08-19-2019 04:11 AM

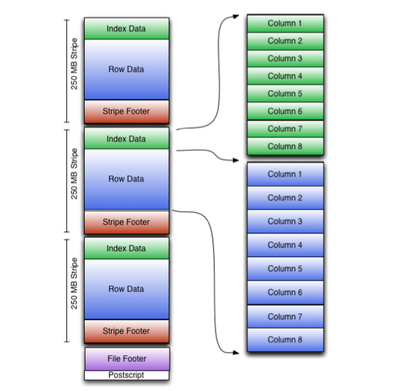

The Optimized Row Columnar (ORC) file format provides a highly efficient way to store Hive data.

An ORC file stores groups of rows called stripes, along with auxiliary information in a file footer. It is simply a storage format, independent of whether Hive or Spark reads and writes it.
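To make the "stripes plus footer" layout a little more concrete, here is a minimal sketch of how a reader finds its way into an ORC file, based on the ORC specification: the file begins with the 3-byte magic "ORC", and its final byte holds the length of the PostScript, which sits just before that byte and records where the file footer (stripe list, schema, statistics) is. The object name `OrcTail` is hypothetical, and the sketch only inspects raw bytes; it does not decode the protobuf-encoded PostScript or footer.

```scala
import java.nio.charset.StandardCharsets

// Hypothetical helper illustrating the ORC file envelope described in the ORC spec.
object OrcTail {
  private val Magic: Array[Byte] = "ORC".getBytes(StandardCharsets.US_ASCII)

  /** True if the bytes begin with the ORC magic header "ORC". */
  def hasOrcMagic(bytes: Array[Byte]): Boolean =
    bytes.length >= Magic.length && bytes.take(Magic.length).sameElements(Magic)

  /** PostScript length, stored as an unsigned value in the file's last byte. */
  def postScriptLength(bytes: Array[Byte]): Int = {
    require(bytes.nonEmpty, "empty file")
    bytes.last & 0xff // mask so lengths 128..255 are not read as negative
  }
}
```

On a real file you could pass `java.nio.file.Files.readAllBytes(path)`, although reading the whole file just to look at its ends is wasteful. For real work, the orc-core library's `OrcFile.createReader(...)` parses the tail for you and exposes the stripes through `Reader.getStripes()`.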