Cannot convert CHOICE, type must be explicit

- Labels: Apache NiFi

Hi all

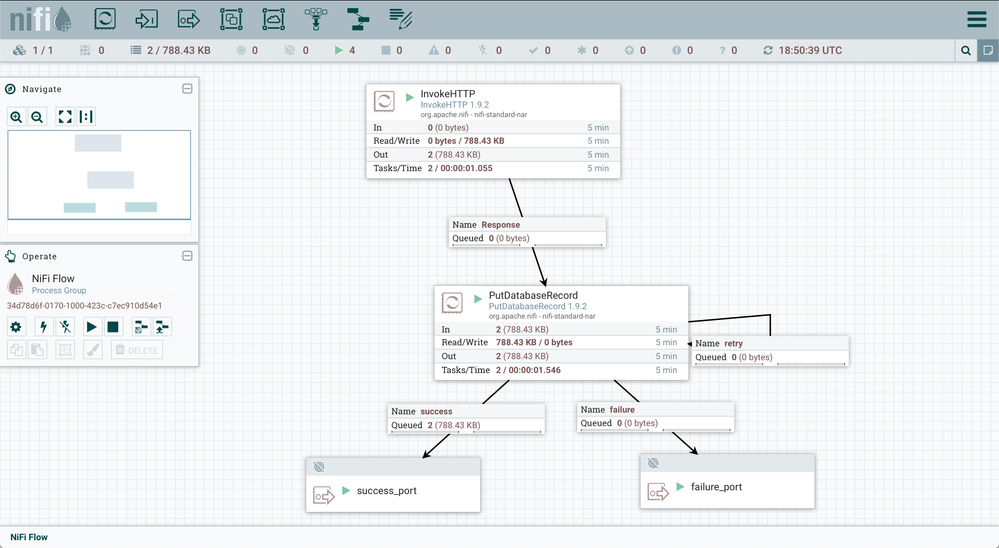

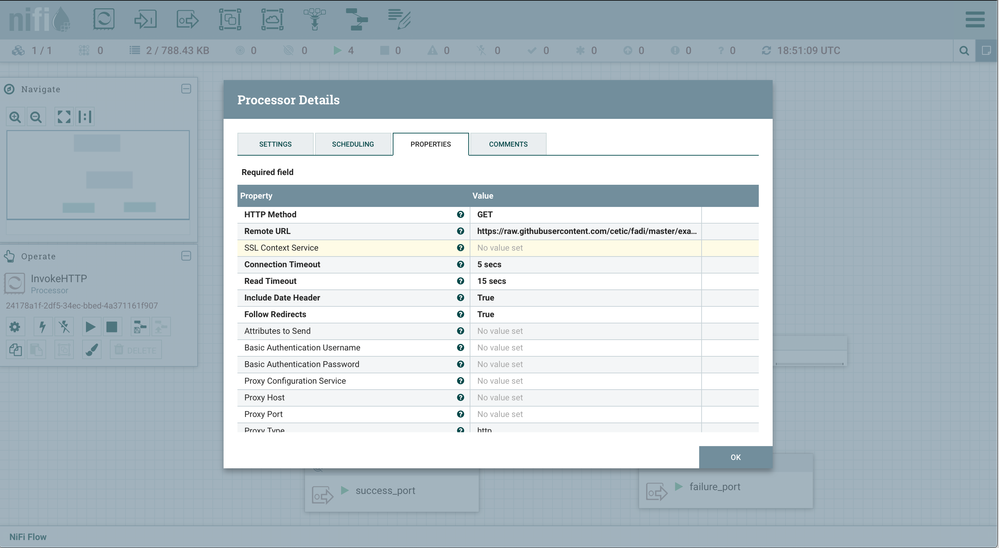

I have the following NiFi template, which ingests data from a CSV file and puts it in a database.

The template is available here

https://github.com/cetic/fadi/blob/master/examples/basic/basic_example_final_template.xml

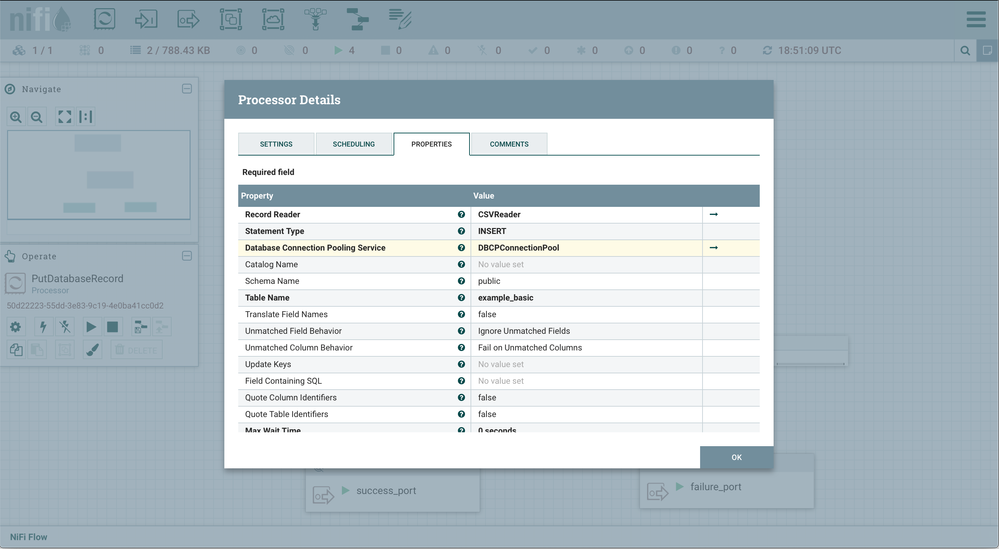

The template is composed of two processors: InvokeHTTP and PutDatabaseRecord.

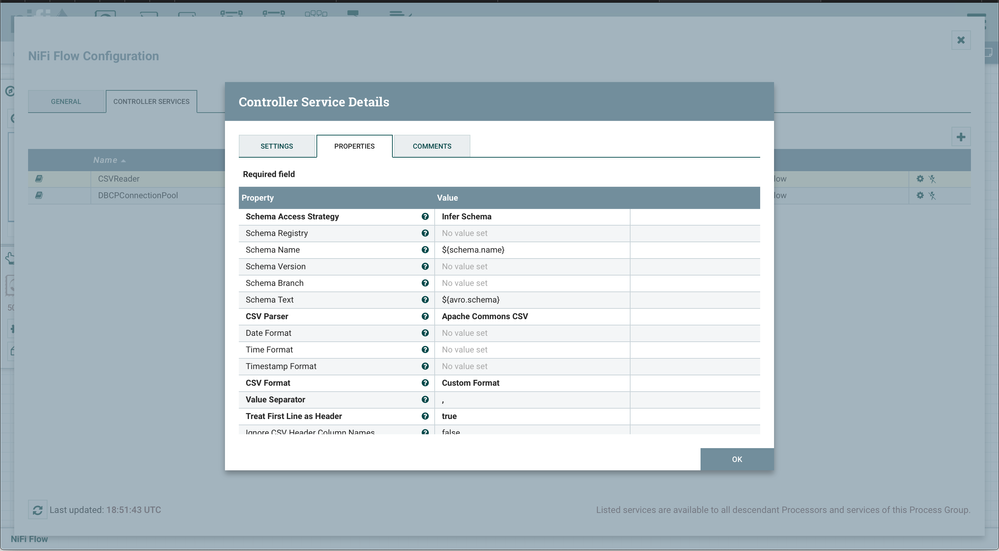

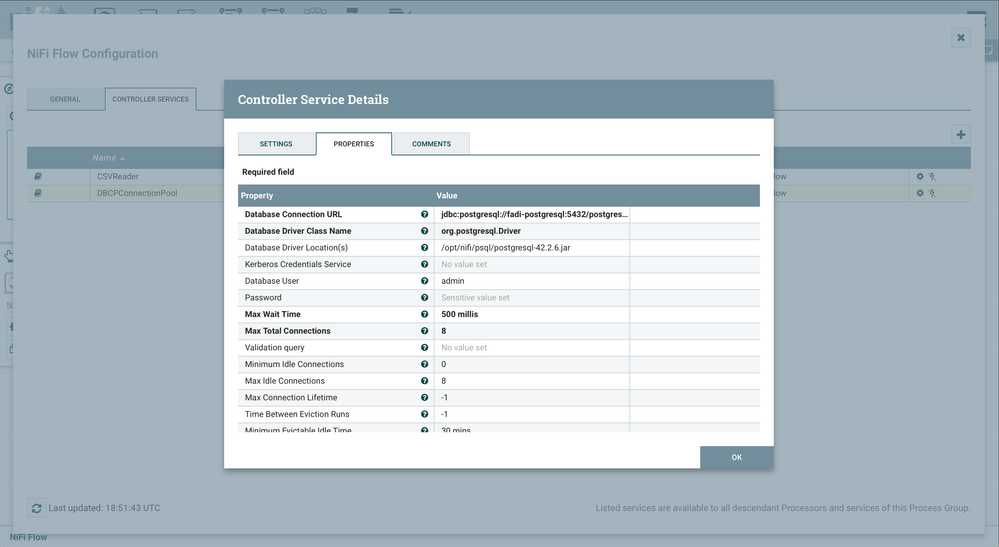

It also has two controller services: CSVReader and DBCPConnectionPool.

The complete explanation of this small example is available here

https://github.com/cetic/fadi/blob/master/USERGUIDE.md#3-ingest-measurements

The csv file is here

https://raw.githubusercontent.com/cetic/fadi/master/examples/basic/sample_data.csv

The table creation is:

    CREATE TABLE example_basic (
        measure_ts TIMESTAMP NOT NULL,
        temperature FLOAT (50)
    );

Up to Apache NiFi 1.9.2, everything works as expected!

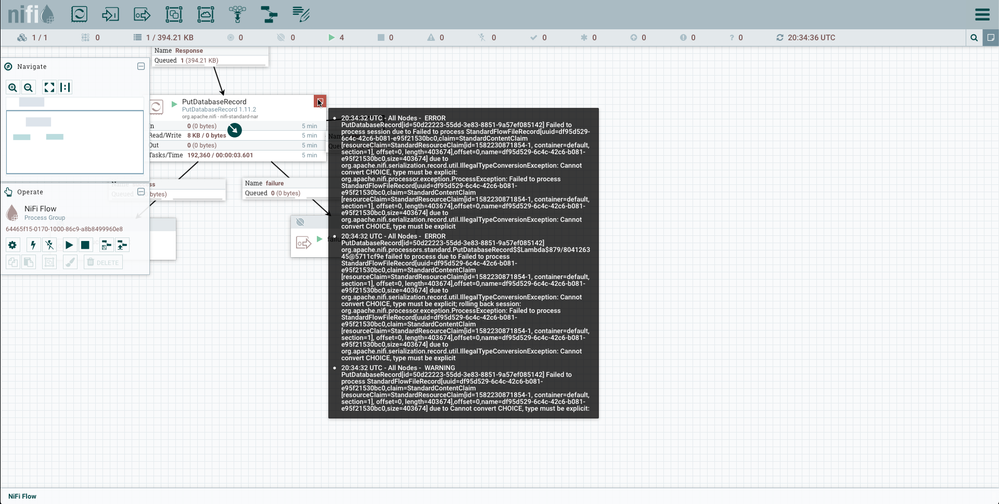

Since Apache NiFi 1.10.0, I get an error in the PutDatabaseRecord processor:

"org.apache.nifi.serialization.record.util.IllegalTypeConversionException: Cannot convert CHOICE, type must be explicit"

It seems to be related to this https://github.com/apache/nifi/blob/master/nifi-commons/nifi-record/src/main/java/org/apache/nifi/se...

Any idea how I can edit the template to fix this error?

Created 02-25-2020 06:01 AM

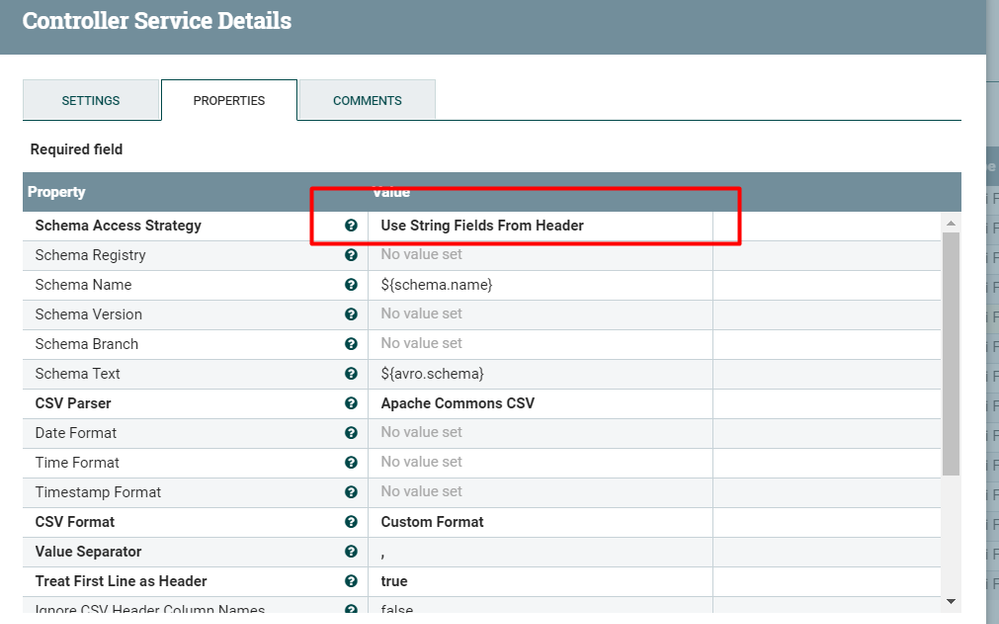

The solution is to change the Schema Access Strategy of the CSVReader controller service to "Use String Fields From Header".
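As a rough illustration of what that setting means, here is a minimal Python sketch. It is only an analogy, not NiFi's implementation: every CSV field is read as a plain string, named after the header row, and the conversion to the column types of `example_basic` happens in a separate, explicit step. The sample rows and the helper names `read_string_fields` and `to_table_types` are made up for this example; the real `sample_data.csv` may look different.

```python
import csv
import io
from datetime import datetime

# Hypothetical rows for illustration only; the real sample_data.csv may differ.
SAMPLE_CSV = """measure_ts,temperature
2019-06-23 14:05:03.503,22.5
2019-06-23 14:05:04.503,23
"""


def read_string_fields(text):
    """Read CSV rows, keeping every field as a string keyed by its header name."""
    return list(csv.DictReader(io.StringIO(text)))


def to_table_types(row):
    """Convert one all-string row to the column types of example_basic."""
    return {
        # TIMESTAMP column: parse the string explicitly.
        "measure_ts": datetime.strptime(row["measure_ts"], "%Y-%m-%d %H:%M:%S.%f"),
        # FLOAT column: "23" and "22.5" both become floats, so no type ambiguity remains.
        "temperature": float(row["temperature"]),
    }


rows = read_string_fields(SAMPLE_CSV)
typed = [to_table_types(r) for r in rows]

for record in typed:
    print(record["measure_ts"].isoformat(), record["temperature"])
```

The point of the sketch is that the reader never has to guess a type per field: values stay strings until something that knows the target column types converts them.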

Created 02-20-2020 01:41 PM

I tried updating the Schema Text of the CSVReader with the following content:

    {
      "type" : "record",
      "name" : "userInfo",
      "namespace" : "my.example",
      "fields" : [
        {"name" : "measure_ts", "type" : {"type" : "long", "logicalType" : "timestamp-millis"}},
        {"name" : "temperature", "type" : "long"}
      ]
    }

but I still get the same error!
