Cannot start NameNode service on the master node

Labels: Apache Ambari

Created on 04-09-2018 12:51 PM - edited 08-17-2019 08:54 PM


I created a cluster using Ambari. The problem is that most of the services are marked in red. During installation, the Solr setup failed, which aborted the remaining setup jobs.

Now I am trying to install the services manually from the OpenStack UI.

For example, I tried to start NameNode/HDFS on the master node, but it fails with the message below. What is the correct way to re-install the services? Is there a preferred installation sequence? Or is it better to reset Ambari and start from scratch? (I hope this option can be avoided.) Attached is a screenshot of the state of the services on the master node.

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 420, in <module>
    NameNode().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 101, in start
    upgrade_suspended=params.upgrade_suspended, env=env)
  File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
    return fn(*args, **kwargs)
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_namenode.py", line 156, in namenode
    create_log_dir=True
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 269, in service
    Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run
    tries=self.resource.tries, try_sleep=self.resource.try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call
    raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out

stdout: /var/lib/ambari-agent/data/output-152.txt
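A note on reading the traceback: it ends in `checked_call`, which raises `Fail` when the command exits non-zero. The `call[...]` entries in the agent log only report the exit code (for example `call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')`) and execution continues, so those lines are not the failure. Below is a minimal Python 3 sketch of that distinction. It is a simplified stand-in built on `subprocess`; the names `Fail`, `call`, and `checked_call` mirror the ones in the traceback, but this is not Ambari's implementation.

```python
import subprocess
import sys


class Fail(Exception):
    """Illustrative stand-in for resource_management.core.exceptions.Fail."""


def call(command):
    """Run a command and return (exit_code, output) without raising."""
    proc = subprocess.run(command, stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, universal_newlines=True)
    return proc.returncode, proc.stdout.strip()


def checked_call(command):
    """Run a command and raise Fail if it exits non-zero."""
    code, out = call(command)
    if code != 0:
        raise Fail("Execution of %r returned %d. %s" % (command, code, out))
    return code, out


if __name__ == "__main__":
    # A throwaway command that prints a message and exits with status 1.
    failing = [sys.executable, "-c",
               "import sys; print('exist already'); sys.exit(1)"]
    print(call(failing))       # non-zero code is reported, nothing is raised
    try:
        checked_call(failing)  # same command, but now the failure propagates
    except Fail as exc:
        print("Fail:", exc)
```

Read this way, the only command that actually failed in this run is the `hadoop-daemon.sh ... start namenode` invocation named in the `Fail` message.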

2018-04-09 12:37:42,621 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2018-04-09 12:37:42,623 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2018-04-09 12:37:42,625 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2018-04-09 12:37:42,648 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2018-04-09 12:37:42,648 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2018-04-09 12:37:42,671 - checked_call returned (0, '')

2018-04-09 12:37:42,671 - Ensuring that hadoop has the correct symlink structure

2018-04-09 12:37:42,672 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2018-04-09 12:37:42,814 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2018-04-09 12:37:42,816 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2018-04-09 12:37:42,818 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2018-04-09 12:37:42,841 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2018-04-09 12:37:42,841 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2018-04-09 12:37:42,863 - checked_call returned (0, '')

2018-04-09 12:37:42,864 - Ensuring that hadoop has the correct symlink structure

2018-04-09 12:37:42,864 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2018-04-09 12:37:42,865 - Group['livy'] {}

2018-04-09 12:37:42,866 - Group['spark'] {}

2018-04-09 12:37:42,867 - Group['zeppelin'] {}

2018-04-09 12:37:42,867 - Group['hadoop'] {}

2018-04-09 12:37:42,867 - Group['users'] {}

2018-04-09 12:37:42,867 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,868 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,869 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,869 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,870 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,870 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2018-04-09 12:37:42,871 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users']}

2018-04-09 12:37:42,871 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,872 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,872 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,873 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,874 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop']}

2018-04-09 12:37:42,874 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-04-09 12:37:42,876 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2018-04-09 12:37:42,880 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2018-04-09 12:37:42,881 - Group['hdfs'] {}

2018-04-09 12:37:42,881 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': [u'hadoop', u'hdfs']}

2018-04-09 12:37:42,881 - FS Type:

2018-04-09 12:37:42,882 - Directory['/etc/hadoop'] {'mode': 0755}

2018-04-09 12:37:42,895 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-04-09 12:37:42,896 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2018-04-09 12:37:42,909 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2018-04-09 12:37:42,917 - Skipping Execute[('setenforce', '0')] due to only_if

2018-04-09 12:37:42,917 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2018-04-09 12:37:42,919 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2018-04-09 12:37:42,920 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2018-04-09 12:37:42,924 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2018-04-09 12:37:42,925 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2018-04-09 12:37:42,926 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2018-04-09 12:37:42,937 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs', 'group': 'hadoop'}

2018-04-09 12:37:42,938 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2018-04-09 12:37:42,939 - File['/usr/hdp/current/hadoop-client/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2018-04-09 12:37:42,943 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2018-04-09 12:37:42,946 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2018-04-09 12:37:43,136 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2018-04-09 12:37:43,138 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2018-04-09 12:37:43,140 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2018-04-09 12:37:43,163 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2018-04-09 12:37:43,163 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2018-04-09 12:37:43,186 - checked_call returned (0, '')

2018-04-09 12:37:43,186 - Ensuring that hadoop has the correct symlink structure

2018-04-09 12:37:43,187 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2018-04-09 12:37:43,187 - Stack Feature Version Info: stack_version=2.5, version=2.5.3.0-37, current_cluster_version=2.5.3.0-37 -> 2.5.3.0-37

2018-04-09 12:37:43,202 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37

2018-04-09 12:37:43,204 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0

2018-04-09 12:37:43,206 - call[('ambari-python-wrap', u'/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2018-04-09 12:37:43,228 - call returned (1, '/etc/hadoop/2.5.3.0-37/0 exist already', '')

2018-04-09 12:37:43,229 - checked_call[('ambari-python-wrap', u'/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}

2018-04-09 12:37:43,251 - checked_call returned (0, '')

2018-04-09 12:37:43,251 - Ensuring that hadoop has the correct symlink structure

2018-04-09 12:37:43,251 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2018-04-09 12:37:43,263 - checked_call['rpm -q --queryformat '%{version}-%{release}' hdp-select | sed -e 's/\.el[0-9]//g''] {'stderr': -1}

2018-04-09 12:37:43,300 - checked_call returned (0, '2.5.3.0-37', '')

2018-04-09 12:37:43,304 - Directory['/etc/security/limits.d'] {'owner': 'root', 'create_parents': True, 'group': 'root'}

2018-04-09 12:37:43,310 - File['/etc/security/limits.d/hdfs.conf'] {'content': Template('hdfs.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2018-04-09 12:37:43,311 - XmlConfig['hadoop-policy.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2018-04-09 12:37:43,320 - Generating config: /usr/hdp/current/hadoop-client/conf/hadoop-policy.xml

2018-04-09 12:37:43,320 - File['/usr/hdp/current/hadoop-client/conf/hadoop-policy.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,329 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2018-04-09 12:37:43,337 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-client.xml

2018-04-09 12:37:43,337 - File['/usr/hdp/current/hadoop-client/conf/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,343 - Directory['/usr/hdp/current/hadoop-client/conf/secure'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'cd_access': 'a'}

2018-04-09 12:37:43,344 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf/secure', 'configuration_attributes': {}, 'configurations': ...}

2018-04-09 12:37:43,351 - Generating config: /usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml

2018-04-09 12:37:43,352 - File['/usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,358 - XmlConfig['ssl-server.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2018-04-09 12:37:43,365 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-server.xml

2018-04-09 12:37:43,366 - File['/usr/hdp/current/hadoop-client/conf/ssl-server.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,372 - XmlConfig['hdfs-site.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {u'final': {u'dfs.support.append': u'true', u'dfs.datanode.data.dir': u'true', u'dfs.namenode.http-address': u'true', u'dfs.namenode.name.dir': u'true', u'dfs.webhdfs.enabled': u'true', u'dfs.datanode.failed.volumes.tolerated': u'true'}}, 'configurations': ...}

2018-04-09 12:37:43,380 - Generating config: /usr/hdp/current/hadoop-client/conf/hdfs-site.xml

2018-04-09 12:37:43,380 - File['/usr/hdp/current/hadoop-client/conf/hdfs-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,423 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'mode': 0644, 'configuration_attributes': {u'final': {u'fs.defaultFS': u'true'}}, 'owner': 'hdfs', 'configurations': ...}

2018-04-09 12:37:43,431 - Generating config: /usr/hdp/current/hadoop-client/conf/core-site.xml

2018-04-09 12:37:43,431 - File['/usr/hdp/current/hadoop-client/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-04-09 12:37:43,453 - File['/usr/hdp/current/hadoop-client/conf/slaves'] {'content': Template('slaves.j2'), 'owner': 'hdfs'}

2018-04-09 12:37:43,456 - Directory['/hadoop/hdfs/namenode'] {'owner': 'hdfs', 'group': 'hadoop', 'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2018-04-09 12:37:43,459 - Called service start with upgrade_type: None

2018-04-09 12:37:43,459 - Ranger admin not installed

2018-04-09 12:37:43,459 - /hadoop/hdfs/namenode/namenode-formatted/ exists. Namenode DFS already formatted

2018-04-09 12:37:43,459 - Directory['/hadoop/hdfs/namenode/namenode-formatted/'] {'create_parents': True}

2018-04-09 12:37:43,461 - File['/etc/hadoop/conf/dfs.exclude'] {'owner': 'hdfs', 'content': Template('exclude_hosts_list.j2'), 'group': 'hadoop'}

2018-04-09 12:37:43,461 - Options for start command are:

2018-04-09 12:37:43,462 - Directory['/var/run/hadoop'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0755}

2018-04-09 12:37:43,462 - Changing owner for /var/run/hadoop from 0 to hdfs

2018-04-09 12:37:43,462 - Changing group for /var/run/hadoop from 0 to hadoop

2018-04-09 12:37:43,462 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'group': 'hadoop', 'create_parents': True}

2018-04-09 12:37:43,462 - Directory['/var/log/hadoop/hdfs'] {'owner': 'hdfs', 'group': 'hadoop', 'create_parents': True}

2018-04-09 12:37:43,463 - File['/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'] {'action': ['delete'], 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

2018-04-09 12:37:43,474 - Deleting File['/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid']

2018-04-09 12:37:43,474 - Execute['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode''] {'environment': {'HADOOP_LIBEXEC_DIR': '/usr/hdp/current/hadoop-client/libexec'}, 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

2018-04-09 12:37:47,563 - Execute['find /var/log/hadoop/hdfs -maxdepth 1 -type f -name '*' -exec echo '==> {} <==' \; -exec tail -n 40 {} \;'] {'logoutput': True, 'ignore_failures': True, 'user': 'hdfs'}
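The `find ... -exec tail -n 40` command above prints the last 40 lines of every file in `/var/log/hadoop/hdfs`, and much of that is INFO-level output. A rough Python 3 sketch for narrowing a log file down to its ERROR and FATAL lines follows. It assumes the timestamp, level, logger layout visible in the DataNode log lines; the helper name `error_lines` and the default level set are illustrative choices, not part of Ambari or Hadoop.

```python
import re
import sys

# Matches lines such as "2018-04-09 12:37:08,105 INFO ipc.Client ..."
# and captures the log level.
LOG_LINE = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3} (\w+) ")


def error_lines(lines, levels=("ERROR", "FATAL")):
    """Return the lines whose log level is one of `levels`."""
    hits = []
    for line in lines:
        match = LOG_LINE.match(line)
        if match and match.group(1) in levels:
            hits.append(line.rstrip("\n"))
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python scan_log.py /path/to/some.log
    with open(sys.argv[1], errors="replace") as handle:
        for hit in error_lines(handle):
            print(hit)
```

Continuation lines such as Java stack traces do not start with a timestamp, so this filter skips them; it only points to where in the file to start reading.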

==> /var/log/hadoop/hdfs/hadoop-hdfs-datanode-eureambarimaster1.local.eurecat.org.out <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/gc.log-201804091115 <==

Java HotSpot(TM) 64-Bit Server VM (25.77-b03) for linux-amd64 JRE (1.8.0_77-b03), built on Mar 20 2016 22:00:46 by "java_re" with gcc 4.3.0 20080428 (Red Hat 4.3.0-8)

Memory: 4k page, physical 16433116k(5901656k free), swap 0k(0k free)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:ParallelGCThreads=8 -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC

2018-04-09T11:15:34.918+0000: 1.124: [GC (Allocation Failure) 2018-04-09T11:15:34.918+0000: 1.124: [ParNew: 104960K->11724K(118016K), 0.0234380 secs] 104960K->11724K(1035520K), 0.0235235 secs] [Times: user=0.08 sys=0.00, real=0.02 secs]

Heap

par new generation total 118016K, used 26967K [0x00000000c0000000, 0x00000000c8000000, 0x00000000c8000000)

eden space 104960K, 14% used [0x00000000c0000000, 0x00000000c0ee2e80, 0x00000000c6680000)

from space 13056K, 89% us2018-04-09T11:15:30.500+0000: 3.407: [CMS-concurrent-abortable-preclean-start]

CMS: abort preclean due to time 2018-04-09T11:15:35.526+0000: 8.433: [CMS-concurrent-abortable-preclean: 1.539/5.025 secs] [Times: user=1.54 sys=0.00, real=5.02 secs]

2018-04-09T11:15:35.526+0000: 8.433: [GC (CMS Final Remark) [YG occupancy: 95843 K (184320 K)]2018-04-09T11:15:35.526+0000: 8.433: [Rescan (parallel) , 0.0064158 secs]2018-04-09T11:15:35.532+0000: 8.439: [weak refs processing, 0.0000165 secs]2018-04-09T11:15:35.532+0000: 8.439: [class unloading, 0.0036592 secs]2018-04-09T11:15:35.536+0000: 8.443: [scrub symbol table, 0.0026185 secs]2018-04-09T11:15:35.539+0000: 8.446: [scrub string table, 0.0005247 secs][1 CMS-remark: 0K(843776K)] 95843K(1028096K), 0.0137909 secs] [Times: user=0.03 sys=0.00, real=0.02 secs]

2018-04-09T11:15:35.540+0000: 8.447: [CMS-concurrent-sweep-start]

2018-04-09T11:15:35.540+0000: 8.447: [CMS-concurrent-sweep: 0.000/0.000 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

2018-04-09T11:15:35.540+0000: 8.447: [CMS-concurrent-reset-start]

2018-04-09T11:15:35.545+0000: 8.452: [CMS-concurrent-reset: 0.005/0.005 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

2018-04-09T11:43:38.920+0000: 1691.827: [GC (Allocation Failure) 2018-04-09T11:43:38.920+0000: 1691.827: [ParNew: 177628K->14557K(184320K), 0.0272813 secs] 177628K->19448K(1028096K), 0.0273847 secs] [Times: user=0.08 sys=0.00, real=0.03 secs]

==> /var/log/hadoop/hdfs/hadoop-hdfs-datanode-eureambarimaster1.local.eurecat.org.log <==

2018-04-09 12:37:08,105 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 4 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:09,106 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 5 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:10,107 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 6 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:11,107 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 7 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:12,108 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 8 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:13,109 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 9 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:14,109 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 10 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:15,110 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 11 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:16,111 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 12 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:17,112 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 13 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:18,112 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 14 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:19,113 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 15 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:20,114 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 16 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:21,114 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 17 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:22,115 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 18 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:23,116 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 19 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:24,116 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 20 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:25,117 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 21 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:26,118 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 22 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:27,118 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 23 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:28,119 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 24 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:29,120 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 25 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:30,120 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 26 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:31,121 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 27 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:32,122 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 28 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:33,122 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 29 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:34,123 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 30 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:35,124 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 31 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:36,125 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 32 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:37,125 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 33 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:38,126 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 34 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:39,127 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 35 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:40,127 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 36 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:41,128 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 37 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:42,129 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 38 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:43,129 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 39 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:44,130 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 40 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:45,131 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 41 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:46,131 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 42 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

2018-04-09 12:37:47,132 INFO ipc.Client (Client.java:handleConnectionFailure(904)) - Retrying connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020. Already tried 43 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=50, sleepTime=1000 MILLISECONDS)

==> /var/log/hadoop/hdfs/SecurityAuth.audit <==

==> /var/log/hadoop/hdfs/hdfs-audit.log <==

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.log <==

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1754)

Caused by: java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:958)

... 8 more

2018-04-09 12:37:44,759 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(211)) - Stopping NameNode metrics system...

2018-04-09 12:37:44,760 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:publishMetricsFromQueue(141)) - timeline thread interrupted.

2018-04-09 12:37:44,760 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:stop(217)) - NameNode metrics system stopped.

2018-04-09 12:37:44,760 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:run(416)) - Closing HadoopTimelineMetricSink. Flushing metrics to collector...

2018-04-09 12:37:44,761 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:shutdown(606)) - NameNode metrics system shutdown complete.

2018-04-09 12:37:44,761 ERROR namenode.NameNode (NameNode.java:main(1759)) - Failed to start namenode.

java.net.BindException: Port in use: eureambarimaster1.local.eurecat.org:50070

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:963)

at org.apache.hadoop.http.HttpServer2.start(HttpServer2.java:900)

at org.apache.hadoop.hdfs.server.namenode.NameNodeHttpServer.start(NameNodeHttpServer.java:170)

at org.apache.hadoop.hdfs.server.namenode.NameNode.startHttpServer(NameNode.java:933)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:746)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:992)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:976)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1686)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1754)

Caused by: java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)

at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:958)

... 8 more

2018-04-09 12:37:44,762 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 1

2018-04-09 12:37:44,763 INFO namenode.NameNode (LogAdapter.java:info(47)) - SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at eureambarimaster1.local.eurecat.org/172.20.61.91

************************************************************/

==> /var/log/hadoop/hdfs/gc.log-201804091136 <==

Java HotSpot(TM) 64-Bit Server VM (25.77-b03) for linux-amd64 JRE (1.8.0_77-b03), built on Mar 20 2016 22:00:46 by "java_re" with gcc 4.3.0 20080428 (Red Hat 4.3.0-8)

Memory: 4k page, physical 16433116k(5854524k free), swap 0k(0k free)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:ParallelGCThreads=8 -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC

2018-04-09T11:36:21.937+0000: 1.108: [GC (GCLocker Initiated GC) 2018-04-09T11:36:21.937+0000: 1.108: [ParNew: 104960K->11695K(118016K), 0.0129496 secs] 104960K->11695K(1035520K), 0.0130306 secs] [Times: user=0.05 sys=0.00, real=0.01 secs]

Heap

par new generation total 118016K, used 26938K [0x00000000c0000000, 0x00000000c8000000, 0x00000000c8000000)

eden space 104960K, 14% used [0x00000000c0000000, 0x00000000c0ee2cb8, 0x00000000c6680000)

from space 13056K, 89% used [0x00000000c7340000, 0x00000000c7eabf10, 0x00000000c8000000)

to space 13056K, 0% used [0x00000000c6680000, 0x00000000c6680000, 0x00000000c7340000)

concurrent mark-sweep generation total 917504K, used 0K [0x00000000c8000000, 0x0000000100000000, 0x0000000100000000)

Metaspace used 16841K, capacity 17062K, committed 17280K, reserved 1064960K

class space used 2046K, capacity 2161K, committed 2176K, reserved 1048576K

==> /var/log/hadoop/hdfs/gc.log-201804091156 <==

Java HotSpot(TM) 64-Bit Server VM (25.77-b03) for linux-amd64 JRE (1.8.0_77-b03), built on Mar 20 2016 22:00:46 by "java_re" with gcc 4.3.0 20080428 (Red Hat 4.3.0-8)

Memory: 4k page, physical 16433116k(4733640k free), swap 0k(0k free)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:ParallelGCThreads=8 -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC

2018-04-09T11:56:45.167+0000: 1.128: [GC (Allocation Failure) 2018-04-09T11:56:45.167+0000: 1.128: [ParNew: 104960K->11708K(118016K), 0.0150730 secs] 104960K->11708K(1035520K), 0.0151584 secs] [Times: user=0.06 sys=0.01, real=0.01 secs]

Heap

par new generation total 118016K, used 26952K [0x00000000c0000000, 0x00000000c8000000, 0x00000000c8000000)

eden space 104960K, 14% used [0x00000000c0000000, 0x00000000c0ee2e90, 0x00000000c6680000)

from space 13056K, 89% used [0x00000000c7340000, 0x00000000c7eaf1c0, 0x00000000c8000000)

to space 13056K, 0% used [0x00000000c6680000, 0x00000000c6680000, 0x00000000c7340000)

concurrent mark-sweep generation total 917504K, used 0K [0x00000000c8000000, 0x0000000100000000, 0x0000000100000000)

Metaspace used 16837K, capacity 17062K, committed 17280K, reserved 1064960K

class space used 2046K, capacity 2161K, committed 2176K, reserved 1048576K

==> /var/log/hadoop/hdfs/gc.log-201804091217 <==

Java HotSpot(TM) 64-Bit Server VM (25.77-b03) for linux-amd64 JRE (1.8.0_77-b03), built on Mar 20 2016 22:00:46 by "java_re" with gcc 4.3.0 20080428 (Red Hat 4.3.0-8)

Memory: 4k page, physical 16433116k(4664928k free), swap 0k(0k free)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:ParallelGCThreads=8 -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC

2018-04-09T12:17:25.529+0000: 1.117: [GC (GCLocker Initiated GC) 2018-04-09T12:17:25.529+0000: 1.117: [ParNew: 104960K->11697K(118016K), 0.0123646 secs] 104960K->11697K(1035520K), 0.0124491 secs] [Times: user=0.05 sys=0.00, real=0.01 secs]

Heap

par new generation total 118016K, used 26940K [0x00000000c0000000, 0x00000000c8000000, 0x00000000c8000000)

eden space 104960K, 14% used [0x00000000c0000000, 0x00000000c0ee2e08, 0x00000000c6680000)

from space 13056K, 89% used [0x00000000c7340000, 0x00000000c7eac5f0, 0x00000000c8000000)

to space 13056K, 0% used [0x00000000c6680000, 0x00000000c6680000, 0x00000000c7340000)

concurrent mark-sweep generation total 917504K, used 0K [0x00000000c8000000, 0x0000000100000000, 0x0000000100000000)

Metaspace used 16836K, capacity 17062K, committed 17280K, reserved 1064960K

class space used 2046K, capacity 2161K, committed 2176K, reserved 1048576K

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out.4 <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out.3 <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out.2 <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out.1 <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/hadoop-hdfs-namenode-eureambarimaster1.local.eurecat.org.out <==

ulimit -a for user hdfs

core file size (blocks, -c) unlimited

data seg size (kbytes, -d) unlimited

scheduling priority (-e) 0

file size (blocks, -f) unlimited

pending signals (-i) 64057

max locked memory (kbytes, -l) 64

max memory size (kbytes, -m) unlimited

open files (-n) 128000

pipe size (512 bytes, -p) 8

POSIX message queues (bytes, -q) 819200

real-time priority (-r) 0

stack size (kbytes, -s) 8192

cpu time (seconds, -t) unlimited

max user processes (-u) 65536

virtual memory (kbytes, -v) unlimited

file locks (-x) unlimited

==> /var/log/hadoop/hdfs/gc.log-201804091237 <==

Java HotSpot(TM) 64-Bit Server VM (25.77-b03) for linux-amd64 JRE (1.8.0_77-b03), built on Mar 20 2016 22:00:46 by "java_re" with gcc 4.3.0 20080428 (Red Hat 4.3.0-8)

Memory: 4k page, physical 16433116k(4992540k free), swap 0k(0k free)

CommandLine flags: -XX:CMSInitiatingOccupancyFraction=70 -XX:ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:ParallelGCThreads=8 -XX:+PrintGC -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC

2018-04-09T12:37:44.696+0000: 1.115: [GC (GCLocker Initiated GC) 2018-04-09T12:37:44.696+0000: 1.115: [ParNew: 104960K->11698K(118016K), 0.0134024 secs] 104960K->11698K(1035520K), 0.0134976 secs] [Times: user=0.06 sys=0.00, real=0.02 secs]

Heap

par new generation total 118016K, used 26942K [0x00000000c0000000, 0x00000000c8000000, 0x00000000c8000000)

eden space 104960K, 14% used [0x00000000c0000000, 0x00000000c0ee2ee8, 0x00000000c6680000)

from space 13056K, 89% used [0x00000000c7340000, 0x00000000c7eac968, 0x00000000c8000000)

to space 13056K, 0% used [0x00000000c6680000, 0x00000000c6680000, 0x00000000c7340000)

concurrent mark-sweep generation total 917504K, used 0K [0x00000000c8000000, 0x0000000100000000, 0x0000000100000000)

Metaspace used 16831K, capacity 17062K, committed 17280K, reserved 1064960K

class space used 2046K, capacity 2161K, committed 2176K, reserved 1048576K

Command failed after 1 tries

Created 04-09-2018 12:57 PM

It looks like this is failing because the HDFS service is not starting. Based on the error, NameNode port 50070 appears to be in conflict, so the NameNode cannot start.

2018-04-09 12:37:44,761 ERROR namenode.NameNode (NameNode.java:main(1759)) - Failed to start namenode.

java.net.BindException: Port in use: xxxxxxmaster1.local.xxxxxx.org:50070

at org.apache.hadoop.http.HttpServer2.openListeners(HttpServer2.java:963)

...

Caused by: java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method)

at sun.nio.ch.Net.bind(Net.java:433)

at sun.nio.ch.Net.bind(Net.java:425)

So please find the process that is listening on port 50070, kill it, and then try to start the HDFS service again.

# netstat -tnlpa | grep 50070

Then kill the process ID returned by the above command:

# kill -9 $PID

Also, please check that you have defined the correct hostname (xxxxxxmaster1.local.xxxxxx.org) for your NameNode and that ports 8020 and 50070 are free on the host where you are trying to run the NameNode process.
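Since the trace shows "Port in use" wrapping a "Cannot assign requested address" cause, it may help to test the bind directly. The following is a minimal Python sketch, not part of Ambari or Hadoop. It resolves a hostname, tries to bind a TCP socket to the resolved address and port, and reports whether the port is taken (EADDRINUSE) or the address is not configured on any local interface (EADDRNOTAVAIL, the errno behind "Cannot assign requested address"). The function name `probe_bind` and its return labels are made up for illustration.

```python
import errno
import socket


def probe_bind(host, port):
    """Try to bind a TCP socket to (host, port) and classify the outcome.

    Hypothetical helper for illustration; returns a (label, detail) tuple.
    """
    try:
        # Resolve the name the same way a local lookup would (hosts file, DNS).
        addr = socket.gethostbyname(host)
    except socket.gaierror as exc:
        return "unresolvable", str(exc)

    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((addr, port))
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            # Another socket already holds this address and port.
            return "in_use", addr
        if exc.errno == errno.EADDRNOTAVAIL:
            # The resolved IP is not assigned to any local interface.
            return "not_local", addr
        return "error", "%s (%s)" % (addr, exc)
    finally:
        sock.close()
    return "free", addr


# Example call (hostname as masked in this thread):
# print(probe_bind("xxxxxxmaster1.local.xxxxxx.org", 50070))
```

Run it on the NameNode host as a user allowed to bind the port. A `not_local` result would suggest checking how the hostname resolves on that host (for example in /etc/hosts) rather than looking for a process on the port.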

Created 04-09-2018 02:00 PM

I checked ports 8020 and 50070. No processes are running there, and the hostname is correct. I think it might indeed be related to memory settings. I used the defaults. Is there any recommendation about this?

Created 04-09-2018 03:59 PM

Indeed, HDFS cannot be started. I increased the Java heap size of the NameNode and DataNode, but the issue persists. Also, no processes are running on ports 8020 and 50070.

I do not really understand why I get the error "java.net.BindException: Port in use: eureambarimaster1.local.eurecat.org:50070" while "netstat -tnlpa | grep 50070" shows no process running on this port.

Created 04-09-2018 04:03 PM

Also, when I try to start the SNameNode on a slave, I get this error: "Failed to connect to server: eureambarimaster1.local.eurecat.org/172.20.61.91:8020: retries get failed due to exceeded maximum allowed retries number: 50 java.net.ConnectException: Connection refused". Port 8020 is open and the hostname is correct...

Created 04-09-2018 01:03 PM

Your problem seems to be memory related; the log clearly indicates it:

ErrorFile=/var/log/hadoop/hdfs/hs_err_pid%p.log -XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 -XX:NewSize=134217728 -XX:OldPLABSize=16 -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError="/usr/hdp/current/hadoop-hdfs-namenode/bin/kill-name-node" -XX:OnOutOfMemoryError=
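To read those flags more easily, the byte values can be converted to MiB. This is a small Python sketch, assuming the flags look like the ones in the gc.log headers posted earlier in the thread; the helper name `heap_flags_in_mib` is hypothetical.

```python
import re


def heap_flags_in_mib(command_line):
    """Return {flag name: size in MiB} for the heap-related -XX flags.

    Hypothetical helper; only looks at numeric -XX:Name=bytes flags.
    """
    wanted = ("InitialHeapSize", "MaxHeapSize", "NewSize", "MaxNewSize")
    sizes = {}
    # Match flags of the form -XX:Name=12345; boolean (+/-) flags are skipped.
    for name, value in re.findall(r"-XX:(\w+)=(\d+)", command_line):
        if name in wanted:
            sizes[name] = int(value) // (1024 * 1024)
    return sizes


# Flag values copied from the gc.log header in this thread.
flags = ("-XX:InitialHeapSize=1073741824 -XX:MaxHeapSize=1073741824 "
         "-XX:MaxNewSize=134217728 -XX:MaxTenuringThreshold=6 "
         "-XX:NewSize=134217728")
print(heap_flags_in_mib(flags))
```

For the values in this thread, that works out to a 1024 MiB initial and maximum heap and 128 MiB for the new generation.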

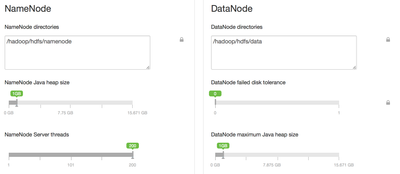

Can you share your memory settings? Ambari UI ---> HDFS ---> Configs: NameNode/DataNode Java heap sizes

Created on 04-09-2018 01:55 PM - edited 08-17-2019 08:54 PM

Please see the memory settings:

Created 04-09-2018 02:19 PM

I am running a single test node with the settings below. Try my settings to validate, but read the information below for better settings.

NameNode Java heap size = 3 GB

DataNode maximum Java heap size = 3 GB

Here is the official document for calculating your memory settings.

First download yarn-utils.py; check the link on this page, above Table 1.5. Download and unzip it into a temporary directory, then run, e.g.:

python yarn-utils.py -c 16 -m 64 -d 4 -k True

Hope that helps

Created 04-09-2018 04:21 PM

Can you try starting the components manually?

# su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start namenode"

# su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-datanode/../hadoop/sbin/hadoop-daemon.sh start datanode"

Validate that the port is not blocked by the firewall

# iptables -nvL

If you don't see TCP ports 8020 and 50070, add them following this syntax:

# iptables -I INPUT 5 -p tcp --dport 50070 -j ACCEPT
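To confirm from another node whether the ports are reachable after changing the firewall rules, a quick connection test can help. This is a minimal Python 3 sketch with a hypothetical helper name, `probe_connect`; it only distinguishes an accepted connection, a refused one, and a timeout.

```python
import socket


def probe_connect(host, port, timeout=3.0):
    """Attempt a TCP connection and return 'open', 'refused', 'timeout' or an error string.

    Hypothetical helper for illustration only.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        sock.connect((host, port))
    except socket.timeout:
        # No answer at all within the timeout.
        return "timeout"
    except ConnectionRefusedError:
        # The host answered, but nothing accepted the connection.
        return "refused"
    except OSError as exc:
        # Name resolution failures, unreachable networks, and so on.
        return "error: %s" % exc
    finally:
        sock.close()
    return "open"


# Example calls from a slave node (hostname taken from this thread):
# print(probe_connect("eureambarimaster1.local.eurecat.org", 8020))
# print(probe_connect("eureambarimaster1.local.eurecat.org", 50070))
```

A `refused` result usually means the host is reachable but nothing is listening on the port (or a rule actively rejects the connection), while `timeout` is more typical of packets being silently dropped by a firewall.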

Can you restart the cluster? This looks like a bizarre case.

Please report back.

Created 04-09-2018 08:56 PM

I executed "iptables -I INPUT 5 -p tcp --dport 50070 -j ACCEPT" and "iptables -I INPUT 5 -p tcp --dport 8020 -j ACCEPT" on all nodes. Then I reset the cluster. It seems that the connection problem was solved, but now I get the following error (see below). When I run "sudo netstat -plnat | grep 50070" on "eureambarimaster1.local.eurecat.org", I get empty output.

2018-04-09 20:49:38,779 ERROR namenode.NameNode (NameNode.java:main(1759)) - Failed to start namenode.

java.net.BindException: Port in use: eureambarimaster1.local.eurecat.org:50070