Support Questions

- Cloudera Community

- Support

- Support Questions

- Cloudbreak failing to create cluster in AWS: Salt ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Cloudbreak failing to create cluster in AWS: Salt Orchestrator Failing

- Labels:

-

Hortonworks Cloudbreak

Created 10-19-2016 08:58 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is a single node "cluster" with most of the HDP stack installed on a single 32GB node. I am able to log into the node via SSH and into Ambari UI. I can see that all of the service are up and running and I am able to use service like Hive, Hbase, Atlas, ect. The deployment seems to be successfull but Cloudbreak eventually shows the cluster creation attempt as failed. In the logs it trie sthe following 90 times:

2016-10-19 20:39:53,954 [containerBootstrapBuilderExecutor-9] call:68 WARN c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Orchestrator component SaltJobIdTracker failed to start, retrying [89/90] Elapsed time: 752 ms; Reason: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt, additional info: SaltJobIdTracker{saltJobRunner=HighStateChecker{BaseSaltJobRunner{target=[10.0.41.157], jid=JobId{jobId='20161019202401136474'}, jobState=IN_PROGRESS}}}

cloudbreak_1 | 2016-10-19 20:40:03,954 [containerBootstrapBuilderExecutor-9] isExitNeeded:87 DEBUG c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] exitCriteriaModel: ClusterDeletionBasedExitCriteriaModel{stackId=6, clusterId=6}, exitCriteria: com.sequenceiq.cloudbreak.core.bootstrap.service.ClusterDeletionBasedExitCriteria@25e33b4f

cloudbreak_1 | 2016-10-19 20:40:03,954 [containerBootstrapBuilderExecutor-9] isExitNeeded:19 DEBUG c.s.c.c.b.s.ClusterDeletionBasedExitCriteria - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Check isExitNeeded for model: ClusterDeletionBasedExitCriteriaModel{stackId=6, clusterId=6}

cloudbreak_1 | 2016-10-19 20:40:03,954 [containerBootstrapBuilderExecutor-9] isExitNeeded:90 DEBUG c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] isExitNeeded: false

cloudbreak_1 | 2016-10-19 20:40:03,955 [containerBootstrapBuilderExecutor-9] call:59 INFO c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Calling orchestrator bootstrap: SaltJobIdTracker, additional info: SaltJobIdTracker{saltJobRunner=HighStateChecker{BaseSaltJobRunner{target=[10.0.41.157], jid=JobId{jobId='20161019202401136474'}, jobState=IN_PROGRESS}}}

cloudbreak_1 | 2016-10-19 20:40:03,955 [containerBootstrapBuilderExecutor-9] call:43 INFO c.s.c.o.s.p.SaltJobIdTracker - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Job: 20161019202401136474 is running currently checking the current state.

cloudbreak_1 | 2016-10-19 20:40:04,830 [containerBootstrapBuilderExecutor-9] run:99 INFO c.s.c.o.s.c.SaltConnector - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Salt run response: RunningJobsResponse{result=[{20161019202401136474={Function=state.highstate, Returned=[], Target-type=glob, Running=[{ip-10-0-41-157.ec2.internal=27848}], User=saltuser, Arguments=[], Target=*}}]}

cloudbreak_1 | 2016-10-19 20:40:04,831 [containerBootstrapBuilderExecutor-9] jobIsRunning:124 INFO c.s.c.o.s.s.SaltStates - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Active salt jobs: RunningJobsResponse{result=[{20161019202401136474={Function=state.highstate, Returned=[], Target-type=glob, Running=[{ip-10-0-41-157.ec2.internal=27848}], User=saltuser, Arguments=[], Target=*}}]}

cloudbreak_1 | 2016-10-19 20:40:04,831 [containerBootstrapBuilderExecutor-9] checkIsFinished:66 INFO c.s.c.o.s.p.SaltJobIdTracker - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Job: 20161019202401136474 is running currently, waiting for next polling attempt.

cloudbreak_1 | 2016-10-19 20:40:04,831 [containerBootstrapBuilderExecutor-9] call:68 WARN c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Orchestrator component SaltJobIdTracker failed to start, retrying [90/90] Elapsed time: 876 ms; Reason: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt, additional info: SaltJobIdTracker{saltJobRunner=HighStateChecker{BaseSaltJobRunner{target=[10.0.41.157], jid=JobId{jobId='20161019202401136474'}, jobState=IN_PROGRESS}}}

After the 90th attempt:

cloudbreak_1 | 2016-10-19 20:40:04,831 [containerBootstrapBuilderExecutor-9] call:78 ERROR c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Orchestrator component failed to start in 90 attempts: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | 2016-10-19 20:40:04,846 [reactorDispatcher-89] executeRecipes:300 ERROR c.s.c.o.s.SaltOrchestrator - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Error occurred during recipe execution

cloudbreak_1 | java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at java.util.concurrent.FutureTask.report(FutureTask.java:122)

cloudbreak_1 | at java.util.concurrent.FutureTask.get(FutureTask.java:192)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.runNewService(SaltOrchestrator.java:274)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.executeRecipes(SaltOrchestrator.java:294)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.postInstallRecipes(SaltOrchestrator.java:233)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.OrchestratorRecipeExecutor.postInstall(OrchestratorRecipeExecutor.java:77)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.RecipeEngine.executePostInstall(RecipeEngine.java:94)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.AmbariClusterConnector.buildAmbariCluster(AmbariClusterConnector.java:223)

cloudbreak_1 | at com.sequenceiq.cloudbreak.core.cluster.AmbariClusterCreationService.buildAmbariCluster(AmbariClusterCreationService.java:27)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:35)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:18)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:317)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:310)

cloudbreak_1 | at reactor.bus.routing.ConsumerFilteringRouter.route(ConsumerFilteringRouter.java:72)

cloudbreak_1 | at reactor.bus.routing.TraceableDelegatingRouter.route(TraceableDelegatingRouter.java:51)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:591)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:63)

cloudbreak_1 | at reactor.core.dispatch.AbstractLifecycleDispatcher.route(AbstractLifecycleDispatcher.java:160)

cloudbreak_1 | at reactor.core.dispatch.MultiThreadDispatcher$MultiThreadTask.run(MultiThreadDispatcher.java:74)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

cloudbreak_1 | at java.lang.Thread.run(Thread.java:745)

cloudbreak_1 | Caused by: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.poller.SaltJobIdTracker.call(SaltJobIdTracker.java:54)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:60)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:14)

cloudbreak_1 | at java.util.concurrent.FutureTask.run(FutureTask.java:266)

cloudbreak_1 | ... 3 common frames omitted

cloudbreak_1 | 2016-10-19 20:40:04,847 [reactorDispatcher-89] buildAmbariCluster:238 ERROR c.s.c.s.c.f.AmbariClusterConnector - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] Error while building the Ambari cluster. Message com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt, throwable: {}

cloudbreak_1 | com.sequenceiq.cloudbreak.core.CloudbreakException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.OrchestratorRecipeExecutor.postInstall(OrchestratorRecipeExecutor.java:80)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.RecipeEngine.executePostInstall(RecipeEngine.java:94)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.AmbariClusterConnector.buildAmbariCluster(AmbariClusterConnector.java:223)

cloudbreak_1 | at com.sequenceiq.cloudbreak.core.cluster.AmbariClusterCreationService.buildAmbariCluster(AmbariClusterCreationService.java:27)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:35)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:18)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:317)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:310)

cloudbreak_1 | at reactor.bus.routing.ConsumerFilteringRouter.route(ConsumerFilteringRouter.java:72)

cloudbreak_1 | at reactor.bus.routing.TraceableDelegatingRouter.route(TraceableDelegatingRouter.java:51)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:591)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:63)

cloudbreak_1 | at reactor.core.dispatch.AbstractLifecycleDispatcher.route(AbstractLifecycleDispatcher.java:160)

cloudbreak_1 | at reactor.core.dispatch.MultiThreadDispatcher$MultiThreadTask.run(MultiThreadDispatcher.java:74)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

cloudbreak_1 | at java.lang.Thread.run(Thread.java:745)

cloudbreak_1 | Caused by: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.executeRecipes(SaltOrchestrator.java:301)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.postInstallRecipes(SaltOrchestrator.java:233)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.OrchestratorRecipeExecutor.postInstall(OrchestratorRecipeExecutor.java:77)

cloudbreak_1 | ... 16 common frames omitted

cloudbreak_1 | Caused by: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at java.util.concurrent.FutureTask.report(FutureTask.java:122)

cloudbreak_1 | at java.util.concurrent.FutureTask.get(FutureTask.java:192)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.runNewService(SaltOrchestrator.java:274)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.executeRecipes(SaltOrchestrator.java:294)

cloudbreak_1 | ... 18 common frames omitted

cloudbreak_1 | Caused by: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.poller.SaltJobIdTracker.call(SaltJobIdTracker.java:54)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:60)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:14)

cloudbreak_1 | at java.util.concurrent.FutureTask.run(FutureTask.java:266)

cloudbreak_1 | ... 3 common frames omitted

cloudbreak_1 | 2016-10-19 20:40:04,847 [reactorDispatcher-89] accept:70 DEBUG c.s.c.c.f.Flow2Handler - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:6] [name:credit-fraud-demo] flow control event arrived: key: INSTALLCLUSTERFAILED, flowid: ae4d5d90-b13d-4b82-a0d4-0b0a9c75f0ac, payload: com.sequenceiq.cloudbreak.reactor.api.event.cluster.InstallClusterFailed@3af981e

In Cloudbreak UI:

Ambari cluster could not be created. Reason: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161019202401136474'} is running currently, waiting for next polling attemptI am using the default fully open network config for aws.

Created 10-21-2016 11:01 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think I have found the issue. The debug: True is set in /etc/salt/master.d/custom.conf

.... rest_cherrypy: .... port: 3080 .... debug: True

Because of this flag the salt-api prints out more useful info for debugging, but it has the side effect that it will reload itself when the underlying code is changed: https://docs.saltstack.com/en/latest/ref/netapi/all/salt.netapi.rest_cherrypy.html

I saw the "Restarting because ..." messages in the Salt master log while the Ambari was installing the packages for HDP (e.g. in my case the email/mime/__init__.py was touched and triggered the restart, which was not handled very gracefully by cherrypy and caused the port collision.

2016-10-21 21:25:30,016 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Restarting because /usr/lib64/python2.6/email/mime/__init__.py changed.

2016-10-21 21:25:30,016 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Stopped thread 'Autoreloader'.

2016-10-21 21:25:30,017 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Bus STOPPING

2016-10-21 21:25:30,027 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE HTTP Server cherrypy._cpwsgi_server.CPWSGIServer(('0.0.0.0', 3080)) shut down

2

I was thinking about a workaround, but I was not able to figure out any what you can apply. I will try to make a fix for it.

Attila

Created 10-20-2016 07:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Cloudbreak runs a few post-install scripts after the installation is done. Can you check the /var/log/recipes/ directory to see if there is an error in one of those files? If you use a recipe it might fail as well.

Created 10-20-2016 02:19 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just tried a fresh deployment. I am defining a recipe but I don't see anything that references it in the logs. I also don't see a /var/log/recipes directory. Here is the doctor output:

[root@vvaks-cb cloudbreak-deployment]# DEBUG=1 cbd doctor [DEBUG] Use profile: Profile [DEBUG] Creating '.tmp' directory if not exist [DEBUG] Cloudbreak Deployer 1.6.0 ===> Deployer doctor: Checks your environment, and reports a diagnose. uname: Linux vvaks-cb 3.10.0-327.18.2.el7.x86_64 #1 SMP Thu May 12 11:03:55 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux local version:1.6.0 latest release:1.6.0 docker images: hortonworks/haveged:1.1.0 hortonworks/socat:1.0.0 hortonworks/cloudbreak-uaa-db:v3.6.0 hortonworks/cloudbreak-uaa:3.6.0 hortonworks/cloudbreak-server-db:1.2.0 hortonworks/cloudbreak:1.6.0 hortonworks/cloudbreak-auth:1.6.0 hortonworks/cloudbreak-web:1.6.0 hortonworks/cloudbreak-autoscale-db:1.2.0 hortonworks/cloudbreak-autoscale:1.6.0 docker command exists: OK [DEBUG] versionstr=Docker version 1.9.1, build a34a1d5 [DEBUG] fullver=1.9.1 [DEBUG] numver=191 docker client version: 1.9.1 [DEBUG] serverVer=1.9.1 docker client version: 1.9.1 ping 8.8.8.8 on host: OK ping github.com on host: OK ping 8.8.8.8 in container: OK ping github.com in container: OK [DEBUG] nameservers on host: 10.42.1.20 10.42.1.21 [DEBUG] bridge=172.17.0.1 [DEBUG] DOCKER_CONSUL_OPTIONS= -recursor 10.42.1.20 -recursor 10.42.1.21 [DEBUG] Generating docker-compose yaml: /root/cloudbreak-deployment/.deps/tmp/docker-compose-delme.yml ... [DEBUG] docker-compose.yml already exist, and generate wouldn't change it. [DEBUG] Generating Identity server config: /root/cloudbreak-deployment/.deps/tmp/uaa-delme.yml ... [DEBUG] uaa.yml exists and generate wouldn't change it [DEBUG] kill all exited container labeled as: cbreak.sidekick

Listing of /var/log directory:

[root@vvaks-cb cloudbreak-deployment]# ls /var/log/ anaconda boot.log chrony cloud-init-output.log dmesg grubby maillog ntpstats secure tallylog wtmp audit btmp cloud-init.log cron dmesg.old lastlog messages ppp spooler tuned yum.log

Created 10-20-2016 02:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

The /var/log/recipes folder should exist on the node where Ambari is installed, so please SSH into there to see. If you define a custom recipe please make sure your script logs somewhere and check if it can run successfully.

Created 10-20-2016 05:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just took another pass at it. The /var/log/recipes folder on the Ambari node of that cluster is empty. However, I noticed the /var/log/salt directory and took a look at /var/log/salt/master. Here is the output:

2016-10-20 16:46:51,190 [salt.utils.process ][DEBUG ][3610] Started 'salt.loaded.int.netapi.rest_cherrypy.start' with pid 2406

2016-10-20 16:46:51,193 [salt.utils.event ][DEBUG ][2406] MasterEvent PUB socket URI: ipc:///var/run/salt/master/master_event_pub.ipc

2016-10-20 16:46:51,193 [salt.utils.event ][DEBUG ][2406] MasterEvent PULL socket URI: ipc:///var/run/salt/master/master_event_pull.ipc

2016-10-20 16:46:51,194 [salt.utils.event ][DEBUG ][2406] Sending event - data = {'_stamp': '2016-10-20T16:46:51.194082'}

2016-10-20 16:46:51,208 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Listening for SIGHUP.

2016-10-20 16:46:51,208 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Listening for SIGTERM.

2016-10-20 16:46:51,208 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Listening for SIGUSR1.

2016-10-20 16:46:51,208 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Bus STARTING

2016-10-20 16:46:51,209 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Started monitor thread '_TimeoutMonitor'.

2016-10-20 16:46:51,210 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:51] ENGINE Started monitor thread 'Autoreloader'.

2016-10-20 16:46:56,251 [cherrypy.error ][ERROR ][2406] [20/Oct/2016:16:46:56] ENGINE Error in 'start' listener <bound method Server.start of <cherrypy._cpserver.Server object at 0x2ee7890>>

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 197, in publish

output.append(listener(*args, **kwargs))

File "/usr/lib/python2.6/site-packages/cherrypy/_cpserver.py", line 151, in start

ServerAdapter.start(self)

File "/usr/lib/python2.6/site-packages/cherrypy/process/servers.py", line 167, in start

wait_for_free_port(*self.bind_addr)

File "/usr/lib/python2.6/site-packages/cherrypy/process/servers.py", line 410, in wait_for_free_port

raise IOError("Port %r not free on %r" % (port, host))

IOError: Port 3080 not free on '0.0.0.0'

2016-10-20 16:46:56,251 [cherrypy.error ][ERROR ][2406] [20/Oct/2016:16:46:56] ENGINE Shutting down due to error in start listener:

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 235, in start

self.publish('start')

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 215, in publish

raise exc

ChannelFailures: IOError("Port 3080 not free on '0.0.0.0'",)

2016-10-20 16:46:56,252 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Bus STOPPING

2016-10-20 16:46:56,252 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE HTTP Server cherrypy._cpwsgi_server.CPWSGIServer(('0.0.0.0', 3080)) already shut down

2016-10-20 16:46:56,253 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Stopped thread '_TimeoutMonitor'.

2016-10-20 16:46:56,253 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Stopped thread 'Autoreloader'.

2016-10-20 16:46:56,254 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Bus STOPPED

2016-10-20 16:46:56,254 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Bus EXITING

2016-10-20 16:46:56,255 [cherrypy.error ][INFO ][2406] [20/Oct/2016:16:46:56] ENGINE Bus EXITED

2016-10-20 16:46:56,257 [salt.utils.process ][INFO ][3610] Process <function start at 0x2d54410> (2406) died with exit status None, restarting...

This is the process listening on 3080

root 9513 3610 0 16:52 ? 00:00:02 /usr/bin/python2.6 /usr/bin/salt-api -d

I tried killing this process but it just get recreated. I think this is the problem, just not sure how to address it.

2016-10-20 16:46:56,251 [cherrypy.error ][ERROR ][2406] [20/Oct/2016:16:46:56] ENGINE Error in 'start' listener <bound method Server.start of <cherrypy._cpserver.Server object at 0x2ee7890>>

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 197, in publish

output.append(listener(*args, **kwargs))

File "/usr/lib/python2.6/site-packages/cherrypy/_cpserver.py", line 151, in start

ServerAdapter.start(self)

File "/usr/lib/python2.6/site-packages/cherrypy/process/servers.py", line 167, in start

wait_for_free_port(*self.bind_addr)

File "/usr/lib/python2.6/site-packages/cherrypy/process/servers.py", line 410, in wait_for_free_port

raise IOError("Port %r not free on %r" % (port, host))

IOError: Port 3080 not free on '0.0.0.0'

2016-10-20 16:46:56,251 [cherrypy.error ][ERROR ][2406] [20/Oct/2016:16:46:56] ENGINE Shutting down due to error in start listener:

Traceback (most recent call last):

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 235, in start

self.publish('start')

File "/usr/lib/python2.6/site-packages/cherrypy/process/wspbus.py", line 215, in publish

raise exc

ChannelFailures: IOError("Port 3080 not free on '0.0.0.0'",)

Created 10-20-2016 09:07 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Vadim Vaks, Is this error reproducible? Or was a single failure? If it is reproducible then could you send us the blueprint, please?

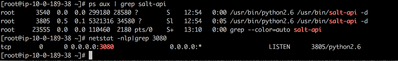

The salt-api should use the port 3080, so that seems correct. Could you check that how many instances are running of the salt-api with: ps aux | grep salt-api

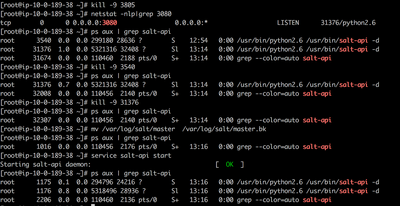

You can try to kill it/them with kill -9. If you kill it with -9 then it won't be restarted. You can also try to stop it with service salt-api stop.

After you have successfully stop the salt-api please move the /var/log/salt/master to e.g. /var/log/salt/master.bak and start the salt-api with service salt-api start and send us the content of the new /var/log/salt/master which will contain only the boot log of salt-api.

Attila

Created on 10-21-2016 01:33 PM - edited 08-19-2019 01:20 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The blueprint is attached.

Screenshot of ps aux | grep salt-api and netstat to show the one listening on 3080. (during Blueprint deployment)

Screenshot of steps taken post completion of HDP Cluster provisioning vi Bluepring

The Cloudbreak log continues to show the following:

cloudbreak_1 | 2016-10-21 13:19:07,602 [containerBootstrapBuilderExecutor-19] call:68 WARN c.s.c.o.OrchestratorBootstrapRunner - [owner:46c1f915-0f53-4567-b233-de54ed271274] [type:STACK] [id:18] [name:credit-fraud-demo] Orchestrator component SaltJobIdTracker failed to start, retrying [43/90] Elapsed time: 773 ms; Reason: Job: JobId{jobId='20161021131136120098'} is running currently, waiting for next polling attempt, additional info: SaltJobIdTracker{saltJobRunner=HighStateChecker{BaseSaltJobRunner{target=[10.0.189.38], jid=JobId{jobId='20161021131136120098'}, jobState=IN_PROGRESS}}}

salt master log after moving /var/log/salt/master to /var/log/salt/master.bak and capturing the new /var/log/salt/master

Cloudbreak log after 90 attempts and failures

cloudbreak_1 | com.sequenceiq.cloudbreak.core.CloudbreakException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161021131136120098'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.OrchestratorRecipeExecutor.postInstall(OrchestratorRecipeExecutor.java:80)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.RecipeEngine.executePostInstall(RecipeEngine.java:94)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.AmbariClusterConnector.buildAmbariCluster(AmbariClusterConnector.java:223)

cloudbreak_1 | at com.sequenceiq.cloudbreak.core.cluster.AmbariClusterCreationService.buildAmbariCluster(AmbariClusterCreationService.java:27)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:35)

cloudbreak_1 | at com.sequenceiq.cloudbreak.reactor.handler.cluster.InstallClusterHandler.accept(InstallClusterHandler.java:18)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:317)

cloudbreak_1 | at reactor.bus.EventBus$3.accept(EventBus.java:310)

cloudbreak_1 | at reactor.bus.routing.ConsumerFilteringRouter.route(ConsumerFilteringRouter.java:72)

cloudbreak_1 | at reactor.bus.routing.TraceableDelegatingRouter.route(TraceableDelegatingRouter.java:51)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:591)

cloudbreak_1 | at reactor.bus.EventBus.accept(EventBus.java:63)

cloudbreak_1 | at reactor.core.dispatch.AbstractLifecycleDispatcher.route(AbstractLifecycleDispatcher.java:160)

cloudbreak_1 | at reactor.core.dispatch.MultiThreadDispatcher$MultiThreadTask.run(MultiThreadDispatcher.java:74)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

cloudbreak_1 | at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

cloudbreak_1 | at java.lang.Thread.run(Thread.java:745)

cloudbreak_1 | Caused by: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161021131136120098'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.executeRecipes(SaltOrchestrator.java:301)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.postInstallRecipes(SaltOrchestrator.java:233)

cloudbreak_1 | at com.sequenceiq.cloudbreak.service.cluster.flow.OrchestratorRecipeExecutor.postInstall(OrchestratorRecipeExecutor.java:77)

cloudbreak_1 | ... 16 common frames omitted

cloudbreak_1 | Caused by: java.util.concurrent.ExecutionException: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161021131136120098'} is running currently, waiting for next polling attempt

cloudbreak_1 | at java.util.concurrent.FutureTask.report(FutureTask.java:122)

cloudbreak_1 | at java.util.concurrent.FutureTask.get(FutureTask.java:192)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.runNewService(SaltOrchestrator.java:274)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.SaltOrchestrator.executeRecipes(SaltOrchestrator.java:294)

cloudbreak_1 | ... 18 common frames omitted

cloudbreak_1 | Caused by: com.sequenceiq.cloudbreak.orchestrator.exception.CloudbreakOrchestratorFailedException: Job: JobId{jobId='20161021131136120098'} is running currently, waiting for next polling attempt

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.salt.poller.SaltJobIdTracker.call(SaltJobIdTracker.java:54)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:60)

cloudbreak_1 | at com.sequenceiq.cloudbreak.orchestrator.OrchestratorBootstrapRunner.call(OrchestratorBootstrapRunner.java:14)

cloudbreak_1 | at java.util.concurrent.FutureTask.run(FutureTask.java:266)

cloudbreak_1 | ... 3 common frames omitted

cloudbreak_1 | 2016-10-21 13:27:36,531 [reactorDispatcher-98] accept:70 DEBUG c.s.c.c.f.F

As before, the Ambari cluster is up and fully usable but shows as failed in Cloudbreak console

Created 10-21-2016 04:24 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks. The master.txt looks error free. I am trying to reproduce the issue with the attached blueprint.

Attila

Created 10-21-2016 07:09 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just want to chime in and say I'm seeing the same issue. I am using a default blueprint and no recipe.

Created 10-21-2016 11:01 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think I have found the issue. The debug: True is set in /etc/salt/master.d/custom.conf

.... rest_cherrypy: .... port: 3080 .... debug: True

Because of this flag the salt-api prints out more useful info for debugging, but it has the side effect that it will reload itself when the underlying code is changed: https://docs.saltstack.com/en/latest/ref/netapi/all/salt.netapi.rest_cherrypy.html

I saw the "Restarting because ..." messages in the Salt master log while the Ambari was installing the packages for HDP (e.g. in my case the email/mime/__init__.py was touched and triggered the restart, which was not handled very gracefully by cherrypy and caused the port collision.

2016-10-21 21:25:30,016 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Restarting because /usr/lib64/python2.6/email/mime/__init__.py changed.

2016-10-21 21:25:30,016 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Stopped thread 'Autoreloader'.

2016-10-21 21:25:30,017 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE Bus STOPPING

2016-10-21 21:25:30,027 [cherrypy.error ][INFO ][3454] [21/Oct/2016:21:25:30] ENGINE HTTP Server cherrypy._cpwsgi_server.CPWSGIServer(('0.0.0.0', 3080)) shut down

2

I was thinking about a workaround, but I was not able to figure out any what you can apply. I will try to make a fix for it.

Attila