Cloudera Manager Failed to format namenode when add cluster

Labels: Cloudera Manager

Created 07-17-2019 10:22 PM


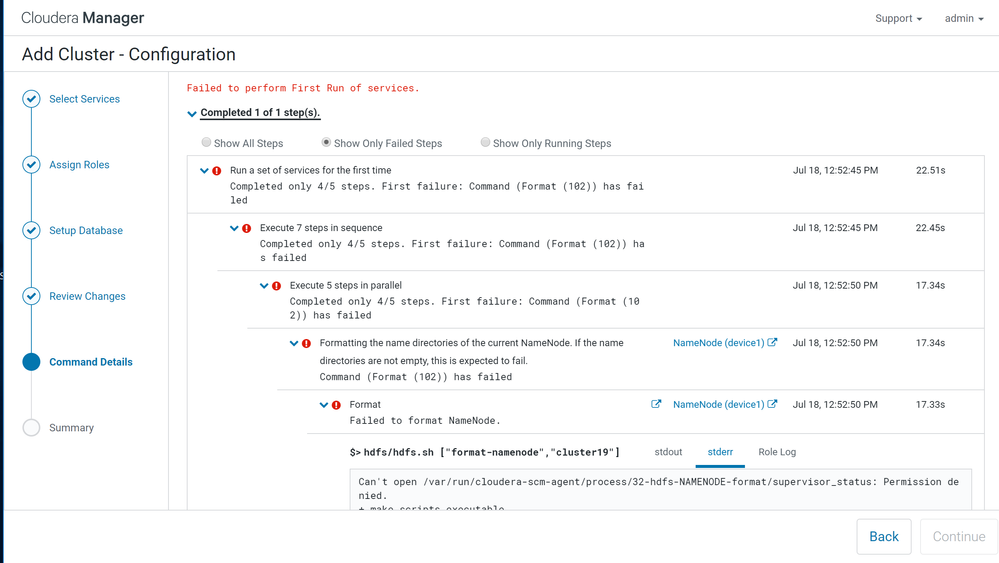

I want to add a new server to the cluster, but the last step failed.

My NameNode is device1, and I was trying to add device2 to the cluster.

The following information was printed:

Failed to perform First Run of services.

Completed 1 of 1 step(s).


Run a set of services for the first time

Completed only 4/5 steps. First failure: Command (Format (102)) has failed

Jul 18, 12:52:45 PM 22.51s

Execute 7 steps in sequence

Completed only 4/5 steps. First failure: Command (Format (102)) has failed

Jul 18, 12:52:45 PM 22.45s

Execute 5 steps in parallel

Completed only 4/5 steps. First failure: Command (Format (102)) has failed

Jul 18, 12:52:50 PM 17.34s

Formatting the name directories of the current NameNode. If the name directories are not empty, this is expected to fail.

Command (Format (102)) has failed

NameNode (device1)

Jul 18, 12:52:50 PM 17.34s

Format

Failed to format NameNode.

NameNode (device1)

Jul 18, 12:52:50 PM 17.33s

$> hdfs/hdfs.sh ["format-namenode","cluster19"]

stdout

stderr

Role Log

Can't open /var/run/cloudera-scm-agent/process/32-hdfs-NAMENODE-format/supervisor_status: Permission denied.

+ make_scripts_executable

+ find /var/run/cloudera-scm-agent/process/32-hdfs-NAMENODE-format -regex '.*\.\(py\|sh\)$' -exec chmod u+x '{}' ';'

+ '[' DATANODE_MAX_LOCKED_MEMORY '!=' '' ']'

+ ulimit -l

+ export HADOOP_IDENT_STRING=hdfs

+ HADOOP_IDENT_STRING=hdfs

+ '[' -n '' ']'

+ '[' mkdir '!=' format-namenode ']'

+ acquire_kerberos_tgt hdfs.keytab

+ '[' -z hdfs.keytab ']'

+ KERBEROS_PRINCIPAL=

+ '[' '!' -z '' ']'

+ '[' -n '' ']'

+ '[' validate-writable-empty-dirs = format-namenode ']'

+ '[' file-operation = format-namenode ']'

+ '[' bootstrap = format-namenode ']'

+ '[' failover = format-namenode ']'

+ '[' transition-to-active = format-namenode ']'

+ '[' initializeSharedEdits = format-namenode ']'

+ '[' initialize-znode = format-namenode ']'

+ '[' format-namenode = format-namenode ']'

+ '[' -z /dfs/nn ']'

+ for dfsdir in $DFS_STORAGE_DIRS

+ '[' -e /dfs/nn ']'

+ '[' '!' -d /dfs/nn ']'

+ CLUSTER_ARGS=

+ '[' 2 -eq 2 ']'

+ CLUSTER_ARGS='-clusterId cluster19'

+ '[' 3 = 6 ']'

+ '[' -3 = 6 ']'

+ exec /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs/bin/hdfs --config /var/run/cloudera-scm-agent/process/32-hdfs-NAMENODE-format namenode -format -clusterId cluster19 -nonInteractive

WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.

WARNING: HADOOP_NAMENODE_OPTS has been replaced by HDFS_NAMENODE_OPTS. Using value of HADOOP_NAMENODE_OPTS.

Running in non-interactive mode, and data appears to exist in Storage Directory /dfs/nn. Not formatting.

Created 07-18-2019 01:19 AM


This is the NameNode log; it does not show any error:

STARTUP_MSG: build = http://github.com/cloudera/hadoop -r d1dff3d3a126da44e3458bbf148c3bc16ff55bd8; compiled by 'jenkins' on 2019-03-14T06:39Z

STARTUP_MSG: java = 1.8.0_162

************************************************************/

2019-07-18 15:52:05,190 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2019-07-18 15:52:05,234 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: createNameNode [-format, -clusterId, cluster19, -nonInteractive]

2019-07-18 15:52:05,485 INFO org.apache.hadoop.hdfs.server.namenode.FSEditLog: Edit logging is async:true

2019-07-18 15:52:05,494 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: KeyProvider: null

2019-07-18 15:52:05,494 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsLock is fair: true

2019-07-18 15:52:05,495 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2019-07-18 15:52:05,498 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsOwner = hdfs (auth:SIMPLE)

2019-07-18 15:52:05,499 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: supergroup = supergroup

2019-07-18 15:52:05,499 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isPermissionEnabled = true

2019-07-18 15:52:05,499 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: HA Enabled: false

2019-07-18 15:52:05,522 INFO org.apache.hadoop.hdfs.server.common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2019-07-18 15:52:05,532 WARN org.apache.hadoop.hdfs.util.CombinedHostsFileReader: /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format/dfs_all_hosts.txt has invalid JSON format.Try the old format without top-level token defined.

2019-07-18 15:52:05,573 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2019-07-18 15:52:05,573 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2019-07-18 15:52:05,575 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2019-07-18 15:52:05,576 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: The block deletion will start around 2019 Jul 18 15:52:05

2019-07-18 15:52:05,577 INFO org.apache.hadoop.util.GSet: Computing capacity for map BlocksMap

2019-07-18 15:52:05,577 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2019-07-18 15:52:05,578 INFO org.apache.hadoop.util.GSet: 2.0% max memory 1.8 GB = 36.2 MB

2019-07-18 15:52:05,579 INFO org.apache.hadoop.util.GSet: capacity = 2^22 = 4194304 entries

2019-07-18 15:52:05,598 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.block.access.token.enable = false

2019-07-18 15:52:05,601 INFO org.apache.hadoop.conf.Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2019-07-18 15:52:05,601 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 1

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: defaultReplication = 3

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplication = 512

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: minReplication = 1

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplicationStreams = 20

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: encryptDataTransfer = false

2019-07-18 15:52:05,602 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2019-07-18 15:52:05,618 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

2019-07-18 15:52:05,627 INFO org.apache.hadoop.util.GSet: Computing capacity for map INodeMap

2019-07-18 15:52:05,627 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2019-07-18 15:52:05,627 INFO org.apache.hadoop.util.GSet: 1.0% max memory 1.8 GB = 18.1 MB

2019-07-18 15:52:05,627 INFO org.apache.hadoop.util.GSet: capacity = 2^21 = 2097152 entries

2019-07-18 15:52:05,630 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: ACLs enabled? false

2019-07-18 15:52:05,630 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: POSIX ACL inheritance enabled? true

2019-07-18 15:52:05,630 INFO org.apache.hadoop.hdfs.server.namenode.FSDirectory: XAttrs enabled? true

2019-07-18 15:52:05,630 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Caching file names occurring more than 10 times

2019-07-18 15:52:05,634 INFO org.apache.hadoop.hdfs.server.namenode.snapshot.SnapshotManager: Loaded config captureOpenFiles: true, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true

2019-07-18 15:52:05,637 INFO org.apache.hadoop.util.GSet: Computing capacity for map cachedBlocks

2019-07-18 15:52:05,637 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2019-07-18 15:52:05,637 INFO org.apache.hadoop.util.GSet: 0.25% max memory 1.8 GB = 4.5 MB

2019-07-18 15:52:05,637 INFO org.apache.hadoop.util.GSet: capacity = 2^19 = 524288 entries

2019-07-18 15:52:05,643 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2019-07-18 15:52:05,643 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2019-07-18 15:52:05,643 INFO org.apache.hadoop.hdfs.server.namenode.top.metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2019-07-18 15:52:05,646 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache on namenode is enabled

2019-07-18 15:52:05,646 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2019-07-18 15:52:05,648 INFO org.apache.hadoop.util.GSet: Computing capacity for map NameNodeRetryCache

2019-07-18 15:52:05,648 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2019-07-18 15:52:05,648 INFO org.apache.hadoop.util.GSet: 0.029999999329447746% max memory 1.8 GB = 555.9 KB

2019-07-18 15:52:05,648 INFO org.apache.hadoop.util.GSet: capacity = 2^16 = 65536 entries

2019-07-18 15:52:05,666 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1: ExitException

2019-07-18 15:52:05,667 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at device1/192.168.0.104

************************************************************/

Created 07-18-2019 01:23 AM


This is the stderr.log

[18/Jul/2019 15:52:04 +0000] 2807 MainThread redactor INFO Started launcher: /opt/cloudera/cm-agent/service/hdfs/hdfs.sh format-namenode cluster19

[18/Jul/2019 15:52:04 +0000] 2807 MainThread redactor INFO Re-exec watcher: /opt/cloudera/cm-agent/bin/cm proc_watcher 2811

[18/Jul/2019 15:52:04 +0000] 2812 MainThread redactor INFO Re-exec redactor: /opt/cloudera/cm-agent/bin/cm redactor --fds 3 5

[18/Jul/2019 15:52:04 +0000] 2812 MainThread redactor INFO Started redactor

Thu Jul 18 15:52:04 CST 2019

+ source_parcel_environment

+ '[' '!' -z /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/meta/cdh_env.sh ']'

+ OLD_IFS='

'

+ IFS=:

+ SCRIPT_ARRAY=($SCM_DEFINES_SCRIPTS)

+ DIRNAME_ARRAY=($PARCEL_DIRNAMES)

+ IFS='

'

+ COUNT=1

++ seq 1 1

+ for i in `seq 1 $COUNT`

+ SCRIPT=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/meta/cdh_env.sh

+ PARCEL_DIRNAME=CDH-6.2.0-1.cdh6.2.0.p0.967373

+ . /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/meta/cdh_env.sh

++ CDH_DIRNAME=CDH-6.2.0-1.cdh6.2.0.p0.967373

++ export CDH_HADOOP_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ CDH_HADOOP_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ export CDH_MR1_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-0.20-mapreduce

++ CDH_MR1_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-0.20-mapreduce

++ export CDH_HDFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs

++ CDH_HDFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs

++ export CDH_HTTPFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-httpfs

++ CDH_HTTPFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-httpfs

++ export CDH_MR2_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-mapreduce

++ CDH_MR2_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-mapreduce

++ export CDH_YARN_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-yarn

++ CDH_YARN_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-yarn

++ export CDH_HBASE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hbase

++ CDH_HBASE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hbase

++ export CDH_ZOOKEEPER_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/zookeeper

++ CDH_ZOOKEEPER_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/zookeeper

++ export CDH_HIVE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive

++ CDH_HIVE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive

++ export CDH_HUE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hue

++ CDH_HUE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hue

++ export CDH_OOZIE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/oozie

++ CDH_OOZIE_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/oozie

++ export CDH_HUE_PLUGINS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ CDH_HUE_PLUGINS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ export CDH_FLUME_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/flume-ng

++ CDH_FLUME_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/flume-ng

++ export CDH_PIG_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/pig

++ CDH_PIG_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/pig

++ export CDH_HCAT_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive-hcatalog

++ CDH_HCAT_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hive-hcatalog

++ export CDH_SENTRY_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/sentry

++ CDH_SENTRY_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/sentry

++ export JSVC_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils

++ JSVC_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils

++ export CDH_HADOOP_BIN=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/bin/hadoop

++ CDH_HADOOP_BIN=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/bin/hadoop

++ export CDH_IMPALA_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/impala

++ CDH_IMPALA_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/impala

++ export CDH_SOLR_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/solr

++ CDH_SOLR_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/solr

++ export CDH_HBASE_INDEXER_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hbase-solr

++ CDH_HBASE_INDEXER_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hbase-solr

++ export SEARCH_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/search

++ SEARCH_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/search

++ export CDH_SPARK_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/spark

++ CDH_SPARK_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/spark

++ export WEBHCAT_DEFAULT_XML=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/etc/hive-webhcat/conf.dist/webhcat-default.xml

++ WEBHCAT_DEFAULT_XML=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/etc/hive-webhcat/conf.dist/webhcat-default.xml

++ export CDH_KMS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-kms

++ CDH_KMS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-kms

++ export CDH_PARQUET_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/parquet

++ CDH_PARQUET_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/parquet

++ export CDH_AVRO_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/avro

++ CDH_AVRO_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/avro

++ export CDH_KAFKA_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/kafka

++ CDH_KAFKA_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/kafka

++ export CDH_KUDU_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/kudu

++ CDH_KUDU_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/kudu

+ locate_cdh_java_home

+ '[' -z '' ']'

+ '[' -z /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils ']'

+ local BIGTOP_DETECT_JAVAHOME=

+ for candidate in "${JSVC_HOME}" "${JSVC_HOME}/.." "/usr/lib/bigtop-utils" "/usr/libexec"

+ '[' -e /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils/bigtop-detect-javahome ']'

+ BIGTOP_DETECT_JAVAHOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils/bigtop-detect-javahome

+ break

+ '[' -z /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils/bigtop-detect-javahome ']'

+ . /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/bigtop-utils/bigtop-detect-javahome

++ BIGTOP_DEFAULTS_DIR=/etc/default

++ '[' -n /etc/default -a -r /etc/default/bigtop-utils ']'

++ JAVA11_HOME_CANDIDATES=('/usr/java/jdk-11' '/usr/lib/jvm/jdk-11' '/usr/lib/jvm/java-11-oracle')

++ OPENJAVA11_HOME_CANDIDATES=('/usr/lib/jvm/jdk-11' '/usr/lib64/jvm/jdk-11')

++ JAVA8_HOME_CANDIDATES=('/usr/java/jdk1.8' '/usr/java/jre1.8' '/usr/lib/jvm/j2sdk1.8-oracle' '/usr/lib/jvm/j2sdk1.8-oracle/jre' '/usr/lib/jvm/java-8-oracle')

++ OPENJAVA8_HOME_CANDIDATES=('/usr/lib/jvm/java-1.8.0-openjdk' '/usr/lib/jvm/java-8-openjdk' '/usr/lib64/jvm/java-1.8.0-openjdk' '/usr/lib64/jvm/java-8-openjdk')

++ MISCJAVA_HOME_CANDIDATES=('/Library/Java/Home' '/usr/java/default' '/usr/lib/jvm/default-java' '/usr/lib/jvm/java-openjdk' '/usr/lib/jvm/jre-openjdk')

++ case ${BIGTOP_JAVA_MAJOR} in

++ JAVA_HOME_CANDIDATES=(${JAVA8_HOME_CANDIDATES[@]} ${MISCJAVA_HOME_CANDIDATES[@]} ${OPENJAVA8_HOME_CANDIDATES[@]} ${JAVA11_HOME_CANDIDATES[@]} ${OPENJAVA11_HOME_CANDIDATES[@]})

++ '[' -z '' ']'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/java/jdk1.8*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/java/jre1.8*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/j2sdk1.8-oracle*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/j2sdk1.8-oracle/jre*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/java-8-oracle*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/Library/Java/Home*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/java/default*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/default-java*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/java-openjdk*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd '/usr/lib/jvm/jre-openjdk*'

++ for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}

+++ ls -rvd /usr/lib/jvm/java-1.8.0-openjdk-amd64

++ for candidate in `ls -rvd ${candidate_regex}* 2>/dev/null`

++ '[' -e /usr/lib/jvm/java-1.8.0-openjdk-amd64/bin/java ']'

++ export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

++ JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

++ break 2

+ get_java_major_version JAVA_MAJOR

+ '[' -z /usr/lib/jvm/java-1.8.0-openjdk-amd64/bin/java ']'

++ /usr/lib/jvm/java-1.8.0-openjdk-amd64/bin/java -version

+ local 'VERSION_STRING=openjdk version "1.8.0_162"

OpenJDK Runtime Environment (build 1.8.0_162-8u162-b12-1-b12)

OpenJDK 64-Bit Server VM (build 25.162-b12, mixed mode)'

+ local 'RE_JAVA=[java|openjdk][[:space:]]version[[:space:]]\"1\.([0-9][0-9]*)\.?+'

+ [[ openjdk version "1.8.0_162"

OpenJDK Runtime Environment (build 1.8.0_162-8u162-b12-1-b12)

OpenJDK 64-Bit Server VM (build 25.162-b12, mixed mode) =~ [java|openjdk][[:space:]]version[[:space:]]\"1\.([0-9][0-9]*)\.?+ ]]

+ eval JAVA_MAJOR=8

++ JAVA_MAJOR=8

+ '[' 8 -lt 8 ']'

+ verify_java_home

+ '[' -z /usr/lib/jvm/java-1.8.0-openjdk-amd64 ']'

+ echo JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-amd64

+ . /opt/cloudera/cm-agent/service/common/cdh-default-hadoop

++ [[ -z 6 ]]

++ '[' 6 = 3 ']'

++ '[' 6 = -3 ']'

++ '[' 6 -ge 4 ']'

++ export HADOOP_HOME_WARN_SUPPRESS=true

++ HADOOP_HOME_WARN_SUPPRESS=true

++ export HADOOP_PREFIX=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ HADOOP_PREFIX=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ export HADOOP_LIBEXEC_DIR=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/libexec

++ HADOOP_LIBEXEC_DIR=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop/libexec

++ export HADOOP_CONF_DIR=/var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format

++ HADOOP_CONF_DIR=/var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format

++ export HADOOP_COMMON_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ HADOOP_COMMON_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop

++ export HADOOP_HDFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs

++ HADOOP_HDFS_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs

++ export HADOOP_MAPRED_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-mapreduce

++ HADOOP_MAPRED_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-mapreduce

++ '[' 6 = 4 ']'

++ '[' 6 -ge 5 ']'

++ export HADOOP_YARN_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-yarn

++ HADOOP_YARN_HOME=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-yarn

++ replace_pid -Xms1961885696 -Xmx1961885696 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError '-XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-04c87db2a470ce2ac21ae3a8547c2609_pid{{PID}}.hprof' -XX:OnOutOfMemoryError=/opt/cloudera/cm-agent/service/common/killparent.sh

++ sed 's#{{PID}}#2811#g'

++ echo -Xms1961885696 -Xmx1961885696 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError '-XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-04c87db2a470ce2ac21ae3a8547c2609_pid{{PID}}.hprof' -XX:OnOutOfMemoryError=/opt/cloudera/cm-agent/service/common/killparent.sh

+ export 'HADOOP_NAMENODE_OPTS=-Xms1961885696 -Xmx1961885696 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-04c87db2a470ce2ac21ae3a8547c2609_pid2811.hprof -XX:OnOutOfMemoryError=/opt/cloudera/cm-agent/service/common/killparent.sh'

+ HADOOP_NAMENODE_OPTS='-Xms1961885696 -Xmx1961885696 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-04c87db2a470ce2ac21ae3a8547c2609_pid2811.hprof -XX:OnOutOfMemoryError=/opt/cloudera/cm-agent/service/common/killparent.sh'

++ replace_pid

++ echo

++ sed 's#{{PID}}#2811#g'

+ export HADOOP_DATANODE_OPTS=

+ HADOOP_DATANODE_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#2811#g'

+ export HADOOP_SECONDARYNAMENODE_OPTS=

+ HADOOP_SECONDARYNAMENODE_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#2811#g'

+ export HADOOP_NFS3_OPTS=

+ HADOOP_NFS3_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#2811#g'

+ export HADOOP_JOURNALNODE_OPTS=

+ HADOOP_JOURNALNODE_OPTS=

+ '[' 6 -ge 4 ']'

+ HDFS_BIN=/opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs/bin/hdfs

+ export 'HADOOP_OPTS=-Djava.net.preferIPv4Stack=true '

+ HADOOP_OPTS='-Djava.net.preferIPv4Stack=true '

+ echo 'using /usr/lib/jvm/java-1.8.0-openjdk-amd64 as JAVA_HOME'

+ echo 'using 6 as CDH_VERSION'

+ echo 'using /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format as CONF_DIR'

+ echo 'using as SECURE_USER'

+ echo 'using as SECURE_GROUP'

+ set_hadoop_classpath

+ set_classpath_in_var HADOOP_CLASSPATH

+ '[' -z HADOOP_CLASSPATH ']'

+ [[ -n /opt/cloudera/cm ]]

++ find /opt/cloudera/cm/lib/plugins -maxdepth 1 -name '*.jar'

++ tr '\n' :

+ ADD_TO_CP=/opt/cloudera/cm/lib/plugins/tt-instrumentation-6.2.0.jar:/opt/cloudera/cm/lib/plugins/event-publish-6.2.0-shaded.jar:

+ [[ -n navigator/cdh6 ]]

+ for DIR in $CM_ADD_TO_CP_DIRS

++ find /opt/cloudera/cm/lib/plugins/navigator/cdh6 -maxdepth 1 -name '*.jar'

++ tr '\n' :

+ PLUGIN=/opt/cloudera/cm/lib/plugins/navigator/cdh6/audit-plugin-cdh6-6.2.0-shaded.jar:

+ ADD_TO_CP=/opt/cloudera/cm/lib/plugins/tt-instrumentation-6.2.0.jar:/opt/cloudera/cm/lib/plugins/event-publish-6.2.0-shaded.jar:/opt/cloudera/cm/lib/plugins/navigator/cdh6/audit-plugin-cdh6-6.2.0-shaded.jar:

+ eval 'OLD_VALUE=$HADOOP_CLASSPATH'

++ OLD_VALUE=

+ NEW_VALUE=/opt/cloudera/cm/lib/plugins/tt-instrumentation-6.2.0.jar:/opt/cloudera/cm/lib/plugins/event-publish-6.2.0-shaded.jar:/opt/cloudera/cm/lib/plugins/navigator/cdh6/audit-plugin-cdh6-6.2.0-shaded.jar:

+ export HADOOP_CLASSPATH=/opt/cloudera/cm/lib/plugins/tt-instrumentation-6.2.0.jar:/opt/cloudera/cm/lib/plugins/event-publish-6.2.0-shaded.jar:/opt/cloudera/cm/lib/plugins/navigator/cdh6/audit-plugin-cdh6-6.2.0-shaded.jar

+ HADOOP_CLASSPATH=/opt/cloudera/cm/lib/plugins/tt-instrumentation-6.2.0.jar:/opt/cloudera/cm/lib/plugins/event-publish-6.2.0-shaded.jar:/opt/cloudera/cm/lib/plugins/navigator/cdh6/audit-plugin-cdh6-6.2.0-shaded.jar

+ set -x

+ replace_conf_dir

+ echo CONF_DIR=/var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format

+ echo CMF_CONF_DIR=

+ EXCLUDE_CMF_FILES=('cloudera-config.sh' 'hue.sh' 'impala.sh' 'sqoop.sh' 'supervisor.conf' 'config.zip' 'proc.json' '*.log' '*.keytab' '*jceks')

++ printf '! -name %s ' cloudera-config.sh hue.sh impala.sh sqoop.sh supervisor.conf config.zip proc.json '*.log' hdfs.keytab '*jceks'

+ find /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format -type f '!' -path '/var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format/logs/*' '!' -name cloudera-config.sh '!' -name hue.sh '!' -name impala.sh '!' -name sqoop.sh '!' -name supervisor.conf '!' -name config.zip '!' -name proc.json '!' -name '*.log' '!' -name hdfs.keytab '!' -name '*jceks' -exec perl -pi -e 's#\{\{CMF_CONF_DIR}}#/var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format#g' '{}' ';'

Can't open /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format/supervisor_status: Permission denied.

+ make_scripts_executable

+ find /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format -regex '.*\.\(py\|sh\)$' -exec chmod u+x '{}' ';'

+ '[' DATANODE_MAX_LOCKED_MEMORY '!=' '' ']'

+ ulimit -l

+ export HADOOP_IDENT_STRING=hdfs

+ HADOOP_IDENT_STRING=hdfs

+ '[' -n '' ']'

+ '[' mkdir '!=' format-namenode ']'

+ acquire_kerberos_tgt hdfs.keytab

+ '[' -z hdfs.keytab ']'

+ KERBEROS_PRINCIPAL=

+ '[' '!' -z '' ']'

+ '[' -n '' ']'

+ '[' validate-writable-empty-dirs = format-namenode ']'

+ '[' file-operation = format-namenode ']'

+ '[' bootstrap = format-namenode ']'

+ '[' failover = format-namenode ']'

+ '[' transition-to-active = format-namenode ']'

+ '[' initializeSharedEdits = format-namenode ']'

+ '[' initialize-znode = format-namenode ']'

+ '[' format-namenode = format-namenode ']'

+ '[' -z /dfs/nn ']'

+ for dfsdir in $DFS_STORAGE_DIRS

+ '[' -e /dfs/nn ']'

+ '[' '!' -d /dfs/nn ']'

+ CLUSTER_ARGS=

+ '[' 2 -eq 2 ']'

+ CLUSTER_ARGS='-clusterId cluster19'

+ '[' 3 = 6 ']'

+ '[' -3 = 6 ']'

+ exec /opt/cloudera/parcels/CDH-6.2.0-1.cdh6.2.0.p0.967373/lib/hadoop-hdfs/bin/hdfs --config /var/run/cloudera-scm-agent/process/40-hdfs-NAMENODE-format namenode -format -clusterId cluster19 -nonInteractive

WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.

WARNING: HADOOP_NAMENODE_OPTS has been replaced by HDFS_NAMENODE_OPTS. Using value of HADOOP_NAMENODE_OPTS.

Running in non-interactive mode, and data appears to exist in Storage Directory /dfs/nn. Not formatting.

Created 07-18-2019 02:17 AM


I solved it by cleaning out /dfs/nn and /dfs/dn. I had installed HDFS on this host once before, so data from that earlier installation still existed in those directories.
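For anyone who wants to confirm the same cause before changing anything, here is a minimal sketch that reports whether the directories from this thread already hold data, which is the condition behind the "data appears to exist in Storage Directory /dfs/nn. Not formatting." message above. The helper name `dir_state` is made up for illustration and is not part of Cloudera Manager or Hadoop. It assumes you run it as a user who can read the directories; an unreadable directory would be reported as empty.

```shell
#!/bin/sh
# dir_state is a hypothetical helper: it prints "missing", "empty", or
# "non-empty" for the directory passed as its first argument.
dir_state() {
  if [ ! -d "$1" ]; then
    echo "missing"
  elif [ -z "$(ls -A "$1" 2>/dev/null)" ]; then
    # ls -A lists dotfiles too, so hidden leftovers also count as data
    echo "empty"
  else
    echo "non-empty"
  fi
}

# Check the two storage directories mentioned in this thread.
for d in /dfs/nn /dfs/dn; do
  printf '%s: %s\n' "$d" "$(dir_state "$d")"
done
```

If /dfs/nn shows up as non-empty on a host that is supposed to be getting a fresh NameNode, the non-interactive format will probably refuse to run, as in the trace above.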

Created 07-02-2020 05:54 PM


How do I clear these directories? Can the files be deleted manually?

Please help.
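One cautious way to clear the directories by hand is to move the old contents aside rather than delete them, so they can be restored if they turn out to matter. The sketch below is only an illustration under several assumptions: the HDFS roles on the host are stopped, the data under /dfs/nn and /dfs/dn is left over from an earlier installation and is not needed (the NameNode directory holds the filesystem metadata, so clearing it on a live cluster loses the namespace), and `find` supports `-mindepth` and `-maxdepth` as the GNU and BSD versions do. The function name `backup_and_clear` and the backup location in the usage comment are hypothetical.

```shell
#!/bin/sh
# backup_and_clear is a hypothetical helper: it moves everything inside
# directory $1 into a timestamped folder under $2 and leaves $1 itself in
# place, so its existing owner and permissions are untouched.
backup_and_clear() {
  src="$1"
  backup_root="$2"
  if [ ! -d "$src" ]; then
    echo "skip: $src does not exist"
    return 0
  fi
  dest="$backup_root/$(basename "$src").$(date +%Y%m%d%H%M%S)"
  mkdir -p "$dest" || return 1
  # Move every top-level entry, including dotfiles, into the backup folder
  find "$src" -mindepth 1 -maxdepth 1 -exec mv {} "$dest"/ \; || return 1
  echo "moved contents of $src to $dest"
}

# Example usage (not executed here; /root/hdfs-backup is an assumed path):
#   backup_and_clear /dfs/nn /root/hdfs-backup
#   backup_and_clear /dfs/dn /root/hdfs-backup
```

After the directories are empty, retrying the failed step in Cloudera Manager should let the format run. Once the cluster is confirmed healthy, the backup folders can be deleted.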

Created 02-18-2023 07:38 AM


I'm also facing the same issue. Please tell me if anybody has a solution for this problem.