Configure Eclipse with hadoop plugin

Labels: Apache Hadoop

Created on 07-03-2015 11:21 AM - edited 09-16-2022 02:33 AM

Hi,

I've downloaded Cloudera CDH5. Now I'm configuring Eclipse (on my host machine) with the Hadoop plugin.

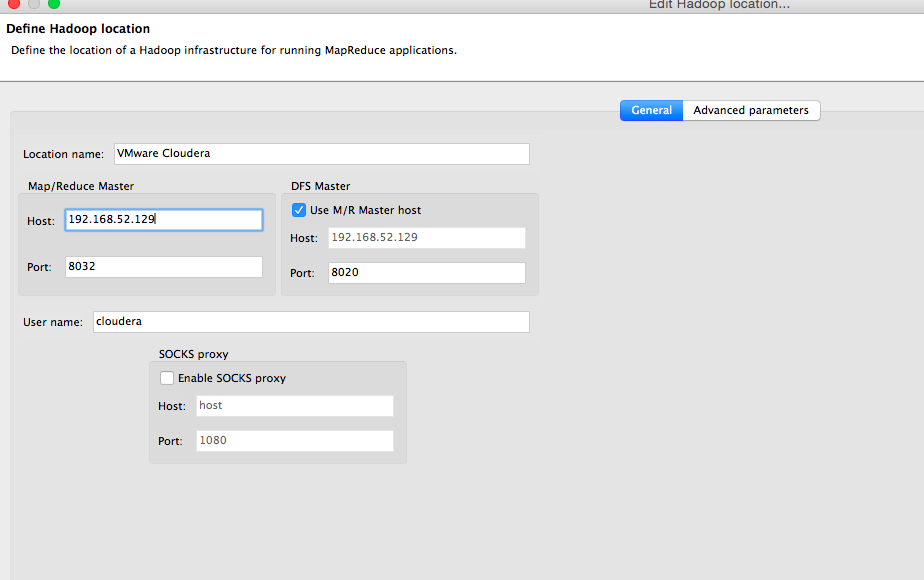

What parameters do I have to specify in "Define Hadoop Location -> Map/Reduce Master -> Port" and "Define Hadoop Location -> DFS Master -> Port"?

I also set Host to the IP of the Cloudera CDH5 VMware VM, and User name to cloudera.

Thanks

Created 07-03-2015 12:19 PM

8032, the YARN ResourceManager port. I'm not that familiar with the Hadoop plugin, but you should clarify whether you want to be using MapReduce from Hadoop 2.x (where YARN acts as the scheduler, and you submit MapReduce jobs through YARN's ports). When they say "Map/Reduce Master", to me that sounds like MR1, when MapReduce ran its own daemons. If it's MR1 you want to be using, you would actually want to use the JobTracker port, which is 8021.

Even though MR1 is supported in CDH 5, we recommend Hadoop 2 / YARN for production, and MR1 is not running in the QuickStart VM by default. Some work would be required to shut down the YARN daemons and start the MR1 daemons. Specifically: stop the hadoop-yarn-resourcemanager and hadoop-yarn-nodemanager services, uninstall the hadoop-conf-pseudo package, install the hadoop-0.20-conf-pseudo package instead, and then start the hadoop-0.20-mapreduce-jobtracker and hadoop-0.20-mapreduce-tasktracker services.
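For anyone who does want MR1, the switch described above could be scripted roughly as follows. This is only a sketch: the service and package names are the ones listed in this reply, but the use of `service` and `yum` is an assumption about the QuickStart VM (a CentOS-style system), and the helper names `switch_to_mr1_commands` and `run` are made up for illustration. It only prints the commands unless `dry_run=False` is passed.

```python
import subprocess

# Service and package names as given in the reply above.
YARN_SERVICES = ["hadoop-yarn-resourcemanager", "hadoop-yarn-nodemanager"]
MR1_SERVICES = [
    "hadoop-0.20-mapreduce-jobtracker",
    "hadoop-0.20-mapreduce-tasktracker",
]


def switch_to_mr1_commands():
    """Return the ordered commands for moving the VM from YARN to MR1.

    Assumption: the VM manages daemons with `service` and packages with `yum`.
    """
    commands = []
    for name in YARN_SERVICES:
        commands.append(["sudo", "service", name, "stop"])
    commands.append(["sudo", "yum", "remove", "-y", "hadoop-conf-pseudo"])
    commands.append(["sudo", "yum", "install", "-y", "hadoop-0.20-conf-pseudo"])
    for name in MR1_SERVICES:
        commands.append(["sudo", "service", name, "start"])
    return commands


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Example (inside the VM): run(switch_to_mr1_commands())
```

Reviewing the printed commands first is deliberate, since uninstalling hadoop-conf-pseudo changes the VM's configuration.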

>> I also specify Host with ip of Clouder CDH5 VMware ip

Make sure that you can ping that IP from your host machine. By default, the VM uses "NAT", which often means you can't connect from your host machine. You'll want to use a "bridged" network or something similar instead so that you can initiate connections from your host machine.
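A ping only shows that the VM is reachable, not that the Hadoop ports accept connections. A small check along these lines, run from the host machine, may help narrow that down. It is a sketch, not part of the plugin: `port_is_open` and `check_ports` are hypothetical helper names, ports 8032 and 8021 are the ones mentioned above, and 8020 for the NameNode (the "DFS Master" field) is an assumption based on the usual CDH default, since this thread does not state it.

```python
import socket

# 8032 and 8021 come from the reply above.
# 8020 (NameNode) is an assumption: the usual CDH default, not stated in this thread.
DEFAULT_PORTS = {
    "YARN ResourceManager": 8032,
    "MR1 JobTracker": 8021,
    "HDFS NameNode (assumed)": 8020,
}


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_ports(host, ports=None):
    """Map each service name to whether its port accepts connections."""
    ports = DEFAULT_PORTS if ports is None else ports
    return {name: port_is_open(host, port) for name, port in ports.items()}


# Example (from the host machine), replacing the placeholder with the VM's IP:
# for name, ok in check_ports("<VM IP>").items():
#     print(name, "reachable" if ok else "not reachable")
```

On a default QuickStart VM the JobTracker port would be expected to show as not reachable, because MR1 is not running there.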

Created 07-04-2015 02:38 AM

Thanks!

From my host I can ping the IP of the Cloudera VMware VM. I also edited my hosts file (192.168.52.129 quickstart.cloudera).

This is my Eclipse MapReduce location configuration:

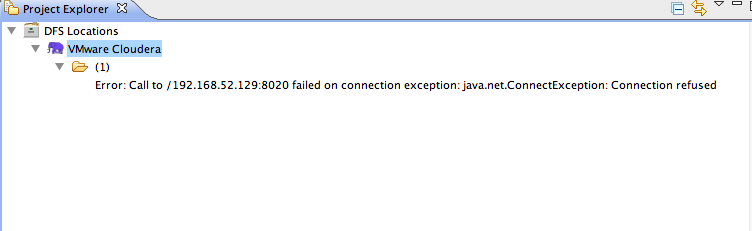

And this is my error:

Yes you are right! I'd like to use MapReduce v2 (YARN). So is it a plugin for MapReduce v2?

Thanks a lot for your help.

Created 09-30-2016 09:18 AM

I have the same problem with my Eclipse. I tried to connect to the Cloudera DFS (VMware) from Eclipse (Windows) over both NAT and bridged networking. Can someone please help me?

Created 04-22-2017 02:00 AM

Here we need to add the VM credentials to get access to the Hadoop ecosystem. I was able to add the username, but I am still struggling to find where to put the password.

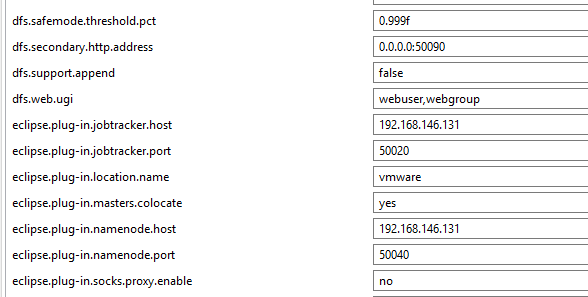

Set eclipse-plug-in.user.name=<your VM username>

Created 08-24-2017 11:10 PM

Sumit

Created 11-07-2018 06:08 AM

I have the same problem, any solution for this?