Configure Storage capacity of Hadoop cluster

- Labels: Apache Hadoop

Created on 03-04-2016 10:22 AM - edited 08-19-2019 04:17 AM

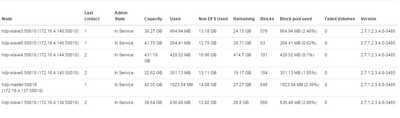

We have a 5 node cluster with the following configuration for the master and slaves.

- HDPMaster: 35 GB, 500 GB
- HDPSlave1: 15 GB, 500 GB
- HDPSlave2: 15 GB, 500 GB
- HDPSlave3: 15 GB, 500 GB
- HDPSlave4: 15 GB, 500 GB
- HDPSlave5: 15 GB, 500 GB

But the cluster is not using much of that space. I am aware that it reserves some space for non-DFS use, but it is reporting an uneven capacity for each slave node. Is there a way to reconfigure HDFS?

PFA.

Even though all the nodes have the same hard disk, only slave 4 is taking 431 GB; all the remaining nodes are utilizing very little space. Is there a way to resolve this?

Created 03-05-2016 10:26 AM

I have never seen the same number for all the slave nodes, because of how the data is distributed.

To even out block distribution across the cluster, HDFS provides a utility program called balancer:

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#balancer
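As a rough illustration of what the balancer's threshold means, the sketch below compares each datanode's utilization with the cluster-wide utilization. The function name, node names, and byte counts are hypothetical, and this is only a model of the balance criterion, not the balancer's actual implementation. The 10 percent default mirrors the balancer's documented default threshold.

```python
def classify_datanodes(nodes, threshold_pct=10.0):
    """Classify datanodes relative to the cluster-wide utilization.

    nodes maps a (hypothetical) node name to (used_bytes, capacity_bytes).
    A node counts as balanced when its utilization is within
    threshold_pct percentage points of the cluster utilization.
    """
    total_used = sum(used for used, _ in nodes.values())
    total_capacity = sum(cap for _, cap in nodes.values())
    cluster_util = 100.0 * total_used / total_capacity

    status = {}
    for name, (used, cap) in nodes.items():
        util = 100.0 * used / cap
        if util > cluster_util + threshold_pct:
            status[name] = "over-utilized"
        elif util < cluster_util - threshold_pct:
            status[name] = "under-utilized"
        else:
            status[name] = "balanced"
    return cluster_util, status


if __name__ == "__main__":
    # Made-up numbers, only to show the call shape.
    example = {"slave1": (90, 100), "slave2": (10, 100)}
    print(classify_datanodes(example))
```

On a real cluster the balancer itself is started with `hdfs balancer -threshold <percent>`. Note that it moves blocks between datanodes; it does not change the capacity each datanode reports.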

Created 03-07-2016 10:14 AM

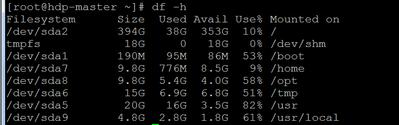

@vinay kumar What's the output of df -h on slave 4?

You can add /hadoop, restart HDFS, and then remove the other mounts from the settings.

Created on 03-07-2016 10:32 AM - edited 08-19-2019 04:17 AM

I attached an image of the df -h output for slave 4 in my previous comment. If I remove the other directories, wouldn't it affect the existing cluster in any way?

I am getting this error after removing the other directories and replacing them with /hadoop. And yes, the cluster size has increased.

Created 03-07-2016 10:34 AM

@vinay kumar I was going to add /hadoop first and then remove the other directories after some time.

Created 03-07-2016 10:41 AM

I think I now have a clear picture of it. Since the / mount is partitioned with 400 GB, we should use it alone to make use of that space. But the existing configuration is the default given by Ambari. Wouldn't changing it affect the cluster in any way? Should I take care of anything?

Created 03-07-2016 10:48 AM

@vinay kumar We allocate dedicated disks for HDFS data. We have to modify the datanode dir setting during the install.

Created 03-07-2016 01:15 PM

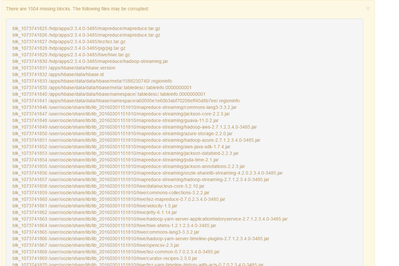

Adding /hadoop and deleting the other directories after some time results in missing blocks. Is there any way to overcome this? When I run the hdfs fsck command, it shows that all blocks are missing. The reason could be the removal of the directories. Do we need to copy the data from the old directories into the new directory (/hadoop)? Will that help?
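One way to catch this situation before it happens might be a pre-check like the sketch below, which lists directories that are about to be dropped from dfs.datanode.data.dir but still contain block files. It assumes the property value is a plain comma-separated list of local paths (no storage-type prefixes or file:// URIs) and that block replicas are stored as files whose names start with blk_. The function name is hypothetical, and this is not something the thread confirms as a fix.

```python
import os


def removed_dirs_with_blocks(old_value, new_value):
    """Count block files in directories dropped from dfs.datanode.data.dir.

    old_value and new_value are the comma-separated property values
    before and after the planned change. Returns a dict mapping each
    removed directory to the number of blk_* data files found under it
    (the accompanying .meta checksum files are not counted).
    """
    old_dirs = [d.strip() for d in old_value.split(",") if d.strip()]
    new_dirs = {d.strip() for d in new_value.split(",") if d.strip()}

    report = {}
    for directory in old_dirs:
        if directory in new_dirs:
            continue  # still configured, nothing to check
        count = 0
        # os.walk yields nothing for a path that does not exist.
        for _root, _subdirs, files in os.walk(directory):
            count += sum(
                1 for name in files
                if name.startswith("blk_") and not name.endswith(".meta")
            )
        report[directory] = count
    return report
```

A non-zero count for a directory suggests it still holds replicas that the datanode would stop reporting once that directory is removed from the setting.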

Created 03-05-2016 03:00 PM

Maybe you have a problem with disk partitioning. Can you check how much space you have allocated to the partitions used by HDP?

Here's a link to partitioning recommendations: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.0/bk_cluster-planning-guide/content/ch_partiti...

Created on 03-07-2016 09:13 AM - edited 08-19-2019 04:17 AM

I have allocated around 400 GB for the / partition. PFA.

Created 04-11-2016 12:08 PM

Hi @vinay kumar, I think your partitioning is wrong: you are not using "/" for the HDFS directory. If you want to use the full disk capacity, you can create a folder with any name under "/", for example /data/1, on every data node using the command "#mkdir -p /data/1" and add it to dfs.datanode.data.dir. Then restart the HDFS service.

You should get the desired output.
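Before picking a directory for dfs.datanode.data.dir, it can help to check how large the filesystem behind it is, much like reading df -h. The sketch below is one possible way to do that with the Python standard library. The function name is hypothetical, and /data/1 and /hadoop are simply the example paths mentioned in this thread.

```python
import os
import shutil


def backing_filesystem(path):
    """Report the size of the filesystem that would hold `path`.

    If `path` does not exist yet (for example before mkdir -p),
    walk up to the nearest existing parent directory and probe that.
    """
    probe = os.path.abspath(path)
    while not os.path.exists(probe):
        probe = os.path.dirname(probe)

    usage = shutil.disk_usage(probe)  # named tuple: total, used, free (bytes)
    return {
        "path": path,
        "probe": probe,
        "device": os.stat(probe).st_dev,  # equal values mean same filesystem
        "total_gb": usage.total / 1024 ** 3,
        "free_gb": usage.free / 1024 ** 3,
    }


if __name__ == "__main__":
    # Example candidate directories taken from the thread.
    for candidate in ("/data/1", "/hadoop"):
        print(backing_filesystem(candidate))
```

If a candidate directory resolves to a small partition, the datanode can only offer that partition's space to HDFS, which would be consistent with the uneven capacities described in the question.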
