Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Connecting to netezza using sqoop, external ta...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Connecting to netezza using sqoop, external table creating fails with syntax error, sqoop doesnt fail.

- Labels:

-

Apache Hive

-

Apache Sqoop

Created 05-10-2019 03:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We are pulling data from a Netezza database to hive using sqoop direct option, we are providing a custom SQL it has a syntax error which makes the mapper fail, but the sqoop command doesn't fail.

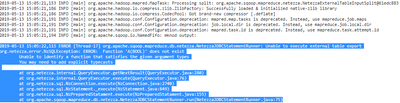

2019-05-09 18:17:05,175 ERROR [Thread-17] org.apache.sqoop.mapreduce.db.netezza.NetezzaJDBCStatementRunner: Unable to execute external table export org.netezza.error.NzSQLException: ERROR: Function 'A(BOOL)' does not exist Unable to identify a function that satisfies the given argument types You may need to add explicit typecasts at org.netezza.internal.QueryExecutor.getNextResult(QueryExecutor.java:280) at org.netezza.internal.QueryExecutor.execute(QueryExecutor.java:76) at org.netezza.sql.NzConnection.execute(NzConnection.java:2740) at org.netezza.sql.NzStatement._execute(NzStatement.java:849) at org.netezza.sql.NzPreparedStatament.execute(NzPreparedStatament.java:155) at org.apache.sqoop.mapreduce.db.netezza.NetezzaJDBCStatementRunner.run(NetezzaJDBCStatementRunner.java:75)

19/05/09 18:17:25 INFO mapreduce.Job: Job job_1557423660592_0171 running in uber mode : false

19/05/09 18:17:25 INFO mapreduce.Job: map 0% reduce 0%

19/05/09 18:17:38 INFO mapreduce.Job: map 25% reduce 0%

19/05/09 18:17:39 INFO mapreduce.Job: map 100% reduce 0%

if I provide --driver org.netezza.Driver option for sqoop command, sqoop fails when it encounters above error, but doesnt use direct method for data import for successful runs.

Please help.

Created 05-10-2019 06:26 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 05-10-2019 06:36 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 05-10-2019 10:37 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you share the sqoop command? Since the sqoop command doesn't fail ss the table create in hive?

Created 05-13-2019 01:17 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @Geoffrey Shelton Okot for looking at my post, below is the sqoop command which I have used, there is a syntax error in the where clause to fail the sqoop command.

Target directory got created, and the command ran for 17 hrs without failing even after the mapper showed it failed while creating netezza external table.

sqoop-import \

-D mapreduce.job.queuename=XDL \

-D mapreduce.task.timeout=60000 \

--verbose \

--connect jdbc:netezza://server/db \

--username username \

--password ***** \

--table table_name \

--where " a(SUBSTR(src_file_nm,23,8)like'20190104%')" \

--target-dir /data/xdl/dev/HIVE_SCHEMA/tgt.db/table_name_20190509 \

--fields-terminated-by '\b' \

--num-mappers 8 \

--null-string '\\N' \

--null-non-string '\\N' \

--outdir /xdl_code/dev/LAND/CDR/TEMP \

--split-by fw_rec_nbr \

--direct

Created 05-13-2019 02:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In case of Netezza direct imports, Sqoop executes a CREATE EXTERNAL TABLE command (so you will needs CREATE EXTERNAL TABLE privilege) to create a backup of the content of the table to a temporary file and it copies the content of this file to the final output on HDFS.

I have modified the script a bit it should create a hive table called kanchamreddy_hive in /data/xdl/dev/...../.../...._20190509/ it could error out because I have tested it against Netezza

sqoop-import \ -D mapreduce.job.queuename=XDL \ -D mapreduce.task.timeout=60000 \ --verbose \ --connect jdbc:netezza://server/db \ --username username \ --password ***** \ --table table_name \ --where " a(SUBSTR(src_file_nm,23,8)like'20190104%')" \ --hive-table table_name_20190509 \ --create-hive-table \ --target-dir /data/xdl/dev/HIVE_SCHEMA/tgt.db \ --fields-terminated-by '\b' \ --num-mappers 8 \ --null-string '\\N' \ --null-non-string '\\N' \ --outdir /xdl_code/dev/LAND/CDR/TEMP \ --split-by fw_rec_nbr \ --direct

Can you try that and revert!

Created on 05-13-2019 03:13 PM - edited 08-17-2019 03:32 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Geoffrey, I tried the sqoop command you have provided for creating the hive table, still facing the same issue.

HDFS out directory gets created,

Getting a syntax error for creating the external table on NZ, but the mapper didn't fail

Last log message of the mapper

Sqoop job still showing as progressing.

The sqoop version I am using is Sqoop 1.4.6.2.6.4.0-91