Cloudera Community › Support › Support Questions

Connection failed: [Errno 111] Connection refused...

Labels: Hortonworks Data Platform (HDP)

Created on 05-18-2018 01:29 AM - edited 08-17-2019 10:44 PM

So, I'm attempting a 'start from scratch' install of HDP 2.6.4 on GCP. After 3+ days, I finally got GCP into a state where I could install the code on my instances.

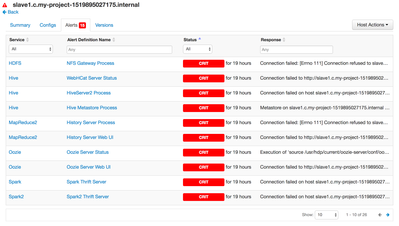

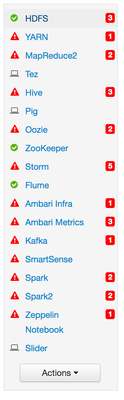

Then came the moment of truth: I logged into Ambari and it was a sea of RED, lit up like a Christmas tree! There were 30+ alerts, and the only services running were HDFS, ZooKeeper, and Flume.

Digging into the ResourceManager, I found a recurring theme:

Connection failed: [Errno 111] Connection refused to <machine_name>:<port>

At first, I thought it was simply because I hadn't opened those ports in the GCP firewall, so I opened them. But I'm still getting the errors.

Any ideas where I've gone wrong?

Created 10-29-2018 12:17 AM

I had a similar issue when I enabled SSL on HDFS, MapReduce, and YARN.

Symptoms:

- I could connect to the HDFS REST interface with curl from the command line inside the cluster

- I could open the HDFS UI from inside the cluster using PuTTY with X11 forwarding enabled and Xming

- OpenSSL run from inside the cluster returned 0 for the HDFS connection

- No connection to HDFS could be made from outside the cluster using curl, openssl, or telnet

- The ResourceManager and JobHistory UIs both worked fine

- We had a firewall, but even with it disabled the connection to HDFS failed

The issue was that our hosts have both internal network IP addresses (InfiniBand) and externally accessible IP addresses. When HTTPS was enabled, HDFS bound to the internal address and was not listening on the external address, hence the connection refused.
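To tell a missing listener apart from a firewall problem, a small TCP probe run from a client machine can help. The sketch below is a hypothetical helper (`probe` is not part of HDP or Ambari) built only on Python's standard `socket` module. A refused connection (errno 111, ECONNREFUSED, on Linux) means the host answered but nothing was listening on that address and port, which is what an internal-only bind looks like from outside; a timeout more often suggests a firewall silently dropping packets.

```python
import errno
import socket


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Attempt a TCP connection and classify the outcome.

    'open'    - something is listening and completed the handshake
    'refused' - the host replied with a reset: nothing is listening on
                that address/port (ECONNREFUSED, errno 111 on Linux)
    'timeout' - no reply at all, often a firewall dropping packets
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"
    except socket.timeout:
        return "timeout"
    except OSError as exc:
        # Anything else (DNS failure, unreachable network, ...) is reported by name.
        return f"error: {errno.errorcode.get(exc.errno, exc.errno)}"
```

In the situation described above, calling `probe` with the NameNode's external address and port 50470 from outside the cluster would be expected to return "refused", while the same call against the internal address from inside the cluster would return "open".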

The solution was to add the dfs.namenode.https-bind-host=0.0.0.0 property (in hdfs-site) so that the service listens on all network interfaces.
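To confirm what a node is actually configured with, you can read the bind-host keys out of its hdfs-site.xml. This is a minimal sketch using only Python's standard `xml.etree.ElementTree`. The helper name `bind_hosts` is hypothetical, and the key list assumes the NameNode bind-host properties from Hadoop's hdfs-default.xml (the RPC, service RPC, HTTP, and HTTPS variants).

```python
import xml.etree.ElementTree as ET

# NameNode properties that control which local address each endpoint binds to.
# A value of 0.0.0.0 means "listen on all interfaces".
BIND_HOST_KEYS = (
    "dfs.namenode.rpc-bind-host",
    "dfs.namenode.servicerpc-bind-host",
    "dfs.namenode.http-bind-host",
    "dfs.namenode.https-bind-host",
)


def bind_hosts(xml_text: str) -> dict:
    """Return {property: value} for each NameNode *-bind-host key that is set.

    Expects Hadoop's configuration format:
    <configuration><property><name>..</name><value>..</value></property>...
    """
    root = ET.fromstring(xml_text)
    found = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name in BIND_HOST_KEYS:
            found[name] = (prop.findtext("value") or "").strip()
    return found
```

On an HDP node you might call it as `bind_hosts(open("/etc/hadoop/conf/hdfs-site.xml").read())`; that path is an assumption, so check where your configuration lives. Because Ambari regenerates these files, the property itself would normally be added through Ambari's HDFS configuration (for example as a custom hdfs-site property) rather than edited by hand.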

If you (or anyone else getting connection refused errors) have multiple network interfaces, it might be worth checking which address the port is bound to:

```
$ netstat -nlp | grep 50470
tcp 0 0 <internal IP address>:50470 0.0.0.0:* LISTEN 23521/java

$ netstat -nlp | grep 50070
tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 23521/java
```
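The same check can be scripted when there are many ports to look at. The sketch below is a hypothetical helper that parses `netstat -nlp` output in the Linux net-tools column layout shown above and lists the listening TCP ports that are bound only to specific addresses rather than to 0.0.0.0 (or `::` for IPv6). It assumes that layout and ignores UDP.

```python
import re

# Listening TCP line from `netstat -nlp` (net-tools format):
# proto recv-q send-q local-address:port foreign-address state pid/program
LINE_RE = re.compile(r"^tcp6?\s+\d+\s+\d+\s+(\S+):(\d+)\s+\S+\s+LISTEN\b")

# Addresses meaning "all interfaces" for IPv4 and IPv6.
WILDCARDS = ("0.0.0.0", "::")


def listeners(netstat_output: str) -> dict:
    """Map each listening TCP port to the local addresses it is bound to."""
    result = {}
    for line in netstat_output.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            addr, port = m.group(1), int(m.group(2))
            result.setdefault(port, []).append(addr)
    return result


def specific_only(netstat_output: str) -> list:
    """Ports with no wildcard bind, i.e. not reachable on every interface."""
    return sorted(
        port
        for port, addrs in listeners(netstat_output).items()
        if not any(a in WILDCARDS for a in addrs)
    )
```

Applied to the two output lines above (with a real address substituted for the internal IP placeholder), `specific_only` would return `[50470]`, flagging the HTTPS port as not listening on every interface. The input can be captured with `subprocess.run(["netstat", "-nlp"], capture_output=True, text=True).stdout`.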

Created 01-11-2019 02:36 PM

@Mike Wong Any update here? I have exactly the same problem.