ControlRate and BackPressure do not seem to control the throughput

Labels: Apache NiFi

Created on 06-13-2017 10:55 AM - edited 08-17-2019 09:34 PM


hi all,

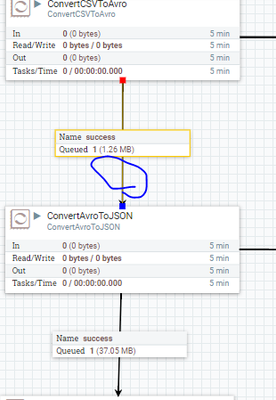

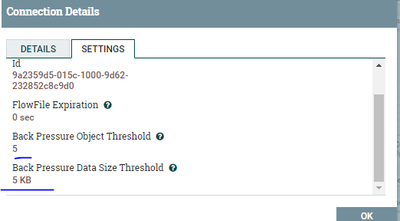

I am very new to NiFi. I tried to use backpressure to control throughput and ran a test, setting the backpressure threshold on the highlighted connection as shown in the screen capture. However, the 37.05 MB of data seems to pass from "ConvertCSVToAvro" to "ConvertAvroToJSON" quickly, so it feels like backpressure doesn't work.

I have read https://community.hortonworks.com/articles/9785/nifihdf-dataflow-optimization-part-2-of-2.html and followed the settings described there.

Could anyone share tips on using ControlRate and backpressure to avoid overwhelming the system? Currently, if the input data is larger than 500 MB (CSV), NiFi hits an OOM error.

Created 06-13-2017 12:49 PM


Backpressure is not meant to control the data rate in your dataflow. The intent of the backpressure settings on a connection is to limit the amount of data allowed to queue. Both backpressure settings are "soft" limits. Once backpressure kicks in on a connection, the processor feeding that connection is no longer allowed to run.

So in your case above, you have backpressure set to 5 objects (FlowFiles) or 5 KB of content. Since the queue was empty, no backpressure was being applied when the 37.05 MB FlowFile arrived at your ConvertCSVToAvro processor, so that processor was allowed to run. That one FlowFile was processed and placed on the outbound connection. Only at that point did backpressure kick in, because one of your backpressure thresholds had been exceeded. The ConvertCSVToAvro processor is now prevented from running until the queue drops back below both thresholds (5 FlowFiles and 5 KB of queued data). If all your processors are processing FlowFiles rapidly, backpressure will be applied only rarely.

Also keep in mind that, for efficiency, some processors work on batches of FlowFiles. With a backpressure object threshold of 5, for example, you may see a queue holding more than 5 FlowFiles, because the whole batch is placed on the outbound queue at once. The processor that did the batch processing is then not allowed to run again until that outbound connection drops below 5 FlowFiles.
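To make the "soft limit" behaviour concrete, here is a minimal Python sketch of the scheduling rule described above. It is a toy model, not NiFi code: the `Connection` class and `try_run_upstream` function are hypothetical names, and treating a threshold as engaged once it is reached or exceeded is an assumption.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Connection:
    """Toy model of a connection with soft backpressure thresholds."""
    max_objects: int
    max_bytes: int
    queue: deque = field(default_factory=deque)  # queued FlowFile sizes, in bytes

    def backpressure_engaged(self) -> bool:
        # Thresholds are compared only against what is already queued.
        return (len(self.queue) >= self.max_objects
                or sum(self.queue) >= self.max_bytes)


def try_run_upstream(outbound: Connection, flowfile_size: int) -> bool:
    """Run the upstream processor once, if the scheduler allows it."""
    if outbound.backpressure_engaged():
        return False  # processor is not scheduled at all
    # The check happened before the run, so the output is enqueued
    # no matter how large it is. That is what makes the limit "soft".
    outbound.queue.append(flowfile_size)
    return True


conn = Connection(max_objects=5, max_bytes=5 * 1024)
big = int(37.05 * 1024 * 1024)

first = try_run_upstream(conn, big)    # queue was empty, so the run is allowed
second = try_run_upstream(conn, big)   # queue now holds far more than 5 KB: blocked
conn.queue.popleft()                   # downstream processor consumes the FlowFile
third = try_run_upstream(conn, big)    # queue is empty again, so the run is allowed

print(first, second, third)  # True False True
```

In this model the 5 KB threshold never stops the 37.05 MB FlowFile itself. It only decides whether the upstream processor gets scheduled for its next run.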

The ControlRate processor lets you actually control the throughput of a dataflow. It does not slow down processing. ControlRate allows data to queue on its input side and, based on its configured settings, lets only x FlowFiles (or x amount of data) through over y amount of time. Let's say it is configured to let 5 KB of data through every 1 minute. If you feed it a 37 MB FlowFile, it does not transfer just pieces of that FlowFile. It passes the entire 37 MB FlowFile and then does not allow another FlowFile through until the average data rate has dropped back to 5 KB per minute.
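As a rough illustration of the 5 KB per minute example, the sketch below models the behaviour described above. It is not the actual ControlRate implementation: the `RateGate` class is hypothetical, and it assumes a released FlowFile blocks further releases for as long as it takes the running average to fall back to the configured rate.

```python
class RateGate:
    """Toy model: whole FlowFiles pass, then the gate closes until the
    average rate is back at max_bytes per period_seconds."""

    def __init__(self, max_bytes: int, period_seconds: float):
        self.max_bytes = max_bytes
        self.period_seconds = period_seconds
        self.blocked_until = 0.0  # time (in seconds) when the gate reopens

    def try_release(self, flowfile_bytes: int, now: float) -> bool:
        if now < self.blocked_until:
            return False  # FlowFile stays queued in front of the gate
        # The FlowFile is never split: it passes whole, and the "debt"
        # it creates is paid off in waiting time.
        periods_owed = flowfile_bytes / self.max_bytes
        self.blocked_until = now + periods_owed * self.period_seconds
        return True


gate = RateGate(max_bytes=5 * 1024, period_seconds=60)

print(gate.try_release(37 * 1024 * 1024, now=0.0))  # True: the 37 MB FlowFile passes whole
print(gate.try_release(1024, now=3600.0))           # False: an hour later, still blocked
print(round(gate.blocked_until / 60, 1))            # 7577.6 minutes, a bit over 5 days
```

Under these assumptions a single 37 MB FlowFile at 5 KB per minute keeps the gate closed for about 7,578 minutes, which is why the rate should be sized with the largest expected FlowFile in mind.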

Because of how this works, data can continue to queue in front of ControlRate. This is where backpressure settings become important: they stop upstream processors from running. You can set backpressure all the way upstream to your data ingest processors so that they stop accepting new FlowFiles.
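The way backpressure propagates upstream can be sketched with a small simulation. This is a toy model with hypothetical names (`simulate`, `THRESHOLD`), assuming a three-step flow of ingest, convert, and a ControlRate that is currently letting nothing through, with an object threshold of 5 on both connections.

```python
from collections import deque

THRESHOLD = 5  # object threshold on every connection (illustrative value)


def is_full(queue: deque) -> bool:
    return len(queue) >= THRESHOLD


def simulate(ticks: int) -> tuple:
    """Return (queued before convert, queued before ControlRate, total ingested)."""
    q1, q2 = deque(), deque()  # ingest -> q1 -> convert -> q2 -> ControlRate
    ingested = 0
    for _ in range(ticks):
        # ControlRate is holding everything back, so q2 is never drained.
        # convert runs only if it has input and its outbound queue is not full.
        if q1 and not is_full(q2):
            q2.append(q1.popleft())
        # ingest runs only if its outbound queue is not full.
        if not is_full(q1):
            q1.append(ingested)
            ingested += 1
    return len(q1), len(q2), ingested


print(simulate(100))  # (5, 5, 10)
```

After 100 scheduling passes only 10 FlowFiles have been accepted: once the queue in front of ControlRate fills, the convert step stops, its input queue fills, and the ingest step stops as well.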

Thanks,

Matt


Created 06-13-2017 03:57 PM


Thanks for sharing, @Matt Clarke.

So even if we set the backpressure/ControlRate thresholds, we still need to avoid a single large file that could crash NiFi (e.g. with an OOM), right?

Created 06-13-2017 04:31 PM


NiFi at its core has no issues working with very large files. Often, when you run into OOM, it is because of what you are trying to do with those very large files once they are in NiFi. In the majority of cases, OOM can be avoided through dataflow design and by tuning the heap size allocated to the NiFi JVM. The content of a FlowFile does not live in heap memory, but the FlowFile attributes do (except when they are swapped out to disk in large queues). So avoid extracting large amounts of content into FlowFile attributes, avoid splitting very large files into large numbers of small FlowFiles using a single processor, avoid merging a very large number of FlowFiles into a single FlowFile, and so on. You can still do these kinds of things, but you may need to do them in two stages rather than one. For example, split large files every 5000 lines first, and then split the 5000-line FlowFiles line by line (a huge difference in heap usage).
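A quick back-of-the-envelope calculation shows why the two-stage split helps. The sketch below is only arithmetic, not NiFi code. It assumes that a split processor keeps every FlowFile it creates during one run on the heap until that run finishes, and the function names and the 10-million-line input are made up for illustration.

```python
import math


def peak_flowfiles_single_stage(total_lines: int) -> int:
    """One processor run creates one FlowFile per line."""
    return total_lines


def peak_flowfiles_two_stage(total_lines: int, chunk_lines: int = 5000) -> int:
    """Stage 1 creates one FlowFile per chunk in a single run; stage 2 then
    splits one chunk per run, creating at most chunk_lines FlowFiles each time."""
    stage_one = math.ceil(total_lines / chunk_lines)
    stage_two = min(chunk_lines, total_lines)
    return max(stage_one, stage_two)


lines = 10_000_000  # hypothetical input size
print(peak_flowfiles_single_stage(lines))  # 10000000
print(peak_flowfiles_two_stage(lines))     # 5000
```

Under these assumptions, the largest number of FlowFiles any single run has to track drops from ten million to five thousand. Putting backpressure on the connection between the two stages also keeps the second stage from being flooded.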

If you found this answer addressed your question, please mark it as accepted to close out this thread.

Thanks, Matt