Support Questions

- Cloudera Community

- Support

- Support Questions

- ConvertJSONtoSQL Issue: How to get unmatched Colum...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

ConvertJSONtoSQL Issue: How to get unmatched Columns?

- Labels:

-

Apache NiFi

Created 12-03-2020 03:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am using ConvertJSONtoSQL to convert simple JSON to a SQL statement for inserting rows in DB2. I am using ConvertJSONtoSQL and PutSQL to write to DB2, but it is omitting the unmatched columns.

How can I add unmatched columns to the SQL? Is there a way to add the matching names in the JSON prior to running ConvertJSONtoSQL?

I tried using AttributestoJSON but it didn't work. Can someone please help me?

Created on 12-04-2020 02:39 AM - edited 12-04-2020 02:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

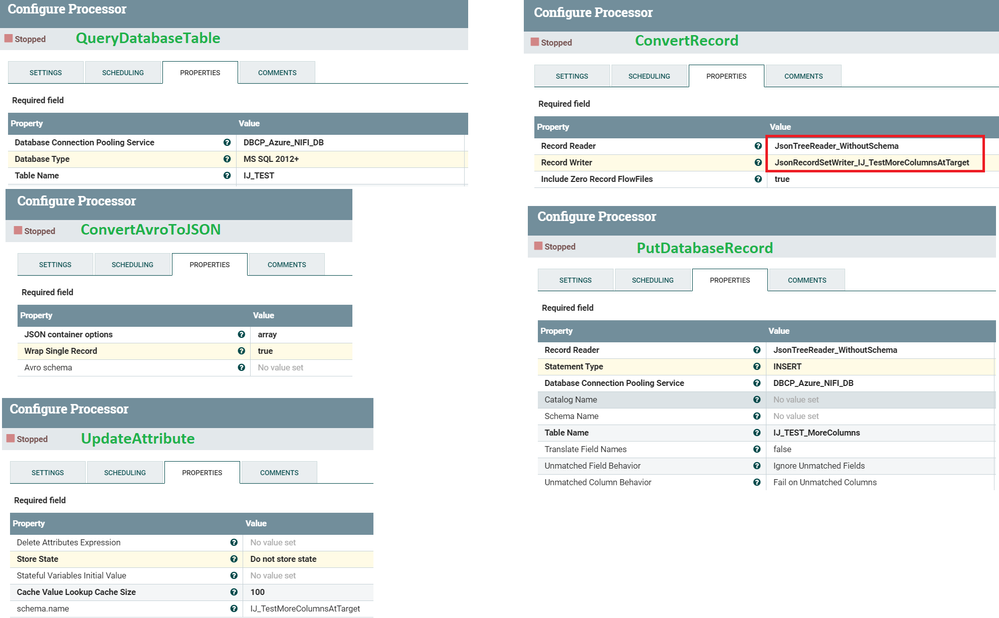

@ang_coder Tried it myself and have to correct my idea a little bit. Have a look at my example.

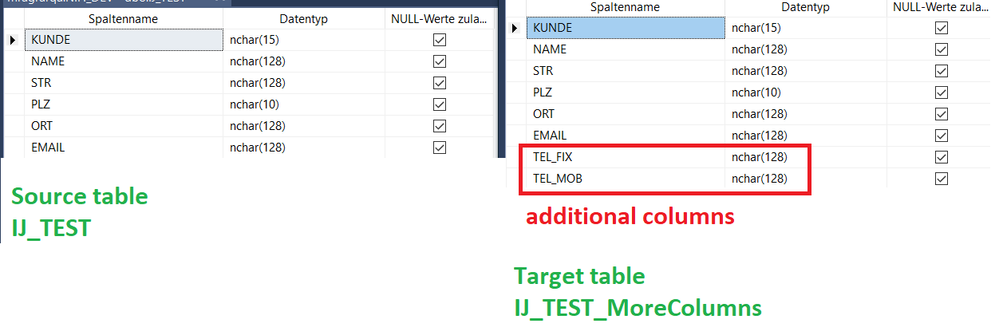

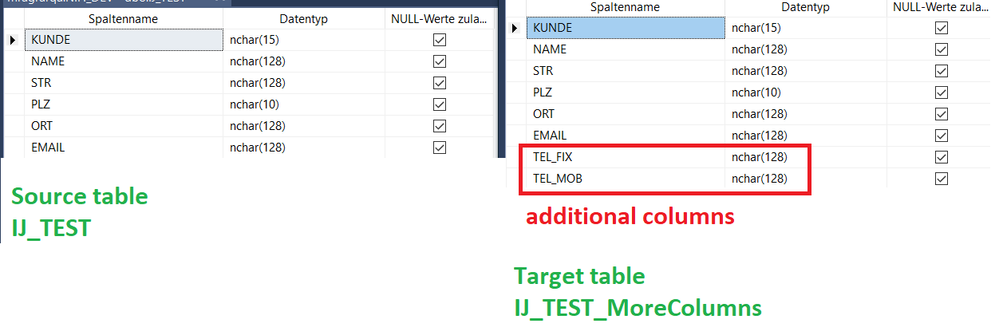

Here are my tables. The target table has two columns more than the source.

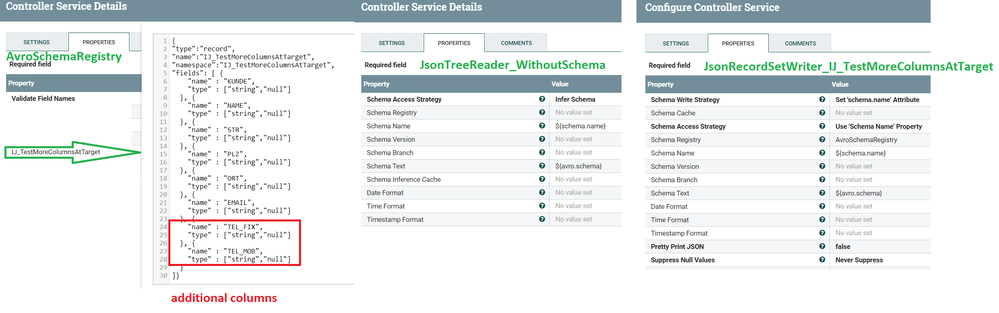

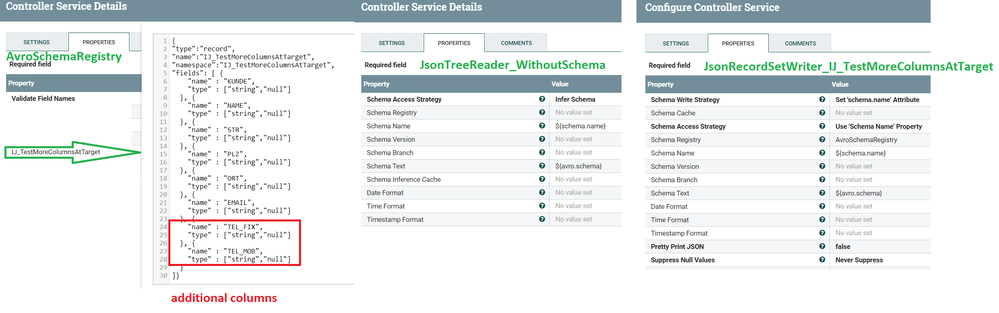

I defined following ControllerServices:

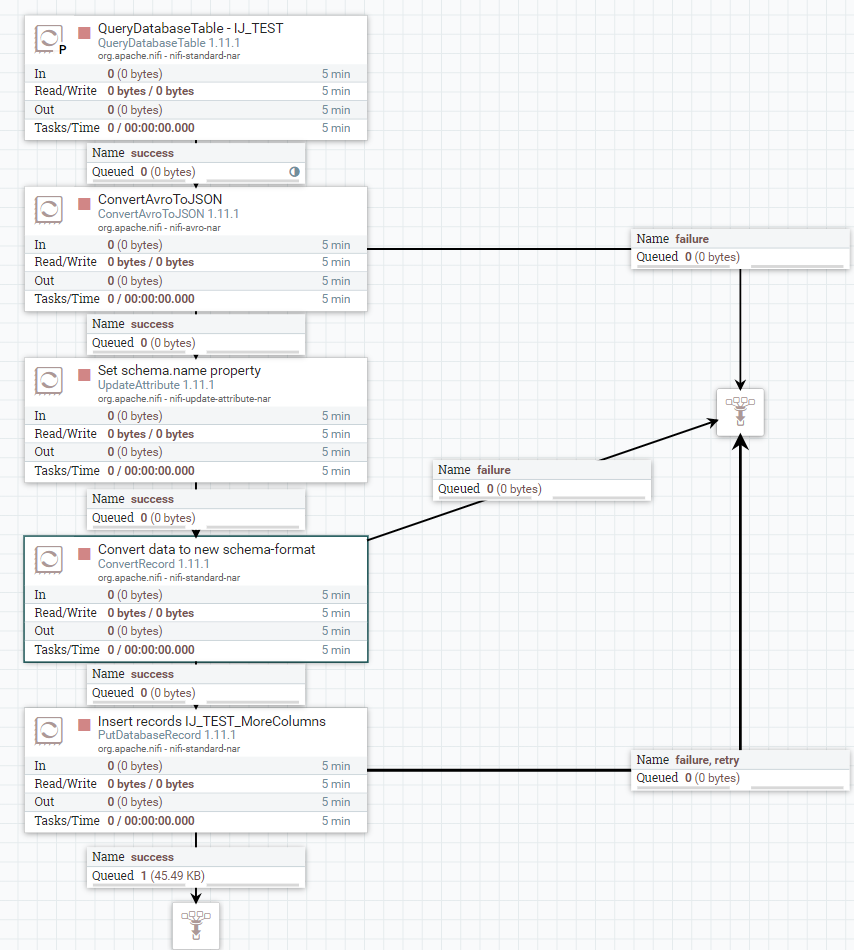

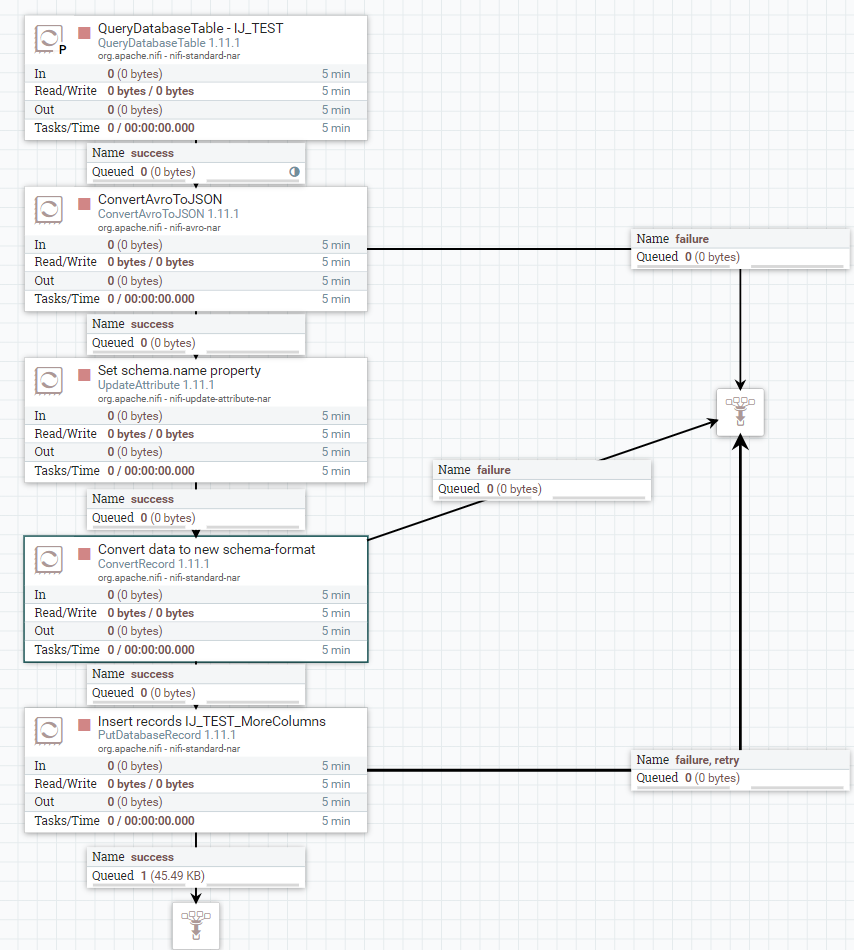

And created this flow:

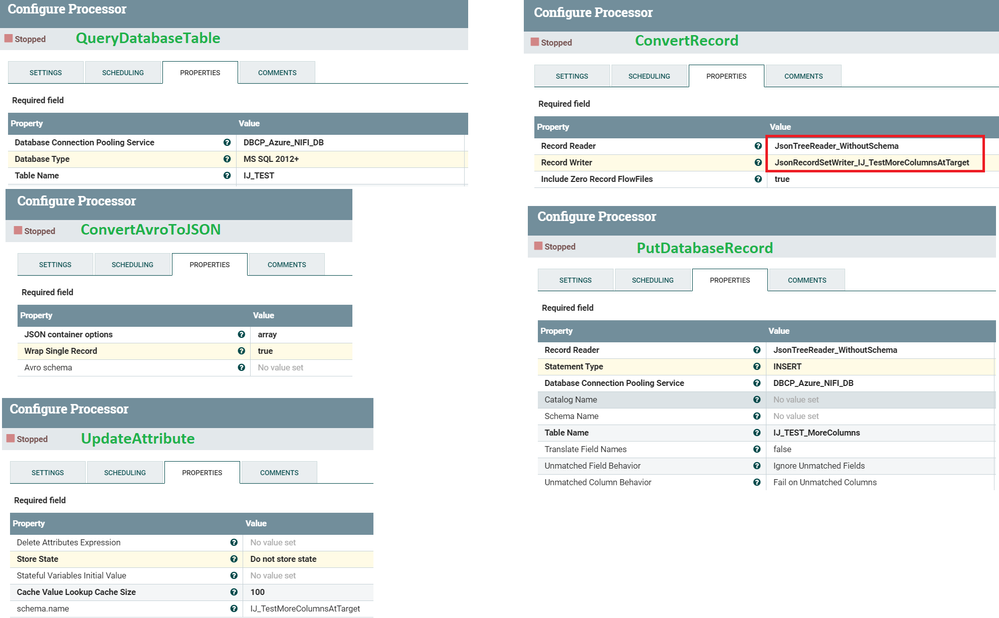

Here the information of the single processors:

In my example it works.

Sure there will be a more elegant solution but I don't know how.

Created on 12-07-2020 11:39 PM - edited 12-07-2020 11:41 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

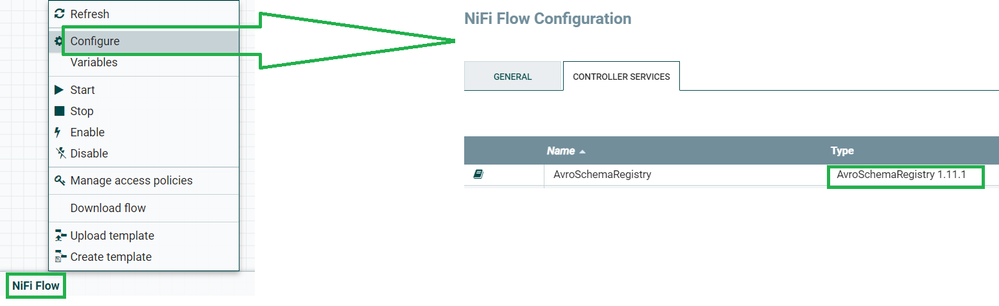

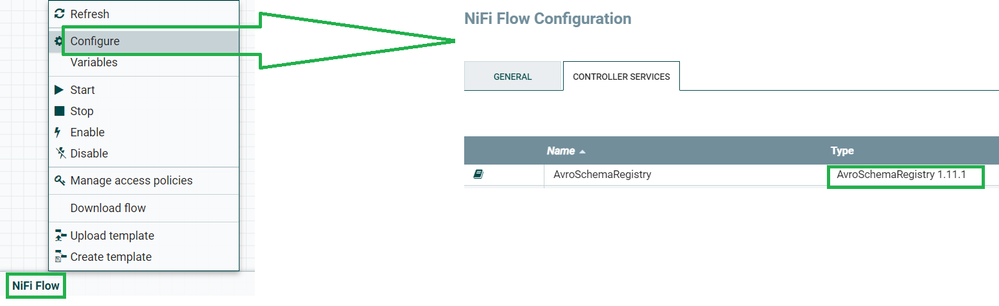

The AvroSchemaRegistry is to set in the ControllerServices.

If it should be available in your whole NiFi just go to the canvas and click anywhere right -> choose "Configure". It is the same place where you define your database connections.

If you already have JSON-format just ignore the first two steps of my example flow where data were retrieved and converted to JSON.

Start with your JSON at UpdateAttribute which doesn't care about the kind of flowfile content.

The ConvertRecord in my example uses an JSON-reader and JSON-writer where the writer points to the AvroSchemaRegistry with the schema.name set in UpdateAttribute. Therefore the JsonRecordSetWriter_IJ_TestMoreColumnsAtTarget (just to take a short name) has the specification "Schema Access Strategy" with option "Use Schema.Name Property". Have a look at the configuration details in my post before.

Hope this helps.

Created 12-04-2020 01:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not sure if i get you right and haven't done such a thing myselfe until now.

But if you use PutDatabaseRecord instead of PutSQL you have to specify a RecordReader. This will be configured as ControllerService and you can add a schema in AvroSchemaRegistry (also in ControllerServices). In this schema provide the full table definition with default values.

f.e. {

"name" : "zeichen",

"type" : ["string","null"]

},...

I suppose that all columns which are not included by your flowfile should be taken from the schema considering the default values.

Created on 12-04-2020 02:39 AM - edited 12-04-2020 02:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ang_coder Tried it myself and have to correct my idea a little bit. Have a look at my example.

Here are my tables. The target table has two columns more than the source.

I defined following ControllerServices:

And created this flow:

Here the information of the single processors:

In my example it works.

Sure there will be a more elegant solution but I don't know how.

Created 12-07-2020 10:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you please tell me where did you set the AvroSchemaRegistry? Since you are retrieving the data from SQL DB, you are getting it as Avro format. My incoming data is json format. So how can I use my flow file directly in Update Attribute and use in ConvertRecord? Does it make sense?

Created on 12-07-2020 11:39 PM - edited 12-07-2020 11:41 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The AvroSchemaRegistry is to set in the ControllerServices.

If it should be available in your whole NiFi just go to the canvas and click anywhere right -> choose "Configure". It is the same place where you define your database connections.

If you already have JSON-format just ignore the first two steps of my example flow where data were retrieved and converted to JSON.

Start with your JSON at UpdateAttribute which doesn't care about the kind of flowfile content.

The ConvertRecord in my example uses an JSON-reader and JSON-writer where the writer points to the AvroSchemaRegistry with the schema.name set in UpdateAttribute. Therefore the JsonRecordSetWriter_IJ_TestMoreColumnsAtTarget (just to take a short name) has the specification "Schema Access Strategy" with option "Use Schema.Name Property". Have a look at the configuration details in my post before.

Hope this helps.

Created 12-08-2020 11:04 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

thank you very much! This works.