Cloudera Community > Support > Support Questions

DataNode not decommissioning

Labels: Apache Hadoop

Created on 12-22-2017 04:17 AM - edited 08-18-2019 02:58 AM

Dear experts,

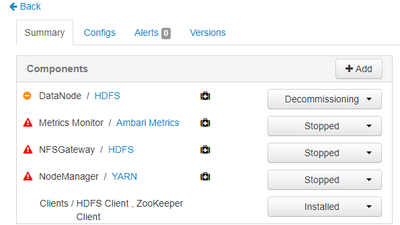

I am running HDP 2.4 on AWS cluster. I am trying to decommission a datanode but it appears the status is stuck at "Decommissioning" for long time. If i try to delete the host the below error message is being displayed. Could you please help ?

Created 12-22-2017 05:39 AM

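If the replication factor is greater than or equal to the current number of DataNodes and you try to decommission a DataNode, it may get stuck in the Decommissioning state, because the nodes that remain cannot hold the required number of replicas.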

Example: the replication factor is 3 and there are currently 3 DataNodes. If you decommission one node now, only 2 nodes remain to hold 3 replicas of each block, so it may get stuck in that state.

Make sure that the number of DataNodes remaining after the decommission is at least the replication factor.
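The rule above can be written as a quick pre-check. This is a minimal sketch: decom_safe is a hypothetical helper and the inputs are passed in by hand. Keep in mind that dfs.replication is only the cluster default; individual files can be written with a higher replication factor, and those files would need correspondingly more remaining nodes.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check: can the DataNodes left after decommissioning still
# hold REPLICATION copies of each block? Condition: live - removing >= replication.
# On a real cluster the inputs could come from (assumption, verify locally):
#   hdfs getconf -confKey dfs.replication   # default replication factor only
#   hdfs dfsadmin -report -live              # lists the live DataNodes
decom_safe() {
  local replication="$1" live="$2" removing="${3:-1}"
  local remaining=$(( live - removing ))
  if (( remaining >= replication )); then
    echo "ok: ${remaining} DataNodes remain for replication ${replication}"
  else
    echo "blocked: ${remaining} DataNodes remain for replication ${replication}"
  fi
}
```

With the numbers from the example, decom_safe 3 3 prints "blocked: 2 DataNodes remain for replication 3", while decom_safe 3 4 prints "ok: 3 DataNodes remain for replication 3".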

Reference : https://issues.apache.org/jira/browse/HDFS-1590

Thanks,

Aditya

Created 06-22-2018 06:27 PM

A DataNode is a slave (worker) component of the NameNode and shouldn't sit on the same host as the Standby NameNode, which is a master process. With over 24 nodes you can afford to have at least 3 master nodes (Active and Standby NameNode, Active and Standby ResourceManager, and 3 ZooKeeper servers).

Can you make sure the client components aren't running?

Get DataNode info:

$ hdfs dfsadmin -getDatanodeInfo datanodex:50020

Trigger a block report for the given DataNode:

$ hdfs dfsadmin -triggerBlockReport datanodex:50020
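In Hadoop 2.x the -getDatanodeInfo output covers uptime and software/config version rather than the decommission state; that state appears per node as a "Decommission Status" line in hdfs dfsadmin -report. A minimal sketch for pulling out one node's status, assuming the Hadoop 2.x report layout ("Name:", "Hostname:", optional "Rack:", then "Decommission Status :"); decom_status is a hypothetical helper name, so check your version's output before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Decommission Status" of one DataNode from
# `hdfs dfsadmin -report` output read on stdin. HOST may be the IP from the
# "Name:" line or the value of the "Hostname:" line (exact match only).
decom_status() {
  awk -v host="$1" '
    /^Name: / {
      split($2, addr, ":")          # "10.125.10.107:50010" -> "10.125.10.107"
      match_block = (addr[1] == host)
      next
    }
    /^Hostname: / {
      if ($2 == host) match_block = 1
      next
    }
    /^Decommission Status : / && match_block {
      sub(/^Decommission Status : /, "")
      print
      exit
    }
  '
}
```

Usage: hdfs dfsadmin -report | decom_status 10.125.10.107, which should print Normal, Decommission in progress, or Decommissioned.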

Can you update the exclude file on both the ResourceManager and the NameNode (master node) machines? If it doesn't exist yet, create an exclude file on both machines:

$ vi excludes

Add the address of the DataNode/slave node to decommission, e.g.:

10.125.10.107
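Editing with vi works, but the same step can be scripted so that re-running it does not add duplicate lines. A minimal sketch; add_to_exclude is a hypothetical helper. The real file paths are whatever your cluster configures: dfs.hosts.exclude in hdfs-site.xml on the NameNode (for example via hdfs getconf -confKey dfs.hosts.exclude) and yarn.resourcemanager.nodes.exclude-path in yarn-site.xml on the ResourceManager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add HOST to an exclude file (one host per line) unless
# it is already listed, creating the file if it does not exist yet.
add_to_exclude() {
  local file="$1" host="$2"
  touch "$file"
  # Make sure an existing last line ends with a newline before appending.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -x: match the whole line; -F: literal string, so dots are not wildcards.
  if ! grep -qxF -- "$host" "$file"; then
    printf '%s\n' "$host" >> "$file"
  fi
}
```

Usage (assuming dfs.hosts.exclude is set): add_to_exclude "$(hdfs getconf -confKey dfs.hosts.exclude)" 10.125.10.107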

Run the following command on the ResourceManager:

$ yarn rmadmin -refreshNodes

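This command re-reads the exclude file configured in yarn-site.xml (the yarn.resourcemanager.nodes.exclude-path property) and decommissions the listed node from YARN, so the ResourceManager will no longer assign jobs to it. It does not decommission the HDFS DataNode itself; for that, run hdfs dfsadmin -refreshNodes on the NameNode, which re-reads the file set in dfs.hosts.exclude (hdfs-site.xml).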
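Because the YARN refresh only covers the NodeManager, the refresh step for both services can be sketched as below. refresh_excludes and the DRY_RUN switch are hypothetical conveniences; the two refreshNodes subcommands are standard, but they normally have to run as the HDFS superuser and a YARN admin respectively (for example via sudo -u hdfs on HDP, which is an assumption about your setup).

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask the NameNode and the ResourceManager to re-read
# their exclude files. Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

refresh_excludes() {
  # HDFS: re-reads dfs.hosts.exclude and starts DataNode decommissioning
  run hdfs dfsadmin -refreshNodes
  # YARN: re-reads yarn.resourcemanager.nodes.exclude-path for NodeManagers
  run yarn rmadmin -refreshNodes
}
```

Running DRY_RUN=1 refresh_excludes first prints the two commands so you can review them before executing them for real.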

The tricky part is that even if none of the DataNode components are running, deleting the DataNode host would also impact your Standby NameNode, which runs on the same host.

I recommend you move the Standby NameNode to another host. The current host should then ONLY run a DataNode and NodeManager, or at most client software such as ZooKeeper and Kerberos clients.

HTH

Created 06-23-2018 09:46 PM

Any updates?

Created 06-24-2018 07:56 PM

Unfortunately, there is no FORCE command for decommissioning in Hadoop. Once the host is in the excludes file and you run the refresh commands (hdfs dfsadmin -refreshNodes on the NameNode for the DataNode, yarn rmadmin -refreshNodes on the ResourceManager for the NodeManager), decommissioning should start.
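Since there is no force option, the practical step after the refresh is to wait and watch. A minimal polling sketch, assuming hdfs dfsadmin -report -decommissioning (available in Hadoop 2.7) and its "Name:"/"Hostname:" lines; wait_for_decommission, listed and report_decommissioning are hypothetical names, and the 60 polls x 60 s defaults are arbitrary. Dropping out of the in-progress list does not by itself prove success, so confirm the node shows Decommissioned in the full report.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until HOST no longer appears among the DataNodes
# that are "decommission in progress", or give up after MAX_TRIES polls.
report_decommissioning() {
  hdfs dfsadmin -report -decommissioning
}

# Succeeds if HOST (IP or hostname, exact match) is listed in report text on stdin.
listed() {
  awk -v h="$1" '
    /^Name: /     { split($2, a, ":"); if (a[1] == h) found = 1 }
    /^Hostname: / { if ($2 == h) found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

wait_for_decommission() {
  local host="$1" max_tries="${2:-60}" interval="${3:-60}" i
  for (( i = 1; i <= max_tries; i++ )); do
    if ! report_decommissioning | listed "$host"; then
      # No longer in progress: confirm "Decommissioned" in the full report.
      echo "not decommissioning"
      return 0
    fi
    sleep "$interval"
  done
  echo "still decommissioning"
  return 1
}
```

If the helper keeps reporting "still decommissioning", that is the situation described in the first answer, where the remaining DataNodes cannot satisfy the replication factor.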

Having a NameNode and a DataNode on the same host (master and slave/worker, respectively) is neither recommended nor good architecture. With over 24 nodes, you should have planned 3 to 5 master nodes and run strictly the DataNode, NodeManager and, e.g., the ZooKeeper client on the slaves (worker nodes).

Moving the NameNode to a new node and then running the decommissioning will make your work easier and isolate your master processes from the slaves. This is the ONLY solution I see left for you.

HTH