Datanode added but not seen by namenode

Labels: HDFS, Hortonworks Data Platform (HDP)

Created 06-06-2022 02:07 AM

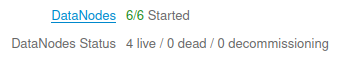

We recently added two nodes to our cluster through the Ambari wizard and installed DataNode, NodeManager, Metrics Monitor, and LogFeeder on them.

The DataNode and NodeManager start correctly but are not shown as live.

topology_mappings.data was updated on both the mnodes and the cnodes:

cat /etc/hadoop/conf/topology_mappings.data

[network_topology]

cnode2.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.172=/default-rack

cnode5.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.169=/default-rack

cnode4.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.175=/default-rack

cnode3.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.67=/default-rack

cnode1.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.188=/default-rack

cnode6.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com=/default-rack

10.1.2.9=/default-rack

The DataNodes have two external disks to store HDFS data:

[root@node6 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vdb 200G 33M 200G 1% /grid/disk0

/dev/vdc 200G 33M 200G 1% /grid/disk

We are using HDP 2.6.5 with FreeIPA as LDAP. We checked that everything was created successfully (principals, keytabs, ...), but the logs show some Kerberos-related warnings and errors.

DataNode logs:

2022-06-06 10:45:39,357 WARN datanode.DataNode (BPServiceActor.java:retrieveNamespaceInfo(227)) - Problem connecting to server: mnode0.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com/10.1.2.145:8020

2022-06-06 10:45:39,641 WARN datanode.DataNode (BPServiceActor.java:retrieveNamespaceInfo(227)) - Problem connecting to server: mnode1.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com/10.1.2.106:8020

mnode logs:

2022-06-06 10:47:55,038 INFO ipc.Server (Server.java:doRead(1006)) - Socket Reader #1 for port 8020: readAndProcess from client 10.1.2.169 threw exception [org.apache.hadoop.security.authorize.AuthorizationException: User dn/cnode5.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com@2B87D4BC-6CF3-4350-AAF7-EFF7227D1AEF.DATALAKE.COM (auth:KERBEROS) is not authorized for protocol interface org.apache.hadoop.hdfs.server.protocol.DatanodeProtocol: this service is only accessible by dn/10.1.2.169@2B87D4BC-6CF3-4350-AAF7-EFF7227D1AEF.DATALAKE.COM]
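A note on reading the NameNode message above: it names two principals, the one the DataNode authenticated with and the one the NameNode expected for that client address. When the expected principal carries an IP address in place of a hostname, that usually means the NameNode could not reverse-resolve the client IP. The sketch below pulls both host parts out of such a log line. The function name `principal_hosts` is hypothetical, and the patterns assume the message format shown in this thread.

```shell
#!/usr/bin/env bash
# Sketch: extract the host part of both principals from a NameNode
# AuthorizationException line. principal_hosts is a hypothetical helper name.
principal_hosts() {
  local line="$1" actual expected note=""
  # Host part of the principal the client authenticated as ("User svc/host@REALM").
  actual=$(printf '%s\n' "$line" | sed -n 's|.*User [^/]*/\([^@]*\)@.*|\1|p')
  # Host part of the principal the NameNode expected for this client address.
  expected=$(printf '%s\n' "$line" | sed -n 's|.*only accessible by [^/]*/\([^@]*\)@.*|\1|p')
  # Flag the case where the expected host is a dotted IPv4 address.
  if printf '%s\n' "$expected" | grep -Eq '^[0-9]+(\.[0-9]+){3}$'; then
    note=" (expected host is an IP address)"
  fi
  echo "authenticated=${actual} expected=${expected}${note}"
}
```

Passing the mnode log line above as the single argument would report the cnode5 hostname as the authenticated host and 10.1.2.169 as the expected one.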

Created 06-07-2022 11:25 AM

Hi @enirys ,

It looks like a DNS resolution issue. Could you check whether following this article resolves it? https://my.cloudera.com/knowledge/ERROR-quot-is-not-authorized-for-protocol-interface?id=304462
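A quick way to test the DNS theory is to confirm, from the NameNode host, that forward and reverse lookups for a new DataNode agree. The following is a minimal sketch, assuming a Linux host with glibc `getent`. The helper names `resolve_forward`, `resolve_reverse`, and `check_dns` are hypothetical. Note that `getent` goes through the system resolver order, so an /etc/hosts entry would also satisfy it.

```shell
#!/usr/bin/env bash
# Sketch: check that a hostname and an IP resolve to each other.
# All function names here are hypothetical helpers, not part of Hadoop or FreeIPA.

# First IPv4 address the system resolver returns for a hostname.
resolve_forward() { getent ahostsv4 "$1" | awk 'NR==1 {print $1}'; }

# First name the system resolver returns for an IP address.
resolve_reverse() { getent hosts "$1" | awk 'NR==1 {print $2}'; }

check_dns() {
  local fqdn="$1" ip="$2" fwd rev
  fwd=$(resolve_forward "$fqdn")
  rev=$(resolve_reverse "$ip")
  if [ "$fwd" = "$ip" ] && [ "$rev" = "$fqdn" ]; then
    echo "OK: $fqdn <-> $ip"
  else
    # An empty result means the lookup returned nothing.
    echo "MISMATCH: forward=${fwd:-none} reverse=${rev:-none}"
    return 1
  fi
}
```

For the node in the error message, this would be run on each mnode as `check_dns cnode5.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com 10.1.2.169`. A missing reverse result would be consistent with the NameNode expecting `dn/10.1.2.169`.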

Created 06-08-2022 01:39 AM

Hi @rki_

Yes, I confirm it's a DNS problem. After adding the two nodes to /etc/hosts, it works fine.

But since I'm using FreeIPA, how can I achieve that without editing the /etc/hosts file?

Created 06-08-2022 03:16 AM

Hi @enirys ,

You will need to add the host entries to the DNS records if FreeIPA is used to manage DNS. You can compare them with the host entries of a working DataNode in FreeIPA. Every node in a Data Lake, Data Hub, and CDP data service should be configured to use the FreeIPA DNS service for name resolution within the cluster.
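As a rough illustration of adding such a record from the command line, the sketch below builds an `ipa dnsrecord-add` command for one host. It makes several assumptions: the DNS zone is the FQDN minus its first label, that zone and the matching reverse zone are both managed by FreeIPA, and the helper name `ipa_dns_add_cmd` is hypothetical. The function only prints the command so it can be reviewed before running.

```shell
#!/usr/bin/env bash
# Sketch: print the FreeIPA CLI command that would add an A record
# (plus the matching PTR record) for a host. ipa_dns_add_cmd is a
# hypothetical helper; it does not contact the IPA server itself.
ipa_dns_add_cmd() {
  local fqdn="$1" ip="$2"
  local host="${fqdn%%.*}"   # first label, e.g. "cnode5"
  local zone="${fqdn#*.}"    # assumption: the remainder is the IPA-managed zone
  # --a-create-reverse asks IPA to create the PTR record as well;
  # this only works if the reverse zone exists in FreeIPA.
  echo "ipa dnsrecord-add ${zone} ${host} --a-rec=${ip} --a-create-reverse"
}
```

For example, `ipa_dns_add_cmd cnode5.2b87d4bc-6cf3-4350-aaf7-eff7227d1aef.datalake.com 10.1.2.169` prints a command that could then be run after `kinit` as a user allowed to modify DNS. Comparing against a working node with `ipa dnsrecord-show <zone> <host>` is a reasonable check before and after.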

Created 06-09-2022 05:59 AM

Hi @rki_

Indeed, the DNS records were not created during the enrollment process.

Creating the required records solved my issue.

Thanks a lot 😉