Cloudera Community - Support Questions


Datanode replication issue

Labels: Apache Hadoop

Created on 10-29-2018 09:16 AM - edited 08-17-2019 04:57 PM

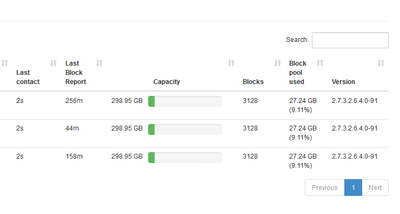

We have uploaded 9 GB of data to HDFS. We have configured 3 DataNodes and left the block size at its default of 128 MB. Since Hadoop replicates data 3 times, uploading 9 GB should consume 9 GB × 3 = 27 GB in total.

However, the attached screenshot shows that it is taking 27 GB on each DataNode. Can someone please help us understand what went wrong?

Created 10-29-2018 02:28 PM

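I believe there are other files besides your 9 GB file, and by coincidence they add up to 18 GB. With 3 DataNodes and a replication factor of 3, every DataNode holds one replica of every block, so each node's usage roughly equals the total amount of data stored in HDFS: 27 GB per node means about 27 GB of data, of which your upload accounts for 9 GB.

These other files can include component libraries, Ambari data, user data and tmp data.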
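A minimal Python sketch of the space arithmetic behind the 18 GB figure, assuming replicas are spread evenly across DataNodes and ignoring non-DFS usage. The function names are hypothetical. Because HDFS does not place two replicas of the same block on one DataNode, the effective replication is capped at the number of DataNodes; with 3 nodes and replication factor 3, each node ends up holding a full copy of the data.

```python
def per_node_usage_gb(logical_gb: float, replication: int, datanodes: int) -> float:
    """Raw space each DataNode uses for `logical_gb` of data (even spread assumed)."""
    # A block never gets two replicas on the same DataNode, so at most
    # `datanodes` replicas of it can actually exist.
    effective_replication = min(replication, datanodes)
    total_raw_gb = logical_gb * effective_replication
    return total_raw_gb / datanodes


def logical_from_per_node_gb(per_node_gb: float, replication: int, datanodes: int) -> float:
    """Invert the above: estimate the logical data size from observed per-node usage."""
    effective_replication = min(replication, datanodes)
    return per_node_gb * datanodes / effective_replication


if __name__ == "__main__":
    # Expected case: only the 9 GB upload, 3 DataNodes, replication factor 3.
    print(per_node_usage_gb(9, 3, 3))   # 9.0 (GB per node, 27 GB in total)
    # Observed case: 27 GB on each of the 3 DataNodes.
    observed_logical = logical_from_per_node_gb(27, 3, 3)
    print(observed_logical)             # 27.0 (GB of logical data)
    print(observed_logical - 9)         # 18.0 (GB not explained by the upload)
```

In other words, 27 GB per node is what you would expect from 27 GB of data, not from 9 GB.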

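Run the following command to see how much space each top-level directory is using (quote the glob so that your local shell does not expand it against the local filesystem):

hadoop fs -du -s -h '/*'

Then drill down by replacing /* with a deeper path, such as '/user/*', until you find the other files that add up to 18 GB.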
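To rank directories instead of reading the output by eye, it can be parsed. This is a minimal Python sketch, assuming `hadoop fs -du -s` is run without `-h` (so sizes are in bytes) and that each line is either `size disk_space_consumed_with_all_replicas path` (newer Hadoop releases) or `size path` (older ones). The helpers `parse_du` and `du_children` are hypothetical, and `du_children` assumes the `hadoop` CLI is on the PATH.

```python
import subprocess
from typing import List, NamedTuple, Optional


class DuEntry(NamedTuple):
    size: int           # logical bytes (one copy of the data)
    raw: Optional[int]  # bytes consumed including all replicas, if reported
    path: str


def parse_du(output: str) -> List[DuEntry]:
    """Parse `hadoop fs -du -s` output and return the entries, largest first."""
    entries = []
    for line in output.splitlines():
        parts = line.split(None, 2)
        # Skip blank lines and header rows such as the one printed by -v.
        if len(parts) < 2 or not parts[0].isdigit():
            continue
        if len(parts) == 3 and parts[1].isdigit():
            # Three-column format: size, raw size with replicas, path.
            entries.append(DuEntry(int(parts[0]), int(parts[1]), parts[2].strip()))
        else:
            # Two-column format: size, path (the path may contain spaces).
            entries.append(DuEntry(int(parts[0]), None, line.split(None, 1)[1].strip()))
    return sorted(entries, key=lambda e: e.size, reverse=True)


def du_children(path: str) -> List[DuEntry]:
    """Run `hadoop fs -du -s <path>/*` and return the children, largest first."""
    # Passing an argument list (no shell) means the glob reaches Hadoop
    # unexpanded, so it is matched against HDFS rather than the local disk.
    glob = path.rstrip("/") + "/*"
    result = subprocess.run(
        ["hadoop", "fs", "-du", "-s", glob],
        capture_output=True, text=True, check=True,
    )
    return parse_du(result.stdout)
```

For example, `du_children("/")` lists the top-level directories by size; calling it again on the largest entry's path (say `du_children("/user")`) mirrors the manual drill-down, one level at a time.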

Created 10-29-2018 03:41 PM

@Soumitra Sulav Thank you for your help. And yes, sorry: other files such as libraries, Ambari data, user data and tmp data were taking up that much space.

Actually, there was some preprocessed data stored that we were unaware of. Sorry, and thank you again.
