Cloudera Community: Support Questions


Distcp job after Hive job

Labels: Apache Hadoop, Apache Hive, Apache Oozie

Created on 04-28-2016 01:42 PM - edited 08-19-2019 03:32 AM

Hello, I have a very simple workflow with a Hive script. When I run the workflow, everything runs properly, but at the end of each Hive query inside my Hive action, a "distcp" job starts.

This job is not part of my workflow, and I do not understand why it runs.

If I run the same Hive queries from Hue or any other tool, no distcp job appears at the end.

Update: the issue also occurs when I submit the Oozie job from the command line.

The coordinator:

```xml
<coordinator-app
    name="coord_l****"
    frequency="0 4 * * *"
    start="${startTime}"
    end="${endTime}"
    timezone="UTC"
    xmlns="uri:oozie:coordinator:0.2">
  <controls>
    <timeout>${my_timeout}</timeout>
    <concurrency>${my_concurrency}</concurrency>
    <execution>${execution_order}</execution>
    <throttle>${materialization_throttle}</throttle>
  </controls>
  <action>
    <workflow>
      <app-path>${nameNode}/**/workflow.xml</app-path>
      <configuration>
        <property>
          <name>year</name>
          <value>${coord:formatTime(coord:actualTime(),'yyyy')}</value>
        </property>
        <property>
          <name>month</name>
          <value>${coord:formatTime(coord:actualTime(),'MM')}</value>
        </property>
        <property>
          <name>day</name>
          <value>${coord:formatTime(coord:actualTime(),'dd')}</value>
        </property>
        <property>
          <name>j_30_mprec_year</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -30, 'DAY'), 'yyyy')}</value>
        </property>
        <property>
          <name>j_30_mprec_month</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -30, 'DAY'), 'MM')}</value>
        </property>
        <property>
          <name>j_30_mprec_day</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -30, 'DAY'), 'dd')}</value>
        </property>
        <property>
          <name>j_7_mprec_year</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -7, 'DAY'), 'yyyy')}</value>
        </property>
        <property>
          <name>j_7_mprec_month</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -7, 'DAY'), 'MM')}</value>
        </property>
        <property>
          <name>j_7_mprec_day</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -7, 'DAY'), 'dd')}</value>
        </property>
        <property>
          <name>j_3_mprec_year</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -3, 'DAY'), 'yyyy')}</value>
        </property>
        <property>
          <name>j_3_mprec_month</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -3, 'DAY'), 'MM')}</value>
        </property>
        <property>
          <name>j_3_mprec_day</name>
          <value>${coord:formatTime(coord:dateOffset(coord:nominalTime(), -3, 'DAY'), 'dd')}</value>
        </property>
      </configuration>
    </workflow>
  </action>
</coordinator-app>
```
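To sanity-check the date parameters the coordinator hands to the workflow, here is a minimal Python sketch that mimics them for one run. It assumes `coord:dateOffset(..., -N, 'DAY')` behaves like subtracting N days in UTC (the coordinator's timezone) and that the Oozie patterns `yyyy`/`MM`/`dd` map to `%Y`/`%m`/`%d`; the function name and the sample times are hypothetical. Note that `year`/`month`/`day` come from `coord:actualTime()` (when the action was materialized) while the `j_N_mprec_*` values come from `coord:nominalTime()`, so the two can differ if an action materializes late.

```python
from datetime import datetime, timedelta


def coord_date_params(nominal: datetime, actual: datetime) -> dict:
    """Hypothetical re-implementation of the coordinator's date properties.

    year/month/day mimic coord:formatTime(coord:actualTime(), ...);
    j_N_mprec_* mimic coord:formatTime(coord:dateOffset(coord:nominalTime(), -N, 'DAY'), ...).
    """
    params = {
        "year": actual.strftime("%Y"),
        "month": actual.strftime("%m"),
        "day": actual.strftime("%d"),
    }
    for n in (30, 7, 3):
        # Assumption: a 'DAY' offset is a plain N-day shift in UTC.
        shifted = nominal - timedelta(days=n)
        params[f"j_{n}_mprec_year"] = shifted.strftime("%Y")
        params[f"j_{n}_mprec_month"] = shifted.strftime("%m")
        params[f"j_{n}_mprec_day"] = shifted.strftime("%d")
    return params


if __name__ == "__main__":
    nominal = datetime(2016, 4, 28, 4, 0)  # hypothetical nominal time (04:00 UTC)
    actual = datetime(2016, 4, 28, 4, 2)   # hypothetical materialization time
    for key, value in coord_date_params(nominal, actual).items():
        print(f"{key}={value}")
```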

The workflow:

<workflow-app name="wf_lab" xmlns="uri:oozie:workflow:0.4">

<credentials>

<credential name="hcat" type="hcat">

<property>

<name>hcat.metastore.uri</name>

<value>thrift://****</value>

</property>

<property>

<name>hcat.metastore.principal</name>

<value></value>

</property>

</credential>

</credentials>

<start to="shell_date"/>

<action name="shell_date" cred="hcat">

<shell xmlns="uri:oozie:shell-action:0.1">

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<configuration>

<property>

<name>mapred.job.queue.name</name>

<value>${queueName}</value>

</property>

</configuration>

<exec>**.sh</exec>

<file>**.sh</file>

<capture-output/>

</shell>

<ok to="maj_t"/>

<error to="kill"/>

</action>

<action name="maj_t" cred="hcat">

<hive xmlns="uri:oozie:hive-action:0.2">

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<job-xml>/apps/hive/conf/hive-site.xml</job-xml>

<configuration>

<property>

<name>oozie.hive.defaults</name>

<value>/apps/hive/conf/hive-site.xml</value>

</property>

<property>

<name>tez.queue.name</name>

<value>${queueName}</value>

</property>

<property>

<name>oozie.hive.log.level</name>

<value>INFO</value>

</property>

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

<property>

<name>mapreduce.job.queuename</name>

<value>${queueName}</value>

</property>

</configuration>

<script>**.hql</script>

<param>workflowStartYearDate=${year}</param>

<param>workflowStartMonthDate=${month}</param>

<param>workflowStartDayDate=${day}</param>

<param>j_30_mprec_year=${j_30_mprec_year}</param>

<param>j_30_mprec_month=${j_30_mprec_month}</param>

<param>j_30_mprec_day=${j_30_mprec_day}</param>

<param>j_7_mprec_year=${j_7_mprec_year}</param>

<param>j_7_mprec_month=${j_7_mprec_month}</param>

<param>j_7_mprec_day=${j_7_mprec_day}</param>

<param>j_3_mprec_year=${j_3_mprec_year}</param>

<param>j_3_mprec_month=${j_3_mprec_month}</param>

<param>j_3_mprec_day=${j_3_mprec_day}</param>

<param>workflowOldDay7=${wf:actionData('shell_date')['sub_7']}</param>

<param>workflowOldDay3=${wf:actionData('shell_date')['sub_3']}</param>

</hive>

<ok to="maj_after"/>

<error to="kill"/>

</action>

<action name="maj_after" cred="hcat">

<hive xmlns="uri:oozie:hive-action:0.2">

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<job-xml>/apps/hive/conf/hive-site.xml</job-xml>

<configuration>

<property>

<name>oozie.hive.defaults</name>

<value>/apps/hive/conf/hive-site.xml</value>

</property>

<property>

<name>tez.queue.name</name>

<value>${queueName}</value>

</property>

<property>

<name>oozie.hive.log.level</name>

<value>INFO</value>

</property>

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

<property>

<name>mapreduce.job.queuename</name>

<value>${queueName}</value>

</property>

</configuration>

<script>**.hql</script>

<param>workflowStartYearDate=${year}</param>

<param>workflowStartMonthDate=${month}</param>

<param>workflowStartDayDate=${day}</param>

</hive>

<ok to="maj_to"/>

<error to="kill"/>

</action>

<action name="maj_to" cred="hcat">

<hive xmlns="uri:oozie:hive-action:0.2">

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<job-xml>/apps/hive/conf/hive-site.xml</job-xml>

<configuration>

<property>

<name>oozie.hive.defaults</name>

<value>/apps/hive/conf/hive-site.xml</value>

</property>

<property>

<name>tez.queue.name</name>

<value>${queueName}</value>

</property>

<property>

<name>oozie.hive.log.level</name>

<value>INFO</value>

</property>

<property>

<name>hive.execution.engine</name>

<value>tez</value>

</property>

<property>

<name>mapreduce.job.queuename</name>

<value>${queueName}</value>

</property>

</configuration>

<script>***.hql</script>

<param>workflowStartYearDate=${year}</param>

<param>workflowStartMonthDate=${month}</param>

<param>workflowStartDayDate=${day}</param>

</hive>

<ok to="end"/>

<error to="kill"/>

</action>

<kill name="kill">

<message>Action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>

</kill>

<end name="end"/>

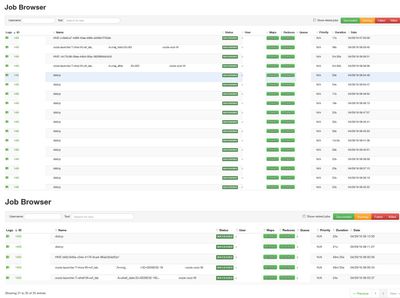

</workflow-app>Picture of the job browser :

As the picture shows, the "distcp" job runs during my Hive action and starts at the end of each Hive query in my Hive script.

Thanks

Created 04-29-2016 01:04 PM

Hi @Ludovic Rouleau. There is one known bug that could be causing this. Can you rule it out?

COMPONENT: Hive

VERSION: HDP 2.2.4 (Hive 0.14 + patches)

REFERENCE: BUG-35305

PROBLEM: With CTAS query or INSERT INTO TABLE query, after job finishes, data is moved into destination table with hadoop distcp job.

IMPACT: Hive insert queries get slow

SYMPTOMS: Hive insert queries get slow

WORK AROUND: N/A

SOLUTION: From HDP 2.2.4 onward, this property defaults to false.

The issue is observed after upgrading to HDP 2.2.4 if the following property is still set to true in hive-site.xml. Set it to false:

fs.hdfs.impl.disable.cache=false

The value true was recommended for HDP 2.2.0 to avoid a HiveServer2 OutOfMemory issue.
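One quick way to rule this out is to read the property directly from the hive-site.xml that the Hive action loads. The Python sketch below assumes the standard Hadoop-style `<configuration>`/`<property>` layout; the file path is whatever you pass on the command line (`hive-site.xml` in the current directory is only a placeholder default), and the helper names are hypothetical.

```python
import os
import sys
import xml.etree.ElementTree as ET


def get_property_from_text(xml_text, name):
    """Return the <value> of the <property> called `name`, or None if it is absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def get_property(conf_path, name):
    """Same lookup, reading a Hadoop-style XML config file from disk."""
    with open(conf_path, encoding="utf-8") as f:
        return get_property_from_text(f.read(), name)


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "hive-site.xml"  # placeholder default
    if not os.path.exists(path):
        print(f"{path} not found; pass the hive-site.xml path as the first argument")
    else:
        value = get_property(path, "fs.hdfs.impl.disable.cache")
        print(f"fs.hdfs.impl.disable.cache = {value!r}")  # None means not set in this file
```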

Created 04-28-2016 03:59 PM

Hi @Ludovic Rouleau,

I think the only place where a distcp could be launched is the first action, `shell_date`.

Look at the script it loads; you should find something like: `hadoop distcp hdfs://nn1:8020/xxx hdfs://nn2:8020/xxx`
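If the script is long, a small Python sketch like the one below lists every line that mentions distcp (it is equivalent to `grep -n distcp`). The file names are whatever scripts you pass in, for example the shell or HQL files referenced by the workflow. It is only a text search, so a distcp launched indirectly, such as by another program the script calls, would not show up.

```python
import re
import sys
from pathlib import Path
from typing import List, Tuple

DISTCP = re.compile(r"\bdistcp\b")


def find_distcp_lines(text: str) -> List[Tuple[int, str]]:
    """Return (1-based line number, line) pairs for every line mentioning distcp."""
    return [
        (lineno, line.rstrip())
        for lineno, line in enumerate(text.splitlines(), start=1)
        if DISTCP.search(line)
    ]


if __name__ == "__main__":
    # Usage: python find_distcp.py script1.sh script2.hql ...
    for name in sys.argv[1:]:
        for lineno, line in find_distcp_lines(Path(name).read_text()):
            print(f"{name}:{lineno}: {line}")
```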

Alternatively, you could skip the first action and start the workflow directly at the second one by changing `<start to="shell_date"/>` to `<start to="maj_t"/>`.

Created 04-29-2016 07:37 AM

Unfortunately, there is no Hadoop command in my Shell action, just a very simple date calculation. I added a photo of the job browser to better illustrate my point.

Thanks.
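For comparison, a shell action with `<capture-output/>` only has to print `key=value` lines (Java properties format) on stdout; Oozie then exposes them through `wf:actionData('shell_date')['sub_7']` and so on. The Python sketch below is a hypothetical stand-in for such a date script: the real script is not shown in the thread, and the `yyyy-MM-dd` output format is an assumption. Nothing in it touches Hadoop, which is consistent with the shell action not being the source of the distcp job.

```python
from datetime import date, timedelta


def shell_date_output(today: date) -> str:
    """Hypothetical date script: emit sub_7/sub_3 as Java-properties lines."""
    sub_7 = today - timedelta(days=7)
    sub_3 = today - timedelta(days=3)
    # Assumed output format yyyy-MM-dd; the real script's format is unknown.
    return f"sub_7={sub_7:%Y-%m-%d}\nsub_3={sub_3:%Y-%m-%d}\n"


if __name__ == "__main__":
    print(shell_date_output(date.today()), end="")
```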

Created 05-02-2016 07:26 AM

Thanks for your reply, @bpreachuk. I see exactly the behavior you describe.

I checked the hive-site.xml file that my workflow specifies in `<job-xml>`, and the setting is correct:

```xml
<property>
  <name>fs.hdfs.impl.disable.cache</name>
  <value>false</value>
</property>
```

I also added "SET fs.hdfs.impl.disable.cache = false;" to my HQL script (I am not sure that is allowed), but the distcp job still runs.

Could Oozie be using a different hive-site.xml?
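One way to reason about which value wins is Hadoop's usual configuration rule: resources are applied in order, a later resource overrides an earlier one, and a property marked `<final>true</final>` cannot be overridden afterwards. The Python sketch below applies that rule to a list of XML documents you supply. Which documents the Hive action actually layers (the `<job-xml>` file, the action's inline `<configuration>`, any hive-site.xml in the Oozie share lib or on the launcher classpath) and in what order depends on the Oozie and HDP versions, so the list and its ordering are assumptions you have to fill in.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path


def effective_value(conf_texts, name):
    """Resolve `name` across Hadoop-style XML configs applied in the given order.

    Later documents override earlier ones, except that once a document marks the
    property <final>true</final>, later documents cannot change it.
    Returns (value, index of the document that set it), or (None, None).
    """
    value, source, locked = None, None, False
    for idx, text in enumerate(conf_texts):
        for prop in ET.fromstring(text).iter("property"):
            if (prop.findtext("name") or "").strip() != name:
                continue
            if locked:
                continue  # an earlier document declared this property final
            value = (prop.findtext("value") or "").strip()
            source = idx
            locked = (prop.findtext("final") or "").strip().lower() == "true"
    return value, source


if __name__ == "__main__":
    # Usage: python effective_conf.py first.xml second.xml ...  (lowest priority first)
    texts = [Path(p).read_text() for p in sys.argv[1:]]
    print(effective_value(texts, "fs.hdfs.impl.disable.cache"))
```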