Cloudera Community: Support Questions


Does a ZooKeeper server have to run on each worker/slave node for spark-llap?

Labels: Apache Spark

Created on 02-13-2018 02:10 PM - edited 08-17-2019 07:59 PM


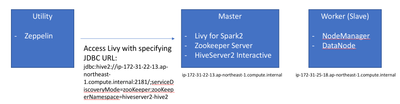

I set up Hive LLAP and spark-llap on an HDP 2.6.2 cluster following "Row/Column-level Security in SQL for Apache Spark 2.1.1". It seems to work only when a ZooKeeper server is running on a worker/slave node. Is this by design or a bug?

I set up the HDP 2.6.2 cluster as shown in the attached simplified diagram. Zeppelin is able to create a Spark session via Livy and run "SHOW DATABASES" queries through HiveServer2 Interactive. However, it stalls when I run "SELECT" queries, which need to run on the worker/slave node. I see the following error in /hadoop/yarn/log/<YARN_APPLICATION_ID>/<YARN_CONTAINER_ID>/stderr:

```
18/02/13 02:24:38 INFO ZooKeeper: Client environment:java.library.path=/usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64::/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
18/02/13 02:24:38 INFO ZooKeeper: Client environment:java.io.tmpdir=/hadoop/yarn/local/usercache/livy/appcache/application_1518487093485_0008/container_e07_1518487093485_0008_01_000002/tmp
18/02/13 02:24:38 INFO ZooKeeper: Client environment:java.compiler=<NA>
18/02/13 02:24:38 INFO ZooKeeper: Client environment:os.name=Linux
18/02/13 02:24:38 INFO ZooKeeper: Client environment:os.arch=amd64
18/02/13 02:24:38 INFO ZooKeeper: Client environment:os.version=3.10.0-693.11.6.el7.x86_64
18/02/13 02:24:38 INFO ZooKeeper: Client environment:user.name=yarn
18/02/13 02:24:38 INFO ZooKeeper: Client environment:user.home=/home/yarn
18/02/13 02:24:38 INFO ZooKeeper: Client environment:user.dir=/hadoop/yarn/local/usercache/livy/appcache/application_1518487093485_0008/container_e07_1518487093485_0008_01_000002
18/02/13 02:24:38 INFO ZooKeeper: Initiating client connection, connectString= sessionTimeout=1200000 watcher=shadecurator.org.apache.curator.ConnectionState@1620dca0
18/02/13 02:24:38 INFO LlapRegistryService: Using LLAP registry (client) type: Service LlapRegistryService in state LlapRegistryService: STARTED
18/02/13 02:24:38 INFO ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
18/02/13 02:24:38 WARN ClientCnxn: Session 0x0 for server null, unexpected error, closing socket connection and attempting reconnect
java.net.ConnectException: Connection refused
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
	at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)
	at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1125)
```

I confirmed that "SELECT" queries work when I install and run a ZooKeeper server on the worker/slave node.
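To see which ZooKeeper endpoint an executor actually tried, one can scan the container's stderr for the `ClientCnxn` "Opening socket connection to server" lines like the one in the log above. The following is a minimal Python sketch, assuming the log format matches that excerpt; the function names `zk_endpoints` and `points_at_localhost` are hypothetical helpers, not part of any Hadoop or Spark tool.

```python
import re
from typing import Iterable, List, Tuple

# Matches ZooKeeper client lines such as:
#   ... INFO ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181. ...
# Assumption: the stderr format matches the excerpt posted in this thread.
_OPEN_RE = re.compile(
    r"Opening socket connection to server (?P<host>[^/\s]+)/(?P<ip>[^:\s]+):(?P<port>\d+)"
)


def zk_endpoints(lines: Iterable[str]) -> List[Tuple[str, str, int]]:
    """Return (hostname, ip, port) for every ZooKeeper connection attempt found."""
    found = []
    for line in lines:
        m = _OPEN_RE.search(line)
        if m:
            found.append((m.group("host"), m.group("ip"), int(m.group("port"))))
    return found


def points_at_localhost(lines: Iterable[str]) -> bool:
    """True if any connection attempt targeted a loopback address."""
    return any(
        host == "localhost" or ip.startswith("127.")
        for host, ip, _ in zk_endpoints(lines)
    )
```

For example, reading a container's `stderr` with `open(path).readlines()` and passing the list to `points_at_localhost` would flag the situation in this thread, where the executor tried `localhost/127.0.0.1:2181`.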

Created on 02-14-2018 02:07 AM - edited 08-17-2019 07:59 PM


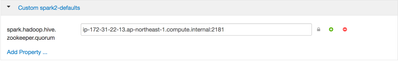

I found that the ZooKeeper server address used by the Spark worker/slave nodes can be configured by setting `spark.hadoop.hive.zookeeper.quorum` in `Custom spark2-defaults`.
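Spark copies any `spark.hadoop.<key>` property into the Hadoop configuration as `<key>`, so this setting reaches the driver and executors as `hive.zookeeper.quorum`. The value is a comma-separated list of `host:port` members. Below is a small Python sketch that builds the key/value pair to enter in Ambari, or the equivalent `spark-submit --conf` flag. The host names are hypothetical, and the default port 2181 is an assumption that matches the port in the log.

```python
from typing import Sequence, Tuple

# Spark strips the "spark.hadoop." prefix and puts the rest into the Hadoop
# Configuration, so executors see this as hive.zookeeper.quorum.
PROPERTY = "spark.hadoop.hive.zookeeper.quorum"


def quorum_setting(hosts: Sequence[str], port: int = 2181) -> Tuple[str, str]:
    """Return (key, value) for Ambari's Custom spark2-defaults section.

    Hosts that already carry an explicit ':port' are kept unchanged;
    the others get the default client port appended.
    """
    if not hosts:
        raise ValueError("at least one ZooKeeper host is required")
    members = [h if ":" in h else f"{h}:{port}" for h in hosts]
    return PROPERTY, ",".join(members)


def as_submit_flag(hosts: Sequence[str], port: int = 2181) -> str:
    """Same setting expressed as a spark-submit --conf argument."""
    key, value = quorum_setting(hosts, port)
    return f"--conf {key}={value}"
```

The `":" in h` check assumes plain host names or IPv4 addresses. An IPv6 literal would need different handling.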

Created 02-13-2018 07:40 PM


Similar issue:

The problem is that your ZooKeeper address points to localhost:2181. The worker/slave node does not have a local ZooKeeper server running, hence the "Connection refused". Check the link above for a detailed solution.
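One way to confirm this diagnosis from a worker node is to try a TCP connection to each candidate ZooKeeper endpoint. A refused connection to localhost:2181 corresponds to the `java.net.ConnectException` in the question. This is a minimal Python sketch with hypothetical function names. A successful connect only shows that something is listening on the port, not that it is a healthy ZooKeeper server.

```python
import socket
from typing import Dict


def probe(host: str, port: int = 2181, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timeouts and DNS failures all derive from OSError.
        return False


def probe_quorum(quorum: str, timeout: float = 3.0) -> Dict[str, bool]:
    """Probe every 'host[:port]' member of a comma-separated quorum string."""
    results = {}
    for member in filter(None, (m.strip() for m in quorum.split(","))):
        host, _, port = member.partition(":")
        results[member] = probe(host, int(port) if port else 2181, timeout)
    return results
```

On a worker without a local ZooKeeper, `probe_quorum("localhost:2181")` would be expected to report `False`, while probing the real ensemble members should succeed if the network path is open.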

Created 02-14-2018 01:51 AM


@Umair Khan - Thanks for your prompt reply and for trying to help. It would have been more helpful if you had explained how to change the ZooKeeper address, since it's not a bug. I already found how to
