ERROR SparkContext: Error initializing SparkContext. org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

Created 08-03-2022 03:24 AM

Hi,

We started seeing this issue after a Java upgrade. Please let us know whether the Java upgrade caused this error, or otherwise suggest a solution.

spark-shell

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

22/08/03 15:34:49 ERROR SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:89)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:63)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:164)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:500)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2498)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:934)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:925)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:925)

at org.apache.spark.repl.Main$.createSparkSession(Main.scala:103)

at $line3.$read$$iw$$iw.<init>(<console>:15)

at $line3.$read$$iw.<init>(<console>:43)

at $line3.$read.<init>(<console>:45)

at $line3.$read$.<init>(<console>:49)

at $line3.$read$.<clinit>(<console>)

at $line3.$eval$.$print$lzycompute(<console>:7)

at $line3.$eval$.$print(<console>:6)

at $line3.$eval.$print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:793)

at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1054)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$$anonfun$loadAndRunReq$1.apply(IMain.scala:645)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$$anonfun$loadAndRunReq$1.apply(IMain.scala:644)

at scala.reflect.internal.util.ScalaClassLoader$class.asContext(ScalaClassLoader.scala:31)

at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:19)

at scala.tools.nsc.interpreter.IMain$WrappedRequest.loadAndRunReq(IMain.scala:644)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:576)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:572)

at scala.tools.nsc.interpreter.IMain$$anonfun$quietRun$1.apply(IMain.scala:231)

at scala.tools.nsc.interpreter.IMain$$anonfun$quietRun$1.apply(IMain.scala:231)

at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:221)

at scala.tools.nsc.interpreter.IMain.quietRun(IMain.scala:231)

at org.apache.spark.repl.SparkILoop$$anonfun$initializeSpark$1$$anonfun$apply$mcV$sp$1.apply(SparkILoop.scala:88)

at org.apache.spark.repl.SparkILoop$$anonfun$initializeSpark$1$$anonfun$apply$mcV$sp$1.apply(SparkILoop.scala:88)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.repl.SparkILoop$$anonfun$initializeSpark$1.apply$mcV$sp(SparkILoop.scala:88)

at org.apache.spark.repl.SparkILoop$$anonfun$initializeSpark$1.apply(SparkILoop.scala:88)

at org.apache.spark.repl.SparkILoop$$anonfun$initializeSpark$1.apply(SparkILoop.scala:88)

at scala.tools.nsc.interpreter.ILoop.savingReplayStack(ILoop.scala:91)

at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:87)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1$$anonfun$org$apache$spark$repl$SparkILoop$$anonfun$$loopPostInit$1$1.apply$mcV$sp(SparkILoop.scala:170)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1$$anonfun$org$apache$spark$repl$SparkILoop$$anonfun$$loopPostInit$1$1.apply(SparkILoop.scala:158)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1$$anonfun$org$apache$spark$repl$SparkILoop$$anonfun$$loopPostInit$1$1.apply(SparkILoop.scala:158)

at scala.tools.nsc.interpreter.ILoop$$anonfun$mumly$1.apply(ILoop.scala:189)

at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:221)

at scala.tools.nsc.interpreter.ILoop.mumly(ILoop.scala:186)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.org$apache$spark$repl$SparkILoop$$anonfun$$loopPostInit$1(SparkILoop.scala:158)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1$$anonfun$startup$1$1.apply(SparkILoop.scala:226)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1$$anonfun$startup$1$1.apply(SparkILoop.scala:206)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.withSuppressedSettings$1(SparkILoop.scala:194)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.startup$1(SparkILoop.scala:206)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.apply$mcZ$sp(SparkILoop.scala:241)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.apply(SparkILoop.scala:141)

at org.apache.spark.repl.SparkILoop$$anonfun$process$1.apply(SparkILoop.scala:141)

at scala.reflect.internal.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:97)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:141)

at org.apache.spark.repl.Main$.doMain(Main.scala:76)

at org.apache.spark.repl.Main$.main(Main.scala:56)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:900)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:192)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:217)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:137)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

22/08/03 15:34:49 WARN YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

22/08/03 15:34:49 WARN MetricsSystem: Stopping a MetricsSystem that is not running

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:89)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:63)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:164)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:500)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2498)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:934)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:925)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:925)

at org.apache.spark.repl.Main$.createSparkSession(Main.scala:103)

... 62 elided

<console>:14: error: not found: value spark

import spark.implicits._

^

<console>:14: error: not found: value spark

import spark.sql

^

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.3.2.3.1.5.6189-1
      /_/

Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 1.8.0_342)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

Please help out.

Created 08-03-2022 10:53 PM

Hi @ssuja

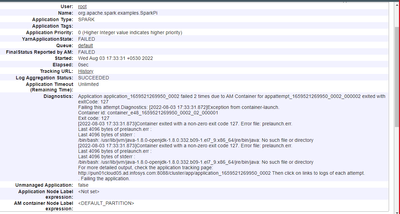

The screenshot above clearly shows that the Java path cannot be found. Please verify the Java path on every node and correct it where needed.
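A check like this could be scripted roughly as follows and run on each node. This is only a sketch: it assumes `JAVA_HOME` is set through a plain `export JAVA_HOME=/some/path` line, and the three config file locations in the loop are assumed HDP defaults that may differ on your cluster. The helper names `java_home_from` and `check_java_home` are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: report the JAVA_HOME each env file exports and whether
# a java executable actually exists under it on this node.

# Print the value of the last `export JAVA_HOME=...` line in a file,
# with any surrounding quotes stripped.
java_home_from() {
  sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=//p' "$1" \
    | tail -n 1 | tr -d "\"'"
}

# Print "OK <path>" if <path>/bin/java is executable, else "MISSING <path>".
check_java_home() {
  if [ -x "$1/bin/java" ]; then
    echo "OK $1"
  else
    echo "MISSING $1"
  fi
}

# Assumed config locations; adjust them to your installation.
for f in /etc/hadoop/conf/hadoop-env.sh \
         /etc/hadoop/conf/yarn-env.sh \
         /etc/spark2/conf/spark-env.sh; do
  if [ -f "$f" ]; then
    jh=$(java_home_from "$f")
    if [ -n "$jh" ]; then
      echo "$f: $(check_java_home "$jh")"
    fi
  fi
done
```

A `MISSING` line on any node would point to a config file that still references a Java directory that does not exist there. Values built from other variables (for example `${SOME_VAR}/jdk`) are printed unexpanded and would need to be checked by hand.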

Created 08-03-2022 03:56 AM

Hi @ssuja

Please try running the SparkPi example and download the application logs. Once you have downloaded them, check whether they contain any exceptions.

spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master yarn \
  --deploy-mode cluster \
  --num-executors 1 \
  --driver-memory 512m \
  --executor-memory 512m \
  --executor-cores 1 \
  /usr/hdp/current/spark2-client/examples/jars/spark-examples_*.jar 10
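To download the application logs and scan them for exceptions, something along these lines may help. It is a sketch under assumptions: the `yarn` CLI is on the PATH, log aggregation is enabled, and the `spark-submit` output was saved to a file that is passed as the first argument. The helper names `extract_app_id` and `find_exceptions` are illustrative and not part of Spark or YARN.

```shell
#!/usr/bin/env bash
# Sketch: pull the YARN application ID out of saved spark-submit output,
# fetch the aggregated logs, and list lines that look like failures.

# Print the first YARN application ID found on stdin.
extract_app_id() {
  grep -oE 'application_[0-9]+_[0-9]+' | head -n 1
}

# Print lines (prefixed with line numbers) that mention an exception or error.
find_exceptions() {
  grep -nE 'Exception|ERROR' "$1"
}

# Only contact the cluster when the yarn CLI exists and a saved
# spark-submit output file was given as the first argument.
if command -v yarn >/dev/null 2>&1 && [ -n "${1:-}" ] && [ -f "$1" ]; then
  app_id=$(extract_app_id < "$1")
  if [ -n "$app_id" ]; then
    yarn logs -applicationId "$app_id" > "${app_id}.log"
    find_exceptions "${app_id}.log"
  fi
fi
```

One way to produce the input file is to append `2>&1 | tee submit.out` to the `spark-submit` command and then pass `submit.out` to this script. The script name and `submit.out` are placeholders.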

Created 08-03-2022 05:25 AM

Hi @RangaReddy

Thank you for the reply.

We have a four-node HDP cluster, and the Java version was recently upgraded on only three of the nodes.

On those three nodes, we manually updated the Java path in files such as spark-env.sh, yarn-env.sh, and hadoop-env.sh.

We have not updated the Java path on the one node where Java was not upgraded.

We ran the command mentioned above. Please find attached a screenshot of the application logs.

Kindly help us.

Created 08-08-2022 02:39 AM

@ssuja, has the reply helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
