Ensure RunMongoAggregation runs after PutMongo

Labels: Apache NiFi

Created 08-27-2018 12:15 PM


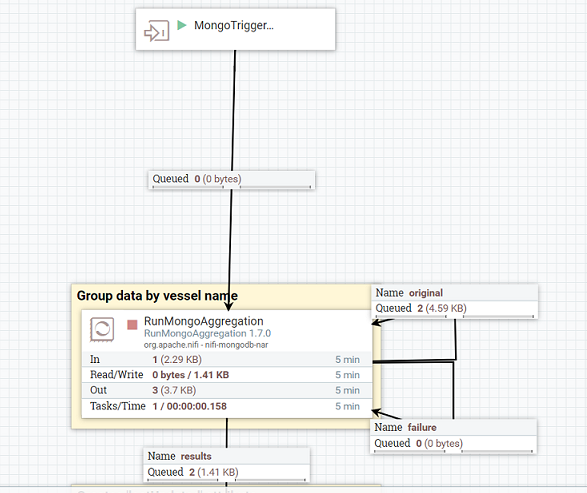

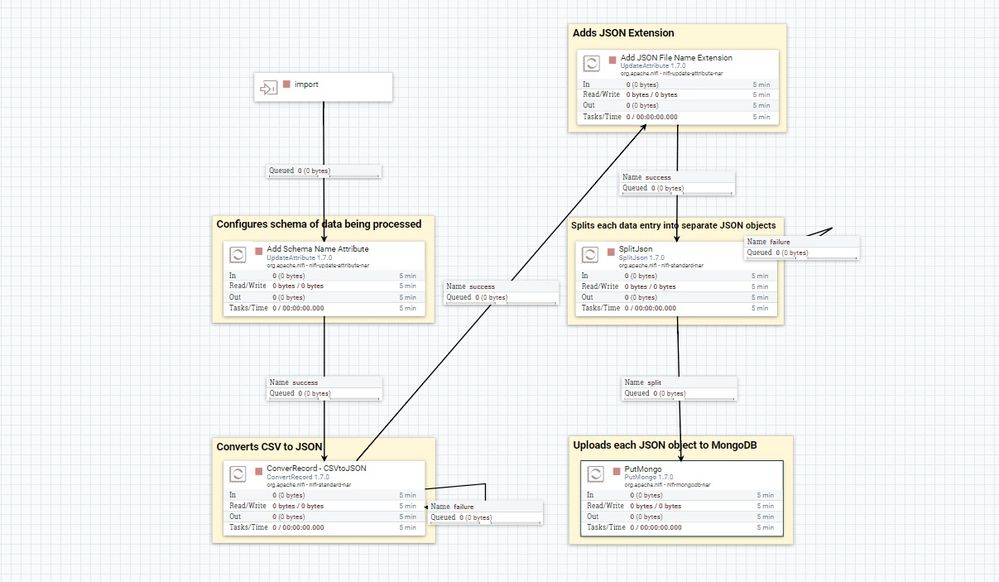

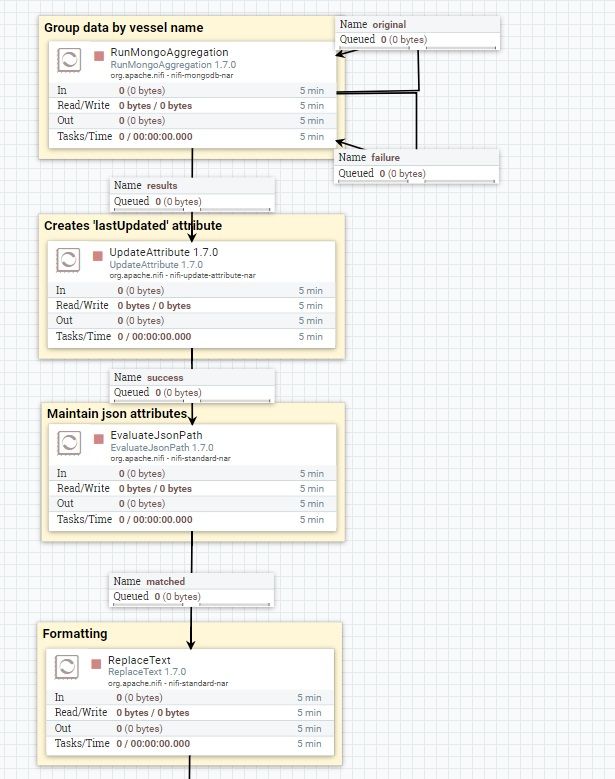

For my NiFi data flow, I am taking CSV files, converting them into JSON, posting those JSON files into a database (PutMongo), and then running an aggregation function (RunMongoAggregation). The attached images show that data flow. My question is: how can I construct my flow so that RunMongoAggregation is guaranteed to run after PutMongo? I've looked into implementing a Wait/Notify pattern but got really lost in the implementation. Ideally, I would like RunMongoAggregation to be triggered whenever new data gets into the PutMongo processor.

If anyone would like to test the flow, I have attached the template file. It was tested with AIS ship data (which can be found online).

Created on 08-27-2018 12:42 PM - edited 08-17-2019 05:33 PM


Instead of the PutMongo processor, you can use the PutMongoRecord processor, so you don't need to split the JSON objects.

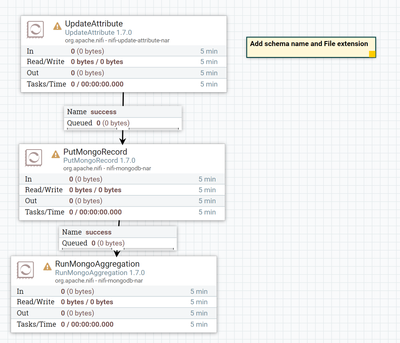

With the PutMongoRecord processor, your flow looks something like the screenshot shown here.

Configure the PutMongoRecord processor's Record Reader controller service as CSVReader; the processor then reads the CSV records and writes them to MongoDB as JSON documents.

You can then use the RunMongoAggregation processor to run the aggregation.
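To make the record-based idea concrete, here is a minimal Python sketch of what reading a CSV as records amounts to conceptually: one input file becomes a list of documents in a single pass, with no per-row splitting step. This is not NiFi code; the function name `csv_to_documents` and the sample columns (`mmsi`, `lat`, `lon`) are assumptions made up for illustration.

```python
import csv
import io
import json


def csv_to_documents(csv_text):
    """Parse CSV text (header row first) into a list of dicts, one per row.

    Hypothetical helper: it only illustrates the record-oriented idea of
    turning one CSV file into many documents without splitting the file.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [dict(row) for row in reader]


# Made-up sample data; real AIS files will have different columns.
sample = "mmsi,lat,lon\n111,10.5,20.5\n222,11.0,21.0\n"
docs = csv_to_documents(sample)

# Each dict corresponds to one document that would be inserted.
print(json.dumps(docs, indent=2))
```

Note that every value stays a string here, because this sketch applies no schema.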

(or)

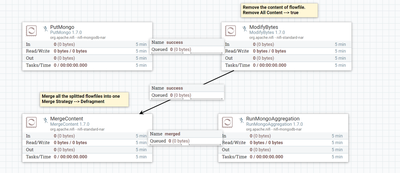

With your existing flow:

Use a MergeContent processor after the PutMongo processor and set its Merge Strategy to Defragment; it then merges all the split JSON objects back into one file.

Then use the merged relationship from the MergeContent processor to trigger RunMongoAggregation.

This way, we wait until all the fragments have been merged into one file and only then trigger the RunMongoAggregation processor.
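The waiting behaviour described above can be sketched in plain Python. This is only a simulation of the idea, not NiFi's implementation: the class `Defragmenter` and its `offer` method are hypothetical, while the attribute names `fragment.identifier`, `fragment.index`, and `fragment.count` are the ones NiFi's split processors write on each fragment.

```python
from collections import defaultdict


class Defragmenter:
    """Hypothetical stand-in for the Defragment merge strategy.

    Fragments are held per fragment.identifier until fragment.count of
    them have arrived; only then is the merged result released.
    """

    def __init__(self):
        self._bins = defaultdict(dict)

    def offer(self, attrs, content):
        frag_id = attrs["fragment.identifier"]
        count = int(attrs["fragment.count"])
        index = int(attrs["fragment.index"])

        bin_ = self._bins[frag_id]
        bin_[index] = content
        if len(bin_) < count:
            return None  # still waiting for more fragments

        # All fragments are present: release them in index order.
        del self._bins[frag_id]
        return [bin_[i] for i in sorted(bin_)]


merger = Defragmenter()
parts = [
    ({"fragment.identifier": "abc", "fragment.index": "2", "fragment.count": "3"}, '{"n": 3}'),
    ({"fragment.identifier": "abc", "fragment.index": "0", "fragment.count": "3"}, '{"n": 1}'),
    ({"fragment.identifier": "abc", "fragment.index": "1", "fragment.count": "3"}, '{"n": 2}'),
]
results = [merger.offer(attrs, content) for attrs, content in parts]
print(results)
```

Only the last call returns a merged list, which corresponds to the single flow file that would go on to trigger RunMongoAggregation.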

Flow:

Refer to this link for MergeContent configurations.


If the answer helped resolve your issue, click the Accept button below to accept it. That helps community users find solutions quickly for these kinds of issues.


Created 08-28-2018 03:17 PM


Thank you, Shu, for your quick response. I followed your instructions regarding the PutMongoRecord processor. That helped simplify my flow a bit.

If RunMongoAggregation works as I think it does, then a FlowFile passing through the processor will "trigger" it and then be routed to original, while the aggregation runs on the database and its output goes to "results" (see image below). Is that your thought process? Also, I probably have to clear out the database I'm aggregating on between data sets to prevent duplicates, don't I? @Shu