Support Questions

Error in installing services after adding a node in HDP cluster

Labels: Apache Ambari, Apache Hadoop

Created 04-12-2017 03:30 PM

Hello Team,

We have a development HDP 2.5.3 cluster of 4 nodes, and we are adding another node through Ambari. The node is added to the cluster successfully, but after that we are unable to install any services on the newly added node. Below are the Ambari logs from the failure while adding a DataNode on the new node.

Looking for your guidance on how to fix this issue.

stderr: /var/lib/ambari-agent/data/errors-2053.txt

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY/scripts/hook.py", line 35, in <module>
    BeforeAnyHook().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY/scripts/hook.py", line 26, in hook
    import params
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY/scripts/params.py", line 191, in <module>
    hadoop_conf_dir = conf_select.get_hadoop_conf_dir(force_latest_on_upgrade=True)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/conf_select.py", line 477, in get_hadoop_conf_dir
    select(stack_name, "hadoop", version)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/conf_select.py", line 315, in select
    shell.checked_call(_get_cmd("set-conf-dir", package, version), logoutput=False, quiet=False, sudo=True)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call
    raise Fail(err_msg)
resource_management.core.exceptions.Fail: Execution of 'ambari-python-wrap /usr/bin/conf-select set-conf-dir --package hadoop --stack-version 2.5.3.0-37 --conf-version 0' returned 1. 2.5.3.0-37 Incorrect stack version
Error: Error: Unable to run the custom hook script ['/usr/bin/python', '/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY/scripts/hook.py', 'ANY', '/var/lib/ambari-agent/data/command-2053.json', '/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY', '/var/lib/ambari-agent/data/structured-out-2053.json', 'INFO', '/var/lib/ambari-agent/tmp']

stdout: /var/lib/ambari-agent/data/output-2053.txt

2017-04-12 19:51:03,007 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.5.3.0-37
2017-04-12 19:51:03,007 - Checking if need to create versioned conf dir /etc/hadoop/2.5.3.0-37/0
2017-04-12 19:51:03,007 - call[('ambari-python-wrap', '/usr/bin/conf-select', 'create-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}
2017-04-12 19:51:03,030 - call returned (1, '2.5.3.0-37 Incorrect stack version', '')
2017-04-12 19:51:03,031 - checked_call[('ambari-python-wrap', '/usr/bin/conf-select', 'set-conf-dir', '--package', 'hadoop', '--stack-version', '2.5.3.0-37', '--conf-version', '0')] {'logoutput': False, 'sudo': True, 'quiet': False}
Error: Error: Unable to run the custom hook script ['/usr/bin/python', '/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY/scripts/hook.py', 'ANY', '/var/lib/ambari-agent/data/command-2053.json', '/var/lib/ambari-agent/cache/stacks/HDP/2.0.6/hooks/before-ANY', '/var/lib/ambari-agent/data/structured-out-2053.json', 'INFO', '/var/lib/ambari-agent/tmp']
Command failed after 1 tries
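
When scanning several errors-*.txt files on a host, it can help to pull the package and stack version out of the `Fail` line automatically. The sketch below is a hypothetical helper (`parse_conf_select_failure` is not part of Ambari); its regular expression assumes the exact message format shown in the stderr log above and may need adjusting for other Ambari versions.

```python
import re

# Assumed format, copied from the Fail line in this thread:
# "... --package hadoop --stack-version 2.5.3.0-37 --conf-version 0' returned 1. <reason>"
FAIL_RE = re.compile(
    r"--package (?P<package>\S+) --stack-version (?P<version>\S+)"
    r" --conf-version (?P<conf>\S+)' returned (?P<code>\d+)\. (?P<reason>.*)"
)


def parse_conf_select_failure(line):
    """Return package, version, conf, exit code and reason, or None if no match."""
    match = FAIL_RE.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["code"] = int(fields["code"])  # exit status of conf-select
    return fields
```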

Created 04-13-2017 08:09 AM

Yes, we had 2.3.4 installed on this node. However, we took the following steps and it is working fine now.

1) Deleted the 2.3.4 and current folders under /usr/hdp

2) Restarted the ambari-agent

3) Resolved the issues found in the pre-run host checks (such as deleting old RPMs, folders, and users)

4) Added the new host. However, it required the python-argparse RPM.

5) Added DataNodes, NodeManagers, and other services, and they are working fine now.
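
Step 1 can be previewed before anything is deleted. The sketch below is a hypothetical dry-run helper (`plan_stale_hdp_cleanup` is not an Ambari or HDP tool): it assumes that every entry under /usr/hdp other than the target version directory, including `current`, is a leftover from the old installation, and it only returns the paths. Review that list, and the packages that own those files, before removing anything, then restart the agent as in step 2 (for example with `ambari-agent restart`).

```python
import os


def plan_stale_hdp_cleanup(target_version, hdp_root="/usr/hdp"):
    """Dry run: return paths under hdp_root other than target_version.

    Hypothetical helper. Assumes anything besides the target version
    directory (an old 2.3.4 tree, the 'current' symlink folder) is a
    leftover from the previous install. Nothing is deleted here.
    """
    if not os.path.isdir(hdp_root):
        return []
    return sorted(
        os.path.join(hdp_root, name)
        for name in os.listdir(hdp_root)
        if name != target_version
    )


if __name__ == "__main__":
    for path in plan_stale_hdp_cleanup("2.5.3.0-37"):
        print("would remove: %s" % path)
```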

Created 04-12-2017 03:54 PM

To help you, we'd need some more information:

- Which version of HDP are you currently running? Is it 2.5.3.0-37?

- Can you post the entire output from the install command?

- What are the contents of /usr/hdp on the host that is having trouble?

Created 04-13-2017 06:20 AM

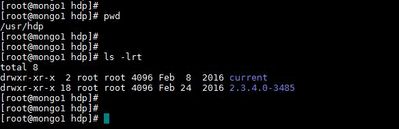

Yes, we are using HDP 2.5.3.0-37. Earlier it was 2.3.4, but we uninstalled that. I have provided a screenshot of the /usr/hdp directory on the node. What should we do to address the issue?

Created 04-12-2017 05:03 PM

It looks like /usr/hdp/2.5.3.0-37 doesn't exist on the host. Please check the list of directories under /usr/hdp.

The stderr log shows that the conf-select command failed to set the config directory to /usr/hdp/2.5.3.0-37/. This usually happens when the identified version directory doesn't exist under /usr/hdp; in your case, that directory is /usr/hdp/2.5.3.0-37.
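
A minimal sketch of the check described in this reply, assuming (as the reply suggests) that conf-select rejects a stack version whose directory is missing under /usr/hdp. The helper names `stack_version_dir_exists` and `list_installed_versions` are hypothetical; the code only inspects the filesystem and does not reproduce conf-select's own logic.

```python
import os


def stack_version_dir_exists(version, hdp_root="/usr/hdp"):
    """Return True if <hdp_root>/<version> exists and is a directory.

    Assumption: conf-select reports "Incorrect stack version" when this
    directory is missing; this helper only checks the filesystem.
    """
    return os.path.isdir(os.path.join(hdp_root, version))


def list_installed_versions(hdp_root="/usr/hdp"):
    """List subdirectories of hdp_root, skipping the 'current' link folder."""
    if not os.path.isdir(hdp_root):
        return []
    return sorted(
        name
        for name in os.listdir(hdp_root)
        if name != "current" and os.path.isdir(os.path.join(hdp_root, name))
    )


if __name__ == "__main__":
    wanted = "2.5.3.0-37"  # stack version from the failing command
    print("Version directories under /usr/hdp: %s" % list_installed_versions())
    if not stack_version_dir_exists(wanted):
        print("/usr/hdp/%s is missing; expect conf-select to fail" % wanted)
```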

Created on 04-13-2017 06:19 AM - edited 08-17-2019 11:15 PM

Yes, the directory 2.5.3.0-37 doesn't exist under /usr/hdp. What should we do, then? Create the directory manually? I have attached the screenshot.

Created 04-13-2017 07:25 AM

Hi guys, can you please help me resolve this? We are stuck on this issue and looking for guidance.

Created 04-13-2017 07:49 AM

I see you already have another HDP installation on the host you added. A newly added host would normally not have a /usr/hdp directory at all. Was this host part of another cluster before you added it to this one?

And which symlinks do you see in /usr/hdp/current?
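
To answer the /usr/hdp/current question on a host, the symlinks can be listed together with their targets. This is a sketch with hypothetical helper names; it assumes each entry in `current` is a symlink whose target path contains the version directory name (for example a link into /usr/hdp/<version>/...), which is how a stale link left behind by an older install would show up.

```python
import os


def current_link_targets(hdp_root="/usr/hdp"):
    """Map each symlink in <hdp_root>/current to its (unresolved) target."""
    current_dir = os.path.join(hdp_root, "current")
    targets = {}
    if not os.path.isdir(current_dir):
        return targets
    for name in sorted(os.listdir(current_dir)):
        path = os.path.join(current_dir, name)
        if os.path.islink(path):
            targets[name] = os.readlink(path)
    return targets


def links_not_on_version(version, hdp_root="/usr/hdp"):
    """Names of links whose target path has no component equal to version.

    Assumption: HDP links point into /usr/hdp/<version>/..., so a link into
    an old version directory (e.g. a leftover 2.3.4 tree) is reported.
    """
    return [
        name
        for name, target in sorted(current_link_targets(hdp_root).items())
        if version not in target.split(os.sep)
    ]


if __name__ == "__main__":
    for name, target in sorted(current_link_targets().items()):
        print("%s -> %s" % (name, target))
```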

Created 04-13-2017 08:11 AM

I have also accepted the best answer above, since that is how I resolved the issue. Thanks for your guidance, guys.

Created 04-13-2017 12:27 PM

Yes, this node was part of one of our old HDP installations. However, we have uninstalled that now and moved to 2.5.3, a more stable release. We have taken the following steps:

1) Deleted the old 2.3.4 and current folders under /usr/hdp

2) Restarted the Ambari agent

3) Added the new host again and resolved the host check issues (pre-existing old 2.3.4 packages, users, and folders, which we removed)

4) The node was added successfully, but we had to install a new RPM, python-argparse

5) Added DataNode, NodeManager, and clients on the new node successfully

Through Ambari, I can now see this node added successfully with the required services.