Support Questions

Error running Pyspark Interpreter after Installing Miniconda

- Labels: Apache Zeppelin

Created on 10-12-2017 02:00 AM - edited 08-17-2019 07:45 PM

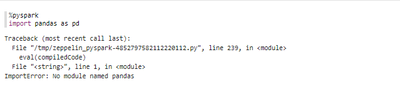

Hi, I am having trouble when running Pyspark Interpreter. I edited zeppelin.pyspark.python variable with /usr/lib/miniconda2/bin/python. Besides, I also can't execute pandas.

Below is the error in Zeppelin UI

Created 10-12-2017 05:18 AM

Do you have pandas installed? If not, try installing it with:

conda install pandas

Other useful libraries you may want to install are matplotlib and numpy.

Thanks,

Aditya
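To see whether pandas is visible to the interpreter that is actually running, a small check like the one below may help. This is only a sketch: `describe_environment` is a hypothetical helper name, not part of Zeppelin or Spark, and it sticks to the standard library so it should run under both Python 2.7 and Python 3.

```python
from __future__ import print_function

import importlib
import sys


def describe_environment(module_names=("pandas", "numpy", "matplotlib")):
    """Return the running interpreter path and a version for each module.

    A module that cannot be imported maps to None.
    """
    versions = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
        else:
            # Not every module exposes __version__, so fall back to a marker.
            versions[name] = getattr(module, "__version__", "unknown")
    return sys.executable, versions


if __name__ == "__main__":
    executable, versions = describe_environment()
    print("interpreter:", executable)
    for name, version in sorted(versions.items()):
        print(name, "->", version if version is not None else "NOT IMPORTABLE")
```

Running the same snippet in the PySpark CLI and in a Zeppelin paragraph and comparing the interpreter paths should show whether the two are using the same Python installation.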

Created 10-13-2017 03:40 AM

Hi Aditya, I have installed pandas. I can import pandas in the PySpark CLI, but not in Zeppelin.

The pyspark command also reports these errors on startup:

    error: [Errno 111] Connection refused
    ERROR:py4j.java_gateway:An error occurred while trying to connect to the Java server
    Traceback (most recent call last):
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 690, in start
        self.socket.connect((self.address, self.port))
      File "/usr/lib64/python2.7/socket.py", line 224, in meth
        return getattr(self._sock,name)(*args)
    error: [Errno 111] Connection refused
    ERROR:py4j.java_gateway:An error occurred while trying to connect to the Java server
    Traceback (most recent call last):
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 690, in start
        self.socket.connect((self.address, self.port))
      File "/usr/lib64/python2.7/socket.py", line 224, in meth
        return getattr(self._sock,name)(*args)
    error: [Errno 111] Connection refused
    Traceback (most recent call last):
      File "/usr/hdp/2.5.3.0-37/spark/python/pyspark/shell.py", line 43, in <module>
        sc = SparkContext(pyFiles=add_files)
      File "/usr/hdp/2.5.3.0-37/spark/python/pyspark/context.py", line 115, in __init__
        conf, jsc, profiler_cls)
      File "/usr/hdp/2.5.3.0-37/spark/python/pyspark/context.py", line 172, in _do_init
        self._jsc = jsc or self._initialize_context(self._conf._jconf)
      File "/usr/hdp/2.5.3.0-37/spark/python/pyspark/context.py", line 235, in _initialize_context
        return self._jvm.JavaSparkContext(jconf)
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 1062, in __call__
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 631, in send_command
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 624, in send_command
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 579, in _get_connection
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 585, in _create_connection
      File "/usr/hdp/2.5.3.0-37/spark/python/lib/py4j-0.9-src.zip/py4j/java_gateway.py", line 697, in start
    py4j.protocol.Py4JNetworkError: An error occurred while trying to connect to the Java server
    >>> import pandas as pd
    >>>
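The `[Errno 111] Connection refused` lines in the output above mean the Python side opened a TCP connection and nothing was listening on the other end. The same kind of failure can be reproduced outside Spark with a plain socket probe. This is a rough sketch only: `can_connect` is a hypothetical helper, and the host and port in the usage lines are placeholders, because the output does not show which port py4j was trying to reach.

```python
from __future__ import print_function

import socket


def can_connect(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except (socket.error, socket.timeout):
        # A refused connection (errno 111 on Linux) or a timeout lands here.
        return False
    sock.close()
    return True


if __name__ == "__main__":
    # Placeholder values: substitute the address and port you want to probe.
    print(can_connect("127.0.0.1", 9))
```

A `False` result for the gateway address would suggest the Java process never started or has already exited, so its own logs are the next place to look.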

Created 10-13-2017 04:37 AM

Try setting the PYSPARK_DRIVER_PYTHON environment variable so that Spark uses Anaconda/Miniconda.

From the logs, it looks like Spark is using the bundled pyspark.

Thanks,

Aditya

Created 10-13-2017 05:39 AM

Should I declare the variable like this?

export PYSPARK_DRIVER_PYTHON=miniconda2

Created 10-13-2017 06:14 AM

Try setting the variable below instead of PYSPARK_DRIVER_PYTHON:

export PYSPARK_PYTHON=<anaconda python path>

For example: export PYSPARK_PYTHON=/home/ambari/anaconda3/bin/python
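Because PYSPARK_PYTHON has to be the full path to a Python executable rather than a directory or environment name, it can be worth validating the path before exporting it. The sketch below is one way to do that from Python. `pyspark_env` is a hypothetical helper, not a Spark or Zeppelin API, and it only builds an environment dictionary without launching anything.

```python
from __future__ import print_function

import os
import sys


def pyspark_env(python_path, base_env=None):
    """Return a copy of the environment with PYSPARK_PYTHON set.

    Raises ValueError if python_path is not an executable file.
    """
    if not (os.path.isfile(python_path) and os.access(python_path, os.X_OK)):
        raise ValueError("not an executable file: %s" % python_path)
    env = dict(os.environ if base_env is None else base_env)
    env["PYSPARK_PYTHON"] = python_path
    return env


if __name__ == "__main__":
    # The current interpreter stands in for the Anaconda/Miniconda path here.
    print(pyspark_env(sys.executable)["PYSPARK_PYTHON"])
```

The returned dictionary could then be passed as the `env` argument of `subprocess.Popen` when starting pyspark from a script. In Zeppelin the interpreter setting zeppelin.pyspark.python mentioned in the question plays a similar role, so it seems sensible to point both at the same executable.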

Created 10-13-2017 04:39 AM

Try setting the PYSPARK_DRIVER_PYTHON environment variable so that Spark uses Anaconda/Miniconda.

From the logs, it looks like Spark is using the bundled pyspark. Check this link for more info:

https://spark.apache.org/docs/1.6.2/programming-guide.html#linking-with-spark

Thanks,

Aditya

Created on 10-13-2017 06:59 AM - edited 08-17-2019 07:45 PM

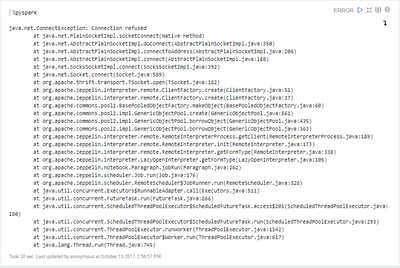

Aditya, I got this error at Zeppelin UI