Exception (NoSuchMethodError) trying to run ML examples in Zeppelin

- Labels: Apache Hive, Apache Spark, Apache Zeppelin

Created on 05-16-2018 07:29 AM - edited 08-18-2019 12:16 AM

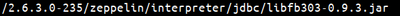

Hi, I'm getting a strange exception when I try to run the ML examples in Zeppelin. Here is the exception:

    import org.apache.spark.ml.linalg.Vectors
    java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$instantiateSessionState(SparkSession.scala:1074)
        at org.apache.spark.sql.SparkSession$anonfun$sessionState$2.apply(SparkSession.scala:141)
        at org.apache.spark.sql.SparkSession$anonfun$sessionState$2.apply(SparkSession.scala:140)
        at scala.Option.getOrElse(Option.scala:121)
        at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:140)
        at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:137)
        at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:65)
        at org.apache.spark.sql.SparkSession.createDataFrame(SparkSession.scala:311)
        ... 46 elided
    Caused by: org.apache.spark.sql.AnalysisException: java.lang.NoSuchMethodError: com.facebook.fb303.FacebookService$Client.sendBaseOneway(Ljava/lang/String;Lorg/apache/thrift/TBase;)V;
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:106)
        at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:193)
        at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:105)
        at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:93)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:39)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog$lzycompute(HiveSessionStateBuilder.scala:54)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:52)
        at org.apache.spark.sql.hive.HiveSessionStateBuilder.catalog(HiveSessionStateBuilder.scala:35)
        at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:289)
        at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$instantiateSessionState(SparkSession.scala:1071)
        ... 53 more
    Caused by: java.lang.NoSuchMethodError: com.facebook.fb303.FacebookService$Client.sendBaseOneway(Ljava/lang/String;Lorg/apache/thrift/TBase;)V
        at com.facebook.fb303.FacebookService$Client.send_shutdown(FacebookService.java:436)
        at com.facebook.fb303.FacebookService$Client.shutdown(FacebookService.java:430)
        at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.close(HiveMetaStoreClient.java:492)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:156)
        at com.sun.proxy.$Proxy67.close(Unknown Source)
        at org.apache.hadoop.hive.ql.metadata.Hive.close(Hive.java:291)
        at org.apache.hadoop.hive.ql.metadata.Hive.access$000(Hive.java:137)
        at org.apache.hadoop.hive.ql.metadata.Hive$1.remove(Hive.java:157)
        at org.apache.hadoop.hive.ql.metadata.Hive.closeCurrent(Hive.java:261)
        at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:231)
        at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:208)
        at org.apache.hadoop.hive.ql.session.SessionState.setAuthorizerV2Config(SessionState.java:765)
        at org.apache.hadoop.hive.ql.session.SessionState.setupAuth(SessionState.java:736)
        at org.apache.hadoop.hive.ql.session.SessionState.getAuthenticator(SessionState.java:1391)
        at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:211)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
        at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:268)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:362)
        at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:266)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:66)
        at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:65)
        at org.apache.spark.sql.hive.HiveExternalCatalog$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:194)
        at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:97)
        ... 62 more

The installation is pretty standard.

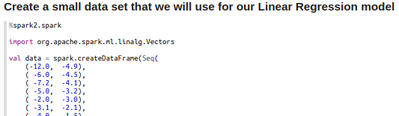

spark.version= 2.2.0.2.6.3.0-235

I'm using useHiveContext=true. Below is my full Spark interpreter configuration.

java.lang.NoSuchMethodError: com.facebook.fb303.FacebookService$Client.sendBaseOneway(Ljava/lang/String;Lorg/apache/thrift/TBase;)V;

This looks like a library configuration error, but I just installed Spark using Ambari. Any idea how to solve this issue?

Created 05-16-2018 09:33 PM

This looks like an issue caused by an extraneous reference to phoenix-server.jar in the YARN classpath. There's an article on that issue affecting Oozie, and I suspect the resolution would be similar (either removing the reference or moving it to the end of the classpath).

It's also possible that there's an older version of libfb303 floating around in the path somewhere. Hive should be using 0.9.3 as of the HDP 2.6.4.x stack.
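One way to look for a stale copy is to ask the JVM which jar each class was loaded from. Below is a minimal sketch for a `%spark` paragraph. The helper names `jarOf` and `hasMethod` are made up for illustration, and the assumption that `sendBaseOneway` is inherited from `org.apache.thrift.TServiceClient` is mine, not something stated in this thread. Note also that the result reflects the classloader of the paragraph, which may differ from the isolated loader Spark uses for its Hive client, so treat it as a hint rather than proof.

```scala
import scala.util.Try

// Hypothetical helper: the jar (or directory) a class was loaded from,
// or None if the class is missing or the JVM reports no code source.
def jarOf(className: String): Option[String] = {
  val cls = Try(Class.forName(className)).toOption
  for {
    c   <- cls
    pd  <- Option(c.getProtectionDomain)
    cs  <- Option(pd.getCodeSource)
    url <- Option(cs.getLocation)
  } yield url.toString
}

// Hypothetical helper: true if the class or one of its superclasses declares
// a method with this name. getDeclaredMethods is used so that non-public
// methods are seen as well.
def hasMethod(className: String, method: String): Boolean =
  Try(Class.forName(className)).toOption.exists { c =>
    val lineage = Iterator.iterate[Class[_]](c)(_.getSuperclass).takeWhile(_ != null)
    lineage.exists(_.getDeclaredMethods.exists(_.getName == method))
  }

// Class names taken from the stack trace, plus the assumed Thrift base class.
val suspects = Seq(
  "com.facebook.fb303.FacebookService$Client",
  "org.apache.thrift.TServiceClient"
)

suspects.foreach { name =>
  println(s"$name -> ${jarOf(name).getOrElse("not found, or no code source reported")}")
  println(s"  sendBaseOneway visible: ${hasMethod(name, "sendBaseOneway")}")
}
```

If the two classes resolve to jars with different version numbers, or the method is reported as not visible, that would be consistent with a version mismatch on the classpath.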

Created on 05-17-2018 03:22 AM - edited 08-18-2019 12:16 AM

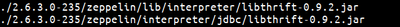

Hi @William Brooks.

I'm using HDP 2.6.3.

Thanks for your suggestion; it helped me make progress. Inside Zeppelin I found a version of libthrift (0.9.2) that is not compatible with libfb303 (0.9.3). In your opinion, what is the best way to fix it?

Created 05-17-2018 03:32 AM

I ended up adding:

org.apache.thrift:libfb303:jar:0.9.3

as a dependency of the Spark interpreter in Zeppelin (this pulled in the right version of Thrift as a transitive dependency). Maybe not the cleanest solution, but it works. 🙂

Created 05-17-2018 01:26 PM

Glad it helped find the solution!

Created 12-29-2019 09:39 PM

I am unable to find libfb303 in Zeppelin 0.8.2. Can you please help?