Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: ExecuteSQL - UnresolvedUnionException

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

ExecuteSQL - UnresolvedUnionException

- Labels:

-

Apache NiFi

-

Cloudera DataFlow (CDF)

Created on 12-21-2015 05:07 PM - edited 08-19-2019 05:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I am getting below Exception while using ExecuteSQL processor to get data from mysql, looks like auto increment field is creating issue. anyone faced this issue or am I missing something?

11:46:44 ESTERRORe7cc632a-4d8f-4362-b5e0-68faefd01c23 ExecuteSQL[id=e7cc632a-4d8f-4362-b5e0-68faefd01c23] ExecuteSQL[id=e7cc632a-4d8f-4362-b5e0-68faefd01c23] failed to process session due to org.apache.avro.file.DataFileWriter$AppendWriteException: org.apache.avro.UnresolvedUnionException: Not in union ["null","int"]: 1: org.apache.avro.file.DataFileWriter$AppendWriteException: org.apache.avro.UnresolvedUnionException: Not in union ["null","int"]: 1 11:46:44 ESTWARNINGe7cc632a-4d8f-4362-b5e0-68faefd01c23 ExecuteSQL[id=e7cc632a-4d8f-4362-b5e0-68faefd01c23] Processor Administratively Yielded for 1 sec due to processing failure

It used to work in 0.3.x version, when tested in 0.4.0 I am getting above error. 😞

Select * from SALARIES; --> gives error

SELECT ID from SALARIES; --> gives error

Select GENDER,AGE,SALARY,ZIPCODE from SALARIES; --> works fine

Thanks In Advance,

Jobin George

Created 12-21-2015 06:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Jobin George ,

I was trying to recreate this error... I have NiFi 0.4.0, MySQL 5.6.26, mysql-connector-java-5.1.38-bin.jar.

Created the same table as you and inserted three rows:

CREATE TABLE SALARIES ( ID int NOT NULL AUTO_INCREMENT, ZIPCODE int, SALARY double, AGE int, GENDER varchar(255), PRIMARY KEY (ID) );

INSERT INTO SALARIES (ZIPCODE, SALARY, AGE, GENDER) VALUES (12345, 100, 30, 'MALE');

INSERT INTO SALARIES (ZIPCODE, SALARY, AGE, GENDER) VALUES (12345, 200, 31, 'MALE');

INSERT INTO SALARIES (SALARY, AGE, GENDER) VALUES (10, 20, 'MALE');

In NiFi I have ExecuteSQL with "select * from salaries;" -> ConvertAvroToJson -> LogAttribute and I see this in the logs:

[{"ID": 1, "ZIPCODE": 12345, "SALARY": 100.0, "AGE": 30, "GENDER": "MALE"},{"ID": 2, "ZIPCODE": 12345, "SALARY": 200.0, "AGE": 31, "GENDER": "MALE"},{"ID": 3, "ZIPCODE": null, "SALARY": 10.0, "AGE": 20, "GENDER": "MALE"}]

If I change the query to "select id from salaries;" I see:

[{"ID": 1},{"ID": 2},{"ID": 3}]

Is there anything that jumps out at you as being different between your setup and mine? different versions of mysql? something specific in your data?

Created 12-21-2015 06:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Jobin George ,

I was trying to recreate this error... I have NiFi 0.4.0, MySQL 5.6.26, mysql-connector-java-5.1.38-bin.jar.

Created the same table as you and inserted three rows:

CREATE TABLE SALARIES ( ID int NOT NULL AUTO_INCREMENT, ZIPCODE int, SALARY double, AGE int, GENDER varchar(255), PRIMARY KEY (ID) );

INSERT INTO SALARIES (ZIPCODE, SALARY, AGE, GENDER) VALUES (12345, 100, 30, 'MALE');

INSERT INTO SALARIES (ZIPCODE, SALARY, AGE, GENDER) VALUES (12345, 200, 31, 'MALE');

INSERT INTO SALARIES (SALARY, AGE, GENDER) VALUES (10, 20, 'MALE');

In NiFi I have ExecuteSQL with "select * from salaries;" -> ConvertAvroToJson -> LogAttribute and I see this in the logs:

[{"ID": 1, "ZIPCODE": 12345, "SALARY": 100.0, "AGE": 30, "GENDER": "MALE"},{"ID": 2, "ZIPCODE": 12345, "SALARY": 200.0, "AGE": 31, "GENDER": "MALE"},{"ID": 3, "ZIPCODE": null, "SALARY": 10.0, "AGE": 20, "GENDER": "MALE"}]

If I change the query to "select id from salaries;" I see:

[{"ID": 1},{"ID": 2},{"ID": 3}]

Is there anything that jumps out at you as being different between your setup and mine? different versions of mysql? something specific in your data?

Created 12-21-2015 07:26 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @bbende,

Below are the DDLs i used, Not sure if that could be an issue. I had the same setup for HDF lab i created to push data from mysql to phoenix in 0.3.0 which works perfectly.

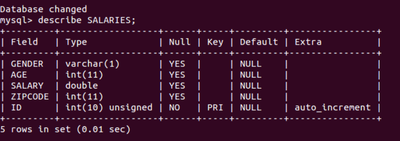

create table SALARIES (GENDER varchar(1), AGE int, SALARY double, ZIPCODE int); load data infile '/tmp/salaries.txt' into table SALARIES fields terminated by ','; alter table SALARIES add column `ID` int(10) unsigned primary KEY AUTO_INCREMENT;

Also, Mysql version i have is: MySQL 5.1.73 [these 2 are the differences i can think of]

Thanks,

Jobin

Created 12-21-2015 07:53 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Ok thanks, with your DDL I can recreate. Looking into it now...

Created 12-21-2015 08:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This turns out to be specific to using "unsigned int" which is essentially a Long, but we are generating an Avro schema that expects an "int". Some changes in 0.4.0 that fixed other issues with ExecuteSQL appear to have introduced this. I captured the issue with this JIRA: https://issues.apache.org/jira/browse/NIFI-1319

Created 12-21-2015 09:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Bryan. For now I will recreate the table with out 'unsigned' for labs we have.

Thanks,

Jobin George