Executing Shell script to a remote machine in NiFi in HDF

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created 06-26-2018 11:39 AM


I have an 8-node cluster. NiFi is installed on only one node. I want to use a NiFi processor to trigger a shell/Python script on a remote machine.

Example:

Machine 1: NiFi is installed here.

Machine 4: The script has to be executed here, and the files supporting the script are available on Machine 4 (the script cannot be moved to the NiFi node).

Please tell me:

1. What processors should I use, and how should the flow be built?

2. How do I trigger a shell script on a remote machine using NiFi?

3. How do I log the flow, if possible, in case of any errors or failures, and trigger an email (optional)? I need this scenario for many use cases.

I have googled a lot. The ExecuteScript processor offers only Python, Ruby, Groovy, etc. in its list of options, not shell script.

How do I provide the SSH username and key/password?

@Shu

Created on 06-27-2018 03:56 AM - edited 08-17-2019 05:18 PM


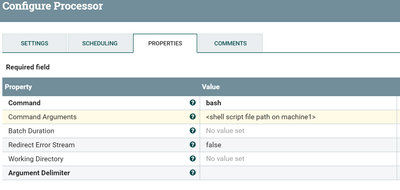

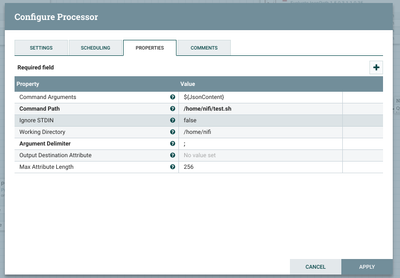

You can use the ExecuteProcess processor (which does not allow incoming connections) or the ExecuteStreamCommand processor to trigger the shell script.

ExecuteProcess configs:

Because your script is on Machine 4 and NiFi is installed on Machine 1, create a shell script on Machine 1 that connects to Machine 4 over SSH and triggers your Python script.

Refer to this and this link, which describe how to use a username/password when connecting to a remote machine over SSH.
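A minimal sketch of such a wrapper, written in Python rather than shell so it is easy to test, and assuming key-based authentication instead of a password. The user name, host name, key path, and remote command in the usage note are placeholders, not values from this thread.

```python
import subprocess


def build_ssh_command(user, host, key_path, remote_command):
    """Return the argv list for a non-interactive, key-based ssh call."""
    return [
        "ssh",
        "-i", key_path,             # private key file used for authentication
        "-o", "BatchMode=yes",      # never prompt for a password; fail instead
        "-o", "ConnectTimeout=10",  # give up quickly if the host is unreachable
        f"{user}@{host}",
        remote_command,
    ]


def run_remote(user, host, key_path, remote_command):
    """Run the command on the remote host; return (exit_code, stdout, stderr)."""
    result = subprocess.run(
        build_ssh_command(user, host, key_path, remote_command),
        capture_output=True,
        text=True,
    )
    # ssh exits with the remote command's status, or 255 if ssh itself failed.
    return result.returncode, result.stdout, result.stderr
```

For example, `run_remote("etl_user", "machine4.example.com", "/home/nifi/.ssh/id_rsa", "python /opt/jobs/my_job.py")` would run the job on Machine 4, provided the key is readable by the user that runs NiFi. A script built around this could then be called from ExecuteProcess or ExecuteStreamCommand on Machine 1.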

Since you are going to store the logs in a file, you can use the TailFile processor to tail the log file, check for any ERROR/WARN entries with the RouteText processor, and then trigger an email.
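To make the ERROR/WARN check concrete, here is a rough Python sketch of the kind of line filter that RouteText would apply inside the flow. The regular expression and the sample log lines are assumptions; adjust them to the actual log format of your script.

```python
import re

# Matches the log levels of interest as whole words (WARN or WARNING, ERROR).
LEVEL_PATTERN = re.compile(r"\b(ERROR|WARN(?:ING)?)\b")


def find_problem_lines(lines):
    """Return (level, line) pairs for lines that mention ERROR or WARN."""
    hits = []
    for line in lines:
        match = LEVEL_PATTERN.search(line)
        if match:
            hits.append((match.group(1), line.rstrip("\n")))
    return hits


# Hypothetical log lines, for illustration only.
sample = [
    "2018-06-27 03:56:01 INFO job started\n",
    "2018-06-27 03:56:02 WARN retrying connection\n",
    "2018-06-27 03:56:03 ERROR job failed\n",
]
for level, line in find_problem_lines(sample):
    print(level, "->", line)
```

Only the lines that match would be routed on to the step that sends the email; everything else can be dropped.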

(or)

Fetch the application ID (or application name) of the process, then use the YARN REST API to get the status of the job.

Please refer to "How to monitor YARN applications using NiFi" and "Starting Spark jobs directly via YARN REST API"; this link describes the YARN REST API capabilities.
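As a sketch of the YARN status check mentioned above, the following Python snippet queries the ResourceManager's cluster applications endpoint (`/ws/v1/cluster/apps/{appid}`) and reads the `state` and `finalStatus` fields. The ResourceManager address and the application ID in the usage note are placeholders, and the snippet assumes the REST API is reachable without authentication.

```python
import json
from urllib.request import Request, urlopen


def app_status_url(rm_base_url, app_id):
    """Build the ResourceManager REST URL for a single application."""
    return f"{rm_base_url.rstrip('/')}/ws/v1/cluster/apps/{app_id}"


def parse_app_status(payload):
    """Extract (state, finalStatus) from the JSON body of the response."""
    app = json.loads(payload)["app"]
    return app["state"], app["finalStatus"]


def fetch_app_status(rm_base_url, app_id, timeout=10):
    """Call the ResourceManager and return (state, finalStatus)."""
    request = Request(
        app_status_url(rm_base_url, app_id),
        headers={"Accept": "application/json"},  # ask for JSON, not XML
    )
    with urlopen(request, timeout=timeout) as response:
        return parse_app_status(response.read().decode("utf-8"))
```

For example, `fetch_app_status("http://rm.example.com:8088", "application_1530000000000_0001")` might return a pair such as `("FINISHED", "SUCCEEDED")` for a completed job. Inside NiFi, the same HTTP call could be made from the flow itself rather than from a script.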

Created on 06-26-2018 05:47 PM - edited 08-17-2019 05:18 PM


Hi @Raj ji!

I'm not sure I understood you correctly, but if you need to call a shell script on a remote machine, you can use a Remote Process Group (RPG) and, at that RPG, use ExecuteStreamCommand; here's an example.

PS: To enable the RPG, you'll need to configure Site-to-Site on NiFi; here's the documentation:

https://docs.hortonworks.com/HDPDocuments/HDF3/HDF-3.1.1/bk_user-guide/content/configure-site-to-sit...

Hope this helps!
