Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Export from HDFS to mysql using Sqoop

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Export from HDFS to mysql using Sqoop

- Labels:

-

Apache Hive

-

Apache Sqoop

Created on 09-26-2017 02:07 PM - edited 08-17-2019 10:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

When i execute this command

sqoop export --connect jdbc:mysql://127.0.0.1/mooc2015 -m 1 --driver com.mysql.jdbc.Driver --table Act_Grade --export-dir /apps/hive/warehouse/hactivitygrade --input-fields-terminated-by '\0001'

I got following error

Warning: /usr/hdp/2.6.1.0-129/accumulo does not exist! Accumulo imports will fail. Please set $ACCUMULO_HOME to the root of your Accumulo installation. 17/09/26 11:54:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6.2.6.1.0-129 17/09/26 11:54:10 WARN sqoop.ConnFactory: Parameter --driver is set to an explicit driver however appropriate connection manager is not being set (via --connection-manager). Sqoop is going to fall back to org.apache.sqoop.manager.GenericJdbcManager. Please specify explicitly which connection manager should be used next time. 17/09/26 11:54:10 INFO manager.SqlManager: Using default fetchSize of 1000 17/09/26 11:54:10 INFO tool.CodeGenTool: Beginning code generation 17/09/26 11:54:11 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM Act_Grade AS t WHERE 1=0 17/09/26 11:54:11 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM Act_Grade AS t WHERE 1=0 17/09/26 11:54:11 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/2.6.1.0-129/hadoop-mapreduce Note: /tmp/sqoop-root/compile/c5caa032c3405b5c7443d0d77d356be1/Act_Grade.java uses or overrides a deprecated API. Note: Recompile with -Xlint:deprecation for details. 17/09/26 11:54:14 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/c5caa032c3405b5c7443d0d77d356be1/Act_Grade.jar 17/09/26 11:54:14 INFO mapreduce.ExportJobBase: Beginning export of Act_Grade 17/09/26 11:54:16 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM Act_Grade AS t WHERE 1=0 17/09/26 11:54:17 INFO client.RMProxy: Connecting to ResourceManager at sandbox.hortonworks.com/172.17.0.2:8032 17/09/26 11:54:17 INFO client.AHSProxy: Connecting to Application History server at sandbox.hortonworks.com/172.17.0.2:10200 17/09/26 11:54:26 INFO input.FileInputFormat: Total input paths to process : 8 17/09/26 11:54:26 INFO input.FileInputFormat: Total input paths to process : 8 17/09/26 11:54:26 INFO mapreduce.JobSubmitter: number of splits:1 17/09/26 11:54:27 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1506422992590_0008 17/09/26 11:54:27 INFO impl.YarnClientImpl: Submitted application application_1506422992590_0008 17/09/26 11:54:28 INFO mapreduce.Job: The url to track the job: http://sandbox.hortonworks.com:8088/proxy/application_1506422992590_0008/ 17/09/26 11:54:28 INFO mapreduce.Job: Running job: job_1506422992590_0008 17/09/26 11:54:37 INFO mapreduce.Job: Job job_1506422992590_0008 running in uber mode : false 17/09/26 11:54:37 INFO mapreduce.Job: map 0% reduce 0% 17/09/26 11:54:45 INFO mapreduce.Job: map 100% reduce 0% 17/09/26 11:54:45 INFO mapreduce.Job: Job job_1506422992590_0008 failed with state FAILED due to: Task failed task_1506422992590_0008_m_000000 Job failed as tasks failed. failedMaps:1 failedReduces:0 17/09/26 11:54:46 INFO mapreduce.Job: Counters: 8 Job Counters Failed map tasks=1 Launched map tasks=1 Data-local map tasks=1 Total time spent by all maps in occupied slots (ms)=11272 Total time spent by all reduces in occupied slots (ms)=0 Total time spent by all map tasks (ms)=5636 Total vcore-milliseconds taken by all map tasks=5636 Total megabyte-milliseconds taken by all map tasks=2818000 17/09/26 11:54:46 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead 17/09/26 11:54:46 INFO mapreduce.ExportJobBase: Transferred 0 bytes in 29.3334 seconds (0 bytes/sec) 17/09/26 11:54:46 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead 17/09/26 11:54:46 INFO mapreduce.ExportJobBase: Exported 0 records. 17/09/26 11:54:46 ERROR mapreduce.ExportJobBase: Export job failed! 17/09/26 11:54:46 ERROR tool.ExportTool: Error during export: Export job failed!

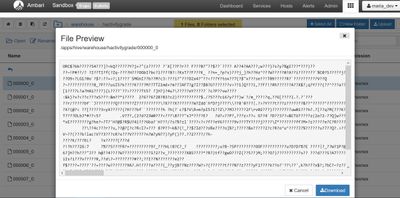

for more informations (images)

Thanks and Regards

Created 09-26-2017 05:27 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you post the application log from the failed application application_1506422992590_0008? You can collect that by running

yarn logs -applicationId application_1506422992590_0008 > app_logs.txt

Created 09-26-2017 05:53 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

sqoop export --connect jdbc:mysql://localhost/naveendb --username root --password root --table exporttab --export-dir /hdfsJob/part-m-*;

Created 09-26-2017 08:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I got the same error

Created 09-27-2017 01:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Br Hmedna You are trying to export ORC data into mysql without converting it to text. You should use sqoop hive export to do this. Look at this link https://community.hortonworks.com/questions/22425/sqoop-export-from-hive-table-specifying-delimiters...

Created 09-27-2017 01:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you attach the resource manager log?

And where is the option --update-mode ?

Created 09-27-2017 05:41 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Br Hmedna,

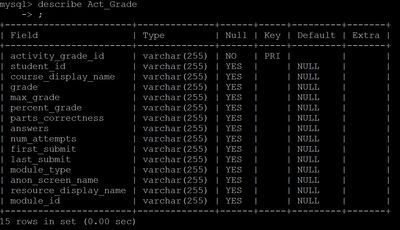

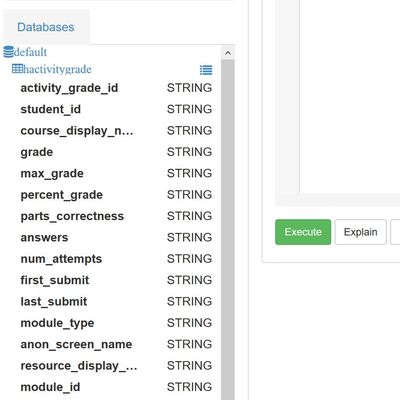

Couple of things, from screen shot your data is in ORC format.

to import and export use HCatalog integration,

the syntax for the export would be

sqoop export --connect jdbc:mysql://127.0.0.1/mooc2015 –username <mysql_User> –password <mysql_pwd> –table Act_Grade –hcatalog-table <hive_table> --hcatalog-database <hive_database_name>

please note that --export-dir option is not supported while on hcat integration, so better use above syntax.

on the side note for debugging your code.

the error you have provided is not the application log, high-level sqoop log.

Yarn app(task) log can be found with the extension(job_1506422992590_0008), where you can find the reason for the failure.

hope this helps !!

Created 08-15-2018 06:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

I used below command for oracle and it was perfect worked for me. How can I use customize sql in sqoop export from hive to other DB ?

sqoop export --connect "jdbc:oracle:thin:@10.10.2.19:2632:blbidb" --password "abc_123" --username "churn" --table "CHURN_MODEL_DMP_TMP" --hcatalog-table "abc_test" --hcatalog-database "dd_ads"

Regards,

Khiarul Hasan

Created 12-29-2020 07:33 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Below export command worked for me.

CREATE table departments_export (departmentid int(11), department_name varchar(45), created_date T1MESTAMP);

sqoop export --connect jdbc:mysql://<host>:3306/DB --username cloudera --password *** \

--table departments_export \

--export-dir '/user/cloudera/departments_new/*' \

-m 1 \

--input-fields-terminated-by ',';

Sample input: 103,Finance,2020-10-10 10:10:00