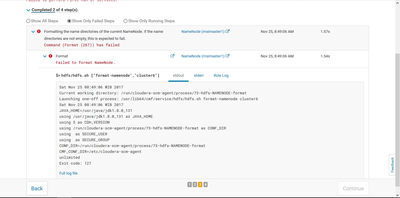

Failed to format namenode

- Labels: Cloudera Manager, HDFS

Created 11-23-2017 05:56 PM

Hi,

I am trying to install Cloudera Manager and CDH 5.13, but when I add the HDFS service and try to start it, it returns "failed to format namenode".

From stderr I can see that /usr/lib/hadoop-hdfs/bin/hdfs is not found. Mine is located under /var/lib, not /usr/lib. How can I fix this?

/usr/lib64/cmf/service/hdfs/hdfs.sh: line 273: /usr/lib/hadoop-hdfs/bin/hdfs: No such file or directory

Sat Nov 25 08:49:06 WIB 2017

Sat Nov 25 08:49:06 WIB 2017

+ source_parcel_environment

+ '[' '!' -z '' ']'

+ locate_cdh_java_home

+ '[' -z /usr/java/jdk1.8.0_131 ']'

+ verify_java_home

+ '[' -z /usr/java/jdk1.8.0_131 ']'

+ echo JAVA_HOME=/usr/java/jdk1.8.0_131

+ . /usr/lib64/cmf/service/common/cdh-default-hadoop

++ [[ -z 5 ]]

++ '[' 5 = 3 ']'

++ '[' 5 = -3 ']'

++ '[' 5 -ge 4 ']'

++ export HADOOP_HOME_WARN_SUPPRESS=true

++ HADOOP_HOME_WARN_SUPPRESS=true

++ export HADOOP_PREFIX=/usr/lib/hadoop

++ HADOOP_PREFIX=/usr/lib/hadoop

++ export HADOOP_LIBEXEC_DIR=/usr/lib/hadoop/libexec

++ HADOOP_LIBEXEC_DIR=/usr/lib/hadoop/libexec

++ export HADOOP_CONF_DIR=/run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format

++ HADOOP_CONF_DIR=/run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format

++ export HADOOP_COMMON_HOME=/usr/lib/hadoop

++ HADOOP_COMMON_HOME=/usr/lib/hadoop

++ export HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs

++ HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs

++ export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

++ HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

++ '[' 5 = 4 ']'

++ '[' 5 = 5 ']'

++ export HADOOP_YARN_HOME=/usr/lib/hadoop-yarn

++ HADOOP_YARN_HOME=/usr/lib/hadoop-yarn

++ replace_pid -Xms173015040 -Xmx173015040 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError '-XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-712bef7a49c7804f9bb06998eee2fed7_pid{{PID}}.hprof' -XX:OnOutOfMemoryError=/usr/lib64/cmf/service/common/killparent.sh

++ sed 's#{{PID}}#12451#g'

++ echo -Xms173015040 -Xmx173015040 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError '-XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-712bef7a49c7804f9bb06998eee2fed7_pid{{PID}}.hprof' -XX:OnOutOfMemoryError=/usr/lib64/cmf/service/common/killparent.sh

+ export 'HADOOP_NAMENODE_OPTS=-Xms173015040 -Xmx173015040 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-712bef7a49c7804f9bb06998eee2fed7_pid12451.hprof -XX:OnOutOfMemoryError=/usr/lib64/cmf/service/common/killparent.sh'

+ HADOOP_NAMENODE_OPTS='-Xms173015040 -Xmx173015040 -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp/hdfs_hdfs-NAMENODE-712bef7a49c7804f9bb06998eee2fed7_pid12451.hprof -XX:OnOutOfMemoryError=/usr/lib64/cmf/service/common/killparent.sh'

++ replace_pid

++ echo

++ sed 's#{{PID}}#12451#g'

+ export HADOOP_DATANODE_OPTS=

+ HADOOP_DATANODE_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#12451#g'

+ export HADOOP_SECONDARYNAMENODE_OPTS=

+ HADOOP_SECONDARYNAMENODE_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#12451#g'

+ export HADOOP_NFS3_OPTS=

+ HADOOP_NFS3_OPTS=

++ replace_pid

++ echo

++ sed 's#{{PID}}#12451#g'

+ export HADOOP_JOURNALNODE_OPTS=

+ HADOOP_JOURNALNODE_OPTS=

+ '[' 5 -ge 4 ']'

+ HDFS_BIN=/usr/lib/hadoop-hdfs/bin/hdfs

+ export 'HADOOP_OPTS=-Djava.net.preferIPv4Stack=true '

+ HADOOP_OPTS='-Djava.net.preferIPv4Stack=true '

+ echo 'using /usr/java/jdk1.8.0_131 as JAVA_HOME'

+ echo 'using 5 as CDH_VERSION'

+ echo 'using /run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format as CONF_DIR'

+ echo 'using as SECURE_USER'

+ echo 'using as SECURE_GROUP'

+ set_hadoop_classpath

+ set_classpath_in_var HADOOP_CLASSPATH

+ '[' -z HADOOP_CLASSPATH ']'

+ [[ -n /usr/share/cmf ]]

++ tr '\n' :

++ find /usr/share/cmf/lib/plugins -maxdepth 1 -name '*.jar'

+ ADD_TO_CP=/usr/share/cmf/lib/plugins/event-publish-5.13.0-shaded.jar:/usr/share/cmf/lib/plugins/tt-instrumentation-5.13.0.jar:

+ [[ -n navigator/cdh5 ]]

+ for DIR in '$CM_ADD_TO_CP_DIRS'

++ find /usr/share/cmf/lib/plugins/navigator/cdh5 -maxdepth 1 -name '*.jar'

++ tr '\n' :

+ PLUGIN=/usr/share/cmf/lib/plugins/navigator/cdh5/audit-plugin-cdh5-2.12.0-shaded.jar:

+ ADD_TO_CP=/usr/share/cmf/lib/plugins/event-publish-5.13.0-shaded.jar:/usr/share/cmf/lib/plugins/tt-instrumentation-5.13.0.jar:/usr/share/cmf/lib/plugins/navigator/cdh5/audit-plugin-cdh5-2.12.0-shaded.jar:

+ eval 'OLD_VALUE=$HADOOP_CLASSPATH'

++ OLD_VALUE=

+ NEW_VALUE=/usr/share/cmf/lib/plugins/event-publish-5.13.0-shaded.jar:/usr/share/cmf/lib/plugins/tt-instrumentation-5.13.0.jar:/usr/share/cmf/lib/plugins/navigator/cdh5/audit-plugin-cdh5-2.12.0-shaded.jar:

+ export HADOOP_CLASSPATH=/usr/share/cmf/lib/plugins/event-publish-5.13.0-shaded.jar:/usr/share/cmf/lib/plugins/tt-instrumentation-5.13.0.jar:/usr/share/cmf/lib/plugins/navigator/cdh5/audit-plugin-cdh5-2.12.0-shaded.jar

+ HADOOP_CLASSPATH=/usr/share/cmf/lib/plugins/event-publish-5.13.0-shaded.jar:/usr/share/cmf/lib/plugins/tt-instrumentation-5.13.0.jar:/usr/share/cmf/lib/plugins/navigator/cdh5/audit-plugin-cdh5-2.12.0-shaded.jar

+ set -x

+ replace_conf_dir

+ echo CONF_DIR=/run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format

+ echo CMF_CONF_DIR=/etc/cloudera-scm-agent

+ EXCLUDE_CMF_FILES=('cloudera-config.sh' 'httpfs.sh' 'hue.sh' 'impala.sh' 'sqoop.sh' 'supervisor.conf' 'config.zip' 'proc.json' '*.log' '*.keytab' '*jceks')

++ printf '! -name %s ' cloudera-config.sh httpfs.sh hue.sh impala.sh sqoop.sh supervisor.conf config.zip proc.json '*.log' hdfs.keytab '*jceks'

+ find /run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format -type f '!' -path '/run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format/logs/*' '!' -name cloudera-config.sh '!' -name httpfs.sh '!' -name hue.sh '!' -name impala.sh '!' -name sqoop.sh '!' -name supervisor.conf '!' -name config.zip '!' -name proc.json '!' -name '*.log' '!' -name hdfs.keytab '!' -name '*jceks' -exec perl -pi -e 's#{{CMF_CONF_DIR}}#/run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format#g' '{}' ';'

+ make_scripts_executable

+ find /run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format -regex '.*\.\(py\|sh\)$' -exec chmod u+x '{}' ';'

+ '[' DATANODE_MAX_LOCKED_MEMORY '!=' '' ']'

+ ulimit -l

+ export HADOOP_IDENT_STRING=hdfs

+ HADOOP_IDENT_STRING=hdfs

+ '[' -n '' ']'

+ '[' mkdir '!=' format-namenode ']'

+ acquire_kerberos_tgt hdfs.keytab

+ '[' -z hdfs.keytab ']'

+ '[' -n '' ']'

+ '[' validate-writable-empty-dirs = format-namenode ']'

+ '[' file-operation = format-namenode ']'

+ '[' bootstrap = format-namenode ']'

+ '[' failover = format-namenode ']'

+ '[' transition-to-active = format-namenode ']'

+ '[' initializeSharedEdits = format-namenode ']'

+ '[' initialize-znode = format-namenode ']'

+ '[' format-namenode = format-namenode ']'

+ '[' -z /data/1/dfs/nn ']'

+ for dfsdir in '$DFS_STORAGE_DIRS'

+ '[' -e /data/1/dfs/nn ']'

+ '[' '!' -d /data/1/dfs/nn ']'

+ CLUSTER_ARGS=

+ '[' 2 -eq 2 ']'

+ CLUSTER_ARGS='-clusterId cluster6'

+ '[' 3 = 5 ']'

+ '[' -3 = 5 ']'

+ exec /usr/lib/hadoop-hdfs/bin/hdfs --config /run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format namenode -format -clusterId cluster6 -nonInteractive

/usr/lib64/cmf/service/hdfs/hdfs.sh: line 273: /usr/lib/hadoop-hdfs/bin/hdfs: No such file or directory
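For readers facing a similarly long trace, the relevant lines can be pulled out mechanically. The following is a minimal Python sketch, not part of Cloudera Manager: the function names `find_missing_paths` and `uses_usr_lib_layout` are hypothetical, and treating a `/usr/lib` value of `HADOOP_HDFS_HOME` as a sign that the script fell back to its default locations is only a heuristic based on the trace above.

```python
import re

# Matches the shell's complaint about a missing executable, e.g.
# "/path/script.sh: line 273: /missing/binary: No such file or directory"
MISSING_RE = re.compile(
    r"^(?P<script>\S+): line (?P<line>\d+): (?P<path>\S+): No such file or directory$"
)


def find_missing_paths(stderr_text):
    """Return the distinct paths reported as missing, in order of appearance."""
    missing = []
    for raw in stderr_text.splitlines():
        m = MISSING_RE.match(raw.strip())
        if m and m.group("path") not in missing:
            missing.append(m.group("path"))
    return missing


def uses_usr_lib_layout(stderr_text):
    """Heuristic: True if the trace exports HADOOP_HDFS_HOME under /usr/lib."""
    return any(
        line.strip().endswith("export HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs")
        for line in stderr_text.splitlines()
    )


# A three-line excerpt copied from the stderr output in this post.
sample = """\
++ export HADOOP_HDFS_HOME=/usr/lib/hadoop-hdfs
+ exec /usr/lib/hadoop-hdfs/bin/hdfs --config /run/cloudera-scm-agent/process/73-hdfs-NAMENODE-format namenode -format -clusterId cluster6 -nonInteractive
/usr/lib64/cmf/service/hdfs/hdfs.sh: line 273: /usr/lib/hadoop-hdfs/bin/hdfs: No such file or directory
"""

print(find_missing_paths(sample))
print(uses_usr_lib_layout(sample))
```

If both checks point at `/usr/lib`, the script is looking for the binaries in a location where nothing has been installed, which is a hint to check how CDH was deployed on the host.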

Created 11-26-2017 11:49 AM

Created 11-23-2017 07:29 PM

Whoops

This issue happened because I hadn't activated the parcels. It's solved now after I distributed my parcels. Newbie mistake.
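A quick way to confirm this kind of problem before retrying the format step is to check where the `hdfs` launcher actually exists on the NameNode host. The sketch below is only an illustration under assumptions: the `/usr/lib` path comes from the stderr trace above, while the parcel path assumes the default parcel directory `/opt/cloudera/parcels` with a `CDH` symlink to the active parcel. The names `locate_hdfs` and `diagnose` are hypothetical.

```python
import os

# Path taken from the stderr trace in this thread.
PACKAGE_HDFS = "/usr/lib/hadoop-hdfs/bin/hdfs"
# Assumption: default parcel directory, with a CDH symlink to the active parcel.
PARCEL_HDFS = "/opt/cloudera/parcels/CDH/lib/hadoop-hdfs/bin/hdfs"


def locate_hdfs(candidates=(PACKAGE_HDFS, PARCEL_HDFS), exists=os.path.exists):
    """Return the candidate launcher paths that exist on this host."""
    return [path for path in candidates if exists(path)]


def diagnose(found):
    """Turn the list of found launchers into a short hint."""
    if not found:
        return ("no hdfs launcher found: check that the CDH parcel is "
                "downloaded, distributed and activated")
    return "hdfs launcher found at: " + ", ".join(found)


if __name__ == "__main__":
    print(diagnose(locate_hdfs()))
```

The `exists` parameter is injectable so the check can be exercised without touching the real filesystem. On a host where neither path exists, the hint points back at the parcel state in Cloudera Manager, which matches the cause described in this reply.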
