Failure deploying cluster to localhost - zookeeper

- Labels: Cloudera DataFlow (CDF)

Created 07-12-2018 07:54 PM


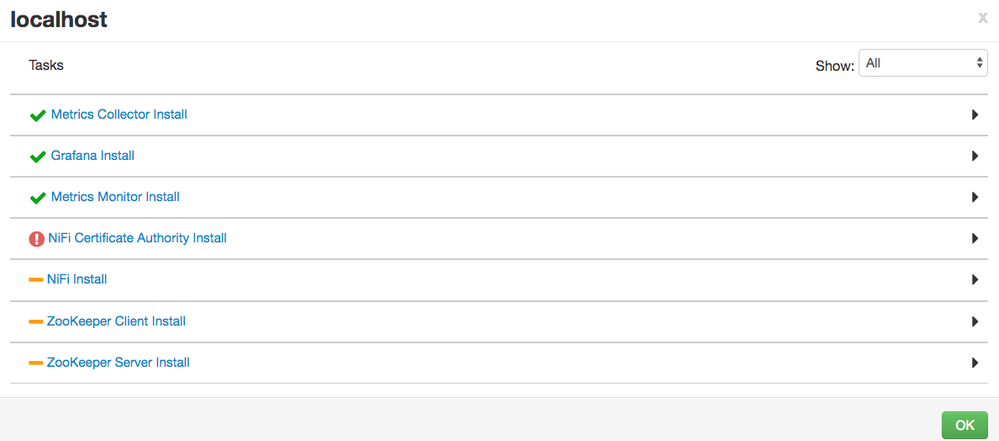

Hi, I am getting failures when deploying to localhost. I am mainly trying to test out NiFi. The failures are on ZooKeeper and NiFi certificate auth. I did add a password for the Advanced nifi-ambari-ssl-config, but it still seems to fail. The logs are below, and the screenshot of the failures is attached.

I believe my server setup is good: Ubuntu 16, build # 3.1.2.0-7.

After I launched the wizard and went through the install options, it gave me 2 warnings about what I had missed in my server setup: installing/enabling ntp and disabling THP. I also changed the hostname to localhost.

It seems like ZooKeeper might be the problem, but I'm not sure.

I tried the fix in this article, but I don't have a /usr/hdp/ folder.

Following this tutorial as closely as I can:

Thanks for any help,

Ron


2018-07-12 19:33:25,796 - Stack Feature Version Info: Cluster Stack=3.1, Command Stack=None, Command Version=None -> 3.1

User Group mapping (user_group) is missing in the hostLevelParams

2018-07-12 19:33:25,799 - Group['hadoop'] {}

2018-07-12 19:33:25,800 - Group['nifi'] {}

2018-07-12 19:33:25,800 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-07-12 19:33:25,800 - call['/var/lib/ambari-agent/tmp/changeUid.sh zookeeper'] {}

2018-07-12 19:33:25,806 - call returned (0, '1001')

2018-07-12 19:33:25,807 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': 1001}

2018-07-12 19:33:25,807 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-07-12 19:33:25,808 - call['/var/lib/ambari-agent/tmp/changeUid.sh ams'] {}

2018-07-12 19:33:25,813 - call returned (0, '1002')

2018-07-12 19:33:25,814 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': 1002}

2018-07-12 19:33:25,814 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users'], 'uid': None}

2018-07-12 19:33:25,815 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-07-12 19:33:25,815 - call['/var/lib/ambari-agent/tmp/changeUid.sh nifi'] {}

2018-07-12 19:33:25,821 - call returned (0, '1004')

2018-07-12 19:33:25,821 - User['nifi'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': 1004}

2018-07-12 19:33:25,821 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-07-12 19:33:25,822 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2018-07-12 19:33:25,826 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2018-07-12 19:33:25,837 - Repository['HDF-3.1-repo-4'] {'append_to_file': False, 'base_url': 'http://public-repo-1.hortonworks.com/HDF/ubuntu16/3.x/updates/3.1.2.0', 'action': ['create'], 'components': [u'HDF', 'main'], 'repo_template': '{{package_type}} {{base_url}} {{components}}', 'repo_file_name': 'ambari-hdf-4', 'mirror_list': None}

2018-07-12 19:33:25,843 - File['/tmp/tmp0JlPhQ'] {'content': 'deb http://public-repo-1.hortonworks.com/HDF/ubuntu16/3.x/updates/3.1.2.0 HDF main'}

2018-07-12 19:33:25,843 - Writing File['/tmp/tmp0JlPhQ'] because contents don't match

2018-07-12 19:33:25,843 - File['/tmp/tmphevGen'] {'content': StaticFile('/etc/apt/sources.list.d/ambari-hdf-4.list')}

2018-07-12 19:33:25,843 - Writing File['/tmp/tmphevGen'] because contents don't match

2018-07-12 19:33:25,844 - File['/etc/apt/sources.list.d/ambari-hdf-4.list'] {'content': StaticFile('/tmp/tmp0JlPhQ')}

2018-07-12 19:33:25,844 - Writing File['/etc/apt/sources.list.d/ambari-hdf-4.list'] because contents don't match

2018-07-12 19:33:25,844 - checked_call[['apt-get', 'update', '-qq', '-o', u'Dir::Etc::sourcelist=sources.list.d/ambari-hdf-4.list', '-o', 'Dir::Etc::sourceparts=-', '-o', 'APT::Get::List-Cleanup=0']] {'sudo': True, 'quiet': False}

2018-07-12 19:33:25,975 - checked_call returned (0, '')

2018-07-12 19:33:25,976 - Repository['HDP-UTILS-1.1.0.21-repo-4'] {'append_to_file': True, 'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/ubuntu16', 'action': ['create'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '{{package_type}} {{base_url}} {{components}}', 'repo_file_name': 'ambari-hdf-4', 'mirror_list': None}

2018-07-12 19:33:25,978 - File['/tmp/tmpdUcPvV'] {'content': 'deb http://public-repo-1.hortonworks.com/HDF/ubuntu16/3.x/updates/3.1.2.0 HDF main\ndeb http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/ubuntu16 HDP-UTILS main'}

2018-07-12 19:33:25,978 - Writing File['/tmp/tmpdUcPvV'] because contents don't match

2018-07-12 19:33:25,978 - File['/tmp/tmpQlo4lv'] {'content': StaticFile('/etc/apt/sources.list.d/ambari-hdf-4.list')}

2018-07-12 19:33:25,979 - Writing File['/tmp/tmpQlo4lv'] because contents don't match

2018-07-12 19:33:25,979 - File['/etc/apt/sources.list.d/ambari-hdf-4.list'] {'content': StaticFile('/tmp/tmpdUcPvV')}

2018-07-12 19:33:25,979 - Writing File['/etc/apt/sources.list.d/ambari-hdf-4.list'] because contents don't match

2018-07-12 19:33:25,979 - checked_call[['apt-get', 'update', '-qq', '-o', u'Dir::Etc::sourcelist=sources.list.d/ambari-hdf-4.list', '-o', 'Dir::Etc::sourceparts=-', '-o', 'APT::Get::List-Cleanup=0']] {'sudo': True, 'quiet': False}

2018-07-12 19:33:26,207 - checked_call returned (0, 'W: http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/ubuntu16/dists/HDP-UTILS/InRelease: Signature by key DF52ED4F7A3A5882C0994C66B9733A7A07513CAD uses weak digest algorithm (SHA1)')

2018-07-12 19:33:26,207 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-07-12 19:33:26,221 - Skipping installation of existing package unzip

2018-07-12 19:33:26,221 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-07-12 19:33:26,234 - Skipping installation of existing package curl

2018-07-12 19:33:26,234 - Package['hdf-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-07-12 19:33:26,246 - Skipping installation of existing package hdf-select

2018-07-12 19:33:26,285 - call[('ambari-python-wrap', u'/usr/bin/hdf-select', 'versions')] {}

2018-07-12 19:33:26,301 - call returned (1, 'Traceback (most recent call last):\nFile "/usr/bin/hdf-select", line 403, in <module>\nprintVersions()\nFile "/usr/bin/hdf-select", line 248, in printVersions\nfor f in os.listdir(root):\nOSError: [Errno 2] No such file or directory: \'/usr/hdf\'')

2018-07-12 19:33:26,425 - Could not determine stack version for component zookeeper by calling '/usr/bin/hdf-select status zookeeper > /tmp/tmpgMWFwS'. Return Code: 1, Output: ERROR: Invalid package - zookeeper

Packages:

accumulo-client

accumulo-gc

accumulo-master

accumulo-monitor

accumulo-tablet

accumulo-tracer

atlas-client

atlas-server

falcon-client

falcon-server

flume-server

hadoop-client

hadoop-hdfs-datanode

hadoop-hdfs-journalnode

hadoop-hdfs-namenode

hadoop-hdfs-nfs3

hadoop-hdfs-portmap

hadoop-hdfs-secondarynamenode

hadoop-httpfs

hadoop-mapreduce-historyserver

hadoop-yarn-nodemanager

hadoop-yarn-resourcemanager

hadoop-yarn-timelineserver

hbase-client

hbase-master

hbase-regionserver

hive-metastore

hive-server2

hive-server2-hive2

hive-webhcat

kafka-broker

knox-server

livy-server

mahout-client

nifi

nifi-registry

oozie-client

oozie-server

phoenix-client

phoenix-server

ranger-admin

ranger-kms

ranger-tagsync

ranger-usersync

registry

slider-client

spark-client

spark-historyserver

spark-thriftserver

spark2-client

spark2-historyserver

spark2-thriftserver

sqoop-client

sqoop-server

storm-client

storm-nimbus

storm-supervisor

streamline

zeppelin-server

zookeeper-client

zookeeper-server

Aliases:

accumulo-server

all

client

hadoop-hdfs-server

hadoop-mapreduce-server

hadoop-yarn-server

hive-server

Created 07-12-2018 08:26 PM


Well, I took an image of the server just after setup and tried again on a new Ubuntu server, and it worked! I think NiFi is running fine; I'm about to check it out.

This time I left out ambari-metrics. I'm not sure what made the difference, because I didn't change much other than that. Oh, one part did complain about disk space when I went back and tried to reinstall, so I bumped it up from 8 to 32 GB.

It seems ZooKeeper and NiFi installed OK.
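For anyone hitting the same thing, here is a minimal sketch of a pre-install check covering the items that came up in this thread: free disk space, the THP setting, and whether the stack root that hdf-select tried to list (/usr/hdf in the traceback above) exists. The function names and the 20 GB threshold are assumptions made for illustration, not Ambari requirements, and the thread does not establish that any of these was the actual root cause.

```python
import os
import shutil


def parse_thp_mode(text):
    """Return the active mode from THP sysfs content such as 'always madvise [never]'."""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return None


def preflight(stack_root="/usr/hdf", path="/", min_free_gb=20,
              thp_file="/sys/kernel/mm/transparent_hugepage/enabled"):
    """Collect human-readable findings; an empty list means nothing was flagged.

    min_free_gb is a hypothetical threshold chosen for this sketch.
    """
    findings = []

    # Free space on the filesystem that holds `path`.
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    if free_gb < min_free_gb:
        findings.append("low disk space on %s: %.1f GB free" % (path, free_gb))

    # hdf-select lists this directory; in the log it raised OSError when it was missing.
    if not os.path.isdir(stack_root):
        findings.append("stack root %s does not exist" % stack_root)

    # The install wizard warned about THP; the active mode is the bracketed token.
    if os.path.exists(thp_file):
        with open(thp_file) as handle:
            mode = parse_thp_mode(handle.read())
        if mode != "never":
            findings.append("THP mode is %r, expected 'never'" % mode)

    return findings


if __name__ == "__main__":
    for finding in preflight():
        print(finding)
```

Run it on the target host before launching the wizard. Each printed line is only a hint about what to look at, not a diagnosis.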
