Few Questions on Hadoop 2.x architecture

Labels: Apache Hadoop

Created 10-01-2016 06:32 AM

I was going through the Hadoop 2.x architecture and have a few questions about the NameNode and the Resource Manager.

To resolve the NameNode single point of failure in the 1.x architecture, Hadoop 2.x has a standby NameNode.

To reduce the load on the JobTracker, Hadoop 2.x has the Resource Manager.

I wanted to know:

1. What are the roles of the NameNode and the Resource Manager?

2. As there is only one Resource Manager per cluster, couldn't it be a single point of failure?

3. If the NameNode stores metadata about the blocks (as in Hadoop 1.x), which service is responsible for getting data block information after a job is submitted?

See the image: http://hortonworks.com/wp-content/uploads/2014/04/YARN_distributed_arch.png

In it, the Resource Manager interacts directly with the nodes.

4. Can anyone tell me how the flow goes for the commands below?

$ hadoop fs -put <source> <dest>

$ hadoop jar app.jar <app> <inputfilepath> <outputpath>

Created on 10-01-2016 10:27 AM - edited 08-19-2019 04:42 AM

Hi @Gobi Subramani,

Below are answers to your questions:

- The role of the NameNode is to manage the HDFS file system. The role of the Resource Manager is to manage the cluster's resources (CPU, RAM, etc.) in collaboration with the Node Managers. I won't write too much on these aspects, as a lot of documentation is already available. You can read about the HDFS and YARN architecture in the official documentation.

- You can have high availability in YARN by running active and standby Resource Managers (more information here)

- If you have a distributed application (Spark, Tez, etc.) that needs data from HDFS, it will use both YARN and HDFS. YARN lets the application request containers (which hold the required resources: CPU, RAM, etc.) on different nodes. The application is deployed and runs inside these containers. The application is then responsible for getting its data from HDFS by communicating with the NameNode and the DataNodes.

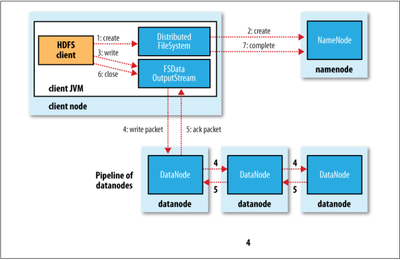

- For the put command, only HDFS is involved. Without going into details: the client asks the NameNode to create a new file in the namespace. The NameNode performs some checks (the file doesn't already exist, the user has permission to write in the directory, etc.) and allows the client to write data. At this point, the new file has no data blocks. Then the client starts writing data in blocks. For each block, the HDFS API asks the NameNode for a list of DataNodes on which it can write the block. The number of DataNodes depends on the replication factor, and the list is ordered by distance from the client. Once the NameNode returns the list of DataNodes, the API writes the data block to the first node, which replicates the block to the next one, and so on. Here's a picture from Hadoop: The Definitive Guide that explains this process.
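To make the put flow a bit more concrete, here is a minimal Python sketch that only simulates the steps described above; it does not use any Hadoop API. The `NameNode`, `DataNode` and `put` names, the integer `distance` field and the block size are all made up for illustration. Real HDFS uses a rack-aware placement policy rather than a plain sort, also checks permissions, and streams blocks in small packets.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    name: str
    distance: int  # hypothetical network distance from the client
    blocks: dict = field(default_factory=dict)

    def receive(self, block_id, data, downstream):
        # Store the block locally, then forward it to the next node in the pipeline.
        self.blocks[block_id] = data
        if downstream:
            downstream[0].receive(block_id, data, downstream[1:])


class NameNode:
    def __init__(self, datanodes, replication=3):
        self.datanodes = datanodes
        self.replication = replication
        self.namespace = {}  # path -> list of (block_id, [DataNode names])
        self._next_block = 0

    def create(self, path):
        # Namespace check: the file must not already exist.
        # (The permission check mentioned above is left out of this sketch.)
        if path in self.namespace:
            raise FileExistsError(path)
        self.namespace[path] = []  # a new file starts with no data blocks

    def add_block(self, path):
        # Pick `replication` targets, closest to the client first (simplified).
        targets = sorted(self.datanodes, key=lambda d: d.distance)[: self.replication]
        block_id = f"blk_{self._next_block}"
        self._next_block += 1
        self.namespace[path].append((block_id, [d.name for d in targets]))
        return block_id, targets


def put(namenode, path, data, block_size):
    namenode.create(path)
    for offset in range(0, len(data), block_size):
        block_id, targets = namenode.add_block(path)
        # The client writes only to the first DataNode; replication is chained.
        targets[0].receive(block_id, data[offset:offset + block_size], targets[1:])


nodes = [DataNode("dn1", 2), DataNode("dn2", 0), DataNode("dn3", 4), DataNode("dn4", 1)]
nn = NameNode(nodes, replication=3)
put(nn, "/user/demo/file.txt", b"abcdefghij", block_size=4)
print(nn.namespace["/user/demo/file.txt"])
```

With these made-up values the file is split into three blocks, each written to dn2 first and then forwarded to dn4 and dn1, while dn3 (the farthest node) receives nothing.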

Created 10-02-2016 02:23 AM

Hi,

Thanks for your quick reply. A couple of points I'd like to clarify:

1. The Application Master is responsible for getting data block information from the NameNode and for creating containers on the respective DataNodes to process the data.

2. It is also responsible for monitoring the tasks; if a task fails, the Application Master starts a container on a different DataNode.
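As a rough illustration of these two points, the Python sketch below models a task being placed near its data and retried on another node after a failure. It is a toy model, not YARN code: the `ResourceManager` class, `run_task`, the `launch` callback and the node names are all hypothetical. One thing it does reflect from YARN is that the Application Master asks the Resource Manager for containers rather than creating them itself.

```python
class ResourceManager:
    """Toy scheduler that tracks free container slots per node."""

    def __init__(self, free_slots):
        self.free_slots = dict(free_slots)  # node name -> free containers

    def allocate(self, preferred, exclude=()):
        # Try the preferred (data-local) nodes first, then any other node.
        candidates = [n for n in preferred if n not in exclude]
        candidates += [
            n for n in self.free_slots if n not in candidates and n not in exclude
        ]
        for node in candidates:
            if self.free_slots.get(node, 0) > 0:
                self.free_slots[node] -= 1
                return node
        return None  # nothing available

    def release(self, node):
        self.free_slots[node] += 1


def run_task(rm, block_locations, launch, max_attempts=3):
    """Application Master logic for one task: request, launch, retry elsewhere."""
    failed_nodes = []
    for _ in range(max_attempts):
        node = rm.allocate(preferred=block_locations, exclude=failed_nodes)
        if node is None:
            break
        succeeded = launch(node)  # hypothetical callback that runs the task
        rm.release(node)
        if succeeded:
            return node
        failed_nodes.append(node)  # avoid this node on the next attempt
    return None


rm = ResourceManager({"node1": 1, "node2": 1, "node3": 1})
# Assumption for the demo: the block lives on node1 and node2, and node1 always fails.
result = run_task(rm, ["node1", "node2"], launch=lambda node: node != "node1")
print(result)  # node2
```

Here the first attempt lands on node1 (data-local) and fails, so the second attempt goes to node2, the other node holding the block.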

Created 10-01-2016 10:42 AM

Please read these links, which should make this easier to understand:

https://hadoop.apache.org/docs/r2.7.2/hadoop-yarn/hadoop-yarn-site/YARN.html

https://hadoop.apache.org/docs/r2.7.2/hadoop-yarn/hadoop-yarn-site/ResourceManagerHA.html

Every hadoop command internally calls a Java utility to carry out the operation. org.apache.hadoop.fs.FsShell provides command-line access to a FileSystem. hadoop fs -put internally calls the corresponding method of that class.

To understand the FsShell code, please go through this link: