Getting Error while performing mapreduce job in Hadoop 3.0.0

- Labels: Apache Hadoop

Created on 03-04-2018 01:51 PM - edited 08-17-2019 05:16 PM

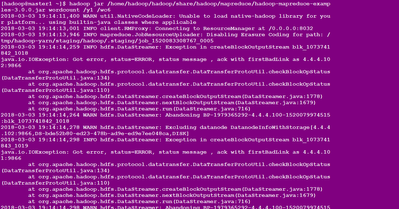

[hadoop@master1 ~]$ hadoop jar /home/hadoop/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0.jar wordcount /y1 /wc6

2018-03-03 19:14:11,400 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-03-03 19:14:13,001 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-03-03 19:14:13,946 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/hadoop/.staging/job_1520083308767_0005

2018-03-03 19:14:14,259 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741842_1018
java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.102:9866
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:110)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1778)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,264 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741842_1018

2018-03-03 19:14:14,278 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.102:9866,DS-bde52b80-ed23-478b-ad9e-ed9e7ee048ca,DISK]

2018-03-03 19:14:14,298 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741843_1019
java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.101:9866
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:110)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1778)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,298 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741843_1019

2018-03-03 19:14:14,302 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.101:9866,DS-f248f737-227b-4dce-b985-411710b93dda,DISK]

2018-03-03 19:14:14,432 INFO input.FileInputFormat: Total input files to process : 1

2018-03-03 19:14:14,611 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741845_1021
java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.101:9866
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:110)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1778)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,611 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741845_1021

2018-03-03 19:14:14,613 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.101:9866,DS-f248f737-227b-4dce-b985-411710b93dda,DISK]

2018-03-03 19:14:14,627 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741846_1022
java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.102:9866
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:110)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1778)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,627 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741846_1022

2018-03-03 19:14:14,630 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.102:9866,DS-bde52b80-ed23-478b-ad9e-ed9e7ee048ca,DISK]

2018-03-03 19:14:14,682 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741848_1024
java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.102:9866
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)
    at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:110)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1778)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,683 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741848_1024

2018-03-03 19:14:14,685 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.102:9866,DS-bde52b80-ed23-478b-ad9e-ed9e7ee048ca,DISK]

2018-03-03 19:14:14,694 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741849_1025
java.io.EOFException: Unexpected EOF while trying to read response from server
    at org.apache.hadoop.hdfs.protocolPB.PBHelperClient.vintPrefixed(PBHelperClient.java:446)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1762)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,695 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741849_1025

2018-03-03 19:14:14,697 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.100:9866,DS-a28c45b8-4b44-45de-80e5-e25d1f9a8e3d,DISK]

2018-03-03 19:14:14,704 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741850_1026
java.net.NoRouteToHostException: No route to host
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)
    at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)
    at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)
    at org.apache.hadoop.hdfs.DataStreamer.createSocketForPipeline(DataStreamer.java:253)
    at org.apache.hadoop.hdfs.DataStreamer.createBlockOutputStream(DataStreamer.java:1725)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1679)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,705 WARN hdfs.DataStreamer: Abandoning BP-1979365292-4.4.4.100-1520079974515:blk_1073741850_1026

2018-03-03 19:14:14,709 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.101:9866,DS-f248f737-227b-4dce-b985-411710b93dda,DISK]

2018-03-03 19:14:14,729 WARN hdfs.DataStreamer: DataStreamer Exception
org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/hadoop-yarn/staging/hadoop/.staging/job_1520083308767_0005/job.splitmetainfo could only be written to 0 of the 1 minReplication nodes. There are 3 datanode(s) running and 3 node(s) are excluded in this operation.
    at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2101)
    at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:287)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2602)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:864)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:549)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1962)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1491)
    at org.apache.hadoop.ipc.Client.call(Client.java:1437)
    at org.apache.hadoop.ipc.Client.call(Client.java:1347)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)
    at com.sun.proxy.$Proxy11.addBlock(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:496)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy12.addBlock(Unknown Source)
    at org.apache.hadoop.hdfs.DFSOutputStream.addBlock(DFSOutputStream.java:1031)
    at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1865)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1668)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

2018-03-03 19:14:14,731 INFO mapreduce.JobSubmitter: Cleaning up the staging area /tmp/hadoop-yarn/staging/hadoop/.staging/job_1520083308767_0005

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /tmp/hadoop-yarn/staging/hadoop/.staging/job_1520083308767_0005/job.splitmetainfo could only be written to 0 of the 1 minReplication nodes. There are 3 datanode(s) running and 3 node(s) are excluded in this operation.
    at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2101)
    at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:287)
    at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2602)
    at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:864)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:549)
    at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)
    at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869)
    at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1962)
    at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)
    at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1491)
    at org.apache.hadoop.ipc.Client.call(Client.java:1437)
    at org.apache.hadoop.ipc.Client.call(Client.java:1347)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:228)
    at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)
    at com.sun.proxy.$Proxy11.addBlock(Unknown Source)
    at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:496)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)
    at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)
    at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)
    at com.sun.proxy.$Proxy12.addBlock(Unknown Source)
    at org.apache.hadoop.hdfs.DFSOutputStream.addBlock(DFSOutputStream.java:1031)
    at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1865)
    at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1668)
    at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:716)

[hadoop@master1 ~]$ hdfs dfsadmin -safemode forceexit

Created 03-04-2018 03:00 PM

Can you check the hosts file on the NameNode and confirm that you can reach all the DataNodes by hostname?

Thanks
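A hostname check can be complemented by a direct connection test to the DataNode data-transfer port, since the posted log shows failures against port 9866 (including a `NoRouteToHostException`, which is often, though not always, a sign of a firewall rule rather than a name-resolution problem). The following is a minimal sketch, not a definitive diagnostic: `check_tcp` and `check_datanodes` are hypothetical helper names, and port 9866 is assumed only because it appears in the log above.

```python
import socket


def check_tcp(host, port, timeout=3.0):
    """Try to open a TCP connection to host:port.

    Returns (True, "connected") on success, or (False, "<ErrorType>: <message>")
    when name resolution, routing, or the connection itself fails.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except OSError as exc:
        # OSError covers refused connections, timeouts, "No route to host",
        # and hostname lookup failures (socket.gaierror).
        return False, f"{type(exc).__name__}: {exc}"


def check_datanodes(hosts, port=9866, timeout=3.0):
    """Run check_tcp against every host; 9866 is the port seen in the log."""
    return {host: check_tcp(host, port, timeout) for host in hosts}
```

Running `check_datanodes(["4.4.4.100", "4.4.4.101", "4.4.4.102"])` (or the corresponding hostnames from the hosts file) on the machine that submits the job, and again on each DataNode, would show whether every node in the write pipeline can reach the others on that port.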

Created 03-04-2018 04:16 PM

Already did, sir.

Created 03-04-2018 03:04 PM

The issue seems to be that the NameNode is not able to reach the DataNodes:

"File /tmp/hadoop-yarn/staging/hadoop/.staging/job_1520083308767_0005/job.splitmetainfo could only be written to 0 of the 1 minReplication nodes. There are 3 datanode(s) running and 3 node(s) are excluded in this operation."

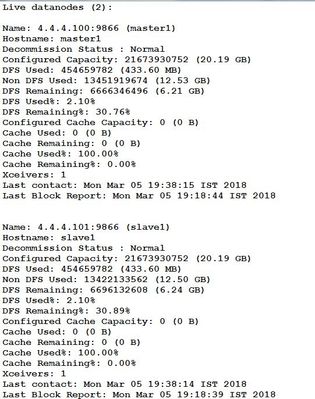

Check the NameNode's reachability to the DataNodes using "hdfs dfsadmin -report".
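The "3 node(s) are excluded" part of the quoted message can be cross-checked against the client log itself, where each failed pipeline attempt is followed by an "Excluding datanode" line. A minimal sketch of such a tally, assuming the log lines have been unwrapped so that addresses contain no stray spaces; `summarize` is a hypothetical helper name and `SAMPLE` holds three lines copied from the posted log:

```python
import re
from collections import Counter

# Patterns for the two message types seen in the posted client log.
EXCLUDED = re.compile(r"Excluding datanode DatanodeInfoWithStorage\[([\d.]+):(\d+),")
BAD_LINK = re.compile(r"firstBadLink as ([\d.]+):(\d+)")

# Three lines copied from the log in the question, with wrap-induced spaces removed.
SAMPLE = """\
2018-03-03 19:14:14,259 INFO hdfs.DataStreamer: Exception in createBlockOutputStream blk_1073741842_1018 java.io.IOException: Got error, status=ERROR, status message , ack with firstBadLink as 4.4.4.102:9866
2018-03-03 19:14:14,278 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.102:9866,DS-bde52b80-ed23-478b-ad9e-ed9e7ee048ca,DISK]
2018-03-03 19:14:14,302 WARN hdfs.DataStreamer: Excluding datanode DatanodeInfoWithStorage[4.4.4.101:9866,DS-f248f737-227b-4dce-b985-411710b93dda,DISK]
"""


def summarize(log_text):
    """Count, per IP address, how often a DataNode was excluded or named as firstBadLink."""
    excluded = Counter(m.group(1) for m in EXCLUDED.finditer(log_text))
    bad_links = Counter(m.group(1) for m in BAD_LINK.finditer(log_text))
    return excluded, bad_links
```

In the full log from the question, all three addresses (4.4.4.100, 4.4.4.101, and 4.4.4.102) appear in "Excluding datanode" lines, which matches the count in the error message. Because these exclusions are made by the client while writing, a clean "hdfs dfsadmin -report" does not by itself rule out a connectivity problem on the data-transfer port.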

Created on 03-04-2018 04:17 PM - edited 08-17-2019 05:16 PM

It seems that all DataNodes are working fine and are also reachable from the NameNode.

Created 06-06-2018 01:59 PM

Hey @Sindhu and @SUDHIR KUMAR

Did you ever find a solution for this? I have been getting the same errors while running Sqoop jobs and couldn't find a solution. I have been through all the suggestions, such as restarting the cluster and checking "hdfs dfsadmin -report", which shows the DataNodes as available. I have an EMR cluster with 3 EC2 instances as DataNodes and 1 EC2 instance as the master node.

Any help is appreciated.