Getting error while starting hbase process in Ambari

Labels: Apache Ambari, Apache HBase

Created 12-27-2018 01:33 PM

Caused by: java.io.FileNotFoundException: META-INF/native/liborg_apache_hbase_thirdparty_org.apache.hadoop.hbase.shaded.netty_transport_native_epoll_x86_64.so
    at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader.load(NativeLibraryLoader.java:161)
    ... 27 more
    Suppressed: java.lang.UnsatisfiedLinkError: no org_apache_hbase_thirdparty_org.apache.hadoop.hbase.shaded.netty_transport_native_epoll_x86_64 in java.library.path
        at java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)
        at java.lang.Runtime.loadLibrary0(Runtime.java:870)
        at java.lang.System.loadLibrary(System.java:1122)
        at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryUtil.loadLibrary(NativeLibraryUtil.java:38)
        at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader.loadLibrary(NativeLibraryLoader.java:243)
        at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader.load(NativeLibraryLoader.java:124)
        ... 27 more
        Suppressed: java.lang.UnsatisfiedLinkError: no org_apache_hbase_thirdparty_org.apache.hadoop.hbase.shaded.netty_transport_native_epoll_x86_64 in java.library.path
            at java.lang.ClassLoader.loadLibrary(ClassLoader.java:1867)
            at java.lang.Runtime.loadLibrary0(Runtime.java:870)
            at java.lang.System.loadLibrary(System.java:1122)
            at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryUtil.loadLibrary(NativeLibraryUtil.java:38)
            at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
            at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
            at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
            at java.lang.reflect.Method.invoke(Method.java:498)
            at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader$1.run(NativeLibraryLoader.java:263)
            at java.security.AccessController.doPrivileged(Native Method)
            at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader.loadLibraryByHelper(NativeLibraryLoader.java:255)
            at org.apache.hbase.thirdparty.io.netty.util.internal.NativeLibraryLoader.loadLibrary(NativeLibraryLoader.java:233)
            ... 28 more

I am using Ambari 2.7.1, and the following Netty-related JARs are present in the HBase lib directory:

hbase-shaded-netty-2.1.0.jar

netty-all-4.0.23.Final.jar

netty-all-4.0.52.Final.jar

netty-all-4.1.32.Final.jar

netty-buffer-4.1.17.Final.jar

netty-codec-4.1.17.Final.jar

netty-codec-http-4.1.17.Final.jar

netty-common-4.1.17.Final.jar

netty-handler-4.1.17.Final.jar

netty-resolver-4.1.17.Final.jar

netty-transport-4.1.17.Final.jar
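The FileNotFoundException in the trace refers to a native library that the loader expected to find under META-INF/native/ inside a JAR on the classpath. One rough way to see which of the JARs listed above actually bundle a native epoll library is sketched below. The function name `find_native_epoll_jars` is hypothetical, and the check is only a heuristic: it relies on ZIP entry names being stored uncompressed, so it greps the raw bytes instead of listing the archive properly.

```shell
#!/bin/sh
# Print every JAR in a directory whose entry names mention a native epoll library.
# Heuristic only: ZIP entry names are stored uncompressed, so grep can find them
# in the raw bytes (-a treats binary input as text, -q suppresses output).
find_native_epoll_jars() {
    lib_dir="$1"
    for jar in "$lib_dir"/*.jar; do
        # Skip the unexpanded glob when the directory has no JARs.
        [ -f "$jar" ] || continue
        if LC_ALL=C grep -a -q 'META-INF/native/.*epoll' "$jar"; then
            printf '%s\n' "$jar"
        fi
    done
}
```

It could be called as `find_native_epoll_jars /usr/hdp/current/hbase-master/lib`, where that lib path is an assumption based on the usual HDP layout. If the shaded JAR does not show up in the output, the library named in the exception is probably not packaged in it.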

Created on 12-27-2018 01:50 PM - edited 08-17-2019 03:19 PM

Hey @Nihal Shelke,

This looks like a Netty issue to me; see https://github.com/netty/netty/issues/6678 (Netty is a third-party library used by HBase).

Can you try the following workaround?

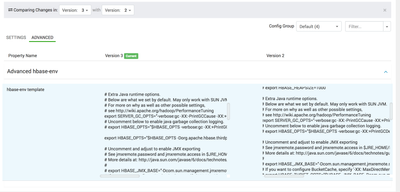

In Ambari, go to Services > HBase > Configs > hbase-env template and find the line that exports HBASE_OPTS.

Add the following JVM startup option to it:

-Dorg.apache.hbase.thirdparty.io.netty.native.workdir=<some working directory>

For example, I used:

export HBASE_OPTS="$HBASE_OPTS -Dorg.apache.hbase.thirdparty.io.netty.native.workdir=/root/asnaik"

Then retry starting HBase from Ambari and share the results.

Please accept this answer if it's helpful.
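Netty's loader copies the native library into the working directory before loading it, so the directory presumably has to exist on every HBase host and be writable by the user that runs HBase. The check below is a sketch under that assumption; `check_native_workdir` is a hypothetical helper, not something HBase or Ambari provide.

```shell
#!/bin/sh
# Verify that a candidate workdir exists and that the current user can
# create files in it. Prints a short status line and returns non-zero on failure.
check_native_workdir() {
    workdir="$1"
    if [ ! -d "$workdir" ]; then
        echo "missing: $workdir"
        return 1
    fi
    # -w: can create files; -x: can enter the directory
    if [ ! -w "$workdir" ] || [ ! -x "$workdir" ]; then
        echo "not writable by $(id -un): $workdir"
        return 1
    fi
    echo "ok: $workdir"
}
```

Running it as the hbase user on the master and on each region server host, with the same path that was put into HBASE_OPTS, should show whether the directory is usable everywhere before restarting from Ambari.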

Created 12-28-2018 05:50 AM

Also, the directory above is only created on the master node, not on the region server node.

Created 12-28-2018 07:57 AM

Hi @Nihal Shelke,

Does setting the custom working directory, as described in my comment above, work for the HBase region server?

I didn't understand your last comment.

You can point the workdir at a directory that already exists on all hosts, such as /root or /users/asnaik.

Please log in and accept the answer if this helped.

Created 12-28-2018 07:59 AM

After adding the above option to the HBase config, I get the error below while starting the HBase master:

Traceback (most recent call last):
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 995, in restart
    self.status(env)
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HBASE/package/scripts/hbase_master.py", line 106, in status
    check_process_status(status_params.hbase_master_pid_file)
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/functions/check_process_status.py", line 43, in check_process_status
    raise ComponentIsNotRunning()
ComponentIsNotRunning

The above exception was the cause of the following exception:

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HBASE/package/scripts/hbase_master.py", line 170, in <module>
    HbaseMaster().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute
    method(env)
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 1012, in restart
    self.post_start(env)
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 424, in post_start
    raise Fail("Pid file {0} doesn't exist after starting of the component.".format(pid_file))
resource_management.core.exceptions.Fail: Pid file /var/run/hbase/hbase-hbase-master.pid doesn't exist after starting of the component.

Created 12-28-2018 08:02 AM

As we can see from the error:

  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 424, in post_start
    raise Fail("Pid file {0} doesn't exist after starting of the component.".format(pid_file))
resource_management.core.exceptions.Fail: Pid file /var/run/hbase/hbase-hbase-master.pid doesn't exist after starting of the component.

Can you check whether you can create an empty file at that path as the "hbase" user, with the ownership and permissions shown below, and then try starting HBase again?

# su - hbase
# ls -l /var/run/hbase/hbase-hbase-master.pid
-rw-r--r--. 1 hbase hadoop 5 Dec 23 23:30 /var/run/hbase/hbase-hbase-master.pid
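A missing PID file can also mean that the master JVM exited shortly after launch, not only that the file could not be written. A rough shell equivalent of the check Ambari performs, assuming the PID file path from the error message, might look like the sketch below; `check_pid_file` is a hypothetical helper and not part of Ambari.

```shell
#!/bin/sh
# Report whether a PID file exists and whether the process it names is alive.
# Returns 0 if running, 1 if the file is missing, 2 if the PID looks stale.
check_pid_file() {
    pid_file="$1"
    if [ ! -f "$pid_file" ]; then
        echo "no pid file: $pid_file"
        return 1
    fi
    pid=$(cat "$pid_file")
    # kill -0 sends no signal; it only tests that the process exists and
    # that the current user is allowed to signal it.
    if kill -0 "$pid" 2>/dev/null; then
        echo "running: $pid"
    else
        echo "stale pid file: $pid"
        return 2
    fi
}
```

For this thread it would be run as the hbase user against /var/run/hbase/hbase-hbase-master.pid right after a start attempt.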

If you still face issues, try starting the HBase master manually with the command below. If it starts successfully, you can then restart it from the Ambari UI and it should work.

# su -l hbase -c "/usr/hdp/current/hbase-master/bin/hbase-daemon.sh start master; sleep 25"

Created 12-28-2018 01:32 PM

Below is the output of the command you mentioned:

hbase@ubuntu21:~$ ls -l /var/run/hbase/hbase-hbase-master.pid
ls: cannot access '/var/run/hbase/hbase-hbase-master.pid': No such file or directory

hbase@ubuntu21:~$ ls -l /var/run/hbase/
total 4
-rw-rw-rw- 1 hbase hadoop 4 Dec 28 16:26 phoenix-hbase-queryserver.pid

I also used the command to start the master manually, but I am still facing the same issue.

Created 12-28-2018 04:23 PM

Hey @Nihal Shelke,

Can you show me the exact change you made based on my previous comment?

Is there any error in the HBase master .out logs? This might be due to a typo in the variable you set.

Does reverting the changes I mentioned make HBase run smoothly?
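To answer the question about the master logs, the .log and .out files can be searched for the native-loading errors from the original trace and for typical JVM startup failures. This is only a sketch: `scan_hbase_log` is a hypothetical helper, and the log location is an assumption that may differ on your install.

```shell
#!/bin/sh
# Print the last 20 lines (with line numbers) of a log file that match
# native-library loading errors or common JVM startup failures.
scan_hbase_log() {
    log_file="$1"
    # -n prefixes each match with its line number; -E enables alternation.
    grep -n -E 'UnsatisfiedLinkError|FileNotFoundException|Unrecognized option|Could not find or load main class' "$log_file" | tail -n 20
}
```

Assuming the usual HDP layout, it would be pointed at the master's files under /var/log/hbase, for example the one ending in .out for the host in question.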

Created 12-31-2018 04:19 AM

@Akhil S Naik

I am trying to use HBase 2.1.1 in the HDP stack because we need that version. Is there another way to change the HBase version in the HDP stack?

Created 12-31-2018 05:58 AM

You mentioned that you are trying to use Apache HBase 2.1.1, which is not yet a tested and certified version for the HDP stack.

Even the latest HDP 3.1 is tested and certified with HBase 2.0.2, as per the release notes: https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.1.0/release-notes/content/comp_versions.html

So it will be best if you stick to the tested and certified version.