Support Questions

Getting "Caused by: java.lang.NoClassDefFoundError: org/apache/spark/SparkConf" after submitting java job in Hue

Created on 03-29-2022 07:36 AM - last edited on 03-29-2022 10:17 AM by cjervis


Hello Community,

I'm running into a problem when I submit a job in the Hue editor. From the Hue home page I navigate to Query > Editor > Java, point to the path of my jar file, and add the class name Hortonworks.SparkTutorial.Main. After I hit run, I get this error:

"Caused by: java.lang.NoClassDefFoundError: org/apache/spark/SparkConf".

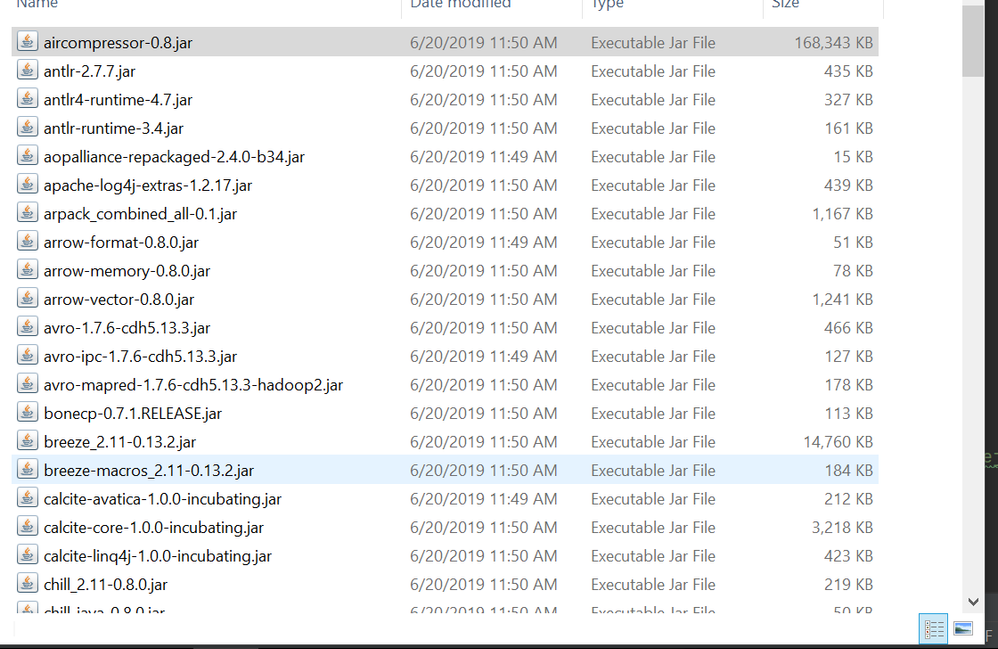

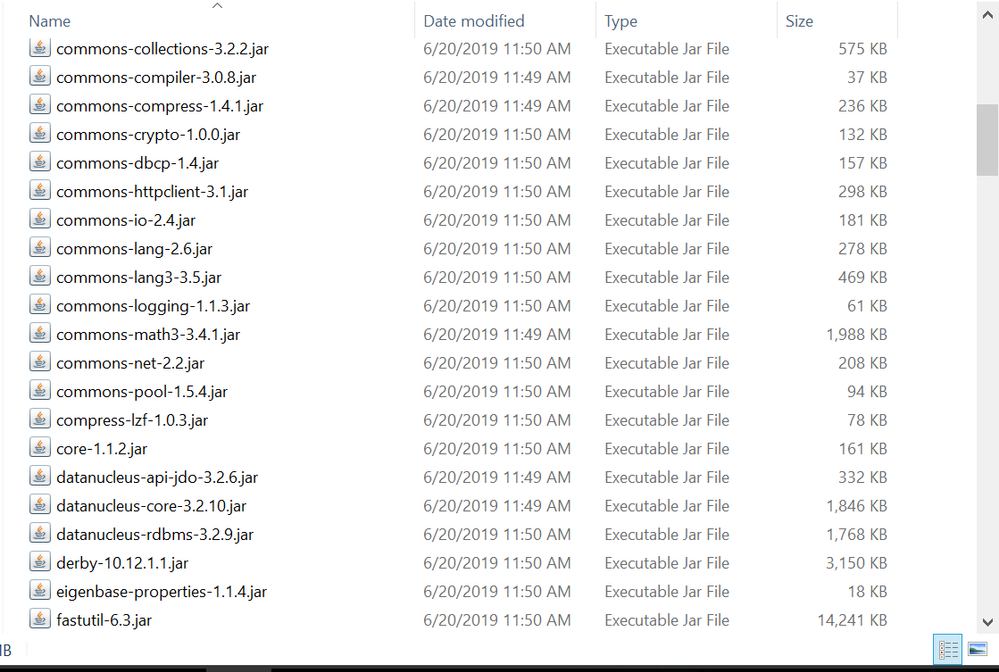

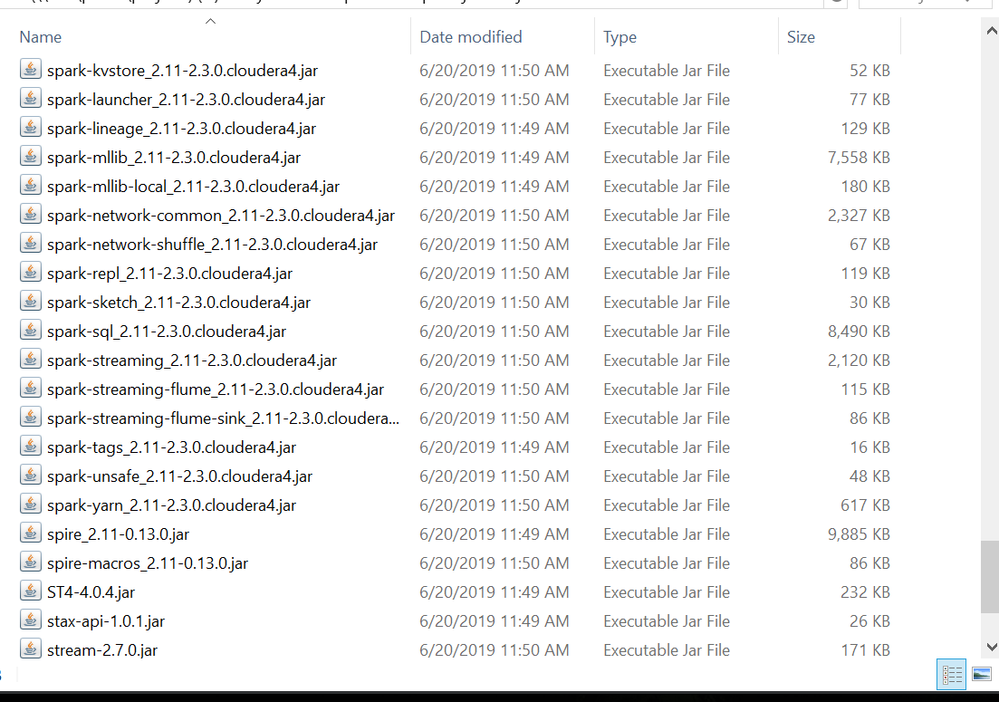

I imagine it has to do with the jar that contains SparkConf not being available. I'm using IntelliJ for this project and have added some jars to it; I've attached screenshots of the jar files to this post. I'm curious whether any of these jars look familiar to anyone, as I may not even be adding the right ones.

Thank you all for taking the time to read my post and for your help.

The full error log is:

    >>> Invoking Main class now >>>
    INFO: loading log4j config file log4j.properties.
    INFO: log4j config file log4j.properties loaded successfully.
    Setting [tez.application.tags] tag: oozie-4908088a838f5d2717d8d8aabe5ccc23
    Setting [spark.yarn.tags] tag: oozie-4908088a838f5d2717d8d8aabe5ccc23
    Fetching child yarn jobs
    tag id : oozie-4908088a838f5d2717d8d8aabe5ccc23
    Child yarn jobs are found -
    Main class : Hortonworks.SparkTutorial.Main
    Arguments :
    <<< Invocation of Main class completed <<<
    Failing Oozie Launcher, Main class [org.apache.oozie.action.hadoop.JavaMain], main() threw exception, java.lang.NoClassDefFoundError: org/apache/spark/SparkConf
    org.apache.oozie.action.hadoop.JavaMainException: java.lang.NoClassDefFoundError: org/apache/spark/SparkConf
        at org.apache.oozie.action.hadoop.JavaMain.run(JavaMain.java:58)
        at org.apache.oozie.action.hadoop.LauncherMain.run(LauncherMain.java:81)
        at org.apache.oozie.action.hadoop.JavaMain.main(JavaMain.java:35)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.oozie.action.hadoop.LauncherMapper.map(LauncherMapper.java:235)
        at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)
        at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:459)
        at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)
        at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)
        at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)
    Caused by: java.lang.NoClassDefFoundError: org/apache/spark/SparkConf
        at Hortonworks.SparkTutorial.Main.main(Main.java:16)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.oozie.action.hadoop.JavaMain.run(JavaMain.java:55)
        ... 15 more
    Caused by: java.lang.ClassNotFoundException: org.apache.spark.SparkConf
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 21 more
    Oozie Launcher failed, finishing Hadoop job gracefully
    Oozie Launcher, uploading action data to HDFS sequence file: hdfs://HSV-ULANZoneB-nameservice1/user/zzmdrakej2/oozie-oozi/0000006-211115170934680-oozie-oozi-W/java-c507--java/action-data.seq
    Successfully reset security manager from org.apache.oozie.action.hadoop.LauncherSecurityManager@894858 to null
    Oozie Launcher ends

Created 03-30-2022 04:30 AM


Hello @Jared,

A "ClassNotFoundException" means that the JVM running the code could not find one of the Java classes the code relies on. It's great that you have added those jars to your IntelliJ development environment, but that does not mean they will be available at runtime.
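To make the runtime side of this concrete, here is a minimal sketch of a probe that reports whether a class is visible to the JVM it runs in. It is only an illustration and not part of the original job: the names `ClasspathCheck` and `isOnClasspath` are hypothetical, and it uses nothing beyond the standard `Class.forName` call.

```java
public class ClasspathCheck {

    /**
     * Returns true if the named class can be located by the class loader
     * that loaded this class, false otherwise.
     */
    public static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // NoClassDefFoundError is a LinkageError; treat both cases as "not available"
            return false;
        }
    }

    public static void main(String[] args) {
        // Default to the class from the error message; another name can be passed as an argument
        String name = args.length > 0 ? args[0] : "org.apache.spark.SparkConf";
        System.out.println(name + " on classpath: " + isOnClasspath(name));
        // Print the classpath the JVM was actually started with, for comparison with the IDE
        System.out.println("java.class.path = " + System.getProperty("java.class.path"));
    }
}
```

Running a class like this in the same way as the failing job would show which classpath the launcher JVM really uses, which may differ considerably from the one configured in IntelliJ.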

One way would be to package all the dependencies in your jar, creating a so-called "fat jar". However, that is not recommended: your application would not benefit from future bug fixes deployed as the cluster is upgraded or patched, and it could fail after upgrades because of class conflicts.

The best way is to set up the runtime environment so that it has the needed classes.

The Hue Java editor actually creates a one-time Oozie workflow with a single Java action in it. However, it does not give you much flexibility to customize the parts of this workflow and its runtime environment, including which other jars are shipped with the code.

Since your code relies on SparkConf, I assume it is actually a Spark-based application. A better option would be to create an Oozie workflow with a Spark action (you can start from Hue > Apps > Scheduler and change the Documents dropdown to Actions). That will set up the full classpath needed for running Spark apps, so you do not need to reference any Spark-related jars, just the jar with your custom code.

Hope this helps.

Best regards

Miklos


Created 04-11-2022 08:28 AM


Sorry for the late reply, and thank you for your help with this. I will try to run it with Oozie instead. So is it not recommended at all to run Spark jobs written in Java from Hue > Query > Editor > Java?

Created 04-07-2022 11:28 PM


@jarededrake Has the reply helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
