HA Cluster Blueprint displays 0 datanodes

Labels: Apache Ambari

Created on 12-01-2017 12:04 PM - edited 08-17-2019 08:29 PM


Hello, I have the following files:

- cluster_configuration.json

{
  "Blueprints": {
    "stack_name": "HDP",
    "stack_version": "2.6"
  },
  "host_groups": [
    {
      "name": "namenode1",
      "cardinality" : "1",
      "components": [
        { "name" : "HST_AGENT" },
        { "name" : "HDFS_CLIENT" },
        { "name" : "ZKFC" },
        { "name" : "ZOOKEEPER_SERVER" },
        { "name" : "HST_SERVER" },
        { "name" : "METRICS_MONITOR" },
        { "name" : "JOURNALNODE" },
        { "name" : "NAMENODE" },
        { "name" : "APP_TIMELINE_SERVER" },
        { "name" : "METRICS_GRAFANA" }
      ]
    },
    {
      "name": "namenode2",
      "cardinality" : "1",
      "components": [
        { "name" : "ACTIVITY_EXPLORER" },
        { "name" : "HST_AGENT" },
        { "name" : "HDFS_CLIENT" },
        { "name" : "ZKFC" },
        { "name" : "ZOOKEEPER_SERVER" },
        { "name" : "HISTORYSERVER" },
        { "name" : "METRICS_MONITOR" },
        { "name" : "JOURNALNODE" },
        { "name" : "NAMENODE" },
        { "name" : "METRICS_COLLECTOR" }
      ]
    },
    {
      "name": "namenode3",
      "cardinality" : "1",
      "components": [
        { "name" : "ACTIVITY_ANALYZER" },
        { "name" : "HST_AGENT" },
        { "name" : "MAPREDUCE2_CLIENT" },
        { "name" : "YARN_CLIENT" },
        { "name" : "HDFS_CLIENT" },
        { "name" : "ZOOKEEPER_SERVER" },
        { "name" : "METRICS_MONITOR" },
        { "name" : "JOURNALNODE" },
        { "name" : "RESOURCEMANAGER" }
      ]
    },
    {
      "name": "hosts_group",
      "cardinality" : "3",
      "components": [
        { "name" : "NODEMANAGER" },
        { "name" : "HST_AGENT" },
        { "name" : "MAPREDUCE2_CLIENT" },
        { "name" : "YARN_CLIENT" },
        { "name" : "HDFS_CLIENT" },
        { "name" : "DATANODE" },
        { "name" : "METRICS_MONITOR" },
        { "name" : "ZOOKEEPER_CLIENT" }
      ]
    }
  ],
  "configurations": [
    {
      "core-site": {
        "properties" : {
          "fs.defaultFS" : "HACluster",
          "ha.zookeeper.quorum": "%HOSTGROUP::namenode1%:2181,%HOSTGROUP::namenode2%:2181,%HOSTGROUP::namenode3%:2181",
          "hadoop.proxyuser.yarn.hosts": "%HOSTGROUP::namenode2%,%HOSTGROUP::namenode3%"
        }
      }
    },
    {
      "hdfs-site": {
        "properties" : {
          "dfs.client.failover.proxy.provider.mycluster" : "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
          "dfs.ha.automatic-failover.enabled" : "true",
          "dfs.ha.fencing.methods" : "shell(/bin/true)",
          "dfs.ha.namenodes.HACluster" : "nn1,nn2",
          "dfs.namenode.http-address" : "%HOSTGROUP::namenode1%:50070",
          "dfs.namenode.http-address.HACluster.nn1" : "%HOSTGROUP::namenode1%:50070",
          "dfs.namenode.http-address.HACluster.nn2" : "%HOSTGROUP::namenode2%:50070",
          "dfs.namenode.https-address" : "%HOSTGROUP::namenode1%:50470",
          "dfs.namenode.https-address.HACluster.nn1" : "%HOSTGROUP::namenode1%:50470",
          "dfs.namenode.https-address.HACluster.nn2" : "%HOSTGROUP::namenode2%:50470",
          "dfs.namenode.rpc-address.HACluster.nn1" : "%HOSTGROUP::namenode1%:8020",
          "dfs.namenode.rpc-address.HACluster.nn2" : "%HOSTGROUP::namenode2%:8020",
          "dfs.namenode.shared.edits.dir" : "qjournal://%HOSTGROUP::namenode1:8485;%HOSTGROUP::namenode2%:8485;%HOSTGROUP::namenode3%:8485/mycluster",
          "dfs.nameservices" : "HACluster"
        }
      }
    },
    {
      "yarn-site": {
        "properties": {
          "yarn.resourcemanager.ha.enabled": "true",
          "yarn.resourcemanager.ha.rm-ids": "rm1,rm2",
          "yarn.resourcemanager.hostname.rm1": "%HOSTGROUP::namenode2%",
          "yarn.resourcemanager.hostname.rm2": "%HOSTGROUP::namenode3%",
          "yarn.resourcemanager.webapp.address.rm1": "%HOSTGROUP::namenode2%:8088",
          "yarn.resourcemanager.webapp.address.rm2": "%HOSTGROUP::namenode3%:8088",
          "yarn.resourcemanager.webapp.https.address.rm1": "%HOSTGROUP::namenode2%:8090",
          "yarn.resourcemanager.webapp.https.address.rm2": "%HOSTGROUP::namenode3%:8090",
          "yarn.resourcemanager.recovery.enabled": "true",
          "yarn.resourcemanager.store.class": "org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore",
          "yarn.resourcemanager.zk-address": "%HOSTGROUP::namenode1%:2181,%HOSTGROUP::namenode2%:2181,%HOSTGROUP::namenode3%:2181",
          "yarn.client.failover-proxy-provider": "org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider",
          "yarn.resourcemanager.cluster-id": "yarn-cluster",
          "yarn.resourcemanager.ha.automatic-failover.zk-base-path": "/yarn-leader-election"
        }
      }
    }
  ]
}

- hdputils-repo.json

{
  "Repositories": {
    "base_url": "http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/ubuntu16",
    "verify_base_url": true
  }
}

- hostmap.json

{
  "blueprint": "HACluster",
  "default_password": "admin",
  "host_groups": [
    {
      "name": "namenode1",
      "hosts": [
        { "fqdn": "namenode1" }
      ]
    },
    {
      "name": "namenode2",
      "hosts": [
        { "fqdn": "namenode2" }
      ]
    },
    {
      "name": "namenode3",
      "hosts": [
        { "fqdn": "namenode3" }
      ]
    },
    {
      "name": "hosts_group",
      "hosts": [
        { "fqdn": "datanode1" },
        { "fqdn": "datanode2" },
        { "fqdn": "datanode3" }
      ]
    }
  ]
}

- repo.json

{
  "Repositories": {
    "base_url": "http://public-repo-1.hortonworks.com/HDP/ubuntu16/2.x/updates/2.6.3.0/",
    "verify_base_url": true
  }
}
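
Before submitting, the blueprint and the host mapping can be cross-checked locally. The following is a minimal Python sketch, not part of Ambari: the function name `check_host_mapping` is hypothetical, and treating `cardinality` as an exact expected host count is a simplifying assumption made only for this check. The sample data is a shortened copy of the files above, with `datanode3` deliberately left out to trigger a message.

```python
def check_host_mapping(blueprint, hostmap):
    """Return a list of problems; an empty list means the two files agree."""
    problems = []
    declared = {g["name"]: g for g in blueprint.get("host_groups", [])}
    mapped = {g["name"]: g for g in hostmap.get("host_groups", [])}

    # Host groups that appear in only one of the two files.
    for name in sorted(declared.keys() - mapped.keys()):
        problems.append(f"host group '{name}' has no hosts in the host mapping")
    for name in sorted(mapped.keys() - declared.keys()):
        problems.append(f"host group '{name}' is not defined in the blueprint")

    # Assumption: a plain-integer cardinality is the expected number of hosts.
    for name in sorted(declared.keys() & mapped.keys()):
        cardinality = str(declared[name].get("cardinality", ""))
        host_count = len(mapped[name].get("hosts", []))
        if cardinality.isdigit() and host_count != int(cardinality):
            problems.append(
                f"host group '{name}' declares cardinality {cardinality} "
                f"but maps {host_count} host(s)"
            )
    return problems


# Shortened sample data; datanode3 is omitted on purpose.
blueprint = {"host_groups": [
    {"name": "namenode1", "cardinality": "1"},
    {"name": "hosts_group", "cardinality": "3"},
]}
hostmap = {"host_groups": [
    {"name": "namenode1", "hosts": [{"fqdn": "namenode1"}]},
    {"name": "hosts_group", "hosts": [{"fqdn": "datanode1"}, {"fqdn": "datanode2"}]},
]}

for problem in check_host_mapping(blueprint, hostmap):
    print(problem)
```

With the real files, `json.load` on `cluster_configuration.json` and `hostmap.json` would supply the two dictionaries.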

Then I launch this blueprint this way:

$ curl -i -H "X-Requested-By: ambari" -X POST -u admin:admin http://ambariserver:8080/api/v1/blueprints/HACluster -d @cluster_configuration.json

$ curl -i -H "X-Requested-By: ambari" -X PUT -u admin:admin http://ambariserver:8080/api/v1/stacks/HDP/versions/2.6/operating_systems/ubuntu16/repositories/HDP-... -d @repo.json

$ curl -i -H "X-Requested-By: ambari" -X PUT -u admin:admin http://ambariserver:8080/api/v1/stacks/HDP/versions/2.6/operating_systems/ubuntu16/repositories/HDP-... -d @hdputils-repo.json

$ curl -i -H "X-Requested-By: ambari" -X POST -u admin:admin http://ambariserver:8080/api/v1/clusters/HACluster -d @hostmap.json
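
For scripting, the two POST calls could be expressed roughly as follows in Python with only the standard library. This is a sketch under assumptions: `build_request` and `send_all` are hypothetical helper names, the host, port and `admin:admin` credentials are the ones used in the post, and the two repository PUT calls are left out because their URLs are truncated above.

```python
import base64
import urllib.request

AMBARI = "http://ambariserver:8080/api/v1"  # host and port as used in the post


def build_request(path, body, method="POST", user="admin", password="admin"):
    """Build (but do not send) an Ambari REST request carrying a JSON body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        AMBARI + path,
        data=body,
        method=method,
        headers={
            "X-Requested-By": "ambari",          # same header the curl calls send
            "Authorization": f"Basic {token}",   # equivalent of curl's -u option
        },
    )


# The blueprint is registered first, then the cluster that references it.
STEPS = [
    ("/blueprints/HACluster", "cluster_configuration.json"),
    ("/clusters/HACluster", "hostmap.json"),
]


def send_all():
    """Send each step in order, printing the HTTP status of every response."""
    for path, filename in STEPS:
        with open(filename, "rb") as f:
            request = build_request(path, f.read())
        with urllib.request.urlopen(request) as response:
            print(path, response.status)
```

Calling `send_all()` performs the requests; nothing is sent when the module is merely imported.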

I am trying to create a 3 namenode and 3 datanode structure with HA HDFS.

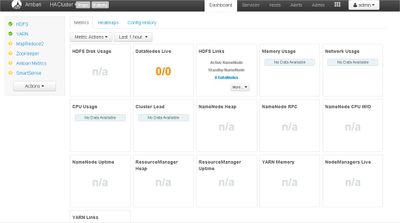

When I launch the blueprint, no errors are displayed, but the hosts are not registered, as you can see in the attached screenshots.

I get the following error in /var/log/ambari-server/ambari-server.log:

java.lang.IllegalArgumentException: Unable to match blueprint host group token to a host group: namenode1:8485;

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.getHostStrings(BlueprintConfigurationProcessor.java:1248)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.access$700(BlueprintConfigurationProcessor.java:63)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor$MultipleHostTopologyUpdater.updateForClusterCreate(BlueprintConfigurationProcessor.java:1870)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.doUpdateForClusterCreate(BlueprintConfigurationProcessor.java:355)

at org.apache.ambari.server.topology.ClusterConfigurationRequest.process(ClusterConfigurationRequest.java:152)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:79)

at org.apache.ambari.server.security.authorization.internal.InternalAuthenticationInterceptor.invoke(InternalAuthenticationInterceptor.java:45)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:45)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

01 Dec 2017 12:51:15,602 INFO [ambari-client-thread-32] TopologyManager:963 - TopologyManager.processAcceptedHostOffer: queue tasks for host = namenode1 which responded ACCEPTED

01 Dec 2017 12:51:15,603 INFO [ambari-client-thread-32] TopologyManager:988 - TopologyManager.processAcceptedHostOffer: queueing tasks for host = namenode1

01 Dec 2017 12:51:15,603 INFO [ambari-client-thread-32] TopologyManager:863 - TopologyManager.processRequest: host name = datanode1 is mapped to LogicalRequest ID = 1 and will be removed from the reserved hosts.

01 Dec 2017 12:51:15,603 INFO [ambari-client-thread-32] TopologyManager:876 - TopologyManager.processRequest: offering host name = datanode1 to LogicalRequest ID = 1

01 Dec 2017 12:51:15,608 INFO [ambari-client-thread-32] LogicalRequest:101 - LogicalRequest.offer: attempting to match a request to a request for a reserved host to hostname = datanode1

01 Dec 2017 12:51:15,608 INFO [ambari-client-thread-32] LogicalRequest:110 - LogicalRequest.offer: request mapping ACCEPTED for host = datanode1

01 Dec 2017 12:51:15,608 INFO [ambari-client-thread-32] LogicalRequest:113 - LogicalRequest.offer returning response, reservedHost list size = 0

01 Dec 2017 12:51:15,617 INFO [ambari-client-thread-32] TopologyManager:886 - TopologyManager.processRequest: host name = datanode1 was ACCEPTED by LogicalRequest ID = 1 , host has been removed from available hosts.

01 Dec 2017 12:51:15,618 INFO [ambari-client-thread-32] ClusterTopologyImpl:158 - ClusterTopologyImpl.addHostTopology: added host = datanode1 to host group = hosts_group

01 Dec 2017 12:51:15,634 INFO [ambari-client-thread-32] TopologyManager:963 - TopologyManager.processAcceptedHostOffer: queue tasks for host = datanode1 which responded ACCEPTED

01 Dec 2017 12:51:15,638 INFO [ambari-client-thread-32] TopologyManager:988 - TopologyManager.processAcceptedHostOffer: queueing tasks for host = datanode1

01 Dec 2017 12:51:15,638 INFO [ambari-client-thread-32] TopologyManager:904 - TopologyManager.processRequest: not all required hosts have been matched, so adding LogicalRequest ID = 1 to outstanding requests

01 Dec 2017 12:51:15,640 INFO [ambari-client-thread-32] AmbariManagementControllerImpl:1624 - Received a updateCluster request, clusterId=null, clusterName=HACluster, securityType=null, request={ clusterName=HACluster, clusterId=null, provisioningState=INSTALLED, securityType=null, stackVersion=HDP-2.6, desired_scv=null, hosts=[] }

01 Dec 2017 12:51:16,594 WARN [pool-20-thread-1] BlueprintConfigurationProcessor:1546 - The property 'dfs.namenode.secondary.http-address' is associated with the component 'SECONDARY_NAMENODE' which isn't mapped to any host group. This may affect configuration topology resolution.

01 Dec 2017 12:51:16,598 ERROR [pool-20-thread-1] ConfigureClusterTask:116 - Could not determine required host groups

java.lang.IllegalArgumentException: Unable to match blueprint host group token to a host group: namenode1:8485;

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor$MultipleHostTopologyUpdater.getRequiredHostGroups(BlueprintConfigurationProcessor.java:2037)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.getRequiredHostGroups(BlueprintConfigurationProcessor.java:303)

at org.apache.ambari.server.topology.ClusterConfigurationRequest.getRequiredHostGroups(ClusterConfigurationRequest.java:126)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.getTopologyRequiredHostGroups(ConfigureClusterTask.java:113)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:71)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41.CGLIB$call$1(<generated>)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41$$FastClassByGuice$$a8ae8ea0.invoke(<generated>)

at com.google.inject.internal.cglib.proxy.$MethodProxy.invokeSuper(MethodProxy.java:228)

at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72)

at org.apache.ambari.server.security.authorization.internal.InternalAuthenticationInterceptor.invoke(InternalAuthenticationInterceptor.java:45)

at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72)

at com.google.inject.internal.InterceptorStackCallback.intercept(InterceptorStackCallback.java:52)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41.call(<generated>)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:45)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

01 Dec 2017 12:51:16,598 INFO [pool-20-thread-1] ConfigureClusterTask:78 - All required host groups are complete, cluster configuration can now begin

01 Dec 2017 12:51:16,598 INFO [pool-20-thread-1] BlueprintConfigurationProcessor:579 - Config recommendation strategy being used is NEVER_APPLY)

01 Dec 2017 12:51:16,598 INFO [pool-20-thread-1] BlueprintConfigurationProcessor:598 - No recommended configurations are applied. (strategy: NEVER_APPLY)

01 Dec 2017 12:51:16,829 INFO [pool-4-thread-1] AsyncCallableService:92 - Task ConfigureClusterTask exception during execution

java.lang.IllegalArgumentException: Unable to match blueprint host group token to a host group: namenode1:8485;

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.getHostStrings(BlueprintConfigurationProcessor.java:1248)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.access$700(BlueprintConfigurationProcessor.java:63)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor$MultipleHostTopologyUpdater.updateForClusterCreate(BlueprintConfigurationProcessor.java:1870)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.doUpdateForClusterCreate(BlueprintConfigurationProcessor.java:355)

at org.apache.ambari.server.topology.ClusterConfigurationRequest.process(ClusterConfigurationRequest.java:152)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:79)

at org.apache.ambari.server.security.authorization.internal.InternalAuthenticationInterceptor.invoke(InternalAuthenticationInterceptor.java:45)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:45)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

01 Dec 2017 12:51:17,845 WARN [pool-20-thread-1] BlueprintConfigurationProcessor:1546 - The property 'dfs.namenode.secondary.http-address' is associated with the component 'SECONDARY_NAMENODE' which isn't mapped to any host group. This may affect configuration topology resolution.

01 Dec 2017 12:51:17,852 ERROR [pool-20-thread-1] ConfigureClusterTask:116 - Could not determine required host groups

java.lang.IllegalArgumentException: Unable to match blueprint host group token to a host group: namenode1:8485;

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor$MultipleHostTopologyUpdater.getRequiredHostGroups(BlueprintConfigurationProcessor.java:2037)

at org.apache.ambari.server.controller.internal.BlueprintConfigurationProcessor.getRequiredHostGroups(BlueprintConfigurationProcessor.java:303)

at org.apache.ambari.server.topology.ClusterConfigurationRequest.getRequiredHostGroups(ClusterConfigurationRequest.java:126)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.getTopologyRequiredHostGroups(ConfigureClusterTask.java:113)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:71)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41.CGLIB$call$1(<generated>)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41$$FastClassByGuice$$a8ae8ea0.invoke(<generated>)

at com.google.inject.internal.cglib.proxy.$MethodProxy.invokeSuper(MethodProxy.java:228)

at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72)

at org.apache.ambari.server.security.authorization.internal.InternalAuthenticationInterceptor.invoke(InternalAuthenticationInterceptor.java:45)

at com.google.inject.internal.InterceptorStackCallback$InterceptedMethodInvocation.proceed(InterceptorStackCallback.java:72)

at com.google.inject.internal.InterceptorStackCallback.intercept(InterceptorStackCallback.java:52)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask$$EnhancerByGuice$$3479de41.call(<generated>)

at org.apache.ambari.server.topology.tasks.ConfigureClusterTask.call(ConfigureClusterTask.java:45)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

01 Dec 2017 12:51:17,860 INFO [pool-20-thread-1] ConfigureClusterTask:78 - All required host groups are complete, cluster configuration can now begin

01 Dec 2017 12:51:17,860 INFO [pool-20-thread-1] BlueprintConfigurationProcessor:579 - Config recommendation strategy being used is NEVER_APPLY)

01 Dec 2017 12:51:17,860 INFO [pool-20-thread-1] BlueprintConfigurationProcessor:598 - No recommended configurations are applied. (strategy: NEVER_APPLY)

01 Dec 2017 12:51:18,000 INFO [pool-4-thread-1] AsyncCallableService:92 - Task ConfigureClusterTask exception during execution

What am I missing? How can I register the hosts? Can someone please help me? Thanks.

Created 12-01-2017 12:18 PM


Edit this line in cluster_configuration.json. The closing % is missing after %HOSTGROUP::namenode1:

"dfs.namenode.shared.edits.dir" : "qjournal://%HOSTGROUP::namenode1:8485;%HOSTGROUP::namenode2%:8485;%HOSTGROUP::namenode3%:8485/mycluster",

to

"dfs.namenode.shared.edits.dir" : "qjournal://%HOSTGROUP::namenode1%:8485;%HOSTGROUP::namenode2%:8485;%HOSTGROUP::namenode3%:8485/mycluster",
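
A check like this can be automated before submitting a blueprint. The sketch below is illustrative only: `unmatched_tokens` is a hypothetical helper, and the regular expression is an assumption (a non-greedy match from `%HOSTGROUP::` to the next `%`), chosen because it reproduces the token reported in the log, `namenode1:8485;`. It is not taken from the Ambari source.

```python
import re

# Assumed pattern: everything between "%HOSTGROUP::" and the next "%".
TOKEN = re.compile(r"%HOSTGROUP::(\S+?)%")


def unmatched_tokens(blueprint):
    """Yield (config_type, property, token_name) for tokens naming no host group."""
    groups = {g["name"] for g in blueprint.get("host_groups", [])}
    for config in blueprint.get("configurations", []):
        for config_type, section in config.items():
            for prop, value in section.get("properties", {}).items():
                for name in TOKEN.findall(str(value)):
                    if name not in groups:
                        yield config_type, prop, name


# Shortened copy of the blueprint from the question, with the original typo.
blueprint = {
    "host_groups": [{"name": "namenode1"}, {"name": "namenode2"}, {"name": "namenode3"}],
    "configurations": [{"hdfs-site": {"properties": {
        "dfs.namenode.shared.edits.dir":
            "qjournal://%HOSTGROUP::namenode1:8485;%HOSTGROUP::namenode2%:8485;"
            "%HOSTGROUP::namenode3%:8485/mycluster",
    }}}],
}

for config_type, prop, name in unmatched_tokens(blueprint):
    print(f"{config_type}/{prop}: no host group named '{name}'")
# prints: hdfs-site/dfs.namenode.shared.edits.dir: no host group named 'namenode1:8485;'
```

Because the first token never closes, the match runs on to the `%` that opens the `namenode2` token, which is why the reported name contains `:8485;`. With the corrected line the function yields nothing.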

Thanks,

Aditya


Created 12-01-2017 12:34 PM


Thanks @Aditya Sirna! It worked! I didn't notice that small detail. Thanks a lot!