Support Questions

- Cloudera Community

- Support

- Support Questions

- HAWQ to HIVE data type mapping

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

HAWQ to HIVE data type mapping

- Labels:

-

Apache Hive

Created 04-19-2016 07:41 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm putting together a dataType mapping table and I'm looking for suggestions specifically around "interval", "bit", "dates".

1. HAWQ -> Hive, Interval

2. HAWQ -> Hive, bit. I see that boolean doesn't convert, is the only option TinyInt?

3. HAWQ -> Hive, date. Best practice String or Date. I know Hive can read a String in Date format dynamically.

4. HAWQ -> Hive, Timestamp as String or Timestamp?

5. Does sqoop cover HAWQ?

| HAWQ | HIVE | Suggestion |

| bigint | bigint | Bigint |

| integer | int | Int |

| character varying(20) | varchar(20) | Varchar(20) |

| timestamp without time zone | timestamp | Timestamp |

| numeric | int | Int |

| timestamp without time zone DEFAULT now() | timestamp | Timestamp |

| character varying | int | Varchar, string |

| text | string | String |

| double precision | double | Double if < 9-15 precision, else Decimal(0,0) |

| text[] | array<string> | Array<string> |

| boolean | boolean | Boolean |

| date | date | Date |

| character varying[] | array<string> | array<string> |

| timestamp with time zone | timestamp | Timestamp |

| interval | String | Int |

| bit(1) | TinyInt | |

| Char | Char | Char : will hold whitespace but DB engine will ignore in case of “USA”== “USA ” |

Created on 05-16-2016 01:09 PM - edited 08-19-2019 04:51 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Results from using sqoop to move data from HAWQ to HIVE. @Artem Ervits and @cstanca

| HAWQ | Hive | Result |

| int | int | worked |

| text | string | worked |

| date | string | write=string, onRead date operations work |

| timestamp | string | write=string, onRead ts operations work |

| bit | boolean | conversion does not work |

| decimal | double | mostly works, precision loss > 9 |

| double precision | double | works |

| real | double | works |

| interval | Breaks! | sqoop mapping error |

| bit varying | Breaks! | sqoop mapping error |

| time | string | write=string, onRead time operations work |

| char | string | write=string, onRead you need wildcard expression, recommend trimming |

| char varying | string | write=string, onRead holds whitespace, recommend trimming |

| varchar | string | works |

| boolean | boolean | works |

| numeric | double | works |

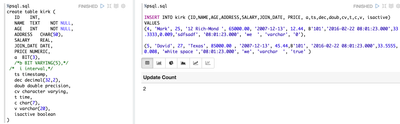

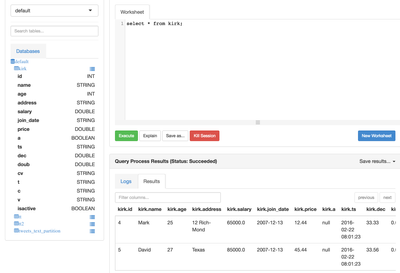

%sh sqoop import --username zeppelin --password zeppelin --connect jdbc:postgresql://jdbcurl --query 'SELECT id,name,join_date,age,a,b,i FROM kirk WHERE $CONDITIONS' -m 1 --target-dir /user/zeppelin/kirk/t6 --map-column-java a=String,i=String,b=String

-- select * select * from kirk ; -- int check between inclusive select age from kirk where age between 25 and 27; -- decimal check select dec from kirk where dec > 33.32; -- string like and wildcard select address from kirk where address like '%Rich%'; -- date is a string but operates like date select join_date from kirk where join_date between '2007-12-13' and '2007-12-15'; -- timestamp, works string on write but operates like TS select ts from kirk where ts > '2016-02-22 08:01:22' -- BIT NOT CORRECT select a from kirk where a =false or a = 1 -- character varying, without white space matches select cv from kirk where cv = 'sdfsadf'; -- character varying, with white space select cv from kirk where cv = 'white space'; -- not matching select cv from kirk where cv = 'white space '; -- matching -- character, doesn't match unless wildcard select c from kirk where c like 'we%'; -- boolean, both true/false and 1/0 are converted properly select id, isactive from kirk where isactive = true or isactive = 0

Created 04-19-2016 09:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I believe you can use postgres driver and Sqoop from HAWQ using standard postgres connection string.

Created 04-21-2016 04:49 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Kirk Haslbeck

Couple observations:

- numeric in HAWQ is not equivalent with int in Hive. The equivalent is decimal or double, even float.

- varying varchar or varying char have an alias, e.g. varchar(x) or char(x), either way is fine

Most common way to load data to HAWQ and with the best throughput is using gpload utility. Not heard of a sqoop option, at least was not available about one year ago. gpload would work best with delimited flat files.

Created on 05-16-2016 01:09 PM - edited 08-19-2019 04:51 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Results from using sqoop to move data from HAWQ to HIVE. @Artem Ervits and @cstanca

| HAWQ | Hive | Result |

| int | int | worked |

| text | string | worked |

| date | string | write=string, onRead date operations work |

| timestamp | string | write=string, onRead ts operations work |

| bit | boolean | conversion does not work |

| decimal | double | mostly works, precision loss > 9 |

| double precision | double | works |

| real | double | works |

| interval | Breaks! | sqoop mapping error |

| bit varying | Breaks! | sqoop mapping error |

| time | string | write=string, onRead time operations work |

| char | string | write=string, onRead you need wildcard expression, recommend trimming |

| char varying | string | write=string, onRead holds whitespace, recommend trimming |

| varchar | string | works |

| boolean | boolean | works |

| numeric | double | works |

%sh sqoop import --username zeppelin --password zeppelin --connect jdbc:postgresql://jdbcurl --query 'SELECT id,name,join_date,age,a,b,i FROM kirk WHERE $CONDITIONS' -m 1 --target-dir /user/zeppelin/kirk/t6 --map-column-java a=String,i=String,b=String

-- select * select * from kirk ; -- int check between inclusive select age from kirk where age between 25 and 27; -- decimal check select dec from kirk where dec > 33.32; -- string like and wildcard select address from kirk where address like '%Rich%'; -- date is a string but operates like date select join_date from kirk where join_date between '2007-12-13' and '2007-12-15'; -- timestamp, works string on write but operates like TS select ts from kirk where ts > '2016-02-22 08:01:22' -- BIT NOT CORRECT select a from kirk where a =false or a = 1 -- character varying, without white space matches select cv from kirk where cv = 'sdfsadf'; -- character varying, with white space select cv from kirk where cv = 'white space'; -- not matching select cv from kirk where cv = 'white space '; -- matching -- character, doesn't match unless wildcard select c from kirk where c like 'we%'; -- boolean, both true/false and 1/0 are converted properly select id, isactive from kirk where isactive = true or isactive = 0

Created 05-16-2016 01:44 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interval data type is not supported in Hive, yet. See https://issues.apache.org/jira/browse/HIVE-5021. Until HIVE-5021 feature is added, I would use two BigInt fields in Hive target table: startInterval, endInterval. Queries using these two fields in WHERE clauses would run better, being more appropriate for indexing and fast scan.

For bit[n] in HAWQ, I would use a char, varchar, or string data type in Hive, depends on how big the string needs to be.